OpenAI has released a 34-page guide on best practices for building Agents.

OpenAI quietly released another document yesterday: a 34-page official guide titled *A Practical Guide to Building Agents*. (Is this meant to compete with Google’s Agents white paper?)

The content is comprehensive and arguably offers more in-depth insights than Google’s Agents white paper. It defines what an Agent is and gives specific, practical advice on everything from model selection, tool design, and instruction writing to complex orchestration patterns and safety guardrails.

What on earth is an AI Agent?

OpenAI clearly defines the core characteristics of an Agent. An Agent is not merely a chatbot or a simple LLM call; the key is its ability to execute workflows independently. An Agent acts on behalf of users and, to a certain extent, independently completes a series of steps to achieve a goal, whether that is ordering food, handling a customer service request, or submitting a code change.

The Agent’s decision-making core is an LLM. It uses the model to manage workflow execution, make judgments, recognize when a task is complete, and proactively correct its own actions when necessary.

At the same time, the Agent needs to interact with the external world through tools. It must be able to access and dynamically select appropriate tools (such as APIs, function calls, or even simulated UI operations) to obtain information or perform specific actions.

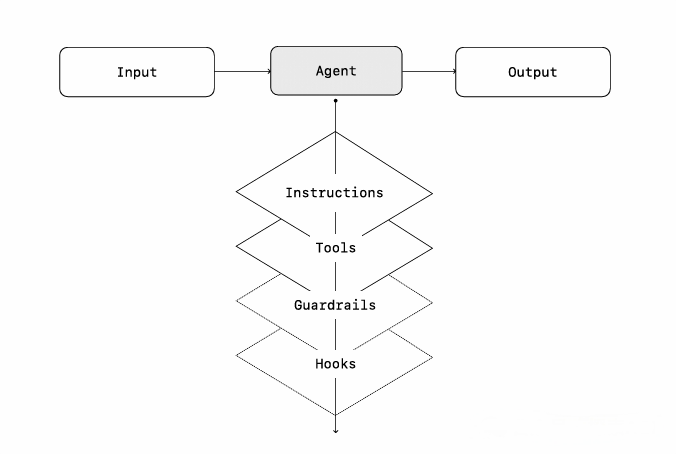

Simple summary: Agent = LLM (brain) + tools (hands and feet) + instructions (code of conduct) + autonomous workflow execution. In OpenAI’s view, LLM applications that only process information without controlling the workflow, such as simple chatbots or sentiment classifiers, are not Agents.

When should an Agent be built?

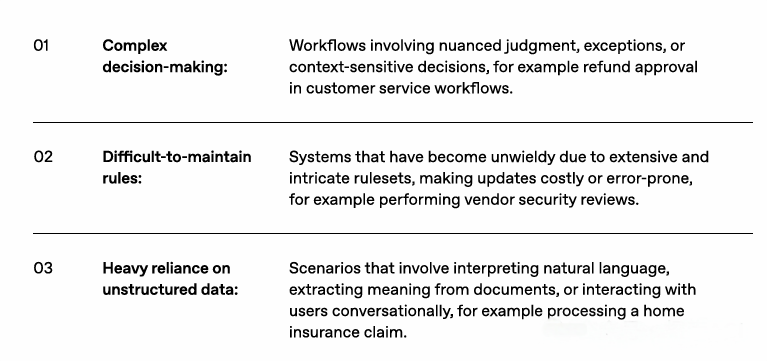

Not every automation scenario is a natural fit for an Agent. Prioritize complex workflows that traditional automation methods struggle to handle well.

This is especially true when a workflow involves complex decision-making: nuanced judgment, many exceptions, or heavy reliance on context (refund approval in customer service is a typical example).

An Agent can also be the better choice for systems whose rules have become extremely complex and hard to maintain, such as supplier security reviews governed by a large and constantly changing rule set.

Agents are also highly valuable when a process relies heavily on unstructured data: interpreting natural language, extracting key information from documents, or holding multi-turn conversations with users (for example, handling a complex home insurance claim).

If your scenario does not meet the above points, then a well-designed deterministic solution may be sufficient, and there is no need to force the use of an Agent.

The three design foundations of an Agent

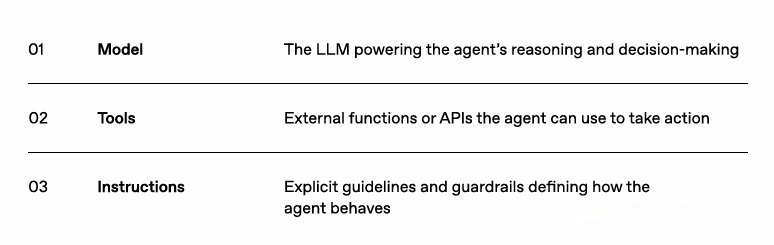

Every Agent, simple or complex, rests on three indispensable foundations:

Model

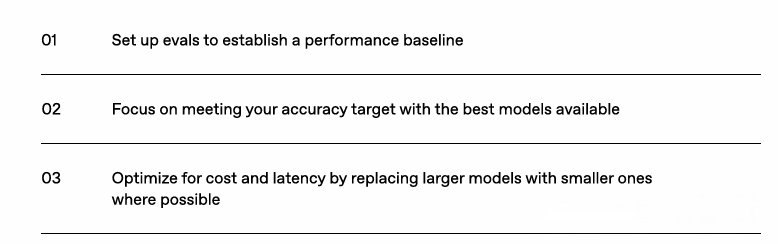

Selecting the appropriate model is the first step, and OpenAI offers a pragmatic strategy: build your Agent prototype with the most capable model available (e.g., GPT-4o) and set up evaluations. This establishes a reliable performance baseline, showing the best the workflow can achieve.

Next, attempt to replace specific steps in the workflow with smaller, faster, and lower-cost models (such as GPT-4o mini or o3-mini/o4-mini). Evaluate whether the modified system still meets business requirements while maintaining core capabilities. This allows gradual optimization of costs and response speed without sacrificing critical functionality. The key lies in finding the optimal balance between task complexity, latency, and cost.
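To make the swap-and-evaluate step concrete, here is a minimal Python sketch. It assumes you already have an eval that scores a model on your task (`evaluate` below is a hypothetical function you supply); the model names, costs, and scores are placeholders, not real models, pricing, or measurements. It keeps the cheapest model whose score stays within a tolerance of the baseline set by the most capable model, and you would typically run it separately for each workflow step.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Candidate:
    name: str             # placeholder model identifier
    relative_cost: float  # any consistent cost unit, e.g. per 1K calls


def pick_cheapest_model(
    candidates: Sequence[Candidate],
    evaluate: Callable[[str], float],
    baseline: str,
    tolerance: float = 0.02,
) -> str:
    """Return the cheapest candidate whose eval score is within `tolerance`
    of the baseline (most capable) model; fall back to the baseline."""
    target = evaluate(baseline) - tolerance
    acceptable = [c for c in candidates if evaluate(c.name) >= target]
    if not acceptable:
        return baseline
    return min(acceptable, key=lambda c: c.relative_cost).name


# Placeholder scores for illustration only, not real measurements.
scores = {"large-model": 0.92, "medium-model": 0.91, "small-model": 0.80}
candidates = [
    Candidate("large-model", 10.0),
    Candidate("medium-model", 3.0),
    Candidate("small-model", 1.0),
]
choice = pick_cheapest_model(candidates, scores.__getitem__, "large-model")
# choice == "medium-model"
```

The tolerance encodes the business decision of how much quality you are willing to trade for cost and latency; setting it to zero keeps only models that match the baseline.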

Tools

Tools serve as the bridge between Agents and the external world, greatly expanding their capabilities. Tools can take various forms, such as APIs, custom functions, or even so-called “computer-use models” (which can be understood as more intelligent UI automation) that operate application interfaces directly when legacy systems provide no API.

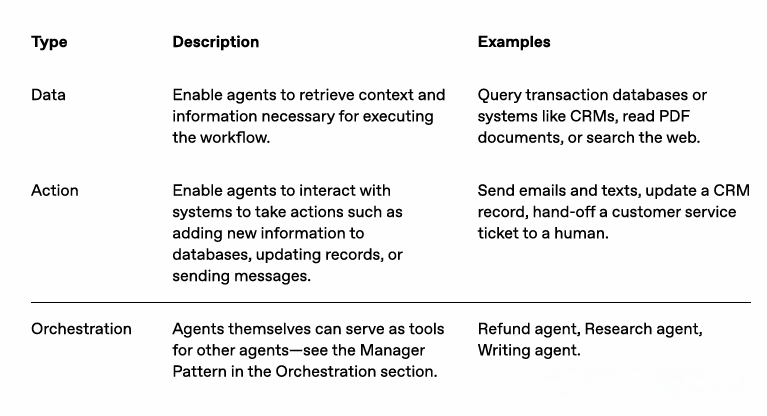

The guide categorizes tools into three main types:

- Data Tools: These are used to acquire the information and context needed to perform tasks, such as querying databases, reading content from PDF documents, or conducting web searches.

- Action Tools: These are used to perform specific operations in external systems, thereby changing their state. Examples include sending emails, updating customer records in a CRM system, or submitting a new order.

- Orchestration Tools: An Agent can itself be wrapped as a tool and invoked by another Agent. This is the basis of the Manager Mode discussed later.

When designing tools, OpenAI emphasizes the principles of standardized definitions, clear documentation, thorough testing, and reusability. This not only increases the likelihood of the tools being discovered and understood but also simplifies version management and avoids redundant development of similar features within the team.
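One way to apply these principles is a shared tool catalog in which every tool has a single, standardized definition (name, description, parameters, category, version) that any Agent can reuse. The sketch below is plain Python for illustration only: `ToolSpec`, `ToolRegistry`, and the stub tool bodies are hypothetical and are not the Agents SDK’s API (the SDK provides its own `function_tool` decorator).

```python
from dataclasses import dataclass
from enum import Enum
from typing import Any, Callable, Dict, List


class ToolKind(Enum):
    DATA = "data"                    # retrieves information or context
    ACTION = "action"                # changes state in an external system
    ORCHESTRATION = "orchestration"  # wraps another Agent as a tool


@dataclass(frozen=True)
class ToolSpec:
    name: str
    description: str            # what the model reads when choosing a tool
    kind: ToolKind
    parameters: Dict[str, str]  # parameter name -> type (JSON-Schema-like)
    fn: Callable[..., Any]
    version: str = "1.0"


class ToolRegistry:
    """A shared catalog: one definition per tool, reusable by many Agents."""

    def __init__(self) -> None:
        self._tools: Dict[str, ToolSpec] = {}

    def register(self, spec: ToolSpec) -> None:
        # Reject duplicates and undocumented tools up front.
        if spec.name in self._tools:
            raise ValueError(f"duplicate tool name: {spec.name}")
        if not spec.description.strip():
            raise ValueError(f"tool {spec.name!r} needs a description")
        self._tools[spec.name] = spec

    def get(self, name: str) -> ToolSpec:
        return self._tools[name]

    def names_by_kind(self, kind: ToolKind) -> List[str]:
        return [t.name for t in self._tools.values() if t.kind is kind]


registry = ToolRegistry()
registry.register(ToolSpec(
    name="get_account_details",
    description="Look up a customer's account details by account ID.",
    kind=ToolKind.DATA,
    parameters={"account_id": "string"},
    fn=lambda account_id: {"account_id": account_id, "status": "active"},  # stub
))
registry.register(ToolSpec(
    name="send_email",
    description="Send an email to a customer.",
    kind=ToolKind.ACTION,
    parameters={"to": "string", "body": "string"},
    fn=lambda to, body: f"queued email to {to}",  # stub, sends nothing
))
```

Tagging each tool with its kind also pays off later: action tools are natural candidates for stricter guardrails than read-only data tools.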

Instructions

High-quality instructions are crucial to an Agent’s performance, even more so than for ordinary LLM applications. Clear, explicit instructions reduce ambiguity and improve the Agent’s decisions, so the whole workflow runs more smoothly and with fewer errors.

How to write good instructions? OpenAI provides some best practices:

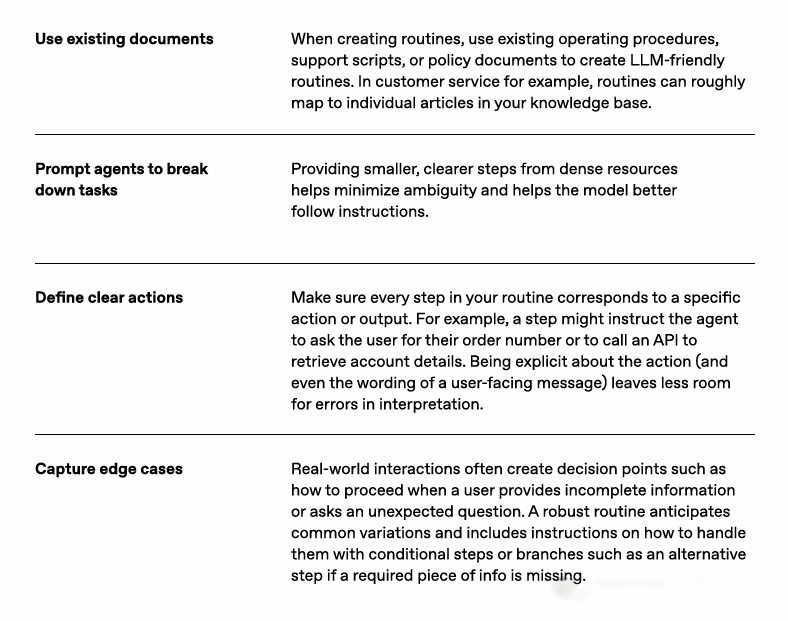

- Make good use of existing documents: Use your existing standard operating procedures (SOPs), customer support scripts, or relevant policy documents as the basis for writing LLM instructions.

- Break down complex tasks: Divide complex and lengthy tasks into a series of smaller and clearer steps. This helps the model better understand and follow them.

- Specify concrete actions: Ensure that each step in the instruction clearly corresponds to a specific action or output. For example, explicitly instruct the Agent to “ask the user for their order number” or “call the get_account_details API to retrieve account details”. You can even specify the exact wording the Agent should use when responding to the user.

- Thoroughly consider edge cases: Real-world workflows are full of various unexpected situations. Anticipate scenarios where users may provide incomplete information or ask unforeseen questions, and include corresponding handling logic in the instructions, such as designing conditional branches or alternative steps.

OpenAI also shares a “lazy” tip: you can use advanced models such as o1 or o3-mini to automatically convert your existing documents into structured Agent instructions. The guide provides a ready-to-use Prompt for this:

You are an expert in writing instructions for an LLM agent. Convert the following help center document into a clear set of instructions, written in a numbered list. The document will be a policy followed by an LLM. Ensure that there is no ambiguity, and that the instructions are written as directions for an agent. The help center document to convert is the following {{help_center_doc}}

Orchestration of Agents

With the three foundational components (model, tools, and instructions) in place, the next step is to organize them so the Agent can execute complex workflows smoothly. This is the problem orchestration solves.

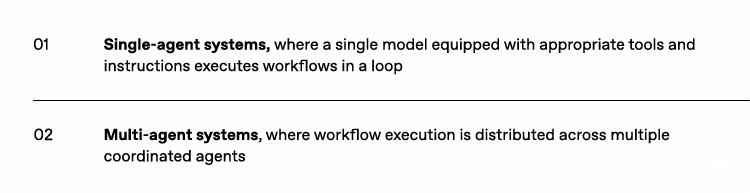

OpenAI stresses that an incremental approach is more likely to succeed than building a large, complex system from scratch. Start with a relatively simple single-agent system and evolve toward multiple collaborating Agents only as actual needs demand.

Single-Agent Systems

This is the most basic pattern. Its core mechanism can be understood as a “Run Loop”: on each iteration, the Agent calls the LLM to reason and decide, uses the selected tools to interact with the external environment, and then starts the next iteration with the new information.

The loop continues until an exit condition is met. Common exit conditions include a tool call, a specific structured output, an error, or reaching a maximum number of turns. In OpenAI’s Agents SDK, for example, a run ends when the Agent invokes a tool designated as the “final output”, or when the LLM returns a response directly to the user without calling any tool.
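The run loop can be sketched in a few lines of plain Python. This illustrates the pattern and is not the Agents SDK’s implementation: `ModelReply`, the `llm` callable (a scripted stand-in for a real model call), the `final_output` tool name, and `max_turns` are all assumptions. The loop exits when the model answers without a tool call, when it calls the designated final-output tool, or, as a safety net, when the turn limit is reached.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional

FINAL_OUTPUT_TOOL = "final_output"  # hypothetical tool marked as the final output


@dataclass
class ModelReply:
    content: Optional[str] = None    # text intended for the user
    tool_name: Optional[str] = None  # tool the model chose to call, if any
    tool_args: Optional[Dict[str, Any]] = None


class MaxTurnsExceeded(RuntimeError):
    pass


def run_agent(
    llm: Callable[[List[Dict[str, Any]]], ModelReply],
    tools: Dict[str, Callable[..., Any]],
    user_input: str,
    max_turns: int = 10,
) -> str:
    messages: List[Dict[str, Any]] = [{"role": "user", "content": user_input}]
    for _ in range(max_turns):
        reply = llm(messages)                    # 1. think and decide
        if reply.tool_name is None:
            return reply.content or ""           # exit: direct answer
        args = reply.tool_args or {}
        if reply.tool_name == FINAL_OUTPUT_TOOL:
            return str(args.get("answer", ""))   # exit: final-output tool
        result = tools[reply.tool_name](**args)  # 2. act through a tool
        messages.append(                         # 3. observe, loop again
            {"role": "tool", "name": reply.tool_name, "content": str(result)}
        )
    raise MaxTurnsExceeded(f"no final output after {max_turns} turns")


# Scripted stand-in for an LLM: call a tool first, then answer the user.
def scripted_llm(messages: List[Dict[str, Any]]) -> ModelReply:
    if len(messages) == 1:
        return ModelReply(tool_name="get_order_status", tool_args={"order_id": "A1"})
    return ModelReply(content=f"Your order is {messages[-1]['content']}.")


answer = run_agent(scripted_llm, {"get_order_status": lambda order_id: "shipped"},
                   "Where is order A1?")
# answer == "Your order is shipped."
```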

When a single Agent handles many tasks that differ in detail but share the same logic, maintaining a separate Prompt for each quickly becomes burdensome. A more effective strategy is to use Prompt Templates: design one base Prompt containing variables (such as {{user_first_name}} or {{policy_document}}) and fill them in dynamically for each task. This greatly simplifies maintaining and evaluating Prompts.
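A Prompt Template needs little more than placeholder substitution. The sketch below assumes the `{{variable}}` syntax used in the text; `render_prompt` and the template wording are illustrative, not taken from the guide. Failing loudly on a missing variable is a deliberate choice, since a silently unfilled `{{policy_document}}` is much harder to catch during evaluation.

```python
import re
from typing import Mapping

# Matches {{name}}, tolerating spaces inside the braces.
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(template: str, variables: Mapping[str, str]) -> str:
    """Fill every {{name}} placeholder; raise if any variable is missing."""
    missing = sorted(set(_PLACEHOLDER.findall(template)) - set(variables))
    if missing:
        raise KeyError(f"missing template variables: {missing}")
    # A function replacement inserts values verbatim (no backslash escapes).
    return _PLACEHOLDER.sub(lambda m: variables[m.group(1)], template)


SUPPORT_TEMPLATE = (
    "You are a customer support agent. Address the user as {{user_first_name}}. "
    "Follow this policy exactly:\n{{policy_document}}"
)

prompt = render_prompt(
    SUPPORT_TEMPLATE,
    {"user_first_name": "Ana", "policy_document": "Refunds are allowed within 30 days."},
)
```

The same function can fill `{{help_center_doc}}` in the document-conversion Prompt quoted earlier.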

When is it necessary to introduce multi-agents?

Although multi-agent systems may sound more powerful, OpenAI’s suggestion is to first fully explore and leverage the potential of a single agent. This is because introducing more agents will inevitably bring additional complexity and management overhead.

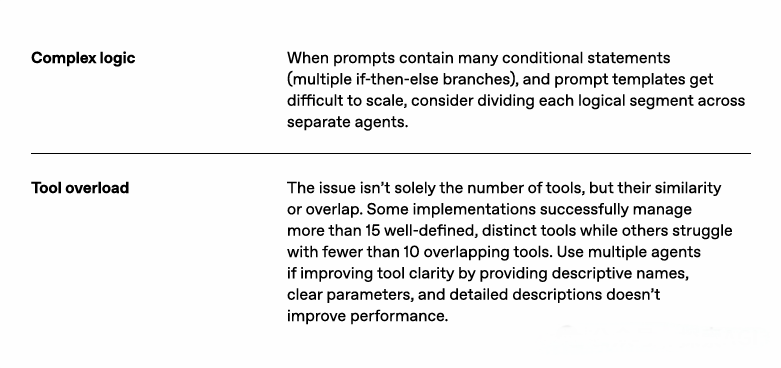

Only in the following two typical scenarios is it necessary to seriously consider splitting tasks among multiple Agents:

- Overly Complex Logical Branches: When your core Prompt contains a large number of conditional statements (e.g., multi-level nested if-then-else), making the Prompt itself difficult to understand, maintain, and extend.

- Tool Overload or Functional Overlap: The issue is not the absolute number of tools but whether they are clearly defined and functionally unique. If an Agent frequently struggles to choose between multiple tools with similar functions or unclear descriptions, and its performance still cannot be improved even after optimizing the tools’ names, descriptions, and parameter definitions, then splitting the related tools and logic into separate, specialized Agents may become necessary.

Interestingly, the guide notes that in some successful cases a single Agent effectively manages more than 15 well-defined, distinct tools, while in others fewer than 10 tools with overlapping functions are enough to cause confusion.

Multi-Agent Systems

Once you determine that multiple Agents need to collaborate on a task, OpenAI describes two broadly applicable orchestration patterns:

Manager Mode (Agents as Tools):

In this mode, there is a central “Manager” Agent that plays the role of a coordinator. It is responsible for receiving the initial user request and then assigning different parts of the task to multiple subordinate “Expert” Agents by invoking them as tools (via tool calls). Each Expert Agent specializes in a specific task or domain. Finally, the Manager Agent collects and integrates the results from all the Expert Agents to form a unified final output.

The advantage of this mode is its clear control flow, which makes it easy to manage and reason about. It is particularly suitable for complex workflows where the user should interact with a single Agent and the results of multiple sub-tasks must be synthesized.

In terms of technical implementation, each Expert Agent is typically encapsulated as a function or API, allowing it to be invoked by the Manager Agent just like a regular tool (for example, in OpenAI’s Agents SDK, the `agent.as_tool()` method can be used).
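The Manager Mode can be illustrated without any SDK. In this plain-Python sketch, `SimpleAgent.respond` is a stub standing in for an LLM call, `as_tool` only loosely mirrors the idea behind the SDK’s `agent.as_tool()` (it is not that method’s signature), and `fixed_plan` is a hard-coded stand-in for the manager’s own LLM deciding which experts to call. What it shows is the control flow: the manager invokes experts like tools, keeps control throughout, and merges their outputs into one answer.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class SimpleAgent:
    """Stand-in for an LLM-backed expert; `respond` fakes the model call."""
    name: str
    instructions: str
    respond: Callable[[str], str]

    def as_tool(self, tool_name: str, description: str) -> Tuple[str, str, Callable[[str], str]]:
        # Expose the agent as (name, description, callable) so a manager
        # can invoke it exactly like any other tool.
        return tool_name, description, self.respond


def manager_run(
    plan: Callable[[str], List[Tuple[str, str]]],
    tools: Dict[str, Callable[[str], str]],
    request: str,
) -> str:
    """The manager stays in control: dispatch sub-tasks, then synthesize."""
    parts = [f"{name}: {tools[name](sub_task)}" for name, sub_task in plan(request)]
    return "\n".join(parts)


spanish = SimpleAgent("spanish_agent", "Translate to Spanish.",
                      lambda text: {"hello": "hola"}.get(text, "?"))
french = SimpleAgent("french_agent", "Translate to French.",
                     lambda text: {"hello": "bonjour"}.get(text, "?"))

expert_tools: Dict[str, Callable[[str], str]] = {}
for agent, lang in [(spanish, "spanish"), (french, "french")]:
    tool_name, _description, fn = agent.as_tool(f"translate_to_{lang}",
                                                f"Translate text to {lang}.")
    expert_tools[tool_name] = fn


def fixed_plan(request: str) -> List[Tuple[str, str]]:
    # Hypothetical: a real manager would let its LLM pick the experts.
    return [("translate_to_spanish", request), ("translate_to_french", request)]


result = manager_run(fixed_plan, expert_tools, "hello")
```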

Decentralized Mode (Agents Handing Off to Agents):

In this decentralized mode, Agents act as peers and transfer control of the workflow to one another through a mechanism called “Handoff”. A Handoff is usually a one-way transfer of control: when an Agent decides to pass a task to another Agent, it calls a dedicated Handoff tool or function.

Once a Handoff is invoked, execution immediately switches to the receiving Agent, and the current conversation state (context) is typically passed along with it. The new Agent then continues the task independently and interacts with the user directly.

This model is particularly suitable for scenarios that do not require a central coordinator to manage or aggregate results. A typical application is **Conversation Triage**. For example, an initial Triage Agent first receives the user’s request, determines the problem type, and then directly hands off the conversation to an Agent specialized in sales, technical support, or order management.

This mode is flexible: you can make handoffs strictly one-way, or give the target Agent its own Handoff tool so it can hand control back when necessary.
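A handoff can be sketched as the runner switching which Agent is active while carrying the conversation history along. Everything here is hypothetical plain Python: each `step` function stands in for an LLM call and returns a reply plus an optional handoff target, and the `handoffs` list plays the role of the Handoff tools an Agent is configured with. The order Agent is given a handoff back to triage to show the two-way option.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# A step reads the shared history and returns (reply, handoff_target or None).
Step = Callable[[List[str]], Tuple[str, Optional[str]]]


@dataclass
class PeerAgent:
    name: str
    step: Step
    handoffs: List[str] = field(default_factory=list)  # allowed targets


def run_with_handoffs(
    agents: Dict[str, PeerAgent], start: str, user_message: str, max_handoffs: int = 5
) -> Tuple[str, str]:
    history = [f"user: {user_message}"]  # conversation state travels along
    current = agents[start]
    for _ in range(max_handoffs + 1):
        reply, target = current.step(history)
        history.append(f"{current.name}: {reply}")
        if target is None:
            return current.name, reply   # this Agent answers the user
        if target not in current.handoffs:
            raise ValueError(f"{current.name} cannot hand off to {target}")
        current = agents[target]         # one-way transfer of control
    raise RuntimeError("too many handoffs")


def triage(history: List[str]) -> Tuple[str, Optional[str]]:
    # Hypothetical keyword routing; a real Triage Agent would use the LLM.
    text = history[0].lower()
    if "order" in text or "refund" in text:
        return "Routing you to order management.", "order_agent"
    return "Routing you to sales.", "sales_agent"


agents = {
    "triage_agent": PeerAgent("triage_agent", triage, ["sales_agent", "order_agent"]),
    "sales_agent": PeerAgent("sales_agent", lambda h: ("Here are our plans.", None)),
    "order_agent": PeerAgent(
        "order_agent",
        lambda h: (f"I can see {len(h)} earlier messages; checking your order.", None),
        handoffs=["triage_agent"],  # may hand control back if needed
    ),
}

owner, reply = run_with_handoffs(agents, "triage_agent", "Where is my order?")
```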

Guardrails: Ensuring the Safe and Reliable Operation of Agents

For any Agent system intended for production, designing and implementing effective Guardrails is an essential step. Their main purpose is to help you manage potential risks, such as leaking sensitive data (e.g., the system Prompt), generating inappropriate content that damages the brand’s reputation, and falling victim to prompt injection attacks.

OpenAI emphasizes the concept of layered defense. A single guardrail measure is often insufficient to provide comprehensive protection. It is necessary to combine multiple guardrails of different types and focuses to build a defense-in-depth system.

Common types of guardrails include:

- Relevance Classifier: Used to determine whether user inputs or agent responses deviate from predefined topic boundaries, preventing off-topic conversations.

- Safety Classifier: Specifically detects malicious inputs attempting to exploit system vulnerabilities, such as jailbreaks or prompt injection attacks.

- PII Filter: Inspects agent outputs before delivery to users, removing or redacting Personally Identifiable Information (PII).

- Moderation API: Leverages content moderation services (e.g., OpenAI or third-party providers) to filter harmful or inappropriate inputs/outputs involving hate speech, harassment, violence, etc.

- Tool Safeguards: Conducts risk assessments for each tool available to the agent (e.g., distinguishing read-only vs. write operations, reversibility, permission requirements, financial implications). Implements tiered security policies based on risk levels. For example, enforcing additional safety checks before high-risk tool executions or requiring manual confirmation.

- Rules-based Protections: Employs simple yet effective deterministic rules to counter known threats, such as blocklists, input length restrictions, or regular expressions (Regex) to filter potential attack patterns like SQL injection.

- Output Validation: Ensures agent responses align with brand image and values through meticulous prompt engineering and output inspections, avoiding reputation-damaging content.
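Two of the cheapest layers to add first are rules-based input checks and an output-side PII filter. The Python sketch below is deliberately simplistic: the length limit, the blocklist phrases, and the two PII patterns are illustrative assumptions, and regexes like these miss many real attacks and PII formats. In a layered design they would sit alongside LLM-based relevance and safety classifiers and a moderation service rather than replace them.

```python
import re
from typing import Callable, List, Optional

MAX_INPUT_CHARS = 2000  # hypothetical limit
BLOCKLIST = re.compile(r"ignore (all )?previous instructions|drop\s+table", re.IGNORECASE)
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

# Each check returns a violation reason, or None if the text passes.
Check = Callable[[str], Optional[str]]


def length_check(text: str) -> Optional[str]:
    return "input too long" if len(text) > MAX_INPUT_CHARS else None


def blocklist_check(text: str) -> Optional[str]:
    return "blocked pattern" if BLOCKLIST.search(text) else None


def check_input(text: str, checks: List[Check]) -> List[str]:
    """Run every layer and collect all violations instead of stopping at one."""
    return [reason for check in checks if (reason := check(text)) is not None]


def redact_pii(text: str) -> str:
    """Output-side PII filter: mask email addresses and SSN-shaped numbers."""
    text = EMAIL.sub("[EMAIL]", text)
    return US_SSN.sub("[SSN]", text)


INPUT_CHECKS: List[Check] = [length_check, blocklist_check]
```

Collecting every violation, rather than returning on the first, makes the logs more useful when you later tune which layers fire too often.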

Strategic Recommendations for Building Guardrails:

- First, focus on the two most critical areas: data privacy protection and content security.

- During the system’s operation, gradually identify new risk points based on actual edge cases and failure modes encountered, and add or improve corresponding guardrail measures in a targeted manner.

- Balance security against user experience. Overly strict guardrails can hinder the Agent’s normal functionality and frustrate users, so keep adjusting them as the Agent evolves and its use cases change.

It is worth mentioning that, in OpenAI’s own Agents SDK, a strategy called “Optimistic Execution” is adopted by default: the main Agent will first attempt to generate output or execute actions, while relevant guardrail checks are carried out concurrently in the background. If a policy violation is detected, the guardrail will promptly throw an exception to interrupt unsafe operations.
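The optimistic execution pattern maps naturally onto concurrent tasks. This asyncio sketch is an illustration, not the Agents SDK’s code: `agent_answer` and `off_topic_guardrail` are hypothetical stand-ins that sleep instead of calling models, and `GuardrailTripwire` is an invented exception name. The Agent starts working immediately, the guardrail runs alongside it, and the Agent’s output is released only after the guardrail passes; a tripped guardrail cancels the Agent’s task and re-raises.

```python
import asyncio


class GuardrailTripwire(Exception):
    """Raised when a guardrail detects a policy violation."""


async def agent_answer(user_input: str) -> str:
    await asyncio.sleep(0.05)  # stands in for a slower LLM call
    return f"Answer to: {user_input}"


async def off_topic_guardrail(user_input: str) -> None:
    await asyncio.sleep(0.01)  # stands in for a fast classifier call
    if "homework" in user_input.lower():
        raise GuardrailTripwire("off-topic request")


async def run_optimistically(user_input: str) -> str:
    # Start the agent right away and run the guardrail concurrently.
    agent_task = asyncio.create_task(agent_answer(user_input))
    guard_task = asyncio.create_task(off_topic_guardrail(user_input))
    try:
        await guard_task
    except GuardrailTripwire:
        agent_task.cancel()  # interrupt the optimistic work
        await asyncio.gather(agent_task, return_exceptions=True)
        raise
    # Only release the agent's output once the guardrail has passed.
    return await agent_task


print(asyncio.run(run_optimistically("Which plan suits a small team?")))
```

Cancelling only stops work still in progress; an action tool that has already run cannot be undone this way, which is one reason high-risk tools deserve their own safeguards.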

Don’t forget to plan for human intervention

Finally, the guide stresses the need to plan for human intervention. Especially in the early stages of Agent deployment, a smooth process for handing work over to a human is crucial.

This is not only the ultimate safety guarantee but also a crucial step in continuously improving the performance of the Agent. By analyzing cases that require human intervention, you can identify the shortcomings of the Agent, detect unforeseen edge cases, and provide valuable real-world data for model fine-tuning and evaluation.

Two scenarios typically call for human intervention:

- Exceeding failure thresholds: For example, if the Agent still cannot understand the user’s intent after several consecutive attempts, or an operation fails a set number of times in a row, the task is automatically escalated to a human.

- Performing high-risk operations: For operations that are sensitive, irreversible, or involve significant interests (such as canceling a user’s order, approving a large refund, executing a payment, etc.), before the reliability of the Agent is fully verified, a human review or confirmation step should be triggered by default.
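Both escalation triggers can be enforced in code around the Agent’s tool calls. In this hypothetical Python sketch, `approve` stands in for whatever review step you use (a ticket queue, a prompt to an on-call operator), the high-risk tool names are placeholders, and the failure threshold of 3 is an arbitrary default.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict

# Placeholder names for sensitive or irreversible operations.
HIGH_RISK_TOOLS = {"cancel_order", "issue_refund", "execute_payment"}


class EscalateToHuman(Exception):
    """Signals that the task should be handed to a human."""


@dataclass
class HumanInTheLoop:
    approve: Callable[[str, Dict[str, Any]], bool]  # human review step (stubbed)
    max_failures: int = 3
    failures: int = 0

    def record_failure(self, reason: str) -> None:
        self.failures += 1
        if self.failures >= self.max_failures:
            raise EscalateToHuman(f"{self.failures} consecutive failures: {reason}")

    def record_success(self) -> None:
        self.failures = 0  # the threshold counts consecutive failures only

    def call_tool(self, name: str, fn: Callable[..., Any], **kwargs: Any) -> Any:
        # High-risk operations need explicit human approval before running.
        if name in HIGH_RISK_TOOLS and not self.approve(name, kwargs):
            raise EscalateToHuman(f"reviewer did not approve {name}")
        return fn(**kwargs)


# Illustration only: this stub "reviewer" approves refunds up to 100.
hitl = HumanInTheLoop(approve=lambda name, args: args.get("amount", 0) <= 100)
print(hitl.call_tool("issue_refund", lambda amount: f"refunded {amount}", amount=50))
```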

Conclusion

The essence of an Agent is the combination of an LLM, tools, and instructions that lets it autonomously execute complex workflows. The foundations matter most: choose models with appropriate capabilities, design a standardized, well-documented toolset, and write clear, comprehensive instructions. Pick an orchestration pattern that matches the real complexity of the task, starting with a simple single-agent system and evolving iteratively. A layered, robust set of guardrails is a must for any production-grade Agent deployment. Finally, embrace iterative optimization: use human intervention to surface issues, gather feedback, and continuously improve the Agent’s performance and reliability.
