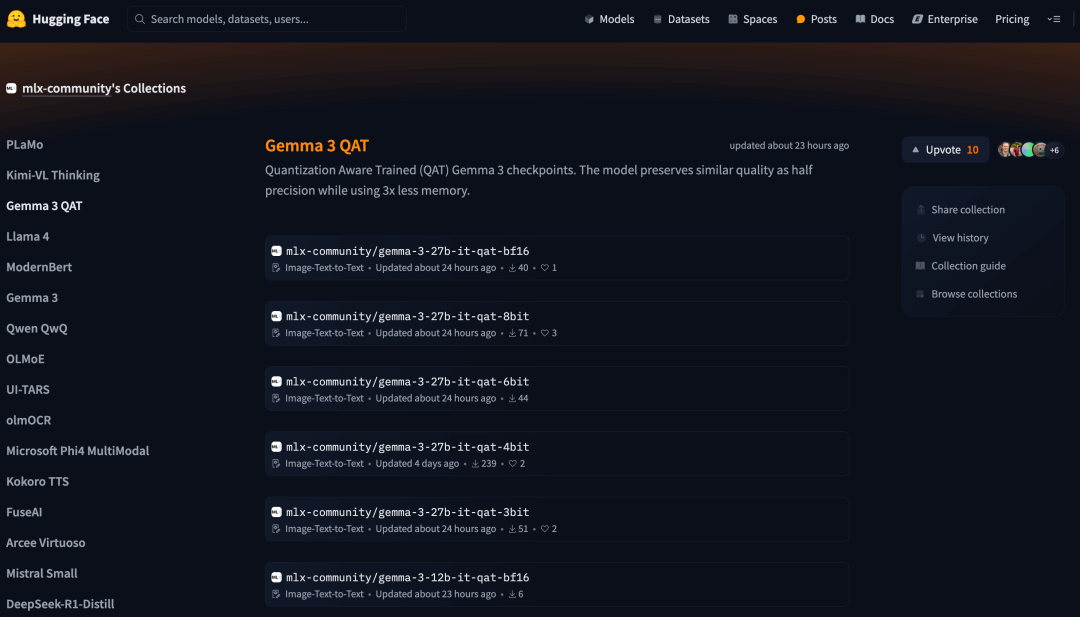

Just 16GB to Run 27B! Gemma-3 QAT Breaks the Local Deployment Barrier

Google has recently released a QAT (Quantization-Aware Training) version of its Gemma-3-27B model. This version maintains quality comparable to half-precision while reducing memory usage by two-thirds. The 4-bit quantized version requires only around 16GB, making it highly suitable for local deployment.

Currently, major single-machine inference frameworks such as Ollama, LM Studio, MLX, Gemma.cpp, and llama.cpp all support running Gemma-3. Users can access the MLX and GGUF versions via the provided links to explore various applications powered by this new lightweight model.

© Copyright Notice

The copyright of the article belongs to the author. Please do not reprint without permission.

Related Posts

No comments yet...