Are Large Models No Longer “Large”? BitNet Reinvents AI with Just 1 Bit

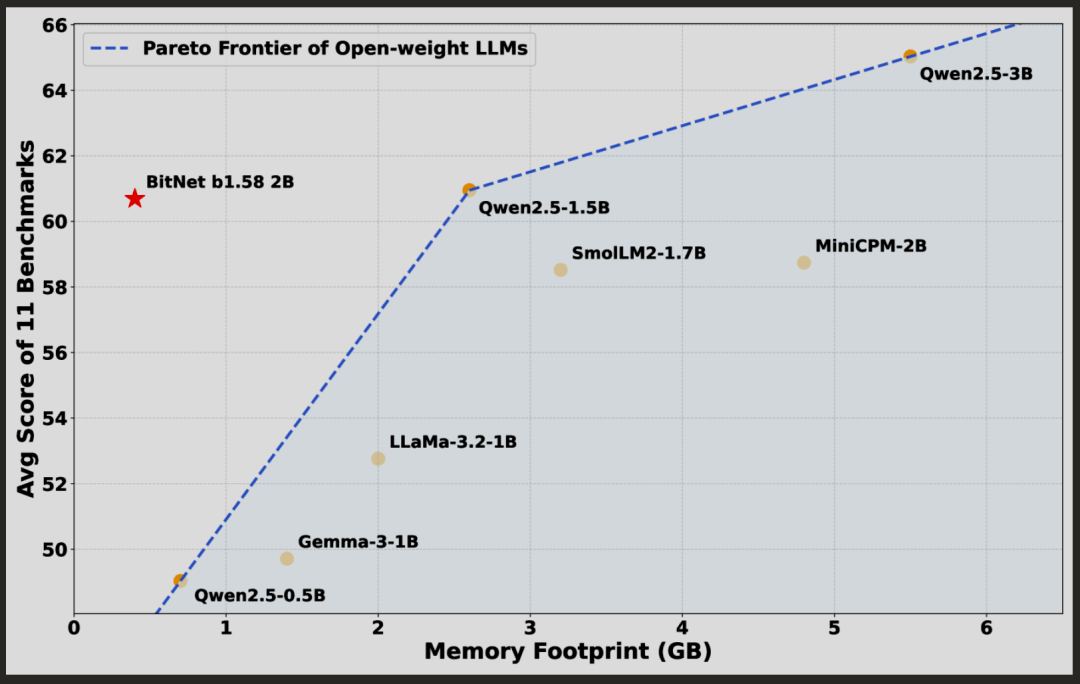

Microsoft Research has open-sourced BitNet b1.58 2B4T, which it describes as the first open-source, native 1-bit large language model at the 2-billion-parameter scale. The model has about 2 billion parameters, was trained on 4 trillion tokens (hence "2B4T"), and needs only about 0.4GB of memory for its non-embedding weights. Each weight takes one of just three values, {-1, 0, +1}. Because log2(3) ≈ 1.58, each weight carries about 1.58 bits of information, which is where the "b1.58" in the name comes from. "Native" means the model is trained with these ternary weights from the start rather than quantized after training. This design sharply reduces memory consumption and energy use compared with full-precision models. Despite its minimalist design, BitNet performs competitively across multiple benchmarks, with results comparable to those of full-precision open models of similar size. It also ships with a custom inference framework optimized for CPUs, opening up new possibilities for on-device AI. The model shows strong performance in language understanding, mathematical reasoning, and dialogue, marking a significant milestone on the path toward lightweight AI.
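To make the 1.58-bit idea concrete, here is a minimal Python sketch. It assumes the "absmean" weight quantization described for BitNet b1.58: scale a weight group by its mean absolute value, round, and clip to [-1, 1]. All function names are hypothetical and are not taken from the BitNet codebase, and the code is illustrative rather than a real inference kernel. The sketch also shows why ternary weights suit CPUs, since a matrix-vector product then needs only additions and subtractions plus one rescale per output. Finally, it gives a rough estimate of weight memory.

```python
import math


def bits_per_ternary_weight() -> float:
    # A weight drawn from {-1, 0, +1} carries log2(3) ≈ 1.585 bits of information.
    return math.log2(3)


def absmean_ternary_quantize(weights, eps=1e-5):
    """Quantize a flat list of float weights to {-1, 0, +1} with one shared scale.

    Assumption: absmean scheme (scale = mean |w|), as described for BitNet b1.58.
    Note: Python's round() breaks ties toward even; other implementations may differ.
    """
    scale = sum(abs(w) for w in weights) / len(weights)
    quantized = []
    for w in weights:
        q = round(w / (scale + eps))
        quantized.append(max(-1, min(1, q)))  # clip to the ternary range
    return quantized, scale


def ternary_matvec(q_rows, scale, x):
    """Multiply a ternary matrix (rows of -1/0/+1) by vector x.

    The inner loop has no multiplications: +1 adds x[j], -1 subtracts it,
    and 0 skips it. A single multiply by `scale` restores the magnitude.
    """
    out = []
    for row in q_rows:
        acc = 0.0
        for q, xj in zip(row, x):
            if q == 1:
                acc += xj
            elif q == -1:
                acc -= xj
        out.append(acc * scale)
    return out


def approx_weight_memory_gb(num_params, bits_per_weight):
    # Idealized storage for the weights alone (no packing overhead, no embeddings).
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    q, s = absmean_ternary_quantize([0.9, -0.05, -1.2, 0.3])
    print(q, round(s, 4))  # [1, 0, -1, 0] 0.6125
    print(ternary_matvec([[1, 0, -1, 0], [0, 1, 1, -1]], 0.5, [1.0, 2.0, 3.0, 4.0]))  # [-1.0, 0.5]
    print(round(approx_weight_memory_gb(2e9, bits_per_ternary_weight()), 3))  # 0.396
    print(approx_weight_memory_gb(2e9, 16))  # 4.0 (same count at 16 bits)
```

The back-of-the-envelope result (about 0.4GB for 2 billion ternary weights versus about 4GB at 16 bits) lines up with the memory figure quoted above. Real deployments pack weights into hardware-friendly layouts, so actual sizes differ somewhat.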
