Flex.2 – preview: A text – to – image diffusion model launched by Ostris

What is Flex.2-preview?

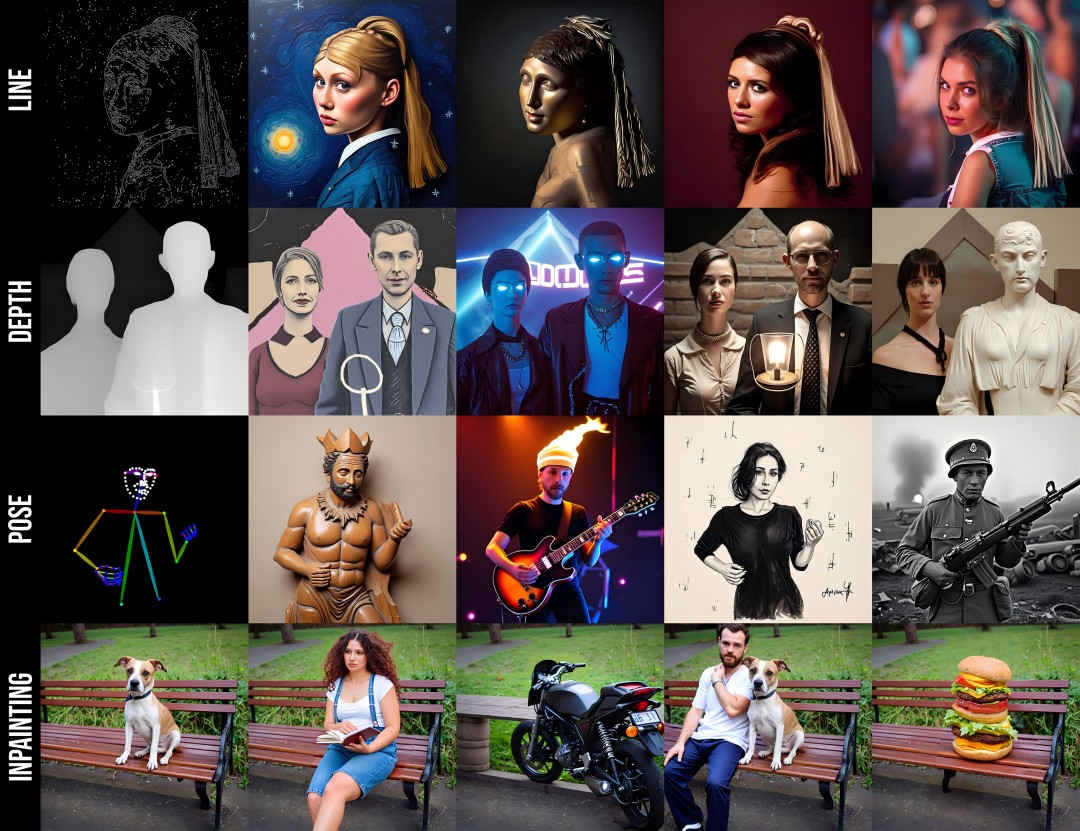

Flex.2-preview is an open-source 8-billion-parameter text-to-image diffusion model released by Ostris. It supports general control inputs (such as sketches, poses, and depth maps) and comes with built-in inpainting capabilities. Designed to meet various creative demands within a single model, it supports long text inputs (up to 512 tokens) and can be easily used with ComfyUI or the Diffusers library.

Currently in its early preview phase, Flex.2-preview demonstrates strong flexibility and potential, making it suitable for creative generation and experimental development.

Key Features of Flex.2-preview

-

Text-to-Image Generation:

Generates high-quality images from textual descriptions, supporting up to 512 tokens of input for understanding and visualizing complex prompts. -

Built-in Inpainting:

Supports targeted image repair or replacement. Users can provide an image and a mask, and the model generates new content within the specified area. -

General Control Inputs:

Accepts various forms of guidance such as sketch maps, pose estimations, and depth maps to steer the image generation process. -

Flexible Fine-Tuning:

Allows for fine-tuning using techniques like LoRA (Low-Rank Adaptation) to adapt the model for specific styles or tasks.

Technical Overview of Flex.2-preview

-

Diffusion Model Architecture:

Based on step-wise denoising to generate images. The model starts from random noise and learns how to transform it into images aligned with the textual description. -

Multi-Channel Inputs:

-

Text Embeddings: Convert text prompts into embeddings that the model can interpret.

-

Control Inputs: Guide image generation based on additional inputs like pose or depth maps.

-

Inpainting Inputs: Combine the original image and mask to regenerate targeted areas with new content.

-

-

16-Channel Latent Space:

Utilizes a 16-channel latent space that accommodates inputs such as noise, inpainting masks, source images, and control data. -

Optimized Inference Algorithm:

Incorporates high-efficiency techniques like the Guidance Embedder to speed up generation while maintaining high output quality.

Project Link

-

HuggingFace Model Repository:

https://huggingface.co/ostris/Flex.2-preview

Application Scenarios for Flex.2-preview

-

Creative Design:

Rapidly generate concept art and illustrations, supporting artists and designers in visualizing ideas. -

Image Restoration:

Repair photo defects or fill in missing areas, making it ideal for image editing tasks. -

Content Creation:

Generate assets for ads, videos, and games, enhancing production efficiency. -

Education and Research:

Provide AI-generated teaching materials and serve as a platform for AI research experiments. -

Personalized Customization:

Fine-tune the model to generate images that match individual artistic styles or specific needs.

Related Posts