Step1X-Edit – A universal image editing framework open-sourced by StepFun

What is Step1X-Edit?

Step1X-Edit is a universal image editing framework developed by the StepFun team. It combines a multimodal large language model (MLLM) with a diffusion model, with the aim of narrowing the performance gap between open-source image editing models and leading closed-source models such as GPT-4o and Gemini2 Flash. The framework takes a reference image and a user's editing instruction, extracts latent embeddings from them, and uses those embeddings to generate the target image. Its training data comes from a high-quality data generation pipeline that produced over 1 million image-instruction pairs.
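The data flow described above can be sketched as three composable stages. This is a minimal illustration, not StepFun's code: `EditRequest`, `edit_image`, and the toy stage functions are hypothetical names, images and embeddings are plain lists of floats, and we assume the diffusion stage also receives the reference image so that unedited content can be preserved.

```python
from dataclasses import dataclass
from typing import Callable, List

Vector = List[float]


@dataclass
class EditRequest:
    reference_image: Vector  # stand-in for the (encoded) reference image
    instruction: str         # natural-language editing instruction


def edit_image(
    request: EditRequest,
    mllm_encode: Callable[[Vector, str], List[Vector]],
    connector: Callable[[List[Vector]], List[Vector]],
    diffusion_decode: Callable[[List[Vector], Vector], Vector],
) -> Vector:
    # 1. The MLLM reads image and instruction together and returns token embeddings.
    tokens = mllm_encode(request.reference_image, request.instruction)
    # 2. The connector maps those embeddings into the diffusion model's conditioning space.
    conditioning = connector(tokens)
    # 3. The diffusion decoder generates the edited image from the conditioning
    #    (assumption: it also sees the reference image).
    return diffusion_decode(conditioning, request.reference_image)


# Toy stand-ins (hypothetical) so the sketch runs end to end.
def toy_mllm(image: Vector, text: str) -> List[Vector]:
    # One "image token" plus one "text token" holding the word count.
    return [image, [float(len(text.split()))]]


def toy_connector(tokens: List[Vector]) -> List[Vector]:
    # Scale every embedding by 2 as a stand-in for a learned projection.
    return [[2.0 * v for v in tok] for tok in tokens]


def toy_decoder(conditioning: List[Vector], reference: Vector) -> Vector:
    # Shift each reference "pixel" by the text-derived conditioning value.
    return [px + conditioning[-1][0] for px in reference]


result = edit_image(
    EditRequest([1.0, 2.0], "make it brighter"), toy_mllm, toy_connector, toy_decoder
)
print(result)  # [7.0, 8.0]
```

Passing the stages in as callables keeps the sketch agnostic about which MLLM or diffusion backbone sits behind each one.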

Key Features


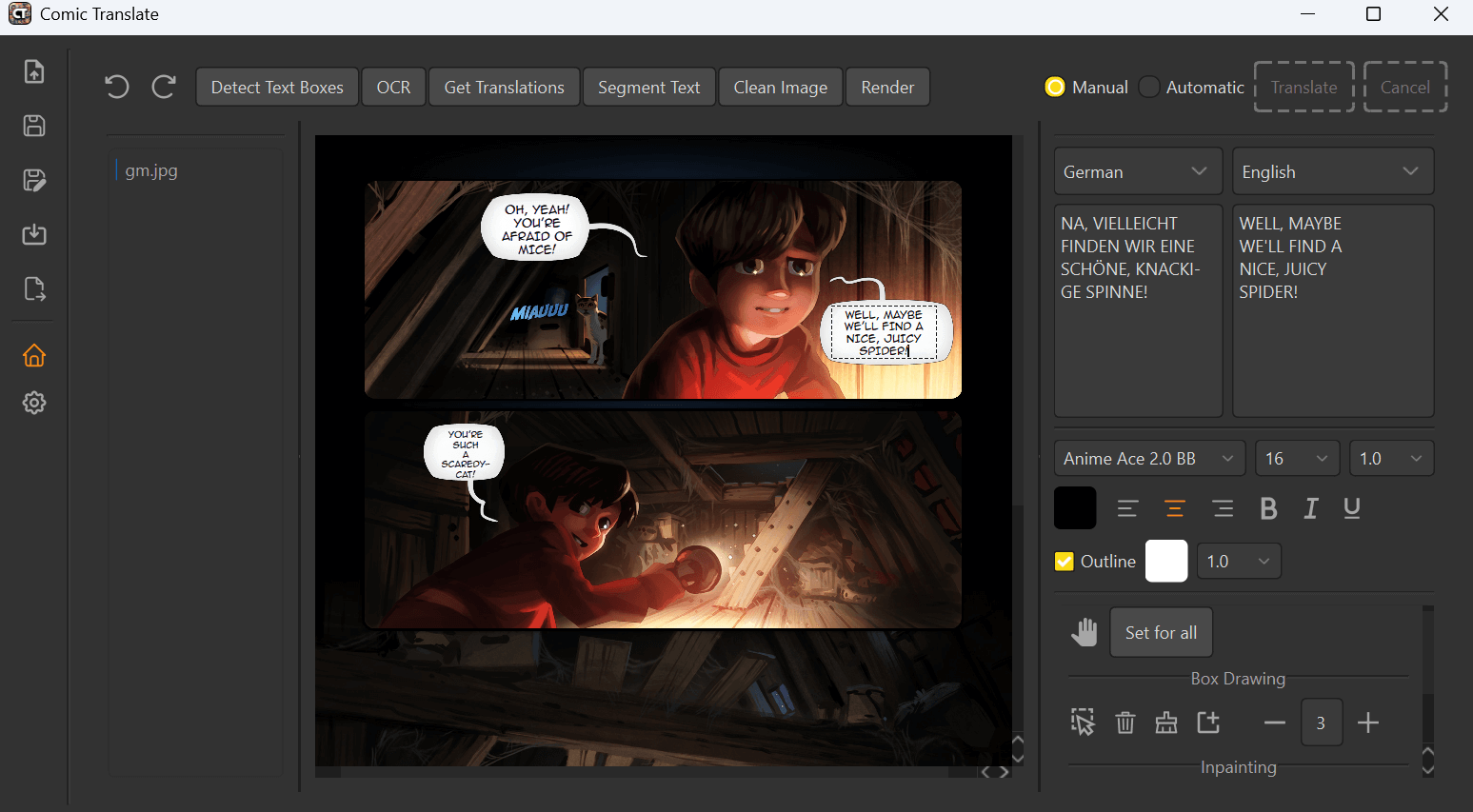

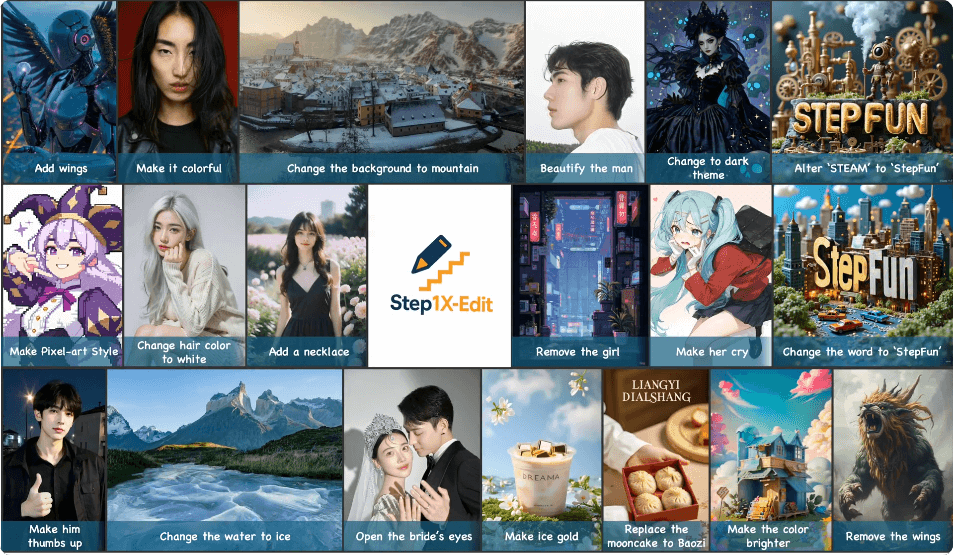

Versatile Editing Capabilities

Step1X-Edit supports a wide range of image editing tasks, such as adding, removing, or replacing subjects, changing backgrounds, adjusting colors, modifying materials, transferring styles, beautifying portraits, altering text, adjusting tone, and more.

Natural Language Instruction

The framework lets users issue editing commands in natural language and can understand and carry out complex instructions described in text.

High-Quality Image Generation

Step1X-Edit is designed to generate high-fidelity, realistic images suitable for professional use.

Real-World Scene Adaptability

Trained on a large-scale, high-quality dataset, the model is capable of handling complex editing tasks commonly encountered in real-world scenarios.

Technical Principles


Multimodal Large Language Models (MLLM)

Step1X-Edit uses an MLLM to process the reference image and the user instruction together and extract their semantic information. Thanks to the MLLM's strong semantic understanding, the resulting embedding vectors capture what the requested edit should accomplish.
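To make the MLLM stage more concrete, the sketch below shows one way its output could be handed on: a sequence of per-token embeddings is projected into the width the diffusion model expects. The article does not describe this projection, so the single linear layer and the mean-pooled global vector are assumptions chosen for illustration, and `connect` and `linear` are hypothetical names. In a real system the weights would be learned and the token embeddings would come from the MLLM's hidden states.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def linear(x: Sequence[float], weight: Matrix, bias: Sequence[float]) -> List[float]:
    # weight has shape (d_out, d_in); computes weight @ x + bias.
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def connect(
    token_embeddings: List[List[float]], weight: Matrix, bias: Sequence[float]
) -> Tuple[List[List[float]], List[float]]:
    # Project every MLLM token embedding into the diffusion model's width.
    projected = [linear(tok, weight, bias) for tok in token_embeddings]
    # Mean-pool the projected tokens into one global summary vector (illustrative).
    width = len(projected[0])
    pooled = [sum(tok[i] for tok in projected) / len(projected) for i in range(width)]
    return projected, pooled


# Three hypothetical 2-d MLLM token embeddings, projected to width 3.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b = [0.0, 0.0, 0.5]
per_token, global_vec = connect(tokens, W, b)
print(per_token[2])  # [1.0, 1.0, 2.5]
```

Keeping both the per-token sequence and a pooled summary lets a downstream model attend to fine-grained instruction details while also receiving a single compact condition; whether Step1X-Edit does exactly this is not stated in the article.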

Diffusion Models

The framework uses a diffusion model with a DiT-style (Diffusion Transformer) architecture to decode these embeddings into the target image. Diffusion models offer high-fidelity generation, which makes them well suited to producing realistic images.
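The article does not say how the diffusion model samples. As an illustration only, the sketch below uses a generic flow-matching (rectified-flow) Euler sampler, which is our assumption rather than a documented detail of Step1X-Edit. A hypothetical `oracle_velocity` that already knows the clean target stands in for the trained DiT; in a real model the velocity would be predicted from the noisy latent, the timestep, and the MLLM-derived conditioning.

```python
import random
from typing import Callable, List

Vec = List[float]
VelocityFn = Callable[[Vec, float], Vec]


def euler_sample(velocity: VelocityFn, noise: Vec, steps: int) -> Vec:
    """Integrate dx/dt = v(x, t) from t = 1 (pure noise) down to t = 0 (clean latent).

    Assumes the linear path x_t = (1 - t) * x_0 + t * noise, so v = noise - x_0.
    """
    x = list(noise)
    ts = [1.0 - i / steps for i in range(steps + 1)]  # 1.0, ..., 0.0
    for t, t_next in zip(ts[:-1], ts[1:]):
        v = velocity(x, t)
        # Euler step backwards in time: x_{t_next} = x_t - (t - t_next) * v.
        x = [xi - (t - t_next) * vi for xi, vi in zip(x, v)]
    return x


def oracle_velocity(target: Vec) -> VelocityFn:
    # Exact velocity on the straight path toward `target`: (x_t - x_0) / t.
    # Stand-in for a trained, condition-aware DiT (hypothetical).
    return lambda x, t: [(xi - ci) / t for xi, ci in zip(x, target)]


random.seed(0)
target_latent = [0.5, -1.0, 2.0, 0.0]  # made-up "edited image" latent
noise = [random.gauss(0.0, 1.0) for _ in target_latent]
sample = euler_sample(oracle_velocity(target_latent), noise, steps=10)
```

Because the oracle velocity is exact and the path is straight, even a single Euler step would land on the target; a learned velocity is only approximate, which is why practical samplers take many steps.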

Data Generation Pipeline

Step1X-Edit relies on a data generation pipeline that produced over 1 million image-instruction pairs. The dataset spans a wide range of editing tasks, so the model can learn diverse editing operations.
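One common stage in a data pipeline of this kind is filtering: keeping only high-quality, non-redundant samples. The sketch below illustrates that idea under stated assumptions. The `EditSample` record, its `quality` score, the 0.8 threshold, and the duplicate rule are all hypothetical, since the article does not describe the pipeline's actual filtering criteria.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class EditSample:
    source_image: str  # ID or path of the original image
    instruction: str   # natural-language editing instruction
    target_image: str  # ID or path of the edited result
    task: str          # editing category, e.g. "remove" or "add"
    quality: float     # hypothetical quality score in [0, 1]


def filter_samples(candidates: Iterable[EditSample], min_quality: float = 0.8) -> List[EditSample]:
    """Keep samples above a quality threshold, dropping repeated (image, instruction) pairs."""
    kept: List[EditSample] = []
    seen = set()
    for sample in candidates:
        # Normalize the instruction so trivial variants count as duplicates.
        key = (sample.source_image, sample.instruction.strip().lower())
        if sample.quality < min_quality or key in seen:
            continue
        seen.add(key)
        kept.append(sample)
    return kept


candidates = [
    EditSample("img1.png", "Remove the car", "img1_a.png", "remove", 0.92),
    EditSample("img1.png", "remove the car ", "img1_b.png", "remove", 0.85),  # duplicate
    EditSample("img2.png", "Make it watercolor", "img2_a.png", "style_transfer", 0.60),  # low quality
    EditSample("img3.png", "Add a red hat", "img3_a.png", "add", 0.81),
]
dataset = filter_samples(candidates)
task_counts = Counter(s.task for s in dataset)
print(len(dataset), dict(task_counts))  # 2 {'remove': 1, 'add': 1}
```

Counting kept samples per task is a cheap way to check that filtering has not wiped out a whole editing category.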

Training Strategy

Training starts from the weights of a text-to-image model, which helps preserve aesthetic quality and visual consistency. The connector module that links the MLLM to the diffusion model is then trained jointly with the downstream diffusion model to optimize overall performance.
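Initializing from a text-to-image checkpoint generally means copying every pretrained weight whose name and size match the new model, while leaving newly added modules at their fresh initialization. The sketch below shows that partial loading with plain dictionaries. The parameter names (`dit.*`, `connector.*`) and `init_from_t2i` are hypothetical, and it assumes the connector is the only new module.

```python
from typing import Dict, List, Tuple

Params = Dict[str, List[float]]


def init_from_t2i(edit_model: Params, t2i_checkpoint: Params) -> Tuple[Params, List[str]]:
    """Copy matching pretrained weights; report which parameters keep their fresh init."""
    initialized: Params = {}
    fresh: List[str] = []
    for name, value in edit_model.items():
        pretrained = t2i_checkpoint.get(name)
        if pretrained is not None and len(pretrained) == len(value):
            initialized[name] = list(pretrained)  # reuse text-to-image weights
        else:
            initialized[name] = list(value)       # new module, e.g. the connector
            fresh.append(name)
    return initialized, fresh


# Hypothetical parameter names and values.
t2i_checkpoint = {
    "dit.block0.weight": [0.1, 0.2],
    "dit.block1.weight": [0.3, 0.4],
    "text_encoder.proj": [9.0],  # not part of the editing model, so ignored
}
edit_model = {
    "dit.block0.weight": [0.0, 0.0],
    "dit.block1.weight": [0.0, 0.0],
    "connector.proj": [0.0, 0.0, 0.0],
}
params, fresh = init_from_t2i(edit_model, t2i_checkpoint)
print(fresh)  # ['connector.proj']
```

In this sketch, joint training would then update both the reused `dit.*` weights and the freshly initialized `connector.*` weights together; returning the list of fresh parameters makes it easy to confirm that only the intended modules start from scratch.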

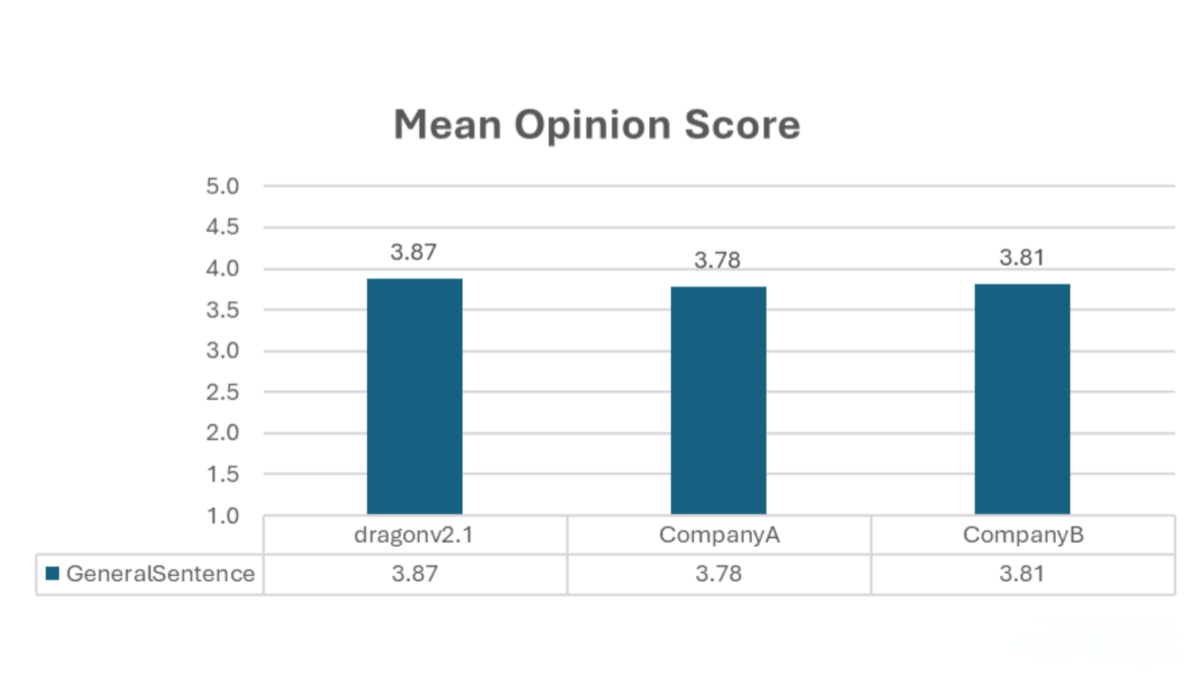

Benchmarking with GEdit-Bench

Step1X-Edit also introduces GEdit-Bench, a new benchmark built from real user instructions to evaluate how models perform on real-world editing requests. It covers a variety of editing tasks, so results reflect effectiveness in practical scenarios.
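Because a benchmark like this groups samples by editing task, results are often summarized per category and then averaged. The sketch below shows a macro-averaged aggregation; the category names and scores are made up, `aggregate_scores` is a hypothetical helper, and GEdit-Bench's actual scoring protocol is described in the paper rather than reproduced here.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def aggregate_scores(results: Iterable[Tuple[str, float]]) -> Tuple[Dict[str, float], float]:
    """Average scores per task category, then macro-average across categories."""
    by_task: Dict[str, List[float]] = defaultdict(list)
    for task, score in results:
        by_task[task].append(score)
    per_task = {task: mean(scores) for task, scores in by_task.items()}
    # Macro average: every category counts equally, however many samples it has.
    overall = mean(list(per_task.values()))
    return per_task, overall


# Made-up per-sample scores, for illustration only.
results = [
    ("background_change", 7.0),
    ("background_change", 8.0),
    ("text_change", 5.0),
    ("style_transfer", 6.0),
    ("style_transfer", 9.0),
]
per_task, overall = aggregate_scores(results)
```

Here the macro average is about 6.67, whereas pooling all five samples would give 7.0: averaging categories equally gives the single low `text_change` score more weight, so a weak task type cannot hide behind well-populated ones.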

Project Links

- Official Website: https://step1x-edit.github.io/
- GitHub Repository: https://github.com/stepfun-ai/Step1X-Edit
- HuggingFace Model Library: https://huggingface.co/stepfun-ai/Step1X-Edit
- arXiv Paper: https://arxiv.org/pdf/2504.17761
- Online Demo: https://huggingface.co/spaces/stepfun-ai/Step1X-Edit

Application Scenarios


Creative Design

Quickly generate creative images by changing backgrounds, adjusting colors, and adding elements, improving design efficiency.

Film Post-Production

Apply effects such as adding or removing objects, changing appearances, or adjusting tones to reduce post-production costs.

Social Media

Beautify photos, add fun elements, or adjust styles to make content more appealing.

Game Development

Generate characters, scenes, and props, or quickly adjust outfits and styles, reducing the time needed to produce art assets.

Education

Generate educational materials such as modified historical photos or scientific illustrations to improve teaching effectiveness.
