The Secret Weapon for AI Model Deployment: A Deep Dive into the ApX VRAM Calculator

🧠 What Is the ApX VRAM Calculator?

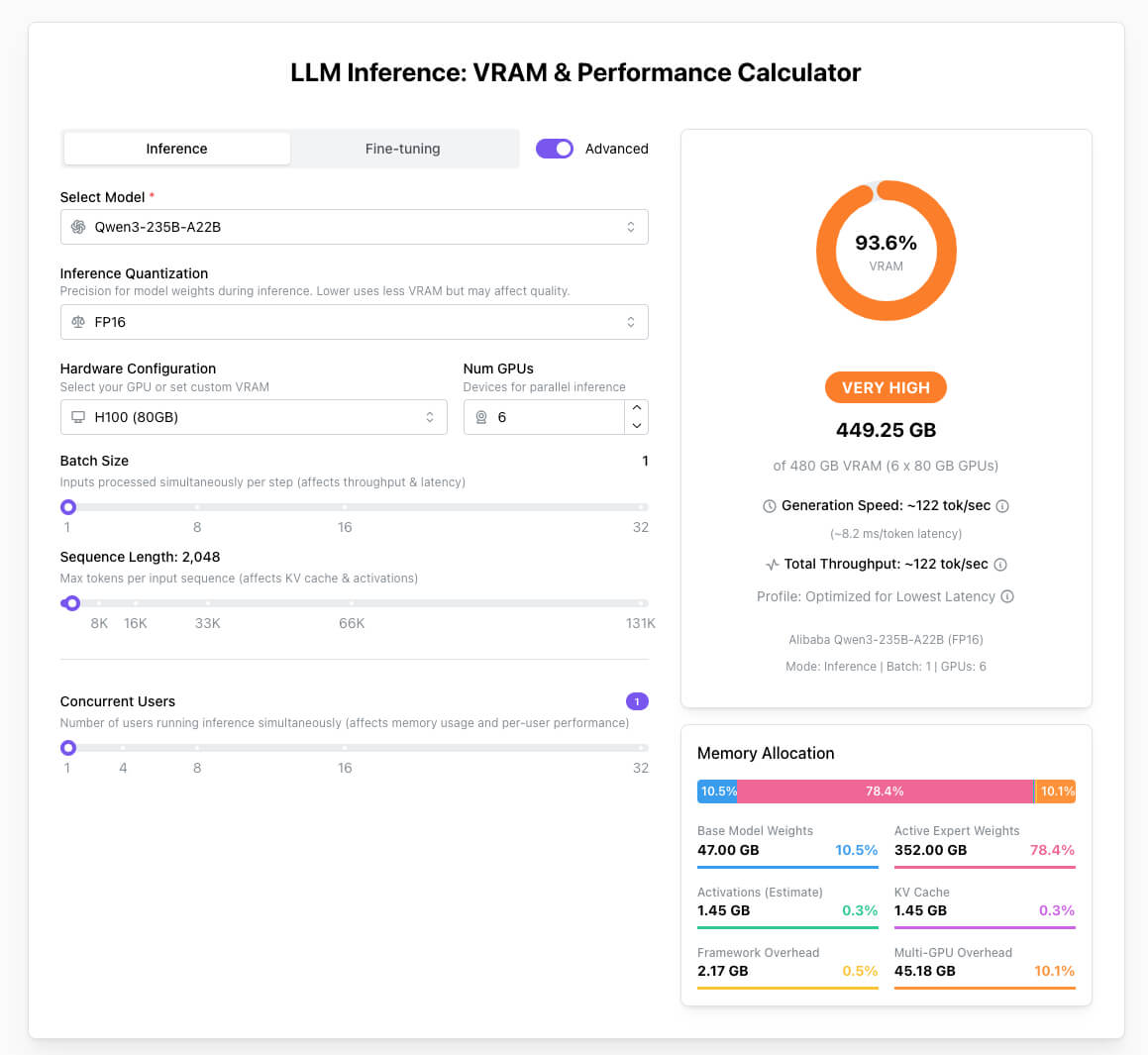

The ApX VRAM Calculator is a web application that estimates how much GPU VRAM is needed to run large language models (LLMs). Its estimates are based on input parameters such as model configuration, quantization level, hardware specs, batch size, and sequence length. This makes it useful for planning model deployments, optimizing inference, and sizing hardware.

🔧 Key Features

- Model Configuration: Supports various mainstream LLMs and allows custom parameter input.
- Quantization Options: Offers several precision and quantization settings (e.g., FP16, INT8) so you can evaluate how precision affects VRAM usage.
- Hardware Customization: Lets users select or manually enter the GPU type, memory size, and number of GPUs, which is useful for simulating multi-GPU setups.
- Batch Size & Sequence Length Control: Adjust these inputs to see how they affect VRAM consumption.
- Real-Time Estimates: Instantly shows estimated VRAM usage, generation speed, and total throughput for each configuration.
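To see why the quantization setting matters so much, here is a minimal Python sketch of weights-only memory at different precisions. It is not the calculator's internal model: the bytes-per-parameter table and the 7B parameter count are illustrative assumptions, and the sketch ignores the scale and zero-point metadata that real INT8/INT4 formats add.

```python
# Assumed bytes per parameter for common precisions (weights only,
# ignoring quantization metadata such as per-group scales).
BYTES_PER_PARAM = {
    "FP32": 4.0,
    "FP16": 2.0,
    "BF16": 2.0,
    "INT8": 1.0,
    "INT4": 0.5,
}


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Return the memory needed just to hold the model weights, in GiB."""
    return num_params * BYTES_PER_PARAM[precision] / 1024**3


if __name__ == "__main__":
    params = 7e9  # a hypothetical 7B-parameter model
    for precision in ("FP16", "INT8", "INT4"):
        print(f"{precision}: {weight_memory_gib(params, precision):.1f} GiB")
```

Because weight memory scales linearly with bytes per parameter, halving the precision roughly halves this part of the footprint; the KV cache and runtime overhead do not shrink with it unless they are quantized too.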

⚙️ How It Works

The ApX VRAM Calculator bases its estimates on a combination of the following technical elements:

- Model Architecture: Parameter count, number of layers, hidden dimension, and similar properties.
- Quantization Level: The chosen precision sets the number of bits stored per parameter, which directly affects memory usage.
- Sequence Length & Batch Size: These determine the size of intermediate activations and the key-value (KV) cache, both of which occupy VRAM.
- Hardware Specs: The GPU model and the number of GPUs determine how the model is split across devices, which affects how much VRAM each GPU needs.

The tool combines all of these factors in its own internal estimation model to produce approximate memory usage predictions.
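The factors above can be combined into a rough back-of-the-envelope estimate. The sketch below is an approximation under stated assumptions, not ApX's estimation model: it counts weights plus a KV cache sized as 2 × layers × batch size × sequence length × KV heads × head dimension × bytes per element, adds a flat 10% overhead (a guess standing in for activations and runtime buffers), and splits the total evenly across GPUs. The `ModelConfig` fields, the overhead value, and the example configuration are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ModelConfig:
    num_params: float  # total parameter count
    num_layers: int    # number of transformer blocks
    num_kv_heads: int  # key/value heads (equals attention heads without GQA)
    head_dim: int      # dimension of each attention head


def estimate_vram_gib(
    model: ModelConfig,
    weight_bytes: float,     # bytes per weight, e.g. 2.0 for FP16
    batch_size: int,
    seq_len: int,
    kv_bytes: float = 2.0,   # assumed FP16 KV cache
    overhead: float = 0.10,  # assumed fraction for activations and buffers
    num_gpus: int = 1,
) -> dict:
    """Rough VRAM estimate in GiB (a sketch, not the calculator's method)."""
    weights = model.num_params * weight_bytes
    # One K and one V tensor per layer, each batch x seq_len x kv_heads x head_dim.
    kv_cache = (2 * model.num_layers * batch_size * seq_len
                * model.num_kv_heads * model.head_dim * kv_bytes)
    total = (weights + kv_cache) * (1 + overhead)
    gib = 1024**3
    return {
        "weights_gib": weights / gib,
        "kv_cache_gib": kv_cache / gib,
        "total_gib": total / gib,
        # Naive even split; real multi-GPU setups add communication buffers.
        "per_gpu_gib": total / num_gpus / gib,
    }


if __name__ == "__main__":
    # Hypothetical 7B model: 32 layers, 32 KV heads of dimension 128.
    model = ModelConfig(num_params=7e9, num_layers=32, num_kv_heads=32, head_dim=128)
    estimate = estimate_vram_gib(model, weight_bytes=2.0, batch_size=1,
                                 seq_len=4096, num_gpus=2)
    for name, value in estimate.items():
        print(f"{name}: {value:.2f} GiB")
```

Under these assumptions, a 4,096-token context at batch size 1 adds exactly 2 GiB of FP16 KV cache on top of roughly 13 GiB of FP16 weights. The cache term is linear in both batch size and sequence length, so doubling either one doubles it, which is why those two controls can move the estimate so sharply.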

📍 Project Link

You can access the ApX VRAM Calculator here:

🔗 https://apxml.com/tools/vram-calculator

🧩 Use Cases

- Pre-deployment VRAM Planning: Estimate GPU memory needs before launching LLMs in production environments.
- Inference Optimization: Find the best balance between performance and resource usage by tweaking quantization and batch size.
- Hardware Sizing: Choose the right GPU model and configuration based on projected memory requirements.
- Education & Research: A valuable teaching tool for understanding how various factors affect the memory needs of AI models.
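For hardware sizing, a VRAM estimate can be turned into a GPU count. The following is a hypothetical helper, not part of the calculator: it assumes identical GPUs, a model that splits evenly across them (as tensor parallelism roughly does), and only 90% of each card's memory being usable after runtime allocations. All three are simplifying assumptions.

```python
import math


def min_gpus_needed(required_gib: float, gpu_mem_gib: float,
                    usable_fraction: float = 0.9) -> int:
    """Smallest number of identical GPUs whose usable memory covers the estimate.

    usable_fraction is an assumed headroom factor for the CUDA context,
    memory fragmentation, and other runtime allocations.
    """
    if required_gib <= 0 or gpu_mem_gib <= 0 or not 0 < usable_fraction <= 1:
        raise ValueError("inputs must be positive and usable_fraction in (0, 1]")
    usable_per_gpu = gpu_mem_gib * usable_fraction
    return math.ceil(required_gib / usable_per_gpu)


if __name__ == "__main__":
    required = 40.0  # a total VRAM estimate in GiB, e.g. from a calculator
    for capacity in (24, 48, 80):  # hypothetical per-GPU capacities in GiB
        print(f"{capacity} GiB GPUs: {min_gpus_needed(required, capacity)} needed")
```

With a 40 GiB requirement, this sketch asks for two 24 GiB cards but only one 48 GiB or 80 GiB card. In practice, fewer and larger GPUs also avoid inter-GPU communication costs that an even split ignores.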
