What is QLIP

QLIP (Quantized Language-Image Pretraining) is a vision tokenization method introduced by NVIDIA and collaborators, combining high-quality image reconstruction with powerful zero-shot image understanding capabilities. QLIP is trained using a Binary Spherical Quantization (BSQ) autoencoder, jointly optimizing for both reconstruction and language-image alignment objectives. It can function as a visual encoder or image tokenizer, seamlessly integrating into multimodal models and delivering strong performance in both understanding and generation tasks. QLIP offers a new paradigm for building unified multimodal models.

Key Features of QLIP

-

High-Quality Image Reconstruction: Reconstructs high-quality images even at low compression rates.

-

Robust Semantic Understanding: Generates semantically rich visual tokens that support zero-shot image classification and multimodal understanding tasks.

-

Support for Multimodal Tasks: Works as a visual encoder or image tokenizer that can be integrated into multimodal systems for tasks like text-to-image generation and image-to-text generation.

-

Unified Multimodal Model Capability: Enables a single model to handle pure text, image-to-text, and text-to-image tasks concurrently.

Technical Principles of QLIP

-

Binary Spherical Quantization (BSQ): BSQ encodes images into discrete visual tokens by mapping high-dimensional points onto binary vertices on a unit hypersphere, enabling efficient quantization and compression.

-

Contrastive Learning Objective: QLIP introduces a contrastive learning objective to align visual tokens with language embeddings via image-text pairing. It uses an InfoNCE loss to pull matching image-text pairs closer in embedding space while pushing apart non-matching ones. This alignment enables both semantic understanding and faithful image reconstruction.

-

Two-Stage Training Strategy:

-

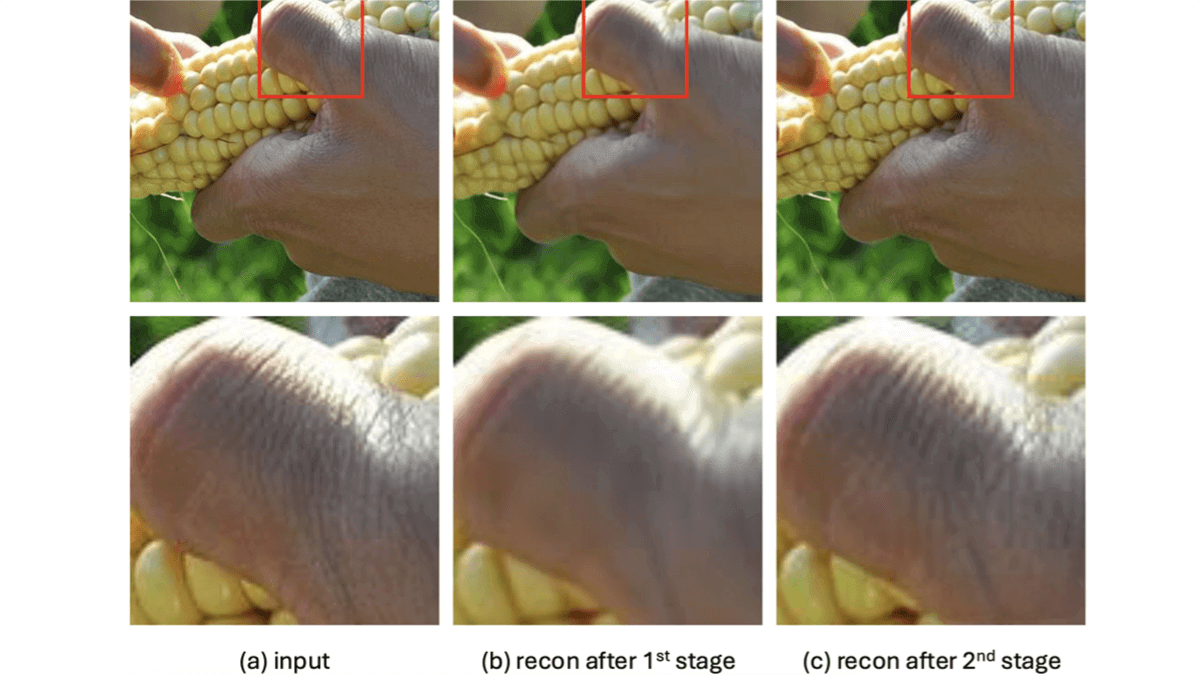

Stage 1: Jointly optimizes a weighted combination of reconstruction loss, quantization loss, and contrastive loss to learn semantically meaningful visual representations while preserving reconstruction quality.

-

Stage 2: Builds on stage 1 to further refine image reconstruction, especially high-frequency details, by fine-tuning the quantization bottleneck and visual decoder. In this stage, the text encoder is discarded and the visual encoder is frozen to avoid performance degradation during large-batch training.

-

-

Dynamic Loss Balancing: Dynamically adjusts the weights between contrastive and reconstruction losses using inverse loss values to balance the convergence speeds and resolve conflicts between the two objectives.

-

Accelerated Training & Better Initialization: Initializes the visual and text encoders from pretrained models (e.g., Masked Image Modeling or CLIP), greatly improving training efficiency and reducing required data.

QLIP Project Resources

-

Official Website: https://nvlabs.github.io/QLIP/

-

GitHub Repository: https://github.com/NVlabs/QLIP/

-

Hugging Face Models: https://huggingface.co/collections/nvidia/qlip

-

arXiv Paper: https://arxiv.org/pdf/2502.05178

Application Scenarios for QLIP

-

Multimodal Understanding: Applied in visual question answering (VQA) and graphical question answering (GQA) tasks to help models interpret images and generate accurate responses.

-

Text-to-Image Generation: Generates high-quality images from textual descriptions with semantically aligned details.

-

Image-to-Text Generation: Creates precise image captions, improving the accuracy of generated textual content.

-

Unified Multimodal Models: Enables a single model to perform text-only, image-to-text, and text-to-image tasks simultaneously.

Related Posts