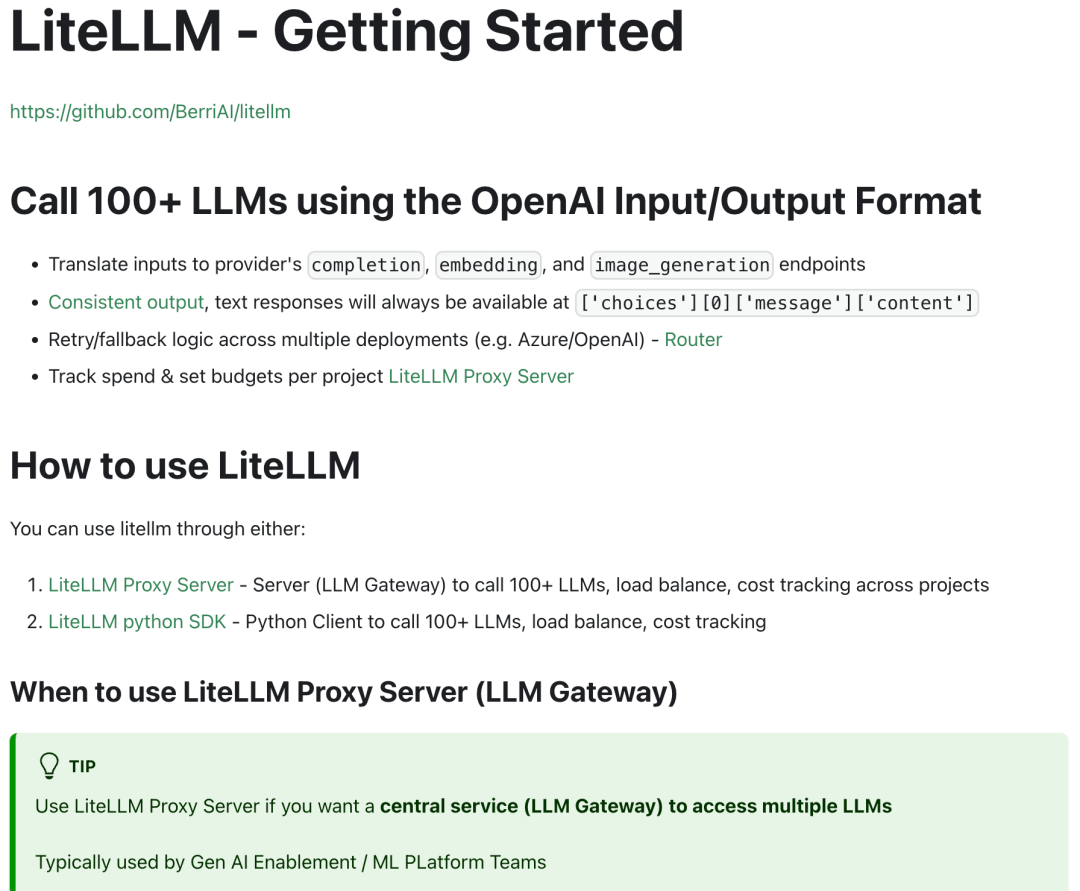

LiteLLM: The Ultimate AI Integration Tool for 100+ Language Models

What is LiteLLM?

LiteLLM is a lightweight tool that allows developers to call LLMs from different providers, including OpenAI, Azure, Anthropic, Cohere, Hugging Face, and more, using a unified API format.

It reduces the complexity of integrating multiple models by standardizing input and output formats, mapping provider exceptions to common types, and providing load balancing, so developers can work with many AI models through one code path.

Key Features

1. Unified API Interface

LiteLLM exposes an OpenAI-compatible API format, so developers can call models from different providers with the same code structure.
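As a minimal sketch of what this looks like in practice, the helper below sends a prompt through LiteLLM's `completion` function. The model names in the comments are illustrative assumptions, provider API keys are expected in environment variables, and the `litellm` import is deferred so the message-building helper can be tried without the package installed.

```python
def build_messages(prompt):
    """Build an OpenAI-style message list for a single user prompt."""
    return [{"role": "user", "content": prompt}]


def ask(model, prompt):
    """Send one prompt to any supported model and return the reply text."""
    # Deferred import: requires `pip install litellm` and the provider's
    # API key in the environment (e.g. OPENAI_API_KEY, ANTHROPIC_API_KEY).
    from litellm import completion

    response = completion(model=model, messages=build_messages(prompt))
    return response.choices[0].message.content


# Example usage (model names are assumptions; use ones your account can access):
#   ask("gpt-3.5-turbo", "Say hello in one sentence.")
#   ask("claude-3-haiku-20240307", "Say hello in one sentence.")
```

Only the `model` string changes between providers; the message format and the way the reply is read stay the same.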

2. Support for Multiple Models and Providers

LiteLLM supports calling over 100 different LLMs, including but not limited to:

- OpenAI (GPT-3.5, GPT-4)
- Azure OpenAI
- Anthropic (Claude series)
- Cohere
- Hugging Face (LLaMA, StableLM, Starcoder, etc.)
- AWS Bedrock, VertexAI, Replicate, Groq, and more

3. Load Balancing and Failover

LiteLLM has built-in retry and fallback mechanisms: when a service from one provider is unavailable, requests can switch automatically to another available deployment, which improves the system’s reliability.
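The retry-then-fallback idea can be sketched in a few lines of plain Python. This is not LiteLLM's internal implementation; `complete_with_fallback` and its parameters are hypothetical names used only for illustration, and `call` stands in for any function shaped like `litellm.completion`.

```python
def complete_with_fallback(call, models, messages, retries_per_model=2):
    """Try each model in order, retrying before moving on to the next one.

    `call` is any function invoked as call(model=..., messages=...).
    """
    errors = []
    for model in models:
        for attempt in range(retries_per_model):
            try:
                return call(model=model, messages=messages)
            except Exception as exc:  # a real system would catch narrower types
                errors.append((model, attempt, repr(exc)))
    # Every model failed on every attempt: surface the collected errors.
    raise RuntimeError(f"all models failed: {errors}")
```

The order of `models` expresses priority: the first entry is the preferred deployment and later entries are only used once the earlier ones have exhausted their retries.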

4. Cost Control and Rate Limiting

LiteLLM allows developers to set budgets and rate limits for each project, API key, or model, helping manage resources effectively and control costs.

5. Asynchronous Calls and Streaming Responses

LiteLLM supports asynchronous calls and streaming responses, making it suitable for applications that require real-time feedback, such as chatbots and live translation services.
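A hedged sketch of both modes follows. `completion(..., stream=True)` and `acompletion` are LiteLLM functions; `collect_stream` and `fake_chunk` are hypothetical helpers added here so the chunk handling can be tried offline, and the `litellm` imports are deferred for the same reason.

```python
from types import SimpleNamespace


def collect_stream(chunks):
    """Join the text deltas of OpenAI-style streaming chunks into one string."""
    parts = []
    for chunk in chunks:
        text = chunk.choices[0].delta.content
        if text:  # some chunks (typically the last) carry no text
            parts.append(text)
    return "".join(parts)


def fake_chunk(text):
    """Stand-in chunk with the same attribute layout, for offline experiments."""
    delta = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


def stream_reply(model, prompt):
    """Stream a reply and return the full text (needs litellm and an API key)."""
    from litellm import completion  # deferred import

    chunks = completion(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        stream=True,
    )
    return collect_stream(chunks)


async def ask_async(model, prompt):
    """Non-blocking variant built on litellm.acompletion."""
    from litellm import acompletion  # deferred import

    response = await acompletion(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content
```

In a chatbot, the loop inside `collect_stream` is where each piece of text would be pushed to the user interface as it arrives instead of being buffered.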

6. Logging and Observability

LiteLLM offers predefined callback functions to send data to various logging tools (e.g., Sentry, Posthog, Helicone) for easy logging and performance monitoring.
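Enabling these integrations is typically a matter of assigning callback names on the `litellm` module, roughly as sketched below. Which tool receives success versus failure events is an arbitrary choice made for this example, and each integration expects its own credentials in environment variables as described in the LiteLLM documentation.

```python
# Tool names as they appear in the text above, split by event type.
SUCCESS_TOOLS = ["posthog", "helicone"]
FAILURE_TOOLS = ["sentry"]


def enable_logging():
    """Route success and failure events to hosted logging tools."""
    import litellm  # deferred import; requires `pip install litellm`

    litellm.success_callback = list(SUCCESS_TOOLS)
    litellm.failure_callback = list(FAILURE_TOOLS)
```

Calling `enable_logging()` once at startup is enough; later `completion` calls report to the configured tools without further code changes.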

Technical Principles

1. Standardized Input and Output

LiteLLM accepts inputs in the OpenAI format, translates them into each provider's native request format, and returns responses in a consistent structure in which the generated text is always available at ['choices'][0]['message']['content'], simplifying the developer workflow.
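To make the output shape concrete, here is a small sketch that reads the text from an OpenAI-format response. The `sample` dictionary is hand-written for illustration, not captured from a real call, and `extract_text` is a hypothetical helper name.

```python
def extract_text(response):
    """Return the generated text from an OpenAI-format response mapping."""
    return response["choices"][0]["message"]["content"]


# Hand-written example of the response layout (not real model output).
sample = {
    "model": "example-model",
    "choices": [
        {
            "index": 0,
            "finish_reason": "stop",
            "message": {"role": "assistant", "content": "Hello!"},
        }
    ],
}

print(extract_text(sample))
```

Because every provider's reply is delivered in this layout, downstream code needs only one extraction path.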

2. Exception Handling

LiteLLM maps exceptions from different providers onto a common set of OpenAI-style exception types, so developers can handle errors consistently regardless of which provider raised them.
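The mapping idea can be illustrated with a short, self-contained sketch. The classes and the status-code table below are hypothetical and are not LiteLLM's own code; they only show how provider-specific failures can be folded into a small shared hierarchy.

```python
class LLMError(Exception):
    """Base class for the unified error types in this sketch."""


class AuthenticationError(LLMError):
    """The provider rejected the credentials."""


class RateLimitError(LLMError):
    """The provider is throttling requests."""


class ServiceUnavailableError(LLMError):
    """The provider is temporarily down."""


# Assumed mapping from HTTP status codes to unified error types.
_STATUS_TO_ERROR = {
    401: AuthenticationError,
    429: RateLimitError,
    503: ServiceUnavailableError,
}


def map_provider_error(provider, status_code, message):
    """Translate a provider failure into one of the unified error types."""
    error_cls = _STATUS_TO_ERROR.get(status_code, LLMError)
    return error_cls(f"[{provider}] {message}")
```

With a shared hierarchy, calling code can write a single `except RateLimitError:` branch to back off and retry, whichever provider was throttling.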

3. Routing and Load Balancing

LiteLLM’s built-in router distributes requests across multiple deployments (e.g., Azure and OpenAI) and is reported to handle more than 1,000 requests per second, which helps maintain stability in high-concurrency scenarios.
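A router setup might look roughly like the sketch below, which registers an Azure deployment and an OpenAI deployment under one alias. The deployment name, endpoint URL, and API version are placeholders, `build_model_list` and `route` are hypothetical helper names, and the `Router` import is deferred so the configuration can be inspected without the package installed.

```python
def build_model_list(azure_key, openai_key):
    """Two deployments that share one alias, so the router can balance them."""
    return [
        {
            "model_name": "gpt-3.5-turbo",  # alias that callers request
            "litellm_params": {
                "model": "azure/my-gpt35-deployment",  # placeholder name
                "api_key": azure_key,
                "api_base": "https://example-resource.openai.azure.com",
                "api_version": "2023-07-01-preview",  # placeholder version
            },
        },
        {
            "model_name": "gpt-3.5-turbo",
            "litellm_params": {"model": "gpt-3.5-turbo", "api_key": openai_key},
        },
    ]


def route(prompt, model_list):
    """Send a prompt through the router (needs litellm and valid keys)."""
    from litellm import Router  # deferred import

    router = Router(model_list=model_list)
    response = router.completion(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content
```

Callers only ever name the alias; which underlying deployment serves a given request is the router's decision.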

4. Plugin and Extension Support

LiteLLM supports custom plugins, allowing developers to extend functionality as needed, such as adding support for new models or custom logging.
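For custom logging, one lightweight extension point is a plain function registered as a success callback. The sketch below assumes the four-argument callback signature described in the LiteLLM documentation and assumes the timestamps are `datetime` objects; check both against the version you use. `record_usage`, `logged`, and `register` are hypothetical names.

```python
from datetime import datetime

logged = []  # in-memory store; a real extension would write to a log sink


def record_usage(kwargs, completion_response, start_time: datetime, end_time: datetime):
    """Custom success callback: keep the model name and call duration."""
    logged.append(
        {
            "model": kwargs.get("model"),
            "seconds": (end_time - start_time).total_seconds(),
        }
    )


def register():
    """Attach the callback (requires `pip install litellm`)."""
    import litellm  # deferred import

    litellm.success_callback = [record_usage]
```

Because the callback is an ordinary function, it can be exercised in isolation with fabricated arguments before it is attached to live traffic.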

Project Links

- GitHub Repository: https://github.com/BerriAI/litellm
- Official Documentation: https://docs.litellm.ai/docs/
- Official Website: https://www.litellm.ai/

Use Cases

1. Multi-Model Integration Platform

LiteLLM is ideal for platforms that need to integrate multiple LLMs, such as AI chatbots and intelligent question-answering systems, simplifying multi-model management.

2. Education and Research

LiteLLM provides educational institutions and researchers with a unified interface for comparing and studying multiple models, making it a valuable tool for teaching and research demonstrations.

3. Enterprise Applications

Enterprises can leverage LiteLLM to quickly integrate various LLMs, building intelligent applications like customer service systems and content generation tools, improving operational efficiency.

4. API Gateway and Proxy Service

LiteLLM can serve as an API gateway for LLMs, providing a unified access point for managing and monitoring multiple LLMs.
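From a client's point of view, a gateway like this is an OpenAI-compatible HTTP endpoint. The sketch below prepares such a request using only the standard library; the base URL, port, and key are assumptions about a locally running proxy, and `build_proxy_request` is a hypothetical helper that builds the request without sending it.

```python
import json
import urllib.request


def build_proxy_request(base_url, api_key, model, prompt):
    """Prepare (but do not send) a chat request for an OpenAI-compatible gateway."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# To send it against a running gateway (address is an assumption):
#   request = build_proxy_request("http://localhost:4000", "sk-...", "gpt-3.5-turbo", "Hi")
#   with urllib.request.urlopen(request) as reply:
#       print(json.load(reply)["choices"][0]["message"]["content"])
```

Since every application talks to the same endpoint, provider keys, model routing, and monitoring can be managed in one place rather than in each client.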
