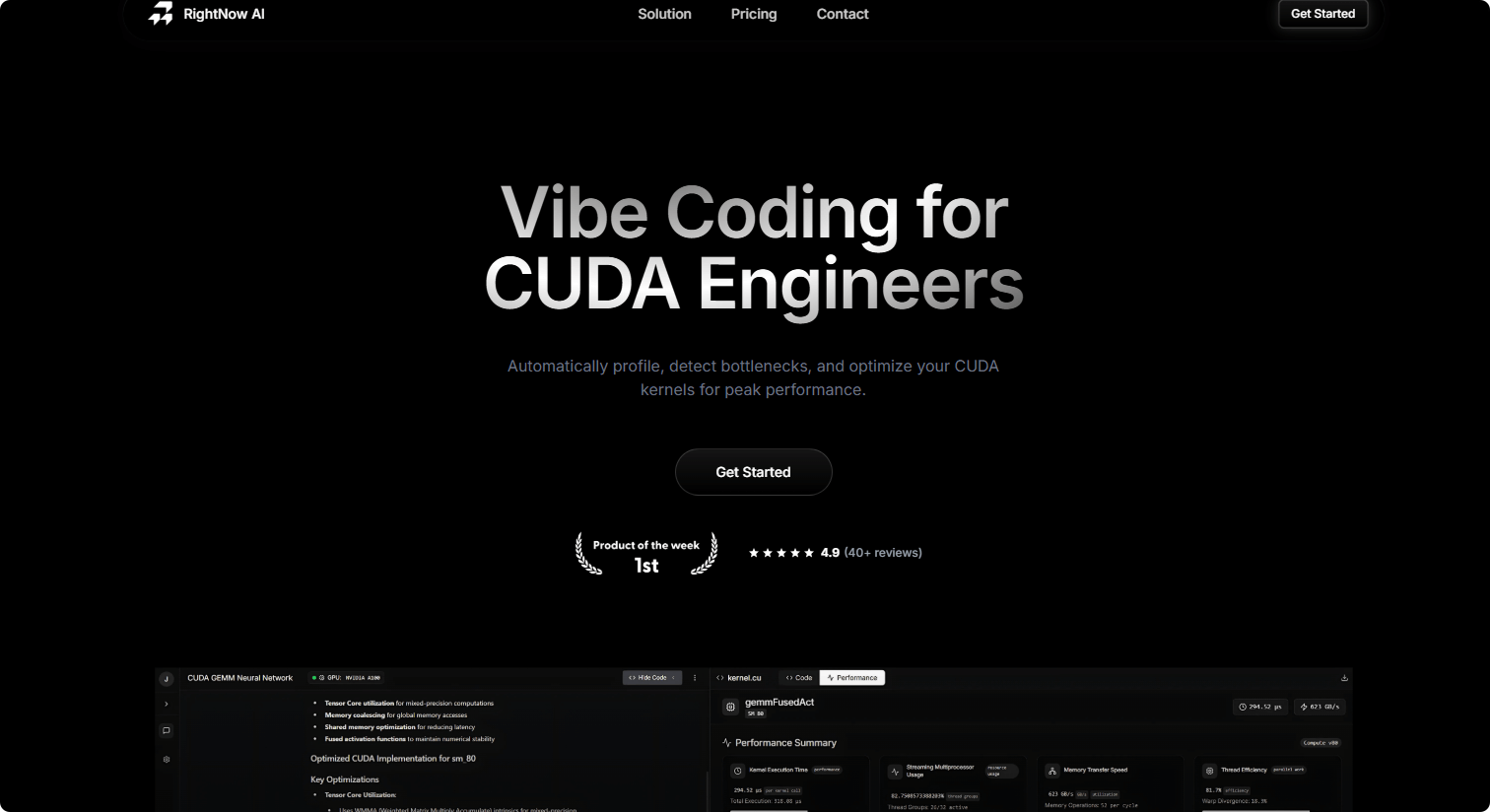

RightNow AI: An AI-Driven CUDA Kernel Optimization Platform for Boosting GPU Performance

What is RightNow AI?

RightNow AI is a browser-based, AI-driven CUDA kernel optimization platform. Users can describe their optimization goals, and RightNow AI automatically analyzes the code, identifies performance bottlenecks, and generates efficient CUDA kernel code, helping developers improve GPU performance without manual tuning.

Main Features

1. AI-Driven CUDA Kernel Generation

Users describe their optimization goals in natural language, and RightNow AI generates high-performance CUDA kernel code, with claimed speedups of 2 to 4 times.

2. Real-Time Performance Bottleneck Analysis

RightNow AI offers serverless GPU profiling, so code can be analyzed without setting up a dedicated GPU server. It automatically detects performance bottlenecks in the code, helping developers locate issues quickly.
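
Bottleneck detection of this kind is commonly framed with the roofline model. A kernel's arithmetic intensity is its FLOPs divided by the bytes it moves to and from device memory. If that intensity falls below the GPU's ridge point (peak FLOP/s divided by peak memory bandwidth), the kernel is memory-bound; otherwise it is compute-bound. The sketch below illustrates that classification only. It is not RightNow AI's implementation. `KernelStats`, `classify_kernel`, and `attainable_flops` are hypothetical helpers, and the GPU figures are placeholders rather than the specs of any real card.

```python
from dataclasses import dataclass


@dataclass
class KernelStats:
    """Measured (or estimated) totals for one kernel launch (hypothetical type)."""
    flops: float       # floating-point operations executed
    dram_bytes: float  # bytes read from plus written to device memory


def arithmetic_intensity(stats: KernelStats) -> float:
    """FLOPs performed per byte of device-memory traffic."""
    return stats.flops / stats.dram_bytes


def classify_kernel(stats: KernelStats, peak_flops: float, peak_bw: float) -> str:
    """Roofline classification (hypothetical helper).

    peak_flops is in FLOP/s and peak_bw in bytes/s for the target GPU.
    """
    ridge_point = peak_flops / peak_bw  # intensity at which the two roofs meet
    if arithmetic_intensity(stats) < ridge_point:
        return "memory-bound"
    return "compute-bound"


def attainable_flops(stats: KernelStats, peak_flops: float, peak_bw: float) -> float:
    """Roofline ceiling: min(compute roof, bandwidth * intensity)."""
    return min(peak_flops, peak_bw * arithmetic_intensity(stats))


# Placeholder GPU: 20 TFLOP/s and 1 TB/s (illustrative numbers only).
PEAK_FLOPS, PEAK_BW = 20e12, 1e12

# Vector add on n float32 values: 1 FLOP per element, 2 reads + 1 write of 4 bytes.
n = 1 << 24
vec_add = KernelStats(flops=n, dram_bytes=3 * 4 * n)
print(classify_kernel(vec_add, PEAK_FLOPS, PEAK_BW))  # memory-bound

# Square float32 matmul, assuming each matrix crosses DRAM exactly once.
m = 4096
gemm = KernelStats(flops=2 * m**3, dram_bytes=3 * 4 * m**2)
print(classify_kernel(gemm, PEAK_FLOPS, PEAK_BW))  # compute-bound
```

A memory-bound result suggests optimizations that reduce or coalesce memory traffic. A compute-bound result points instead at instruction throughput, for example by using Tensor Cores.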

3. Support for Multiple NVIDIA GPU Architectures

RightNow AI supports a variety of NVIDIA GPU architectures, including Ampere, Hopper, Ada Lovelace, and Blackwell, meeting optimization needs across different hardware environments.
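
On a given machine, the architecture generation can be identified from the GPU's CUDA compute capability. One source is the `(major, minor)` tuple returned by PyTorch's `torch.cuda.get_device_capability()`. The same pair also appears in `nvcc` target names such as `sm_89`. The mapping below is a minimal sketch that covers only the four architectures named above. `architecture_for` and `sm_target` are hypothetical helpers. The table is deliberately not exhaustive, so unlisted capabilities return `None` rather than a guess.

```python
from typing import Optional

# Compute capability -> architecture, limited to the generations named above.
# Not exhaustive: other capabilities (including further Blackwell variants) are omitted.
_ARCH_BY_CC = {
    (8, 0): "Ampere",        # e.g. A100
    (8, 6): "Ampere",        # e.g. GeForce RTX 30 series
    (8, 7): "Ampere",        # Jetson AGX Orin
    (8, 9): "Ada Lovelace",  # e.g. GeForce RTX 40 series, L4
    (9, 0): "Hopper",        # e.g. H100
    (10, 0): "Blackwell",    # e.g. B200
    (12, 0): "Blackwell",    # e.g. GeForce RTX 50 series
}


def architecture_for(major: int, minor: int) -> Optional[str]:
    """Hypothetical helper: architecture name, or None if the pair is not listed."""
    return _ARCH_BY_CC.get((major, minor))


def sm_target(major: int, minor: int) -> str:
    """Hypothetical helper: the nvcc-style target name, e.g. (8, 9) -> 'sm_89'."""
    return f"sm_{major}{minor}"


print(architecture_for(9, 0), sm_target(9, 0))  # Hopper sm_90
```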

4. PyTorch Model Optimization Support

RightNow AI can also optimize PyTorch models, with claimed speedups of up to 10 times across all supported NVIDIA architectures.
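
Speedups for individual kernels and for whole models are related but not identical. The end-to-end gain depends on how much of the model's runtime the optimized kernels account for. The sketch below applies Amdahl's law in its per-component form: the new total time is the sum of each component's baseline time divided by its speedup. `end_to_end_speedup` and the example timings are hypothetical and are not measurements of RightNow AI.

```python
def end_to_end_speedup(times_ms: dict[str, float], speedups: dict[str, float]) -> float:
    """Amdahl's law, per component (hypothetical helper).

    times_ms: baseline time spent in each kernel or other component.
    speedups: speedup factor per component; unlisted components keep a factor of 1.0.
    """
    baseline = sum(times_ms.values())
    optimized = sum(t / speedups.get(name, 1.0) for name, t in times_ms.items())
    return baseline / optimized


# Hypothetical profile of one forward pass (milliseconds).
times = {"attention": 40.0, "matmul": 40.0, "other": 20.0}
print(end_to_end_speedup(times, {"attention": 4.0, "matmul": 2.0}))  # 2.0
```

In this example, a 4x and a 2x kernel speedup yield only 2x overall. The untouched 20 ms also caps the end-to-end gain at 5x, however fast the other kernels become.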

5. Simplified Optimization Process

Unlike traditional optimization tools, which are often complex to use, RightNow AI provides an all-in-one CUDA optimization solution that streamlines the developer’s workflow.

Technical Principles

RightNow AI combines natural language processing (NLP) with deep learning models to interpret users’ optimization goals and generate CUDA kernel code. It also profiles the code in real time to identify performance bottlenecks, then offers targeted optimization recommendations that help developers improve GPU performance.

Project Address

- Official website: https://www.rightnowai.co/

Application Scenarios

- High-Performance Computing (HPC): Optimizing CUDA kernels to improve computational efficiency in scientific computing and engineering simulations.
- Deep Learning: Optimizing CUDA kernels to enhance performance during model training and inference.
- Image Processing: Optimizing CUDA kernels to accelerate processing speed in image and video tasks.
- Data Analysis: Enhancing CUDA kernels to improve efficiency in large-scale data processing and analysis.
