Xbench – Sequoia China Launches a Brand-New AI Benchmarking Tool

What is xbench?

xbench is a new AI benchmarking tool launched by Sequoia China. Built on a dual-track evaluation framework, it provides multi-dimensional datasets that assess both the theoretical capability ceiling of AI models and the practical value of AI agents in real-world applications. Through an evergreen evaluation mechanism, xbench regularly updates its test content so that results stay timely and relevant. The initial release includes two core benchmark sets: the Science Question Answering Benchmark (xbench-ScienceQA) and the Chinese Web Deep Search Benchmark (xbench-DeepSearch). xbench aims to provide a scientific, sustainable evaluation guide that drives technological breakthroughs and product iteration in AI and, ultimately, improves the real-world utility of AI systems.

Main Features of xbench

-

Dual-Track Evaluation: Simultaneously evaluates the theoretical limits and technical boundaries of AI systems, as well as their real-world utility and effectiveness.

-

Evergreen Evaluation Mechanism: Dynamically updates test content to maintain relevance and prevent overfitting from test leakage, enabling continuous tracking of model evolution and capturing key breakthroughs in agent development.

-

Core Benchmark Sets:

-

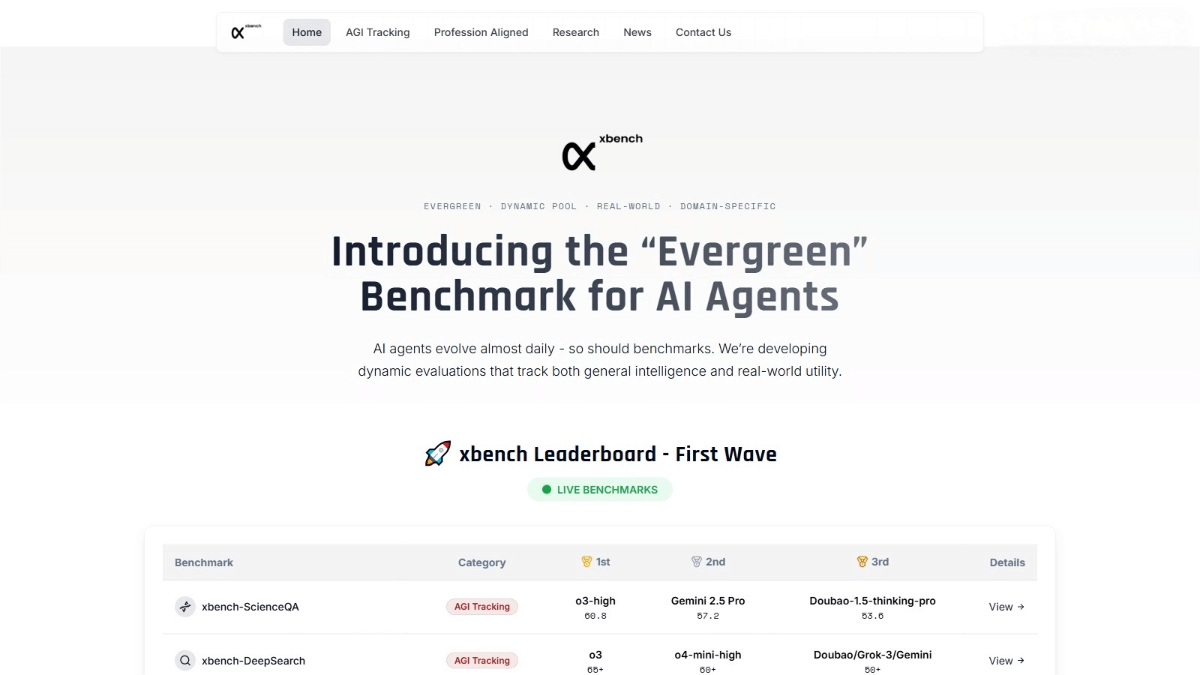

xbench-ScienceQA: Assesses subject knowledge and reasoning capabilities.

-

xbench-DeepSearch: Evaluates deep web search abilities.

Test questions are updated monthly or quarterly.

-

-

Vertical Domain Agent Evaluation: Builds tasks, execution environments, and validation methods aligned with expert behavior in fields such as recruitment and marketing, annotating tasks with economic value and predefined tech-market fit goals.

-

Real-Time Updates & Leaderboard: Continuously updates evaluation results and displays the performance of various agent products across different benchmark sets, serving as a reference for developers and researchers.
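The evergreen mechanism and leaderboard described above can be illustrated with a small sketch. This is hypothetical and is not xbench's implementation: it assumes each question carries a release-period tag (such as `"2025-05"`), that only the current period's questions are scored so older and possibly leaked items drop out, and that answers are graded by case-insensitive exact match. `Question`, `current_pool`, and `leaderboard` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    qid: str
    period: str   # hypothetical release tag, e.g. "2025-05"
    answer: str


def current_pool(questions, period):
    """Keep only questions released for `period`; stale items are excluded from scoring."""
    return [q for q in questions if q.period == period]


def leaderboard(questions, predictions, period):
    """Rank agents by exact-match accuracy on the current period's pool.

    `predictions` maps agent name -> {question id -> predicted answer}.
    Exact-match grading is an assumption made for this sketch.
    """
    pool = current_pool(questions, period)
    if not pool:
        raise ValueError(f"no questions for period {period!r}")
    scores = {}
    for agent, preds in predictions.items():
        correct = sum(
            1
            for q in pool
            if preds.get(q.qid, "").strip().lower() == q.answer.strip().lower()
        )
        scores[agent] = correct / len(pool)
    # Highest accuracy first; ties broken by agent name for a stable order.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))


if __name__ == "__main__":
    questions = [
        Question("q1", "2025-05", "Paris"),
        Question("q2", "2025-05", "4"),
        Question("q0", "2025-04", "old"),  # retired: previous period
    ]
    predictions = {
        "agent-a": {"q1": "paris", "q2": "5", "q0": "old"},
        "agent-b": {"q1": "Paris", "q2": "4"},
    }
    print(leaderboard(questions, predictions, "2025-05"))
    # [('agent-b', 1.0), ('agent-a', 0.5)]
```

Scoring only the current pool is what keeps a memorized old item (here `q0`) from inflating a result; a production benchmark would need far more careful answer normalization than lowercase exact match.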

xbench Official Website

- Website: xbench.org

Application Scenarios of xbench

- Model Capability Assessment: Helps developers of foundation models and agents evaluate the theoretical limits and capability boundaries of their products, revealing where the frontier of intelligence lies and guiding technical iteration.

- Quantification of Real-World Utility: Measures the practical value of AI systems in real-world scenarios such as marketing and recruitment, helping enterprises assess the commercial potential of AI tools.

- Product Iteration Guidance: Tracks key breakthroughs in agent development, providing real-time feedback and strategic direction for continuous optimization.

- Establishment of Industry Standards: Works with industry experts to build dynamic, sector-specific benchmark sets, promoting AI adoption in vertical domains and setting evaluation standards for different industries.

- Tech-Market Fit Analysis: Analyzes the cost-effectiveness of agents, forecasts when they will reach technology-market fit, and offers forward-looking guidance to developers and the market to accelerate AI commercialization.
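The cost-effectiveness side of tech-market fit analysis can be sketched as a Pareto frontier over per-task cost and benchmark score. This is an illustrative assumption, not a method documented by xbench: `AgentResult`, the `cost_per_task` field, and treating "the cheapest agent that reaches a fixed score threshold" as a fit point are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentResult:
    name: str
    score: float          # benchmark score in [0, 1]
    cost_per_task: float  # hypothetical cost in USD per task


def pareto_frontier(results):
    """Return agents not dominated on (lower cost, higher score), sorted by cost."""
    # Cheapest first; for equal cost, the higher score comes first.
    ordered = sorted(results, key=lambda r: (r.cost_per_task, -r.score))
    frontier = []
    best_score = float("-inf")
    for r in ordered:
        # Keep an agent only if it beats every cheaper (or equally cheap) agent.
        if r.score > best_score:
            frontier.append(r)
            best_score = r.score
    return frontier


def cheapest_meeting(results, min_score):
    """Hypothetical 'fit point': cheapest agent with score >= min_score, or None."""
    candidates = [r for r in results if r.score >= min_score]
    return min(candidates, key=lambda r: r.cost_per_task, default=None)


if __name__ == "__main__":
    results = [
        AgentResult("a", 0.60, 0.10),
        AgentResult("b", 0.55, 0.20),  # dominated by "a": costs more, scores less
        AgentResult("c", 0.80, 0.50),
        AgentResult("d", 0.75, 0.60),  # dominated by "c"
    ]
    print([r.name for r in pareto_frontier(results)])  # ['a', 'c']
    print(cheapest_meeting(results, 0.7).name)         # c
```

Only agents on the frontier are worth considering on cost-effectiveness grounds; re-running the threshold check as new benchmark results arrive is one simple way to watch for the moment an agent first becomes both good enough and cheap enough.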
