What is Gemma 3n?

Gemma 3n is an on-device multimodal AI model introduced at Google I/O 2025. Built on the Gemini Nano architecture, it uses Per-Layer Embeddings (PLE) to reduce memory usage: the two model variants have 5B and 8B parameters, but their memory footprint is comparable to that of 2B and 4B models.

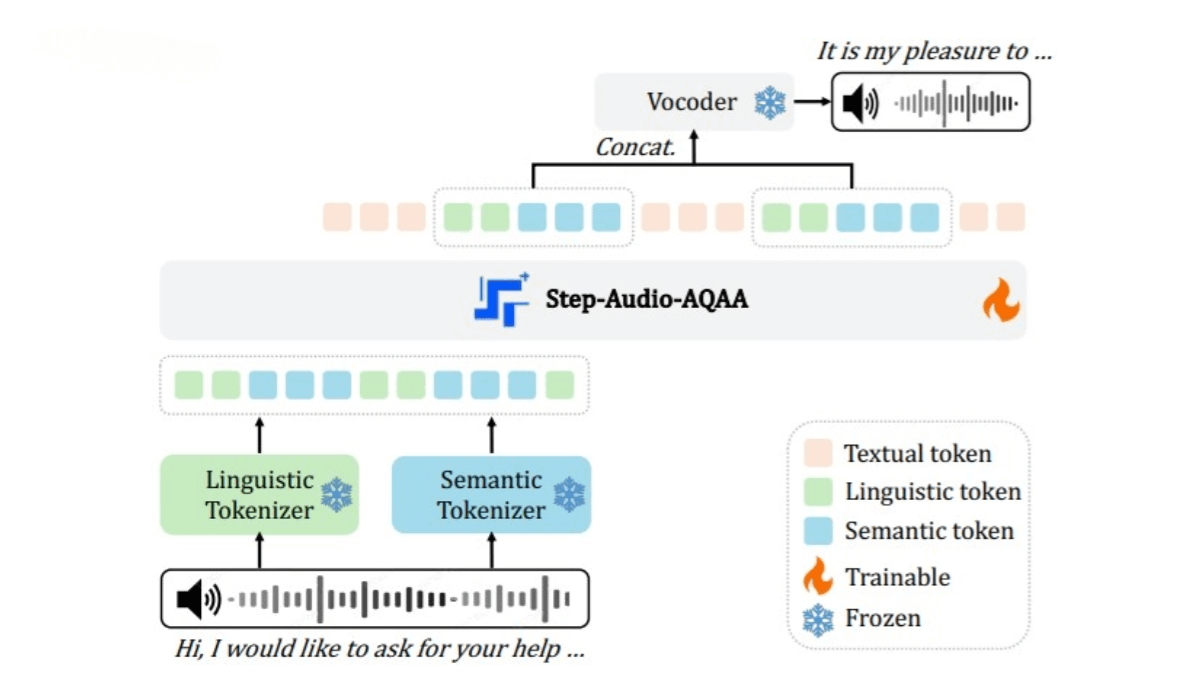

Gemma 3n supports input from text, images, short videos, and audio, and can generate structured text output. With newly added audio processing capabilities, it can transcribe speech in real time, recognize background sounds, and analyze audio sentiment. It is accessible directly in the browser via Google AI Studio.

Key Features of Gemma 3n

-

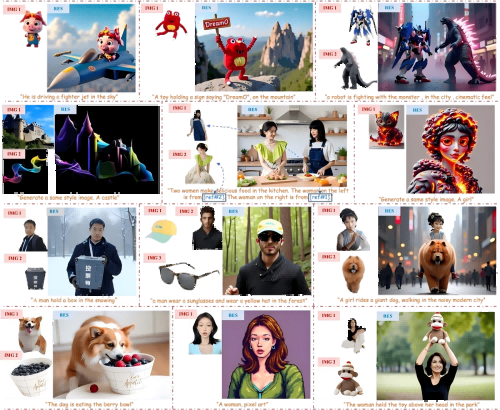

Multimodal Input: Supports input from text, images, short videos, and audio, and generates structured text output. For example, users can upload a photo and ask “What plant is this?”, or issue a voice command to analyze short video content.

- Audio Understanding: New audio processing capabilities allow for real-time speech transcription, background sound recognition, and sentiment analysis, which suits voice assistants and accessibility applications.

- On-Device Execution: All inference runs locally with no cloud connection required. Response times are reported to be as low as 50 milliseconds, and because data stays on the device, privacy is protected.

- Efficient Fine-Tuning: Supports quick fine-tuning on Google Colab. Developers can customize the model for specific tasks with just a few hours of training.

- Long Context Support: Gemma 3n supports context lengths of up to 32K tokens.
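The plant-photo example above could be packaged as a chat-style request. The sketch below is only an illustration: the helper `build_multimodal_message` is hypothetical, and the `role`/`content`/`type` field names follow a message layout common in chat-template-based libraries, which is an assumption rather than a documented Gemma 3n interface.

```python
from typing import Any, Dict, List, Optional


def build_multimodal_message(
    question: str,
    image_path: Optional[str] = None,
    audio_path: Optional[str] = None,
) -> List[Dict[str, Any]]:
    """Build a single-turn user message that mixes media parts with a text question.

    The field names ("role", "content", "type", "path", "text") are assumed
    for illustration; check the runtime you use for its exact schema.
    """
    content: List[Dict[str, str]] = []
    if image_path is not None:
        content.append({"type": "image", "path": image_path})
    if audio_path is not None:
        content.append({"type": "audio", "path": audio_path})
    # The text question goes last so it follows the media it refers to.
    content.append({"type": "text", "text": question})
    return [{"role": "user", "content": content}]


# "plant.jpg" is a placeholder file name.
messages = build_multimodal_message("What plant is this?", image_path="plant.jpg")
print(messages)
```

Keeping each modality as its own typed part makes it straightforward to add or drop an input (for example, a voice command instead of typed text) without changing the rest of the request.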

Technical Principles Behind Gemma 3n

- Based on Gemini Nano Architecture: Gemma 3n inherits the lightweight architecture of Gemini Nano, optimized for mobile devices. Through knowledge distillation and Quantization-Aware Training (QAT), it maintains high performance while significantly reducing resource demands.

- Per-Layer Embeddings (PLE): This technique greatly reduces memory requirements. Although the models have 5B and 8B parameters, their memory usage is comparable to that of 2B and 4B models, so they run with a dynamic memory footprint of just 2GB and 3GB respectively.

- Multimodal Fusion: Incorporates the tokenizer from Gemini 2.0 and an enhanced data mixture, enabling text and visual processing in over 140 languages to serve users worldwide.

- Local/Global Layer Interleaving Design: Interleaves local and global layers in a 5:1 ratio, so that every five local layers are followed by one global layer, and the stack begins with a local layer. Because only the global layers attend over the full context, this design helps limit key-value (KV) cache growth when handling long contexts.
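The 5:1 interleaving described above can be sketched in a few lines to show why it keeps the KV cache small. This is a rough illustration, not the model's actual implementation: the layer count, the prompt length, and the local window size below are all hypothetical values chosen for the arithmetic, and the cache is measured simply as the number of cached token positions summed over layers.

```python
from typing import List


def layer_pattern(num_layers: int, local_per_global: int = 5) -> List[str]:
    """Return layer types: `local_per_global` local layers, then one global layer, repeated.

    The stack starts with a local layer, as described in the text.
    """
    period = local_per_global + 1
    return ["global" if (i + 1) % period == 0 else "local" for i in range(num_layers)]


def kv_cache_positions(pattern: List[str], context_len: int, window: int) -> int:
    """Count cached token positions summed over all layers.

    A global layer caches every position in the context; a local layer
    caches at most `window` positions (a sliding window).
    """
    return sum(
        context_len if kind == "global" else min(context_len, window)
        for kind in pattern
    )


NUM_LAYERS = 12       # hypothetical layer count
CONTEXT_LEN = 32_000  # hypothetical prompt length in tokens
WINDOW = 1_024        # hypothetical local window size

pattern = layer_pattern(NUM_LAYERS)
interleaved = kv_cache_positions(pattern, CONTEXT_LEN, WINDOW)
all_global = kv_cache_positions(["global"] * NUM_LAYERS, CONTEXT_LEN, WINDOW)

print(pattern)
print(f"interleaved: {interleaved} positions, all-global: {all_global} positions")
print(f"ratio: {interleaved / all_global:.3f}")
```

Under these assumed numbers, the interleaved stack caches roughly a fifth of what an all-global stack would, and the saving grows as the context gets longer because the local layers' cost stays capped at the window size.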

Project Link

- Official Project Website: https://deepmind.google/models/gemma/gemma-3n/

Application Scenarios for Gemma 3n

- Speech Transcription & Sentiment Analysis: Capable of real-time transcription, background sound recognition, and audio sentiment analysis, which suits voice assistants and accessibility tools.

- Content Generation: Enables on-device generation of image captions, video summaries, or speech transcripts, suitable for content creators editing short videos or social media posts.

- Academic Task Customization: Developers can fine-tune Gemma 3n on Colab for academic purposes, such as analyzing experimental images or transcribing lecture audio.

- Low-Resource Devices: Designed for low-resource environments, requiring only 2GB of RAM to run smoothly on mobile phones, tablets, and laptops.
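As a back-of-the-envelope check on the memory figures quoted in this article, weight storage can be estimated as parameter count times bits per parameter. The sketch below is only that estimate: it ignores activations, the KV cache, and runtime overhead, and the bit widths are assumptions, since the article does not state which precision the 2GB and 3GB figures correspond to.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed for the weights alone, in GB (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# (label, total parameters, effective parameter count quoted in the article)
variants = [("smaller variant", 5e9, 2e9), ("larger variant", 8e9, 4e9)]

for label, total, effective in variants:
    for bits in (16, 8, 4):  # assumed precisions, for comparison only
        full = weight_memory_gb(total, bits)
        reduced = weight_memory_gb(effective, bits)
        print(f"{label} @ {bits}-bit: all weights {full:.1f} GB, "
              f"effective footprint {reduced:.1f} GB")
```

The gap between the two columns shows what keeping only the effective parameters in fast memory would save at each precision.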
