BAGEL – ByteDance’s Open-Source Multimodal Foundation Model

What is BAGEL?

BAGEL is an open-source multimodal foundation model developed by ByteDance. It has 14 billion parameters, with 7 billion active parameters. Utilizing a Mixture-of-Transformer-Experts (MoT) architecture, it employs two separate encoders to capture pixel-level and semantic-level features of images.

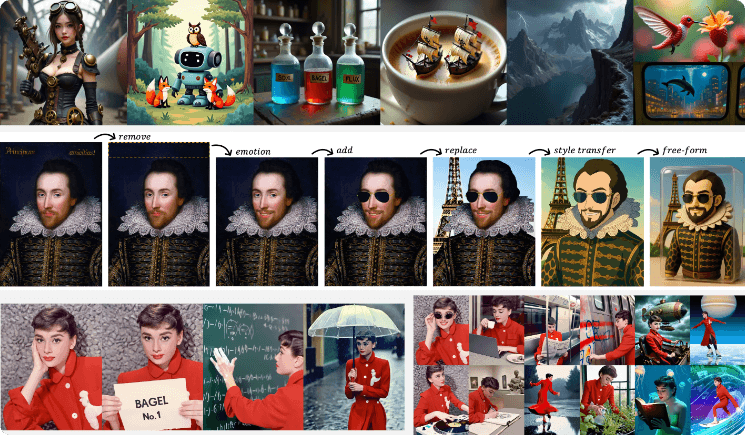

BAGEL is trained under the “next token group prediction” paradigm using massive amounts of labeled multimodal data—including language, images, video, and web content. In terms of performance, BAGEL outperforms top open-source vision-language models such as Qwen2.5-VL and InternVL-2.5 in multimodal understanding benchmarks. It delivers text-to-image generation quality on par with SD3 and surpasses many open-source models in image editing tasks.

BAGEL supports free-form image editing, future frame prediction, 3D manipulation, and world navigation tasks.

Key Features of BAGEL

-

Image-Text Fusion Understanding: BAGEL excels at understanding the relationship between images and text, accurately integrating image content with textual descriptions.

-

Video Content Understanding: It can process video data to understand dynamic and semantic information, identifying key aspects and enabling effective analysis.

-

Text-to-Image Generation: Users can input a text description to generate matching high-quality images.

-

Image Editing and Modification: BAGEL supports editing existing images based on instructions, enabling free-form image manipulation.

-

Video Frame Prediction: It can predict future frames in a video sequence, helping restore missing content based on previous frames.

-

3D Scene Understanding and Manipulation: BAGEL can recognize, locate, and manipulate 3D objects—for example, moving or modifying them in virtual environments.

-

World Navigation: Equipped with navigation capabilities, BAGEL can plan and follow paths in both virtual and real-world 3D environments.

-

Cross-Modal Retrieval: BAGEL enables cross-modal retrieval, such as finding relevant images or videos based on a text query, or retrieving textual information based on image content.

-

Multimodal Fusion Tasks: BAGEL can effectively fuse data from different modalities (e.g., image, text, audio), generating comprehensive results for complex tasks.

Technical Architecture of BAGEL

-

Dual Encoder Design: BAGEL adopts a Mixture-of-Transformer-Experts (MoT) architecture with two independent encoders—one for pixel-level features and the other for semantic-level features. This dual design allows the model to capture both low-level details and high-level semantic information.

-

Expert Mixture Mechanism: Within the MoT architecture, each encoder contains multiple “expert” modules—essentially smaller sub-networks specialized for certain tasks or features. During training, the model dynamically selects the most appropriate expert combinations to handle complex multimodal inputs efficiently.

-

Tokenization Processing: BAGEL tokenizes multimodal inputs such as images and text. Images are divided into patches treated as individual tokens, while words or subwords are treated as tokens for text input.

-

Prediction Task: The model is trained to predict the next group of tokens. It observes part of a token sequence and attempts to predict what comes next.

-

Compression and Learning: Through this predictive training, BAGEL learns the internal structure and relationships of multimodal data. Predicting the next tokens compels the model to compress and understand essential information from the input.

-

Massive Data Pretraining: BAGEL is pretrained on trillions of multimodal tokens from language, images, video, and web data, covering a wide range of scenarios and domains to learn diverse features and patterns.

-

Optimization Strategies: The training process incorporates advanced optimization techniques such as mixed-precision training and distributed training, improving both training efficiency and model performance.

Project Resources

-

Official Website: https://bagel-ai.org/

-

GitHub Repository: https://github.com/bytedance-seed/BAGEL

-

HuggingFace Model Hub: https://huggingface.co/ByteDance-Seed/BAGEL-7B-MoT

-

arXiv Paper: https://arxiv.org/pdf/2505.14683

Application Scenarios for BAGEL

-

Content Creation and Editing: Users can generate high-quality images from text descriptions or edit existing images based on prompts.

-

3D Scene Generation: BAGEL can generate 3D scenes, providing rich visual content for VR and AR applications.

-

Visualized Learning: It can present complex concepts through images or video, helping learners grasp difficult topics more effectively.

-

Creative Advertising Generation: Advertisers can use BAGEL to create engaging image and video content—for example, designing creative posters or promotional short videos based on product features.

-

User Interaction Experience: In e-commerce platforms, BAGEL can generate 3D product models and virtual displays to enhance the shopping experience.

Related Posts