Gemini Diffusion: Google DeepMind’s Next-Gen Multimodal AI Model

What is Gemini Diffusion?

Gemini Diffusion is part of Google DeepMind’s suite of generative models and focuses on creating and editing visual content from natural language prompts. It draws on the Gemini family of models to process multiple data modalities, including text, images, and video, so that media can be generated and transformed within a single system for both creative and practical work.

Key Features

- Multimodal Content Generation: Capable of generating and editing images and videos based on textual descriptions.
- Natural Language Control: Allows users to manipulate visual content using detailed natural language prompts.
- Advanced Editing Capabilities: Supports complex edits such as style transfer, object addition/removal, and scene alterations.
- High Fidelity Outputs: Produces high-quality, coherent visual outputs suitable for various applications.

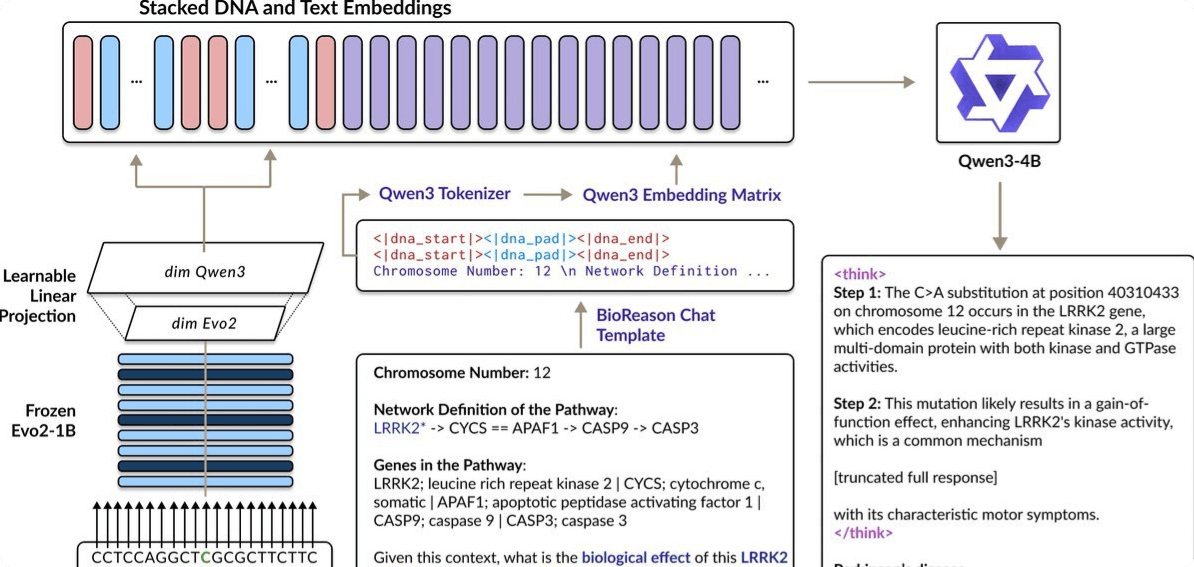

Technical Foundations

Gemini Diffusion builds upon the diffusion model framework: generation starts from random noise and refines it over many denoising steps into a coherent data sample. Integrating this process with the Gemini architecture gives the model a stronger understanding of different data types, which helps keep the generated content accurate and consistent with the context of the prompt.
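To make the idea of "iteratively refining random noise" concrete, here is a minimal sketch of a generic DDPM-style reverse sampling loop. It is not Gemini Diffusion's actual implementation, which is not described in this post. The function names (`make_schedule`, `toy_noise_predictor`, `sample`), the linear noise schedule, and the step count are all assumptions chosen for illustration, and the noise predictor is a hypothetical analytic stand-in for what would normally be a trained neural network.

```python
import math
import random


def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (an assumption): per-step betas and cumulative alpha products."""
    betas = [
        beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        for t in range(num_steps)
    ]
    alpha_bars = []
    prod = 1.0
    for b in betas:
        prod *= 1.0 - b
        alpha_bars.append(prod)
    return betas, alpha_bars


def toy_noise_predictor(x_t, alpha_bar, target):
    """Hypothetical stand-in for a trained network.

    If every training sample equals `target`, the noise contained in x_t
    can be computed exactly, so no learning is needed for this toy case.
    """
    return [
        (x - math.sqrt(alpha_bar) * m) / math.sqrt(1.0 - alpha_bar)
        for x, m in zip(x_t, target)
    ]


def sample(target, num_steps=50, seed=0):
    """Run the reverse process: start from noise, denoise step by step."""
    rng = random.Random(seed)
    betas, alpha_bars = make_schedule(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # pure Gaussian noise
    for t in reversed(range(num_steps)):
        eps_hat = toy_noise_predictor(x, alpha_bars[t], target)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        # Posterior mean: remove the predicted noise, then rescale.
        mean = [
            (xi - coef * e) / math.sqrt(1.0 - betas[t])
            for xi, e in zip(x, eps_hat)
        ]
        # Fresh noise is added at every step except the final one.
        noise_scale = math.sqrt(betas[t]) if t > 0 else 0.0
        x = [m + noise_scale * rng.gauss(0.0, 1.0) for m in mean]
    return x


if __name__ == "__main__":
    pixels = [0.2, 0.8, 0.5]  # a tiny made-up "image" of three pixel values
    print(sample(pixels))
```

Because the toy predictor knows the target exactly, the final denoising step lands on the target values up to floating-point error. In a real system the predictor is a learned network conditioned on the prompt, and the output is a new sample rather than a copy of known data.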

Project Link

👉 https://deepmind.google/models/gemini-diffusion/

Application Scenarios

- Creative Industries: Artists and designers can generate concept art, illustrations, and animations.
- Media Production: Streamlines the creation of visual content for films, games, and advertisements.
- Education: Develops engaging visual materials for learning and presentations.
- Accessibility: Assists in creating descriptive visuals for individuals with visual impairments.
- Research: Facilitates data visualization and simulation in scientific studies.
