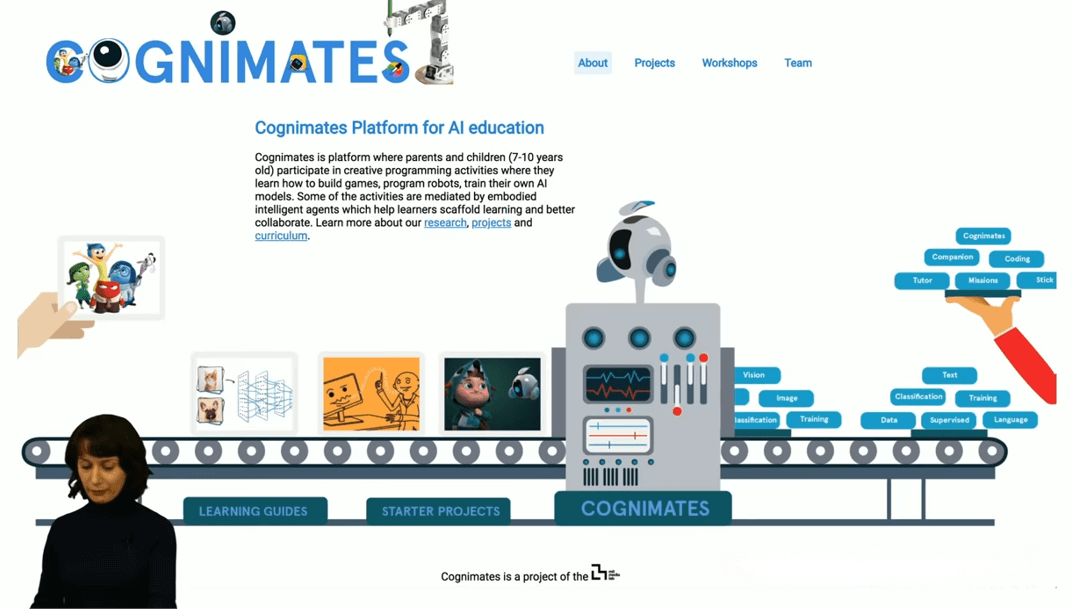

HRAvatar – A monocular video-based 3D avatar generation technology jointly launched by Tsinghua University and IDEA

What is HRAvatar?

HRAvatar is a monocular video-based 3D avatar reconstruction technology jointly developed by Tsinghua University and the IDEA research team. It enables the generation of high-quality, relightable 3D head avatars from standard single-camera video input. HRAvatar utilizes learnable deformation bases and linear blend skinning, and integrates a precise expression encoder to reduce tracking errors and improve reconstruction fidelity. The system decomposes the appearance of the avatar into attributes like albedo, roughness, and Fresnel reflectance, and employs a physically based rendering model for realistic lighting effects.

HRAvatar outperforms existing methods across multiple benchmarks and supports real-time rendering at approximately 155 FPS, offering a cutting-edge solution for digital humans, virtual streamers, AR/VR, and other immersive applications.

Key Features of HRAvatar

- High-Quality Reconstruction: Generates detailed and expressive 3D avatars from standard monocular video.
- Real-Time Performance: Supports rendering speeds of up to ~155 frames per second, making it suitable for real-time applications.
- Relightability: Enables realistic relighting of avatars in real time to match various lighting environments.
- Animation Support: Allows for animating the avatar with expressions and head movements.
- Material Editing: Offers control over material properties such as albedo, roughness, and reflectivity for visual customization.
- Multi-View Rendering: Renders avatars from multiple viewpoints with consistent 3D geometry.

Technical Foundations of HRAvatar

- Accurate Expression Tracking: Uses an end-to-end expression encoder jointly optimized with 3D avatar reconstruction to extract precise expression parameters. This approach reduces dependency on pre-tracking and improves accuracy through supervision with Gaussian reconstruction loss.
- Geometric Deformation Model: Employs learnable linear blendshape bases for each Gaussian point, incorporating shape, expression, and pose bases. Through linear blend skinning, these points are transformed into the posed space, allowing for adaptable geometric deformation.
- Appearance Modeling: Decomposes avatar appearance into albedo, roughness, and Fresnel reflectance. Uses a BRDF-based physical rendering model and a simplified SplitSum approximation for high-quality real-time relighting. Introduces a pseudo-prior for albedo to disentangle material properties and prevent local lighting artifacts from corrupting reflectance estimates.
- Normal Estimation and Material Priors: Estimates normals using the shortest axis of each Gaussian point, supervised by normal maps derived from depth gradients to ensure geometric consistency. Pseudo-ground-truth albedo is extracted using pretrained models, and material attributes (roughness and base reflectance) are constrained within realistic bounds for greater material realism.
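
To make the geometric deformation step more concrete, here is a minimal NumPy sketch of per-point linear blendshapes followed by linear blend skinning. It is an illustration of the general technique, not HRAvatar's actual code: the function name, argument names, and array layouts are assumptions, and the pose basis is left out for brevity.

```python
import numpy as np


def deform_points(canonical, shape_basis, expr_basis,
                  shape_coeffs, expr_coeffs,
                  skin_weights, joint_transforms):
    """Apply per-point blendshape offsets, then linear blend skinning.

    All names and array layouts are hypothetical, chosen for illustration.

    canonical:        (N, 3)    canonical-space point positions
    shape_basis:      (N, 3, S) per-point shape basis
    expr_basis:       (N, 3, E) per-point expression basis
    shape_coeffs:     (S,)      identity coefficients
    expr_coeffs:      (E,)      expression coefficients, e.g. from an encoder
    skin_weights:     (N, J)    blend weights, each row summing to 1
    joint_transforms: (J, 4, 4) rigid joint transforms in homogeneous form
    """
    # Linear blendshapes: each coefficient scales one per-point offset vector.
    offsets = shape_basis @ shape_coeffs + expr_basis @ expr_coeffs  # (N, 3)
    shaped = canonical + offsets

    # Linear blend skinning: blend the joint transforms per point ...
    blended = np.einsum("nj,jab->nab", skin_weights, joint_transforms)  # (N, 4, 4)

    # ... and apply the blended transform to the homogeneous position.
    ones = np.ones((shaped.shape[0], 1))
    homogeneous = np.concatenate([shaped, ones], axis=1)  # (N, 4)
    posed = np.einsum("nab,nb->na", blended, homogeneous)
    return posed[:, :3]


# Toy example: two points, one expression basis vector, two joints.
points = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
shape_basis = np.zeros((2, 3, 1))
expr_basis = np.zeros((2, 3, 1))
expr_basis[0, :, 0] = [0.0, 1.0, 0.0]       # expression moves point 0 along +y
weights = np.array([[1.0, 0.0], [0.0, 1.0]])  # each point follows one joint
transforms = np.stack([np.eye(4), np.eye(4)])
transforms[1, :3, 3] = [0.0, 0.0, 2.0]        # joint 1 translates along +z

posed = deform_points(points, shape_basis, expr_basis,
                      np.zeros(1), np.array([0.5]), weights, transforms)
print(posed)
```

Because the bases are stored per point rather than borrowed from a fixed template mesh, they can be optimized together with the other Gaussian attributes, which is the adaptability the description above refers to.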
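
The appearance model can be illustrated with the standard split-sum form of image-based lighting, in which the specular term is a prefiltered environment color multiplied by a precomputed BRDF scale and bias. The sketch below shows that standard form only; the source describes HRAvatar's variant as "simplified" without giving details, so this is not its exact formulation. All names are hypothetical, and the lighting inputs are assumed to be looked up by the caller (irradiance from the normal, prefiltered radiance from the reflection direction and roughness, scale and bias from the view angle and roughness).

```python
def shade_split_sum(albedo, f0, irradiance, prefiltered_radiance,
                    brdf_scale, brdf_bias):
    """Shade one point from decomposed material attributes (RGB tuples).

    Hypothetical helper for illustration. Roughness does not appear as an
    argument because it is assumed to have been used already when looking up
    prefiltered_radiance, brdf_scale, and brdf_bias.
    """
    # Split-sum specular weight: base reflectance times scale, plus bias.
    specular_weight = f0 * brdf_scale + brdf_bias
    return tuple(
        a * e + p * specular_weight  # diffuse term + specular term
        for a, e, p in zip(albedo, irradiance, prefiltered_radiance)
    )


# Same lighting, two different albedo values: only the diffuse part changes.
lighting = dict(irradiance=(1.0, 1.0, 1.0),
                prefiltered_radiance=(2.0, 2.0, 2.0),
                brdf_scale=0.5, brdf_bias=0.1)
skin = shade_split_sum(albedo=(0.5, 0.5, 0.5), f0=0.04, **lighting)
darker = shade_split_sum(albedo=(0.25, 0.25, 0.25), f0=0.04, **lighting)
print(skin, darker)
```

Keeping albedo, roughness, and base reflectance as separate inputs is what makes relighting and material editing possible: swapping the lighting lookups relights the avatar, while changing a material value alters only the term it feeds.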
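
The shortest-axis normal estimate can also be sketched in a few lines. The example assumes each Gaussian is described by a rotation matrix whose columns are its local axes and by three per-axis scales; those layouts and the function name are assumptions. The optional flip toward the viewer is a common convention for resolving the sign ambiguity of an axis, not something the source states.

```python
import numpy as np


def shortest_axis_normals(rotations, scales, view_dirs=None):
    """Use each Gaussian's shortest axis as its normal.

    rotations: (N, 3, 3) columns are the Gaussian's axes in world space
    scales:    (N, 3)    extent along each axis
    view_dirs: (N, 3)    optional unit vectors from each point to the camera
    """
    shortest = np.argmin(scales, axis=1)                           # (N,)
    # For each Gaussian, pick the rotation column of its smallest scale.
    normals = rotations[np.arange(scales.shape[0]), :, shortest]   # (N, 3)

    if view_dirs is not None:
        # Assumed convention: flip normals that point away from the camera.
        facing = np.sum(normals * view_dirs, axis=1, keepdims=True)
        normals = np.where(facing < 0, -normals, normals)
    return normals


# One axis-aligned Gaussian that is flattest along z.
rotations = np.eye(3)[None]
scales = np.array([[1.0, 2.0, 0.1]])
normals = shortest_axis_normals(rotations, scales)
flipped = shortest_axis_normals(rotations, scales,
                                view_dirs=np.array([[0.0, 0.0, -1.0]]))
print(normals, flipped)
```

A flattened Gaussian behaves like a small surface patch, so its thinnest direction is a reasonable proxy for the surface normal; supervising that proxy with normals derived from depth gradients keeps it consistent with the rendered geometry.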

Project Links

- Project Website: https://eastbeanzhang.github.io/HRAvatar/
- GitHub Repository: https://github.com/Pixel-Talk/HRAvatar
- arXiv Paper: https://arxiv.org/pdf/2503.08224

Application Scenarios for HRAvatar

- Digital Humans & Virtual Streamers: Create lifelike avatars capable of real-time expressions and motion for interactive streaming and virtual personas.
- AR/VR: Enhance immersive environments by integrating real-time relightable 3D avatars.
- Immersive Conferencing: Use high-quality 3D avatars to enable more natural and realistic remote communication.
- Game Development: Quickly generate expressive, high-fidelity 3D character avatars to elevate the gaming experience.
- Film Production: Apply in VFX pipelines to efficiently generate realistic 3D head avatars and accelerate content creation workflows.
