RelightVid – A video relighting model jointly launched by Shanghai AI Laboratory and universities such as Fudan University

What is RelightVid?

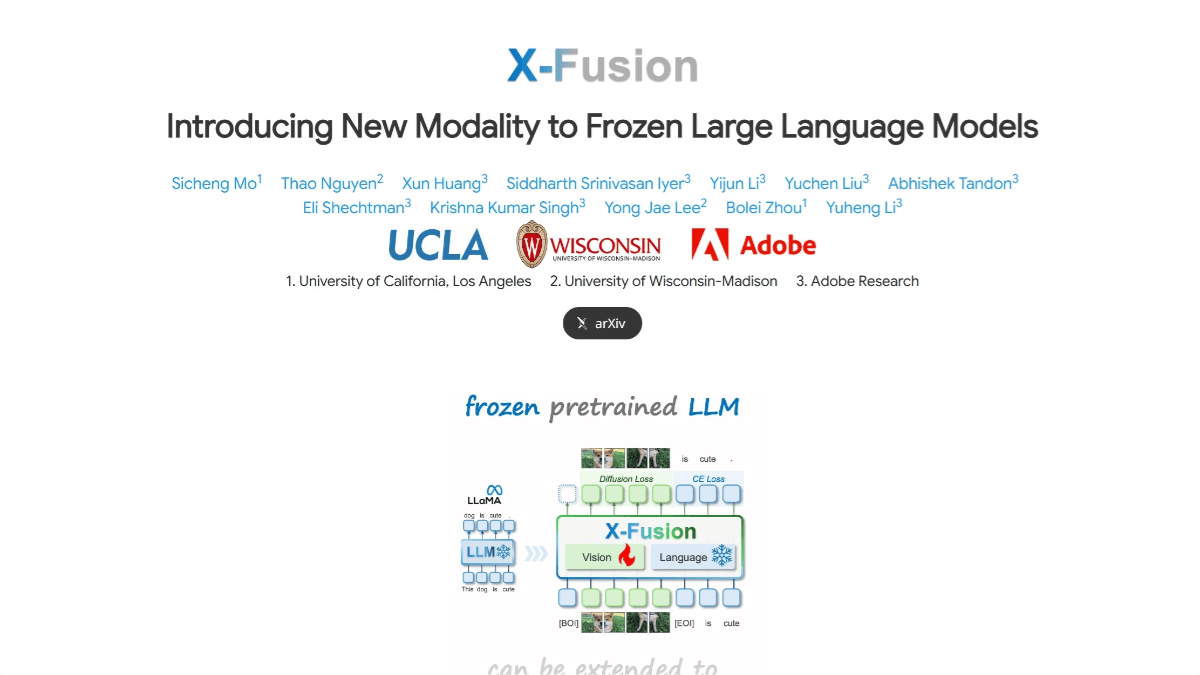

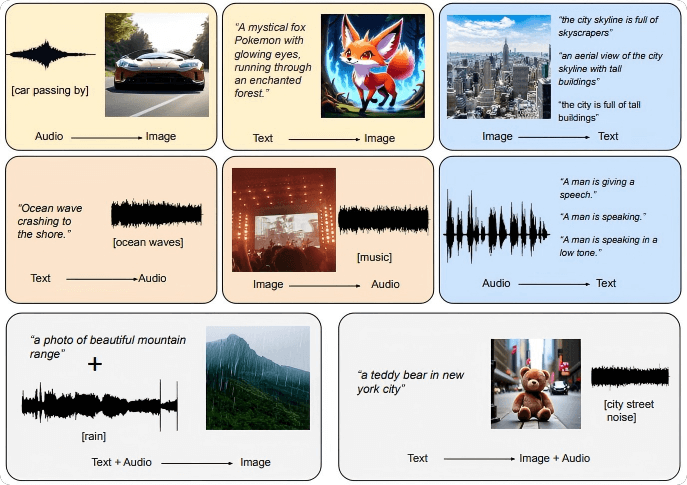

RelightVid is a temporally consistent diffusion model for video relighting, developed by Shanghai AI Laboratory, Fudan University, Shanghai Jiao Tong University, Zhejiang University, Stanford University, and The Chinese University of Hong Kong. It relights an input video under the guidance of a text prompt, a background video, or an HDR environment map, with fine-grained control and consistent results across frames. The model supports both full-scene relighting and foreground-preserving relighting.

RelightVid is trained on high-quality paired video relighting data produced by a custom augmentation pipeline (LightAtlas) that combines real-world videos with 3D-rendered scenes. It extends a pre-trained image relighting diffusion model (IC-Light) with trainable temporal layers, which improves the consistency and quality of relighting across video frames. The result preserves temporal coherence and lighting detail, opening new possibilities for video editing and generation.
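
To make the idea of a temporal layer more concrete, here is a minimal sketch in plain Python. It assumes the temporal layer is a self-attention step over the frame axis, which is a common design but is not confirmed by the description above. The function names `temporal_self_attention` and `temporal_layer`, the `gate` parameter, and the absence of learned projections are all simplifications made for illustration.

```python
import math


def temporal_self_attention(frame_features):
    """Mix one spatial location's feature vectors across frames.

    frame_features: list of T vectors (lists of floats), one per frame.
    Returns T vectors; each is a softmax-weighted average over all frames.
    Learned query/key/value projections are omitted (assumption for brevity).
    """
    dim = len(frame_features[0])
    scale = 1.0 / math.sqrt(dim)
    mixed = []
    for query in frame_features:
        # Scaled dot-product similarity between this frame and every frame.
        scores = [
            scale * sum(q * k for q, k in zip(query, key))
            for key in frame_features
        ]
        # Numerically stable softmax over the time axis.
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted average of the per-frame features.
        mixed.append([
            sum(w * value[d] for w, value in zip(weights, frame_features))
            for d in range(dim)
        ])
    return mixed


def temporal_layer(frame_features, gate=0.0):
    """Blend per-frame features with their temporally mixed version.

    gate=0.0 returns the input unchanged (the image model's behaviour);
    gate=1.0 returns the fully mixed features.
    """
    mixed = temporal_self_attention(frame_features)
    return [
        [x + gate * (m - x) for x, m in zip(frame, mix)]
        for frame, mix in zip(frame_features, mixed)
    ]
```

With the gate at zero the layer is an identity, so a pre-trained image model keeps its original per-frame behaviour. Raising the gate lets each frame borrow information from the others, which is the intuition behind using temporal layers to reduce flicker.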

Key Features of RelightVid

- Text-Guided Relighting: Relight videos based on user-provided text descriptions, such as “sunlight filtering through leaves” or “soft morning light, golden hour effect.”
- Background Video-Guided Relighting: Use a background video as lighting context to dynamically match foreground lighting with the background illumination.
- HDR Environment Map-Guided Relighting: Precisely control lighting conditions using HDR environment maps, achieving high-quality relighting effects.
- Full-Scene Relighting: Relight both foreground and background to match the desired lighting condition for a cohesive scene.
- Foreground-Preserving Relighting: Relight only the foreground while preserving the original background, which suits scenarios where the foreground subject needs emphasis.
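
The difference between the last two modes can be pictured with a small compositing sketch. This illustrates the intended output only and is not how RelightVid works internally. It assumes a per-pixel foreground mask is available, and the function names `composite_foreground` and `relight_frame` are hypothetical. Frames are represented as 2D lists of grayscale values to keep the example dependency-free.

```python
def composite_foreground(original, relit, mask):
    """Blend per pixel: mask=1 keeps the relit value, mask=0 keeps the original."""
    return [
        [m * r + (1.0 - m) * o for o, r, m in zip(orow, rrow, mrow)]
        for orow, rrow, mrow in zip(original, relit, mask)
    ]


def relight_frame(original, relit, mask, preserve_background):
    """Pick between the two scopes described above (illustrative only).

    preserve_background=False: the whole relit frame is used (full-scene).
    preserve_background=True: only masked foreground pixels are replaced.
    """
    if preserve_background:
        return composite_foreground(original, relit, mask)
    return [row[:] for row in relit]
```

Soft mask values between 0 and 1 give a gradual transition at the subject's edges instead of a hard cut-out.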

Technical Principles Behind RelightVid

- Diffusion Model Extension: RelightVid is based on a pre-trained image relighting diffusion model (IC-Light), extended to handle video input by introducing temporal layers that capture inter-frame dependencies and ensure temporal consistency in relighting.
- Multi-Modal Conditional Training: The model supports simultaneous conditioning on background videos, text prompts, and HDR maps. These inputs are encoded and embedded into the model via a cross-attention mechanism for coherent multi-modal editing.
- Illumination-Invariant Ensemble (IIE): Enhances the robustness of the model to various lighting conditions by applying brightness augmentation to input videos and averaging noise predictions to prevent inconsistencies in reflectance.
- Data Augmentation Pipeline (LightAtlas): Generates high-quality video relighting pairs using a combination of real videos and 3D-rendered data, enriching the model with diverse lighting priors and improving its adaptability to complex lighting environments.
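
The Illumination-Invariant Ensemble idea, brightness augmentation followed by averaging of noise predictions, can be sketched roughly as follows. This is a simplified illustration and not the authors' implementation. The `predict_noise` callable stands in for the diffusion model's denoiser and is hypothetical, the `gains` values are arbitrary examples, and the diffusion timestep and conditioning inputs are left out for brevity.

```python
def illumination_invariant_ensemble(frames, predict_noise, gains=(0.5, 1.0, 1.5)):
    """Average noise predictions over brightness-scaled copies of the input.

    frames: list of frames, each a flat list of pixel values in [0, 1].
    predict_noise: hypothetical callable mapping frames to noise of the same shape.
    gains: brightness multipliers (illustrative values, not from the paper).
    """
    total = [[0.0] * len(frame) for frame in frames]
    for gain in gains:
        # Brightness augmentation: scale each pixel and clamp to [0, 1].
        scaled = [
            [min(1.0, max(0.0, gain * p)) for p in frame]
            for frame in frames
        ]
        noise = predict_noise(scaled)
        # Accumulate this variant's prediction.
        for acc_frame, noise_frame in zip(total, noise):
            for i, n in enumerate(noise_frame):
                acc_frame[i] += n
    # The mean over all brightness variants is the ensemble prediction.
    return [[v / len(gains) for v in frame] for frame in total]
```

Because the final prediction is a mean over several brightness levels, it depends less on how bright the source footage happens to be, which is the robustness property the ensemble aims for.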

Project Links

- Project Website: https://aleafy.github.io/relightvid/
- GitHub Repository: https://github.com/Aleafy/RelightVid
- arXiv Paper: https://arxiv.org/pdf/2501.16330
- Online Demo: https://huggingface.co/spaces/aleafy/RelightVid

Application Scenarios for RelightVid

- Film and TV Production: Modify scene lighting in movies or TV shows to align with narrative needs or creative vision, without reshooting.
- Game Development: Dynamically adjust scene lighting in games to enhance immersion and visual quality across different times and weather conditions.
- Augmented Reality (AR): Relight virtual objects in real time to match real-world lighting, improving realism and user experience.
- Video Advertising & Marketing: Quickly generate various lighting styles for ad videos, aligning with different brands or campaign themes to increase visual appeal.
- Video Content Creation: Let content creators easily change the lighting mood of a video, for example by simulating different times of day or weather, to enrich their storytelling.
