HunyuanPortrait – A portrait animation generation framework launched jointly by Tencent Hunyuan and institutions such as Tsinghua University

What is HunyuanPortrait?

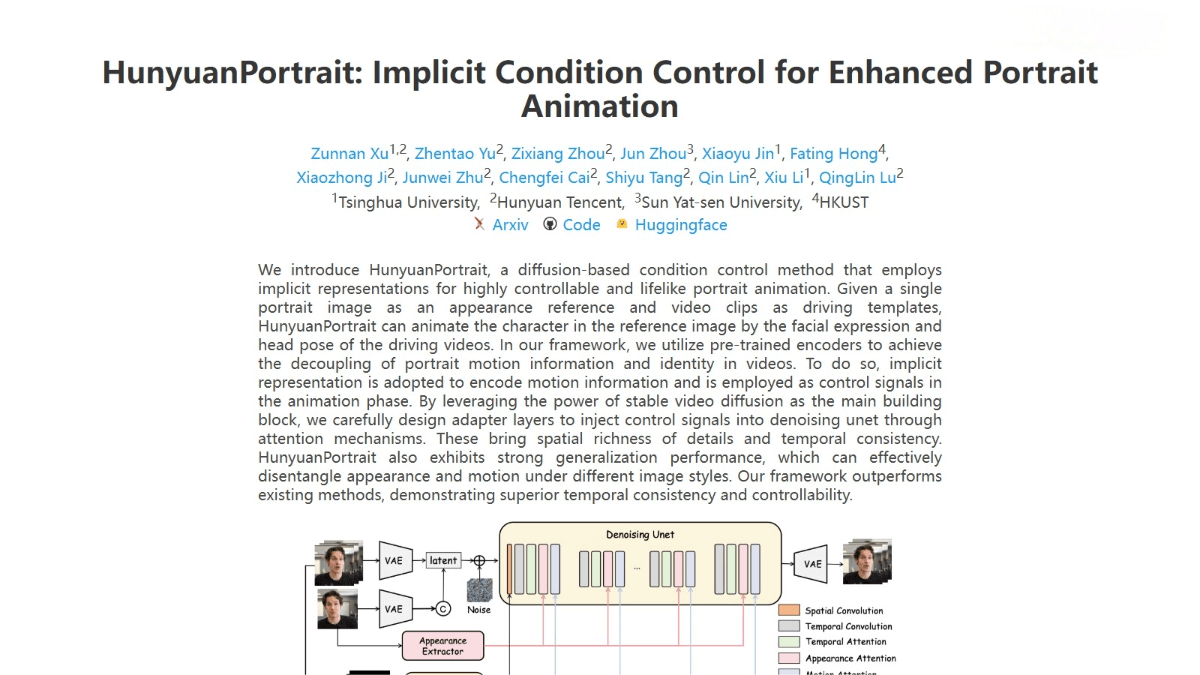

HunyuanPortrait is a diffusion-based framework for generating controllable, realistic portrait animations. It was developed by Tencent's Hunyuan team together with Tsinghua University, Sun Yat-sen University, the Hong Kong University of Science and Technology, and other institutions. Given a single portrait image as the appearance reference and a video clip as the motion driver, it animates the reference portrait to follow the facial expressions and head movements in the driving video. The authors report that it outperforms existing methods in temporal consistency and controllability and that it generalizes well. Because the framework decouples appearance from motion across different image styles, it is suited to applications such as virtual reality, gaming, and human-computer interaction.

Key Features of HunyuanPortrait

- Highly controllable portrait animation generation: Using a single portrait image and a driving video clip, it accurately transfers facial expressions and head movements from the video to the reference image, producing smooth and natural animations.

- Strong identity preservation: Maintains the identity characteristics of the reference portrait even when there are significant differences in facial structure and motion intensity, avoiding identity distortion.

- Realistic facial dynamics capture: Captures subtle facial expression changes, such as gaze direction and lip synchronization, resulting in highly realistic animated portraits.

- Temporal consistency optimization: Ensures high temporal coherence and smoothness in the generated video, avoiding issues like background jitter and blur.

- Style generalization capability: Adapts to various image styles, including anime and photorealistic styles, offering broad applicability.

Technical Principles of HunyuanPortrait

- Implicit conditional control: Motion is encoded as an implicit representation rather than as explicit keypoints. This captures complex facial motion and expression changes more faithfully and avoids artifacts caused by inaccurate keypoint extraction. The encoded motion features are injected into the denoising U-Net through attention mechanisms, giving fine-grained control over the animation generation process.

- Stable video diffusion model: Uses a diffusion-based framework that performs diffusion and denoising in latent space, which improves generation quality and training efficiency. A VAE maps images from RGB space to latent space, where the U-Net denoises the latents to produce high-quality video frames.

- Enhanced feature extractor: Improves motion feature representation by estimating motion intensity (e.g., the degree of facial distortion and head movement). It combines ArcFace and DINOv2 for background modeling and uses a multi-scale adapter (IMAdapter) to enhance identity consistency, so that the same identity is preserved across frames.

- Training and inference strategies: Applies data augmentation techniques such as color jitter and pose-guided transformations to increase training diversity and improve generalization. Additional training strategies, including randomly removing certain skeletal edges, improve robustness under different input conditions.

- Attention mechanism: Introduces multi-head self-attention and cross-attention in the U-Net to strengthen the model's perception of spatial and temporal information, improving the detail and temporal consistency of the generated videos.
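To make the attention-based motion injection described above more concrete, here is a minimal sketch in plain Python of how motion tokens could condition U-Net features through cross-attention. It is an illustration under simplifying assumptions, not the HunyuanPortrait implementation: the function names (`cross_attention`, `inject_motion`) are hypothetical, only a single head is used, and the learned query/key/value projections of a real model are omitted.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Single-head scaled dot-product attention.

    queries: list of d-dim vectors (here: U-Net feature vectors)
    keys, values: lists of vectors (here: encoded motion tokens)
    """
    d = len(queries[0])
    out = []
    for q in queries:
        # Similarity of this feature vector to every motion token.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the motion-token values.
        out.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return out


def inject_motion(features, motion_tokens):
    """Residual injection (assumed form): features + attention(features, motion)."""
    attended = cross_attention(features, motion_tokens, motion_tokens)
    return [[f + a for f, a in zip(frow, arow)] for frow, arow in zip(features, attended)]


# Two spatial feature vectors conditioned on two motion tokens.
features = [[1.0, 0.0], [0.0, 1.0]]
motion_tokens = [[0.5, 0.5], [1.0, -1.0]]
conditioned = inject_motion(features, motion_tokens)
```

Each feature vector receives a motion-dependent update whose weights depend on how similar it is to each motion token. With a single motion token, every feature attends to it fully, so the output is simply the feature plus that token.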
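The skeletal-edge removal mentioned under the training strategies can be pictured as a simple random edge dropout. The sketch below is a guess at the general idea only: the text does not specify the skeleton layout or the drop probability, so `FACE_EDGES`, `drop_skeleton_edges`, and `drop_prob` are all hypothetical names and values chosen for illustration.

```python
import random

# Hypothetical skeleton: pairs of landmark indices joined by an edge.
FACE_EDGES = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5)]


def drop_skeleton_edges(edges, drop_prob, rng):
    """Return a copy of `edges` with each edge independently removed
    with probability `drop_prob` (assumed augmentation scheme)."""
    return [edge for edge in edges if rng.random() >= drop_prob]


rng = random.Random(0)  # seeded for reproducibility
augmented = drop_skeleton_edges(FACE_EDGES, drop_prob=0.3, rng=rng)
```

Training on conditions with missing edges would expose the model to incomplete pose inputs, which is one plausible reading of how this strategy improves robustness.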

Project Links for HunyuanPortrait

- Official Website: https://kkakkkka.github.io/HunyuanPortrait/

- GitHub Repository: https://github.com/Tencent-Hunyuan/HunyuanPortrait

- Hugging Face Model Hub: https://huggingface.co/tencent/HunyuanPortrait

- arXiv Technical Paper: https://arxiv.org/pdf/2503.18860

Application Scenarios of HunyuanPortrait

- Virtual Reality (VR) and Augmented Reality (AR): Create lifelike virtual characters to enhance user experience.

- Game Development: Generate personalized game avatars to increase player immersion.

- Human-Computer Interaction: Develop more natural virtual assistants and customer service bots to improve interaction quality.

- Digital Content Creation: Rapidly generate high-quality animated content for video production, advertising, and film special effects.

- Social Media and Entertainment: Let users animate their photos into dynamic emojis or avatars for increased engagement.

- Education and Training: Create personalized virtual instructors or training characters for more vivid and interactive teaching experiences.
