MagicTryOn – A video-based virtual try-on framework jointly launched by Zhejiang University, vivo, and other institutions

What is MagicTryOn?

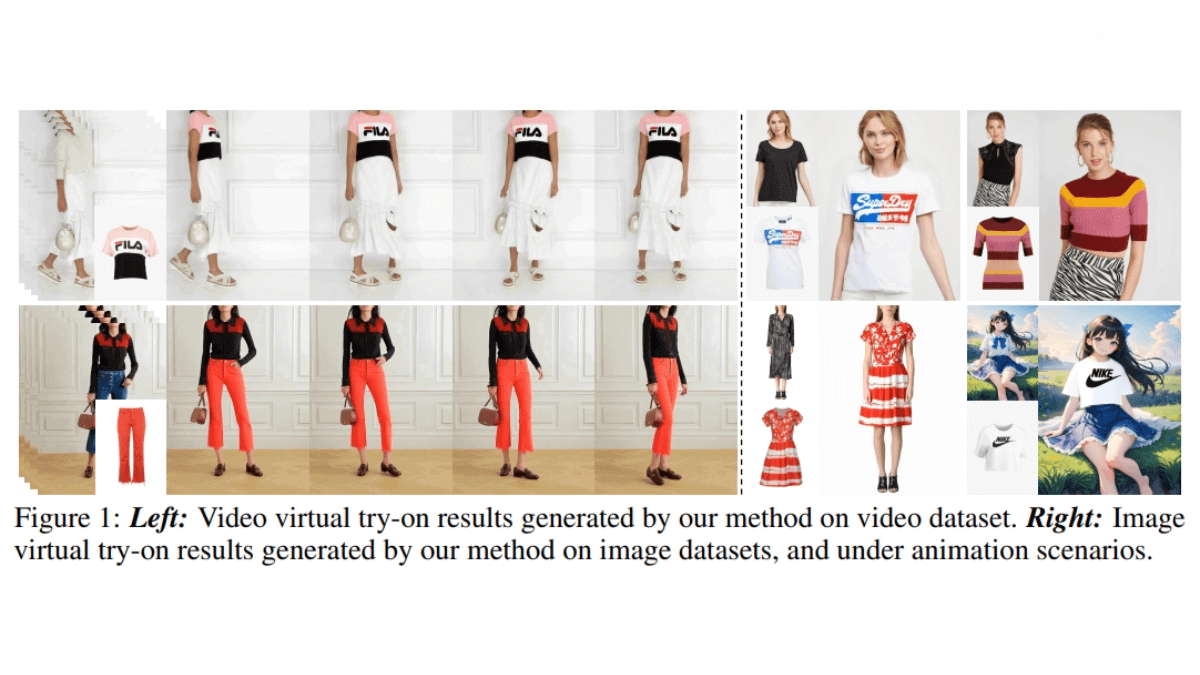

MagicTryOn is a video-based virtual try-on framework developed by the College of Computer Science and Technology at Zhejiang University, in collaboration with vivo Mobile Communication and other institutions. The framework replaces the conventional U-Net architecture with a more expressive Diffusion Transformer (DiT) and integrates full self-attention mechanisms to achieve spatiotemporal consistency in video modeling. MagicTryOn employs a coarse-to-fine garment preservation strategy, combining garment tokens in the embedding stage with semantic, texture, and contour-based conditions in the denoising stage to effectively retain garment details. It achieves state-of-the-art performance on both image and video try-on datasets, excelling in evaluation metrics, visual quality, and generalization to in-the-wild scenarios.

Key Features of MagicTryOn

-

Garment Detail Preservation: Accurately simulates garment texture, patterns, and contours, maintaining realism and stability during human motion.

-

Spatiotemporal Consistency Modeling: Ensures coherence between frames in a video to prevent flickering or jitter, delivering a smooth virtual try-on experience.

-

Multi-Condition Guidance: Utilizes various conditions such as text, image features, garment tokens, and contour maps to generate highly realistic and detailed try-on results.

Technical Foundations of MagicTryOn

-

Diffusion Transformer (DiT) Architecture: The modular design of DiT allows flexible injection of conditioning information. Its built-in full self-attention mechanism jointly models spatiotemporal consistency across the video, capturing both intra-frame details and inter-frame dynamics.

-

Coarse-to-Fine Garment Preservation Strategy:

-

Coarse Stage: During the embedding phase, garment tokens are injected into the input token sequence. The rotational position encoding (RoPE) grid size is expanded so that garment and input tokens share a consistent positional encoding.

-

Fine Stage: In the denoising phase, two modules—Semantic-Guided Cross Attention (SGCA) and Feature-Guided Cross Attention (FGCA)—are introduced to guide the synthesis of fine garment details. SGCA uses text and CLIP image tokens to provide global semantic representation of the clothing, while FGCA fuses garment and contour tokens to inject localized detail.

-

-

Mask-Aware Loss: This loss function guides the model to focus more on generating the garment regions, improving fidelity and realism of those areas in the synthesized output.

-

Spatiotemporal Consistency Modeling: Unlike traditional approaches that separately model spatial and temporal information, MagicTryOn uses full self-attention to jointly model both dimensions, ensuring smoother and more coherent results.

Project Links for MagicTryOn

-

Official Website: https://vivocameraresearch.github.io/magictryon/

-

GitHub Repository: https://github.com/vivoCameraResearch/Magic-TryOn/

-

arXiv Technical Paper: https://arxiv.org/pdf/2505.21325

Application Scenarios of MagicTryOn

-

Online Shopping: Allows users to virtually try on clothes, enhancing the online shopping experience.

-

Fashion Design: Enables designers to quickly preview how garments look, speeding up the design process.

-

Virtual Fitting Rooms: Offers virtual try-on solutions in physical retail stores, reducing reliance on physical fitting rooms.

-

Advertising and Marketing: Helps brands create personalized try-on advertisements to attract consumers.

-

Gaming and Entertainment: Enables real-time virtual clothing changes in games, enhancing immersion.

Related Posts