The PAM Model: A New Breakthrough in Image and Video Understanding

What is PAM?

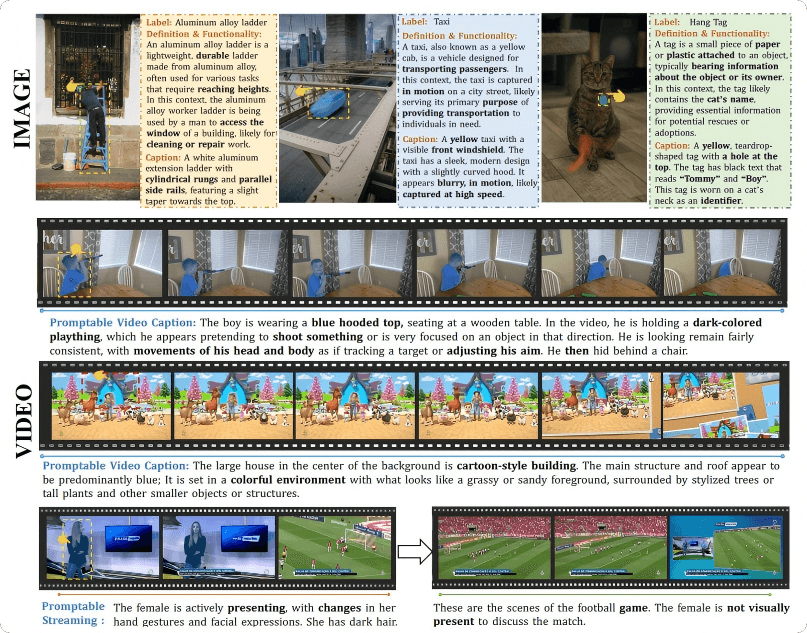

PAM (Perceive Anything Model) is a powerful visual understanding model open-sourced by institutions such as the MMLab at The Chinese University of Hong Kong, the Hong Kong Polytechnic University, and Peking University. It features capabilities in segmentation, recognition, and description. With only 3 billion parameters, the model has set new benchmarks in both image and video understanding while supporting efficient inference and memory management. PAM enables users to click on a specific area to obtain its category, explanation, and descriptive information, making it applicable to images, short videos, and long videos, with broad application potential. Its training dataset includes annotations for 1.5 million image regions and 600,000 video regions, ensuring the model’s efficiency and robustness.

Key Features

-

Region Segmentation: Uses SAM 2 to segment each image/video frame into distinct visual regions with high precision.

-

Semantic Recognition: Identifies and labels the category of each segmented region.

-

Functional Explanation: Provides a functional or contextual explanation for each object in the scene.

-

Natural Language Captioning: Generates natural language descriptions for each visual region, enabling region-level captioning.

-

Video Stream Support: Extends semantic understanding and description to video frame sequences for real-time applications.

How Does It Work?

-

Image Segmentation Backbone: Uses SAM 2 (Segment Anything Model) to produce high-quality, universal segmentation across any visual input.

-

Semantic Perceiver: Converts SAM 2’s high-dimensional vision features into multi-modal tokens that can be interpreted by an LLM, bridging vision and language understanding.

-

Large Language Model Integration: An LLM processes the tokens to generate explanations, classify objects, and describe regions in natural language.

-

Large-Scale Training: PAM is trained on an extensive dataset, including 1.5 million labeled image regions and 600,000 video region annotations, supporting fine-grained semantic comprehension.

-

Efficient and Lightweight: PAM offers 1.2–2.4× faster inference than current models, while significantly reducing GPU memory usage—ideal for real-world deployment.

Project Link

Application Scenarios

-

Smart Surveillance and Security: Adds contextual understanding to video monitoring by generating semantic insights for each detected region.

-

Autonomous Driving and Robotics: Enables intelligent systems to interpret their surroundings with fine-grained object understanding and functional descriptions.

-

Media and Content Creation: Automatically generates accurate region-level captions for use in video editing, summarization, and content indexing.

-

Medical Imaging: Assists in identifying and describing structures or anomalies in medical scans for clinical decision support.

-

Augmented Reality (AR): Powers real-time environment understanding and region-based annotations for immersive and interactive AR experiences.

Related Posts