DreamActor-H1 – ByteDance's video generation framework for product demonstrations

What is DreamActor-H1?

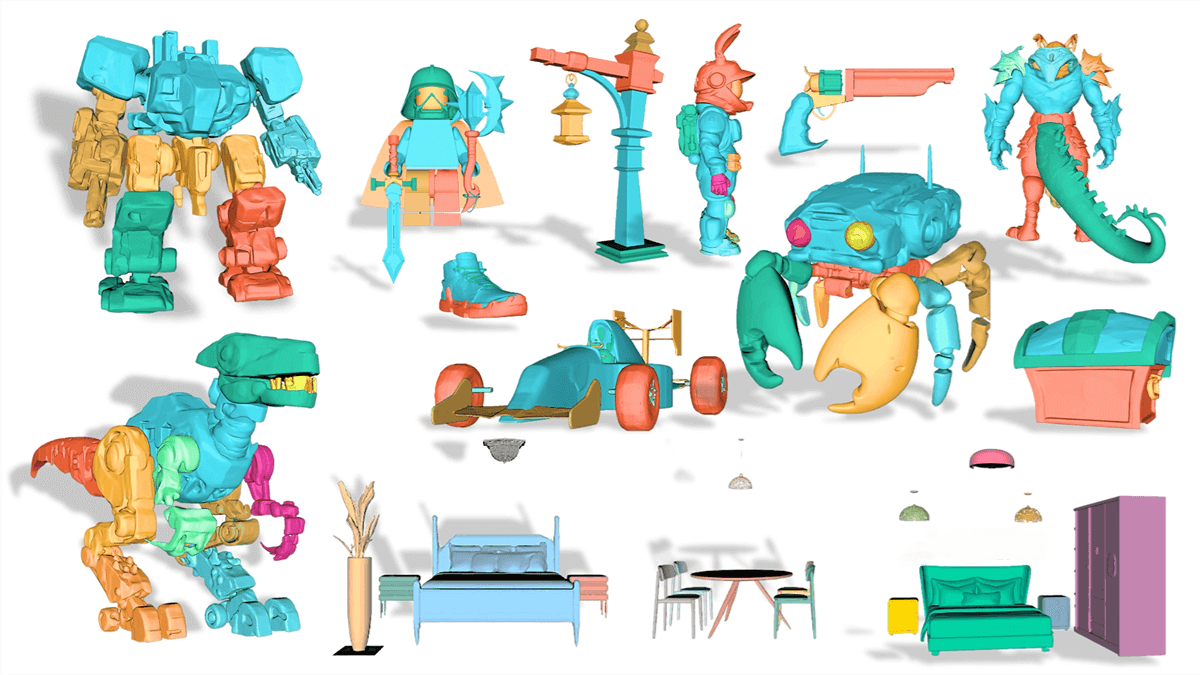

DreamActor-H1 is a framework developed by ByteDance, built on the Diffusion Transformer (DiT) architecture. It enables high-quality generation of human-product demonstration videos from paired images of humans and products. By injecting reference information of both the human and the product through a masked cross-attention mechanism, the model retains identity features of the human subject and detailed product attributes (such as logos and textures).

The framework uses 3D human mesh templates and product bounding boxes to guide motion generation, and it improves 3D consistency with structured text encoding. DreamActor-H1 is trained on a large-scale hybrid dataset. According to the authors' evaluation, it significantly outperforms existing methods. It is particularly suited to personalized e-commerce advertising and interactive media applications.

Key Features of DreamActor-H1

- High-Fidelity Video Generation: Generates realistic, high-quality demonstration videos from paired human and product images.
- Identity Preservation: Maintains the human subject's identity and preserves fine product details (e.g., logos and textures) throughout the video.
- Natural Motion Generation: Uses 3D human mesh templates and product bounding boxes to guide natural, accurate hand-product interactions.
- Semantic Enhancement: Uses structured text encoding to improve visual quality and 3D consistency, especially under small viewpoint changes.
- Personalized Applications: Supports diverse human and product inputs, enabling customized applications in e-commerce and interactive media.

Technical Foundations of DreamActor-H1

- Diffusion Model: Generates video by starting from random noise and progressively denoising it with a Diffusion Transformer (DiT). This iterative refinement underpins the model's visual quality.
- Masked Cross-Attention Mechanism: Injects the paired human and product reference images into the generation process through masked cross-attention. As a result, the generated video retains both the human's identity and the product's features.
- 3D Motion Guidance: Combines 3D human mesh templates with product bounding boxes to guide motion generation, so that hand movements align with the product's position.
- Structured Text Encoding: Encodes product descriptions and human attributes extracted by vision-language models (VLMs) as structured text. This improves semantic coherence, visual fidelity, and 3D stability in the output videos.
- Multimodal Fusion: Integrates human appearance, product appearance, and textual descriptions into the diffusion process through full attention, reference attention, and object attention to produce high-quality, coherent videos.
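The Diffusion Model item above describes generation as iterative denoising. Here is a minimal toy sketch of such a sampling loop in Python. It is not DreamActor-H1's sampler. The linear noise schedule and the deterministic DDIM-style update are assumptions, and so is `oracle_noise_predictor`, a hypothetical stand-in for the DiT that simply knows the clean target. These choices let the loop run without a trained model.

```python
import math
import random
from typing import List


def linear_alpha_bars(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Cumulative products alpha_bar_t of (1 - beta_t) for an assumed linear beta schedule."""
    alpha_bars, prod = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / max(num_steps - 1, 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def oracle_noise_predictor(x_t: List[float], alpha_bar_t: float, target: List[float]) -> List[float]:
    """Hypothetical stand-in for the DiT: returns the exact noise separating x_t from a known target."""
    scale = math.sqrt(alpha_bar_t)
    sigma = math.sqrt(1.0 - alpha_bar_t)
    return [(x - scale * t) / sigma for x, t in zip(x_t, target)]


def ddim_sample(target: List[float], num_steps: int = 1000, seed: int = 0) -> List[float]:
    """Deterministic DDIM-style reverse loop: start from Gaussian noise and denoise step by step."""
    rng = random.Random(seed)
    alpha_bars = linear_alpha_bars(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # (almost) pure noise at the last timestep
    for t in reversed(range(num_steps)):
        ab_t = alpha_bars[t]
        ab_prev = alpha_bars[t - 1] if t > 0 else 1.0
        eps = oracle_noise_predictor(x, ab_t, target)
        # Estimate the clean sample from the predicted noise ...
        x0_hat = [(xi - math.sqrt(1.0 - ab_t) * ei) / math.sqrt(ab_t) for xi, ei in zip(x, eps)]
        # ... then move to the previous, lower noise level along the same noise direction.
        x = [math.sqrt(ab_prev) * x0 + math.sqrt(1.0 - ab_prev) * ei for x0, ei in zip(x0_hat, eps)]
    return x


if __name__ == "__main__":
    print(ddim_sample([0.5, -1.0, 2.0]))
```

Because the stand-in predictor is exact, the loop returns the target values. In the real framework, a trained DiT would instead predict the noise from the noisy video latents together with the human, product, and text conditions.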
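The masked cross-attention item can be illustrated with plain scaled dot-product attention. In this version, disallowed query-key pairs are set to negative infinity before the softmax. The masking rule is a hypothetical simplification: each video token may attend only to reference tokens of the same entity, human or product. The `entity_mask` helper is also hypothetical. The learned query/key/value projections and multi-head structure of a real DiT are omitted, so treat this as a sketch of the masking idea rather than the paper's layer.

```python
import math
from typing import List


def entity_mask(video_labels: List[str], ref_labels: List[str]) -> List[List[bool]]:
    """Hypothetical masking rule: a video token may attend only to reference tokens of the same entity."""
    return [[v == r for r in ref_labels] for v in video_labels]


def masked_cross_attention(queries: List[List[float]], keys: List[List[float]],
                           values: List[List[float]], mask: List[List[bool]]) -> List[List[float]]:
    """Scaled dot-product cross-attention where mask[i][j] == False blocks query i from key j."""
    d = len(queries[0])
    outputs = []
    for q, allowed_row in zip(queries, mask):
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) if allowed else float("-inf")
            for k, allowed in zip(keys, allowed_row)
        ]
        top = max(scores)
        if top == float("-inf"):  # nothing to attend to: return a zero vector
            outputs.append([0.0] * len(values[0]))
            continue
        weights = [math.exp(s - top) for s in scores]  # masked scores become exp(-inf) == 0.0
        total = sum(weights)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(len(values[0]))
        ])
    return outputs


if __name__ == "__main__":
    mask = entity_mask(["human", "product"], ["human", "human", "product"])
    out = masked_cross_attention([[1.0, 0.0], [0.0, 1.0]],
                                 [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
                                 [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]], mask)
    print(out)
```

In this toy setup, the video token labeled "product" draws its features only from the product reference token. This is one way a mask can keep product details such as logos from being blended with the person's appearance.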
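For the 3D Motion Guidance item, one simple way to turn a product bounding box into a spatial conditioning signal is to rasterize it into a per-frame binary mask. The sketch below does that for a normalized `(x0, y0, x1, y1)` box and pairs each result with a per-frame mesh mask. Several pieces are illustrative assumptions, not the paper's exact conditioning format: the linear box trajectory, the two-channel layout, and the `mesh_masks` input, which is a placeholder for renderings of the 3D human mesh template.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # normalized (x0, y0, x1, y1), values in [0, 1]


def box_to_mask(box: Box, height: int, width: int) -> List[List[int]]:
    """Rasterize a normalized box into a height x width mask using pixel centers."""
    x0, y0, x1, y1 = box
    mask = []
    for r in range(height):
        cy = (r + 0.5) / height
        mask.append([1 if x0 <= (c + 0.5) / width <= x1 and y0 <= cy <= y1 else 0
                     for c in range(width)])
    return mask


def interpolate_boxes(start: Box, end: Box, num_frames: int) -> List[Box]:
    """Hypothetical motion plan: move the box linearly from start to end over num_frames frames."""
    if num_frames == 1:
        return [tuple(start)]
    return [tuple(s + (e - s) * f / (num_frames - 1) for s, e in zip(start, end))
            for f in range(num_frames)]


def stack_guidance(mesh_masks: Sequence[List[List[int]]], boxes: Sequence[Box],
                   height: int, width: int) -> List[List[List[List[int]]]]:
    """Pair each frame's (placeholder) mesh mask with its product-box mask as two channels."""
    return [[mesh, box_to_mask(box, height, width)] for mesh, box in zip(mesh_masks, boxes)]


if __name__ == "__main__":
    for row in box_to_mask((0.25, 0.25, 0.75, 0.75), 4, 4):
        print(row)
```

Representing the box as a mask at the same resolution as the mesh rendering makes the two guidance signals spatially aligned, which is the property the motion guidance relies on.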
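Structured text encoding starts from attributes that a VLM extracts from the reference images. The sketch below shows one hypothetical way to arrange such attributes into a fixed, sectioned prompt before it reaches a text encoder. The field names, the bracketed section tags, and `build_structured_prompt` are assumptions for illustration, not DreamActor-H1's actual template.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class HumanAttributes:
    """Hypothetical human attributes, as a VLM might extract them from the human image."""
    gender: str
    clothing: str
    hairstyle: str


@dataclass
class ProductDescription:
    """Hypothetical product description, as a VLM might extract it from the product image."""
    category: str
    appearance: str
    details: List[str] = field(default_factory=list)


def build_structured_prompt(human: HumanAttributes, product: ProductDescription, action: str) -> str:
    """Assemble the attributes into fixed sections, always in the same order."""
    lines = [
        f"[Human] gender: {human.gender}; clothing: {human.clothing}; hair: {human.hairstyle}",
        f"[Product] category: {product.category}; appearance: {product.appearance}",
    ]
    if product.details:
        lines.append("[Product details] " + ", ".join(product.details))
    lines.append(f"[Action] {action}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_structured_prompt(
        HumanAttributes("female", "white T-shirt", "long black hair"),
        ProductDescription("baseball cap", "navy blue", ["embroidered logo on the front"]),
        "puts on the cap and turns slightly to the side",
    ))
```

With a fixed layout like this, the text encoder always receives the human, product, and action information in the same order. This is the main appeal of a structured format over free-form captions.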
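The Multimodal Fusion item lists several attention types. When different token types share one attention layer, their attention patterns can be expressed as a block mask over the concatenated sequence. The sketch below builds such a mask from segment lengths and a table of which segment may attend to which. The segment names and `DEFAULT_RULES` are hypothetical. They only show how full attention among video tokens could coexist in one sequence with video-to-reference and video-to-text attention. They do not reproduce the paper's exact attention layout.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical rules: video tokens attend to every segment (including each other);
# each conditioning segment only attends within itself.
DEFAULT_RULES: Dict[str, Set[str]] = {
    "video": {"video", "human_ref", "product_ref", "text"},
    "human_ref": {"human_ref"},
    "product_ref": {"product_ref"},
    "text": {"text"},
}


def build_block_mask(segments: List[Tuple[str, int]],
                     rules: Dict[str, Set[str]] = DEFAULT_RULES) -> List[List[bool]]:
    """Return an N x N mask; mask[i][j] is True if token i may attend to token j."""
    labels = [name for name, length in segments for _ in range(length)]
    return [[key in rules.get(query, set()) for key in labels] for query in labels]


if __name__ == "__main__":
    segments = [("video", 3), ("human_ref", 2), ("product_ref", 2), ("text", 1)]
    for row in build_block_mask(segments):
        print("".join("x" if allowed else "." for allowed in row))
```

Changing a single entry in the rules table changes which signals can influence which tokens. For example, removing `"product_ref"` from the video row would cut the product appearance out of the generation path.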

Project Links for DreamActor-H1

- Project Website: https://submit2025-dream.github.io/DreamActor-H1/
- arXiv Paper: https://arxiv.org/pdf/2506.10568

Application Scenarios for DreamActor-H1

- Personalized Product Demonstrations: Generate realistic human-product interaction videos that show usage scenarios and product features, which can strengthen consumers' purchase intent.
- Virtual Try-On Experiences: Offer virtual try-on for items such as clothing or cosmetics, helping users see how products would look or work on them.
- Product Marketing: Create high-quality demonstration videos for e-commerce product detail pages or advertisements, with the aim of increasing product appeal and conversion rates.
- Social Media Advertising: Generate engaging video content for social media ads to increase user engagement and brand exposure.
- Brand Promotion: Automatically generate videos of brand ambassadors interacting with products to strengthen brand identity and consumer connection.
