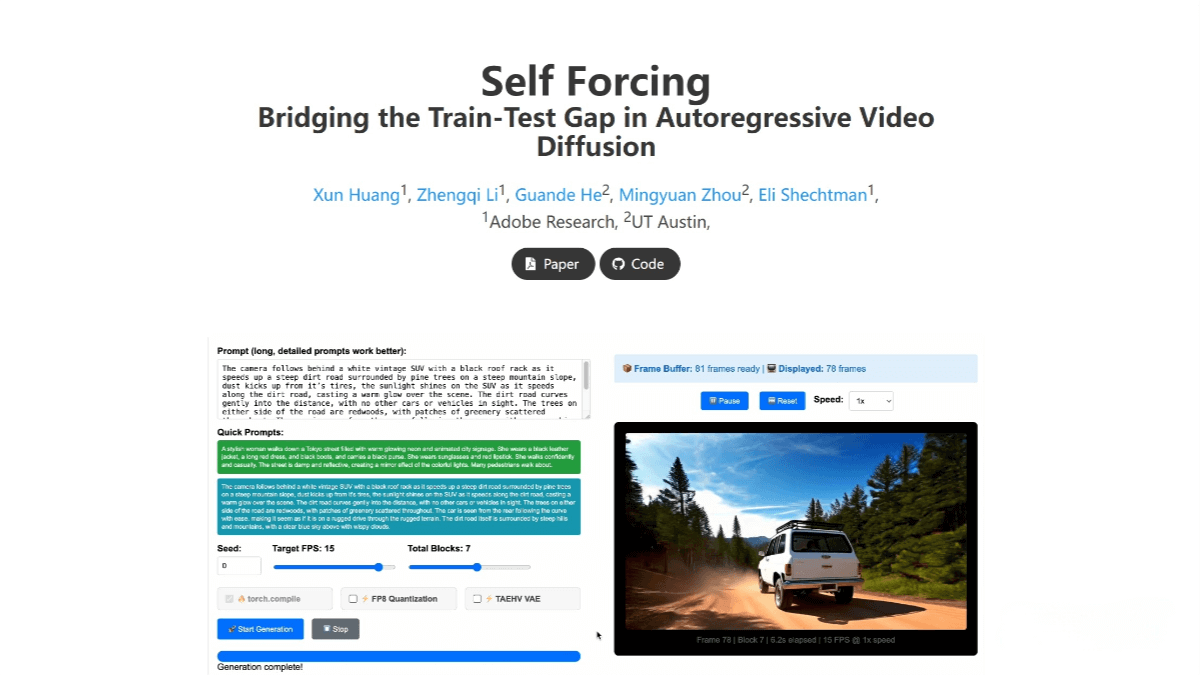

Self-Forcing – A video generation model jointly developed by Adobe and the University of Texas

What is Self Forcing?

Self Forcing is a novel autoregressive video generation algorithm developed jointly by Adobe Research and the University of Texas at Austin. It addresses the exposure bias problem commonly seen in traditional generative models during the mismatch between training and inference. By simulating the self-generation process during training — conditioning on previously generated frames rather than ground-truth frames — it effectively narrows the distribution gap between training and testing. Self Forcing introduces a rolling key-value (KV) cache mechanism that enables theoretically infinite video generation. It achieves real-time performance at 17 FPS with sub-second latency on a single H100 GPU. This breakthrough offers new possibilities for live streaming, gaming, and real-time interactive applications, such as real-time generation of virtual backgrounds or special effects. Its efficiency and low latency make Self Forcing a powerful tool for future multimodal content creation.

Key Features of Self Forcing

-

Efficient Real-Time Video Generation

Self Forcing achieves efficient real-time video generation at 17 FPS with latency under one second using a single GPU. -

Infinite-Length Video Generation

Thanks to its rolling KV cache mechanism, Self Forcing can generate videos of theoretically unlimited length, enabling continuous content creation without interruption. -

Bridging the Training-Inference Gap

By conditioning on previously generated frames during training instead of real frames, Self Forcing effectively mitigates exposure bias in autoregressive generation and improves the quality and stability of generated videos. -

Low Resource Requirements

Optimized for hardware efficiency, Self Forcing supports streaming video generation even on a single RTX 4090 GPU, reducing the barrier for deployment on standard consumer devices. -

Multimodal Content Creation

The model’s speed and real-time capability make it ideal for applications like generating dynamic virtual backgrounds or effects in livestreams and VR/AR scenarios, offering creators a broader creative toolkit.

Technical Overview of Self Forcing

-

Autoregressive Unrolling with Global Loss Supervision

Self Forcing simulates the inference-time autoregressive process during training by generating each frame based on previously generated ones rather than ground-truth frames. A sequence-level distribution matching loss supervises the entire generated video, allowing the model to learn directly from its prediction errors and reducing exposure bias. -

Rolling KV Cache Mechanism

To support long video sequences, the model maintains a fixed-size rolling KV cache, storing embeddings of the most recent frames. When a new frame is generated, the cache updates by removing the oldest entry and adding the newest one. -

Few-Step Diffusion with Gradient Truncation

For training efficiency, Self Forcing employs a few-step diffusion model combined with a randomized gradient truncation strategy. Specifically, it randomly selects the number of denoising steps during training and only performs backpropagation on the final denoising step. -

Dynamic Conditional Generation

At each frame generation step, Self Forcing dynamically incorporates two types of inputs: the previously generated clean frames and the current noisy frame. The denoising process is iterated to ensure coherence and naturalness in the generated sequence.

Project Links

-

Project Website: https://self-forcing.github.io/

-

GitHub Repository: https://github.com/guandeh17/Self-Forcing

-

arXiv Paper: https://arxiv.org/pdf/2506.08009

Application Scenarios for Self Forcing

-

Live Streaming & Real-Time Video Feeds

With its 17 FPS real-time generation and sub-second latency on a single GPU, Self Forcing is ideal for livestreaming scenarios — such as dynamically generating virtual backgrounds, special effects, or animated scenes during broadcasts — delivering fresh visual experiences to audiences. -

Game Development

In game development, Self Forcing can generate real-time scenes and effects based on player actions without requiring pre-rendered video assets. It enhances immersion and interactivity by dynamically rendering environments and visual effects. -

Virtual and Augmented Reality (VR/AR)

The model’s low latency and high efficiency support real-time visual content creation in VR and AR applications. It enables realistic virtual scenes or on-the-fly overlays of virtual objects in AR, improving immersion and user experience. -

Content Creation & Video Editing

Self Forcing can serve as a tool for short-form video creators, enabling rapid generation of high-quality video content with minimal manual editing. -

World Simulation & Training Environments

The model can be used to simulate realistic environments — from nature scenes to urban landscapes — for purposes like military training, city planning, or environmental simulations.

Related Posts