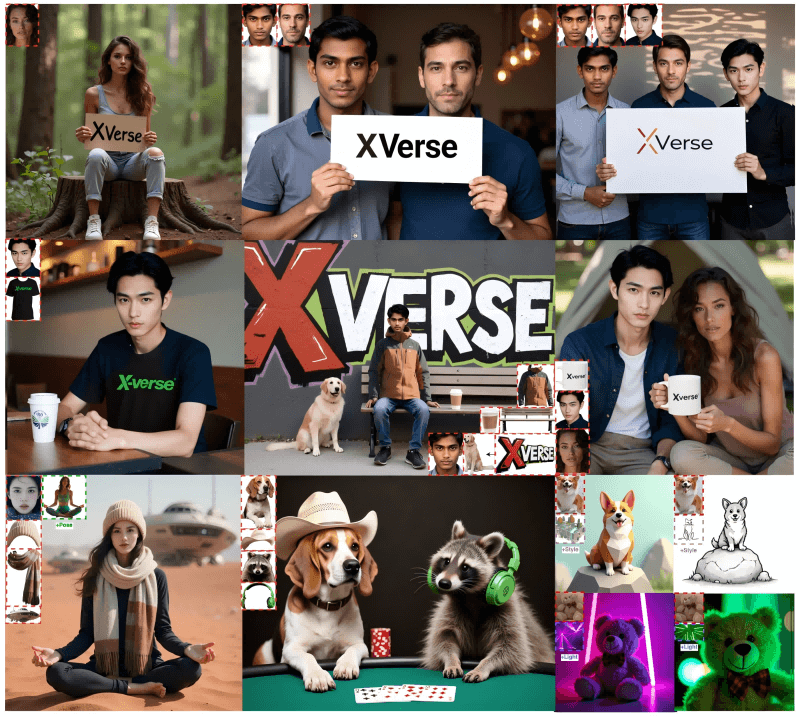

XVerse – A multi-subject controlled image generation model launched by ByteDance

What is XVerse?

XVerse is an advanced multi-subject controllable image generation model developed by the Intelligent Creation Team at ByteDance. Designed to push the boundaries of text-to-image generation, XVerse enables fine-grained control over multiple subjects in a single image—such as identity, pose, style, and lighting—while maintaining high-quality and consistent image synthesis. It transforms reference images into token-specific text-stream modulation offsets to independently control specific subjects without disrupting the underlying image features or latent variables. With its VAE-encoded visual feature module and regularization techniques, XVerse significantly enhances detail preservation and generation quality, making it a powerful tool for controllable image synthesis.

Key Features of XVerse

-

Multi-Subject Control: XVerse allows simultaneous control of multiple subjects within an image, enabling precise manipulation of identities, poses, styles, and more—ideal for generating complex scenes with multiple people or objects.

-

High-Fidelity Image Synthesis: The generated images exhibit high fidelity and accurately reflect the semantic details described in the text, while preserving global consistency and visual coherence.

-

Semantic Attribute Manipulation: Fine control over semantic attributes such as pose, style, and lighting empowers users to flexibly tailor image aesthetics and mood.

-

Strong Editability: Users can easily edit or personalize generated images using simple text prompts, enabling intuitive and customizable image creation.

-

Reduction of Artifacts and Distortions: The integration of VAE-encoded image features and regularization mechanisms helps minimize visual artifacts and distortions, leading to more natural and realistic outputs.

Technical Principles Behind XVerse

-

Text-Stream Modulation Mechanism: XVerse converts reference images into token-specific modulation offsets added to the model’s text embeddings. This mechanism enables precise control over specific subjects without affecting the shared latent representation of the image.

-

VAE-Encoded Visual Feature Module: To retain finer image details, XVerse incorporates a VAE-based visual feature module. This auxiliary component assists the model in preserving detailed visual information during the generation process.

-

Regularization Techniques:

-

Token Injection Regularization: Randomly retains modulation on one side of the image to enforce consistency in non-modulated regions.

-

Feature Regularization for Data Augmentation: Subject-specific features are regularized to help the model better distinguish and maintain subject identity in multi-subject scenarios.

-

Cross-Attention Map Consistency Loss: L2 loss is applied between the attention maps of the modulation model and the reference T2I (text-to-image) branch to maintain consistent semantic interactions and editable fidelity.

-

-

Training Dataset: XVerse is trained on a high-quality, multi-subject controllable dataset. The dataset combines:

-

Image-caption and phrase grounding data from Florence2

-

Accurate face extraction using SAM2

-

Diverse scenes featuring human-object interactions, human-animal compositions, and complex multi-person environments

This enhances the model’s generalizability across various applications.

-

Project Links for XVerse

-

Official Website: https://bytedance.github.io/XVerse/

-

GitHub Repository: https://github.com/bytedance/XVerse

-

HuggingFace Model Hub: https://huggingface.co/ByteDance/XVerse

-

arXiv Paper: https://arxiv.org/pdf/2506.21416

Application Scenarios of XVerse

-

E-commerce Advertising: Quickly generate diverse promotional images of different individuals using the same product, meeting brand customization needs at scale.

-

Game Character Design: Generate concept art for multiple unique characters based on text descriptions, streamlining the character development process for game designers.

-

Medical Educational Illustrations: Create detailed anatomical and physiological illustrations to help medical students better understand the human body.

-

Virtual Avatar Personalization: Users can generate personalized avatars from descriptions for use on virtual social platforms or in VR applications.

-

Urban Planning Visualization: Produce virtual renderings of public parks or city zones to help residents understand design proposals by urban planners.

Related Posts