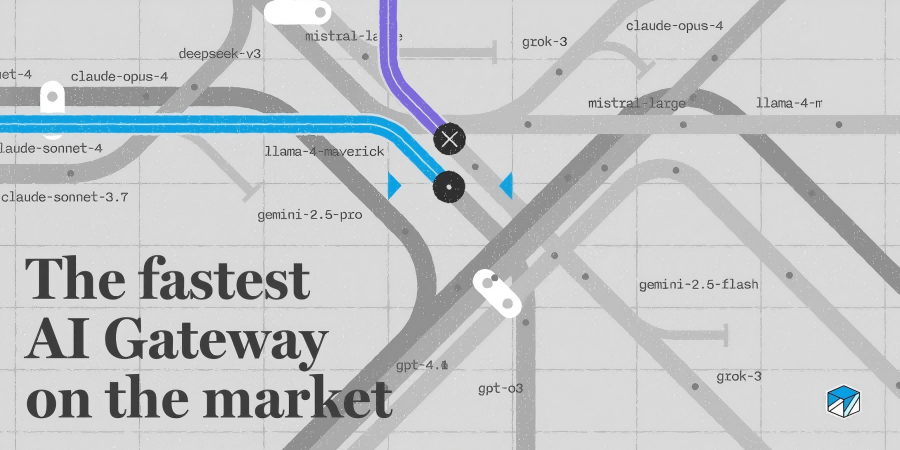

Helicone AI Gateway: A High-Performance Router Built for LLM Workloads

What is Helicone AI Gateway?

Helicone AI Gateway is an open-source AI gateway developed by the Helicone team and written in Rust. It exposes a unified, OpenAI-compatible interface covering 100+ models, so developers can route requests to major providers such as OpenAI, Anthropic, Google, and AWS without adopting an additional SDK. Designed for performance, reliability, and ease of deployment, it acts as a central control point for large language model infrastructure.
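To make the "OpenAI-compatible" idea concrete, here is a minimal sketch of a client that only builds a chat completion request using the Python standard library. The base URL, the placeholder API key, and the model identifiers are assumptions for illustration and are not taken from the gateway's documentation; check the project README for the actual address and model naming.

```python
import json
import urllib.request

# Hypothetical address: adjust to wherever your gateway instance listens.
GATEWAY_BASE_URL = "http://localhost:8080/v1"


def build_chat_request(model: str, prompt: str, api_key: str = "placeholder-key") -> urllib.request.Request:
    """Build an OpenAI-style chat completion request aimed at the gateway."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{GATEWAY_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Switching providers only changes the model string; the request shape stays the same.
# Both model names below are illustrative placeholders.
openai_req = build_chat_request("gpt-4", "Hello")
claude_req = build_chat_request("claude", "Hello")

# To actually send it once a gateway is running:
# with urllib.request.urlopen(openai_req) as resp:
#     print(json.load(resp))
```

The point of the sketch is that the calling code does not branch per provider: only the `model` field differs between the two requests.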

Key Features

- Unified Interface for Multi-Model Access: Compatible with OpenAI’s API format, so developers can switch between LLM providers without rewriting integration code.
- Smart Load Balancing and Failover: Uses latency-aware strategies (P2C + PeakEWMA), monitors system health, and automatically redirects traffic on failure.
- Response Caching: Supports both exact-match and bucketed caching, with a reported 30–50% improvement in cache hit rates in real-world use.
- Multi-Tier Rate Limiting: Enforces token-, request-, and cost-based limits per user, per team, or globally to prevent overuse.
- Observability and Tracing: Built-in integration with Helicone’s platform or OpenTelemetry for logs, metrics, and traces.
- Lightweight Deployment: A compact ~15 MB Rust binary deployable via Docker, Kubernetes, bare metal, or as a subprocess, with P95 latency as low as 1–5 ms.
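The P2C + PeakEWMA strategy named above can be sketched in a few lines. This is an illustration of the general technique (power of two choices over a peak-sensitive moving average of latency), not the gateway's actual Rust code; the class name, the decay constant, and the provider names are hypothetical.

```python
import math
import random


class PeakEwmaBackend:
    """Tracks a peak-sensitive moving average of latency for one backend."""

    def __init__(self, name: str, decay_seconds: float = 10.0):
        self.name = name
        self.decay_seconds = decay_seconds  # hypothetical decay window
        self.rtt_estimate = 0.0
        self.last_update = 0.0
        self.pending = 0  # in-flight requests

    def observe(self, rtt: float, now: float) -> None:
        elapsed = max(now - self.last_update, 0.0)
        self.last_update = now
        if rtt > self.rtt_estimate:
            # "Peak": a worse sample replaces the estimate immediately.
            self.rtt_estimate = rtt
        else:
            # Better samples pull the estimate down gradually over time.
            weight = math.exp(-elapsed / self.decay_seconds)
            self.rtt_estimate = self.rtt_estimate * weight + rtt * (1.0 - weight)

    def load(self) -> float:
        # Latency estimate scaled by outstanding work.
        return self.rtt_estimate * (self.pending + 1)


def pick_p2c(backends, rng=random):
    """Power of two choices: sample two backends, keep the less loaded one."""
    first, second = rng.sample(backends, 2)
    return first if first.load() <= second.load() else second


fast = PeakEwmaBackend("provider-a")
slow = PeakEwmaBackend("provider-b")
fast.observe(0.120, now=1.0)
slow.observe(0.900, now=1.0)
chosen = pick_p2c([fast, slow], random.Random(0))
```

Comparing only two random candidates keeps each routing decision cheap, while the peak-sensitive average reacts quickly to a latency spike and recovers slowly, which steers traffic away from a degrading provider.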

How It Works

- Rust + Tower for High-Performance Routing: Built with Rust and the Tower middleware library for efficient concurrent request handling.
- Latency-Aware Scheduling: Monitors model response times and errors, and automatically reroutes to fallback providers when needed.
- Caching Layer: Hashes API request parameters and stores the results, with support for TTLs and bucketed variants, to reduce redundant requests.
- Embedded Observability: Emits performance metrics, logs, and tracing data to Helicone or OpenTelemetry.
- Flexible Rate Limiting: Implements GCRA (the Generic Cell Rate Algorithm, a leaky-bucket variant) to apply precise rate limits, including burst control.
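For readers unfamiliar with GCRA, the sketch below shows the textbook "virtual scheduling" form of the algorithm with a burst allowance. It is a minimal illustration under assumed parameter names (`rate_per_second`, `burst`), not the gateway's implementation, and it takes the current time as an argument so the behavior is easy to follow.

```python
class GcraLimiter:
    """Generic Cell Rate Algorithm using a theoretical arrival time (TAT)."""

    def __init__(self, rate_per_second: float, burst: int):
        # Ideal spacing between requests at the sustained rate.
        self.emission_interval = 1.0 / rate_per_second
        # How far ahead of schedule a caller may run (allows `burst` at once).
        self.tolerance = self.emission_interval * (burst - 1)
        self.tat = 0.0

    def allow(self, now: float) -> bool:
        if now < self.tat - self.tolerance:
            # The request arrives too early even with the burst allowance.
            return False
        # Accept and push the theoretical arrival time one interval forward.
        self.tat = max(now, self.tat) + self.emission_interval
        return True


limiter = GcraLimiter(rate_per_second=2, burst=3)
decisions = [limiter.allow(0.0) for _ in range(4)]  # three pass, the fourth is rejected
later = limiter.allow(0.5)  # one interval later, a single slot has freed up
```

Only one timestamp per key needs to be stored, which is part of why GCRA is popular for rate limiting across many users or teams.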

Project Links

- GitHub Repository: github.com/Helicone/ai-gateway

Application Scenarios

- Multi-Model LLM Switching: Suited to applications that switch dynamically between models such as GPT-4, Claude, and Gemini without integration overhead.
- Reliable Production Systems: Maintains uptime through automatic failover and rate control, for services with strict SLAs.
- Cost Optimization: Reduces LLM invocation costs through caching and smart routing.
- Latency-Sensitive Services: With P95 latency under 10 ms, it suits real-time AI agents, chatbots, and virtual assistants.
- Full Observability: Enables performance monitoring and tracing pipelines through the Helicone or OpenTelemetry integrations.
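The caching behind the cost-optimization scenario can be pictured as follows. This is a sketch, assuming that "bucketed" means keeping up to N stored responses per request key and serving one of them at random once the bucket is full; the class and function names are hypothetical and the real gateway may differ in every detail.

```python
import hashlib
import json
import random


def cache_key(params: dict) -> str:
    """Hash request parameters in a canonical order so equal requests match."""
    canonical = json.dumps(params, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class BucketedTtlCache:
    def __init__(self, ttl_seconds: float, bucket_size: int = 1):
        self.ttl_seconds = ttl_seconds
        self.bucket_size = bucket_size  # 1 behaves like a plain exact-match cache
        self._store = {}  # key -> list of (expires_at, response)

    def _live_entries(self, key: str, now: float) -> list:
        # Drop expired entries and keep the surviving list in the store.
        live = [entry for entry in self._store.get(key, []) if entry[0] > now]
        self._store[key] = live
        return live

    def get(self, params: dict, now: float, rng=random):
        live = self._live_entries(cache_key(params), now)
        if len(live) < self.bucket_size:
            return None  # miss: the caller should query the provider, then put()
        return rng.choice(live)[1]

    def put(self, params: dict, response, now: float) -> None:
        live = self._live_entries(cache_key(params), now)
        if len(live) < self.bucket_size:
            live.append((now + self.ttl_seconds, response))


cache = BucketedTtlCache(ttl_seconds=60, bucket_size=1)
params_a = {"model": "example-model", "temperature": 0, "prompt": "Hi"}
params_b = {"prompt": "Hi", "temperature": 0, "model": "example-model"}  # same fields, other order
cache.put(params_a, "hello", now=0.0)
hit = cache.get(params_b, now=30.0)      # served from cache
expired = cache.get(params_b, now=61.0)  # TTL elapsed, so this is a miss
```

Every hit is a provider call that is never made, which is where the cost saving comes from; a larger bucket trades some of that saving for more varied responses.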
