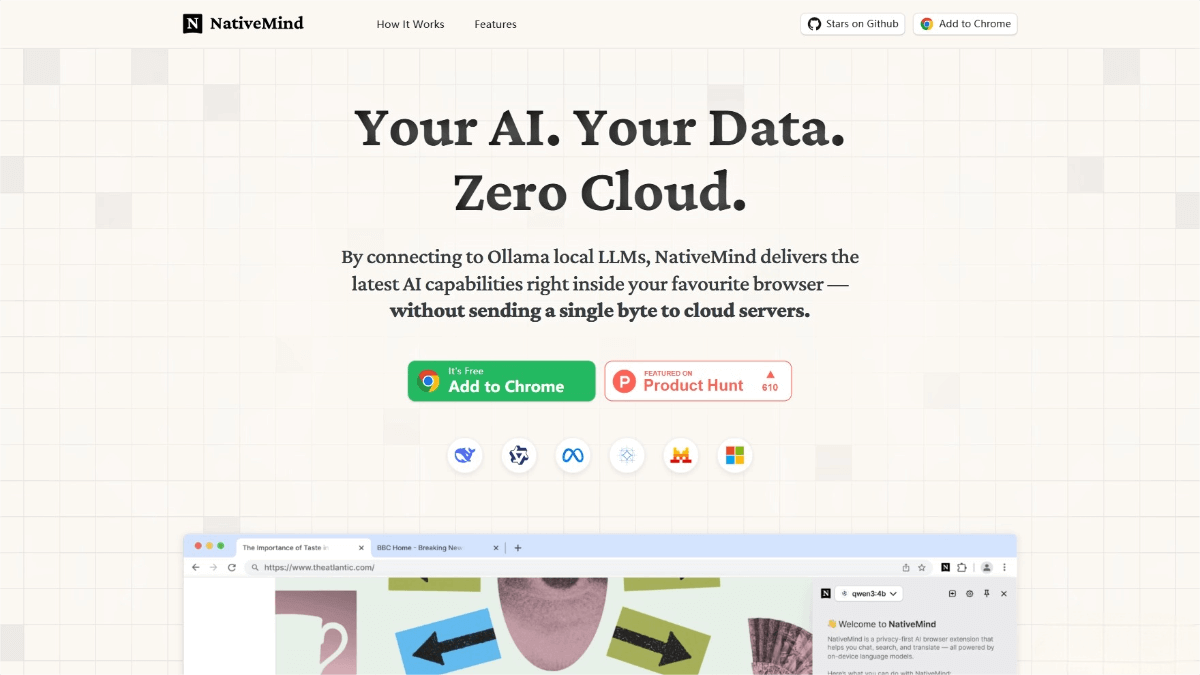

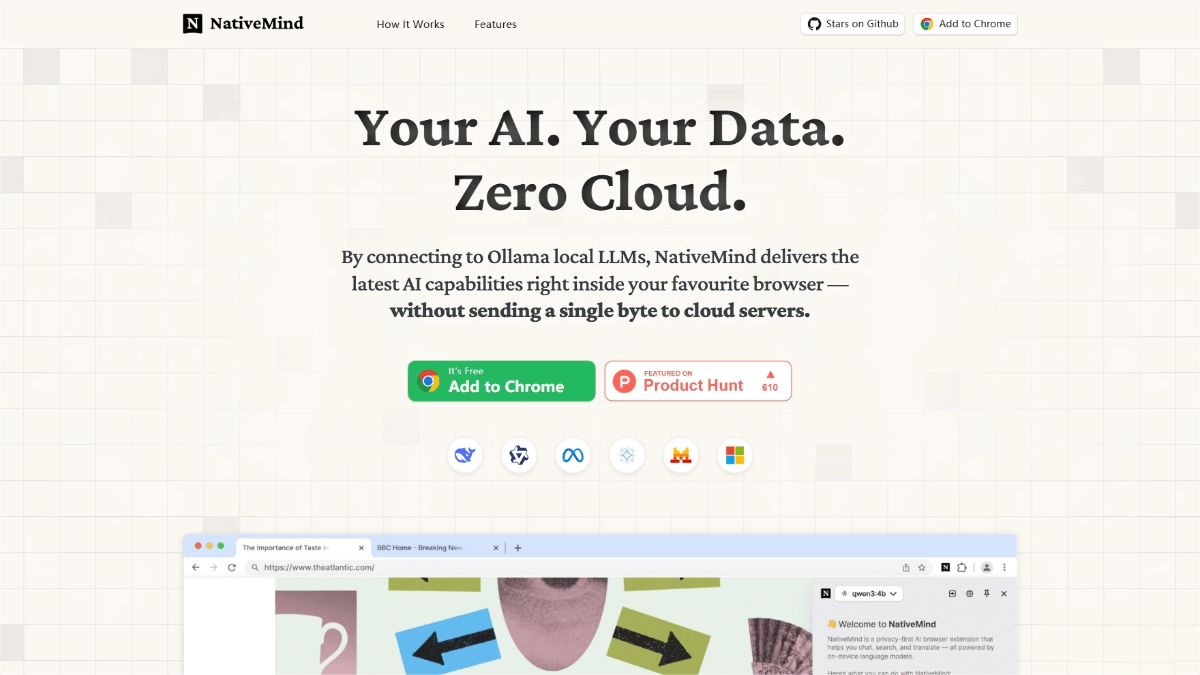

NativeMind – An open-source local AI assistant offering intelligent conversations, content analysis, writing assistance, and more

What is NativeMind?

NativeMind is an open-source AI assistant that runs entirely on local devices. It supports models such as DeepSeek, Qwen, and LLaMA, and integrates with Ollama for easy model loading and switching. Its capabilities include intelligent conversation, web content analysis, translation, and writing assistance, all accessed from the browser and run locally without relying on the cloud.

NativeMind ensures 100% local data processing—no cloud dependency, no tracking, no logs—giving users complete control over their data and privacy.

Key Features of NativeMind

- Intelligent Conversation: With multi-tab context awareness, NativeMind can hold deep, coherent conversations that draw on information from several browser tabs at once for smarter responses.
- Content Analysis: Summarizes web pages instantly and extracts key information. It also understands document content, helping users grasp core ideas quickly and spend less time reading.
- Universal Translation: Offers full-page translation with a bilingual side-by-side view, as well as translation of selected text.
- AI-Powered Search: Enhances the browser's search. Users can ask questions directly, and NativeMind browses web pages to find the answers, making search more efficient.
- Writing Enhancement: Automatically detects text and offers intelligent rewriting, proofreading, and creative suggestions to improve writing quality.
- Real-Time Assistance: While users browse, a context-aware floating toolbar gives quick access to functions such as translation and summarization.
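To make the multi-tab idea more concrete, here is a minimal TypeScript sketch of how text from several open tabs might be packed into a single chat request for a local model. The `TabSnapshot` shape, the `buildMultiTabMessages` helper, the prompt wording, and the even per-tab character budget are all hypothetical; this overview does not describe how NativeMind actually assembles its context.

```typescript
// Hypothetical shape of a captured tab; NativeMind's real data model is not documented here.
interface TabSnapshot {
  title: string;
  url: string;
  text: string; // page text that has already been extracted from the DOM
}

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

/**
 * Packs text from several tabs into a system message, followed by the user's question.
 * Each tab's text is capped at an equal share of `maxChars` (a rough stand-in for a token limit).
 */
function buildMultiTabMessages(
  question: string,
  tabs: TabSnapshot[],
  maxChars = 8000,
): ChatMessage[] {
  // Without any tabs, there is no extra context to add.
  if (tabs.length === 0) {
    return [{ role: "user", content: question }];
  }

  // Split the budget evenly so one long page cannot crowd out the others.
  const perTab = Math.floor(maxChars / tabs.length);

  const sections = tabs.map((tab, i) => {
    const body =
      tab.text.length > perTab ? `${tab.text.slice(0, perTab)} [truncated]` : tab.text;
    // Number the tabs so the model can refer back to its sources.
    return `[Tab ${i + 1}] ${tab.title} (${tab.url})\n${body}`;
  });

  return [
    {
      role: "system",
      content:
        "Answer using the browser tabs below and cite them by number.\n\n" +
        sections.join("\n\n"),
    },
    { role: "user", content: question },
  ];
}

// Example: two open tabs compared in one question.
const messages = buildMultiTabMessages("How do these two laptops compare?", [
  { title: "Laptop A review", url: "https://example.com/a", text: "Battery lasts 12 hours..." },
  { title: "Laptop B review", url: "https://example.com/b", text: "Battery lasts 9 hours..." },
]);
console.log(messages[0].content);
```

Splitting the budget evenly is the simplest fair policy; a real implementation would more likely count tokens with the model's tokenizer, or rank passages by relevance, rather than truncate by characters.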

Technical Principles of NativeMind

- Locally Executed AI Models: NativeMind is built on models that run entirely on the user's device, with no cloud server involved, so all data processing stays on the user's machine.
- Ollama Integration: NativeMind integrates tightly with Ollama, a tool for running and managing AI models locally that supports models including DeepSeek, Qwen, LLaMA, Gemma, and Mistral. Users can choose and switch models as needed.
- WebLLM Support: NativeMind also supports WebLLM, an in-browser inference engine that runs models directly in the browser using WebGPU and WebAssembly, so no additional software needs to be installed.
- Browser Extension Architecture: NativeMind is distributed as a browser extension through the Chrome Web Store and similar platforms, and it uses browser extension APIs to interact with the user's browsing activity.

The user interface is built with Vue 3 and TypeScript.

The backend logic communicates with locally running models through the WebLLM and Ollama APIs. Ollama exposes a local HTTP API that can stream responses as they are generated, which keeps interaction real-time and low-latency.
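As an illustration of the Ollama side, the following TypeScript sketch lists installed models and streams a chat reply from a local Ollama server. It assumes Ollama's default address (`http://localhost:11434`) and uses Ollama's `/api/tags` and `/api/chat` endpoints, whose streamed replies arrive as newline-delimited JSON. The helper names (`listModels`, `parseNdjson`, `streamChat`) are hypothetical and not taken from NativeMind's code.

```typescript
// Assumes Ollama's default local address; NativeMind's own client may be configured differently.
const OLLAMA_URL = "http://localhost:11434";

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Fields used from each streamed line of POST /api/chat.
interface ChatChunk {
  message?: { role: string; content: string };
  done: boolean;
}

/** Lists locally installed models, e.g. to populate a model switcher. */
async function listModels(): Promise<string[]> {
  const res = await fetch(`${OLLAMA_URL}/api/tags`);
  if (!res.ok) throw new Error(`Ollama returned ${res.status}`);
  const data = (await res.json()) as { models: { name: string }[] };
  return data.models.map((m) => m.name);
}

/**
 * Parses every complete line in a newline-delimited JSON buffer and
 * returns the trailing partial line so the next read can complete it.
 */
function parseNdjson(buffer: string): { chunks: ChatChunk[]; rest: string } {
  const lines = buffer.split("\n");
  const rest = lines.pop() ?? "";
  const chunks = lines
    .filter((line) => line.trim() !== "")
    .map((line) => JSON.parse(line) as ChatChunk);
  return { chunks, rest };
}

/** Streams a chat reply, calling onToken with each piece of text as it arrives. */
async function streamChat(
  model: string,
  messages: ChatMessage[],
  onToken: (text: string) => void,
): Promise<void> {
  const res = await fetch(`${OLLAMA_URL}/api/chat`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ model, messages, stream: true }),
  });
  if (!res.ok || !res.body) throw new Error(`Ollama returned ${res.status}`);

  const reader = res.body.getReader();
  const decoder = new TextDecoder();
  let buffer = "";
  while (true) {
    const { value, done } = await reader.read();
    if (done) break;
    // Network chunks can split a JSON line, so accumulate before parsing.
    buffer += decoder.decode(value, { stream: true });
    const { chunks, rest } = parseNdjson(buffer);
    buffer = rest;
    for (const chunk of chunks) {
      if (chunk.message?.content) onToken(chunk.message.content);
      if (chunk.done) return;
    }
  }
  // Flush a final line that arrived without a trailing newline.
  if (buffer.trim() !== "") {
    const last = JSON.parse(buffer) as ChatChunk;
    if (last.message?.content) onToken(last.message.content);
  }
}

// Example usage (requires a running Ollama server):
// const models = await listModels();
// await streamChat(models[0], [{ role: "user", content: "Summarize this page." }], (t) => console.log(t));
```

A model picker could fill its menu from `listModels()` and pass the selected name to `streamChat`, appending each token to the chat panel as it arrives. Streaming is what makes a local model feel responsive, since the first words appear long before the full reply is finished.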

Project Links

- Official Website: https://nativemind.app/
- GitHub Repository: https://github.com/NativeMindBrowser/NativeMindExtension

Application Scenarios

- Academic Research Assistant: Helps students and researchers extract key information from academic papers and combine insights across tabs to boost research productivity.
- Enterprise Document Management: Helps employees quickly understand business documents through content analysis and refine their language with the writing enhancement tools for clearer communication.
- Online Learning Support: Helps online learners overcome language barriers with the translation tools and provides real-time summaries and explanations to deepen understanding.
- Market Research and Analysis: Lets researchers efficiently find and organize key information from web searches and draft market analysis reports using content analysis.
- Personal Knowledge Management: Lets users record ideas and answers through intelligent conversation and consolidate information from multiple tabs into a personalized knowledge system.
