Notebook Llama: The Open-Source Notebook LM Alternative for AI-Powered Podcasts

What is Notebook Llama?

Notebook Llama is a fully open-source document-to-audio tool developed by the run-llama community, designed as an alternative to Google’s Notebook LM. It enables users to locally deploy an AI system that transforms documents like PDFs into podcast-style spoken content. By leveraging LLaMA models and open-source TTS engines, it creates natural, multi-speaker dialogue from written material—ideal for document exploration, podcast creation, and privacy-conscious AI workflows.

Key Features

- PDF-to-Podcast Workflow

  A four-step pipeline that:

  - Extracts and preprocesses text from PDFs
  - Uses LLaMA models to generate podcast-style scripts
  - Refines the dialogue into a natural, conversational tone
  - Converts scripts into audio using open-source TTS tools like Parler-TTS or Bark

- LlamaCloud Integration

  Connects with LlamaCloud to manage model inference and document indexing.

- Modular CLI Toolchain

  Comes with command-line tools and Jupyter notebooks for text extraction, indexing, and backend orchestration, which makes it easy to customize and extend.

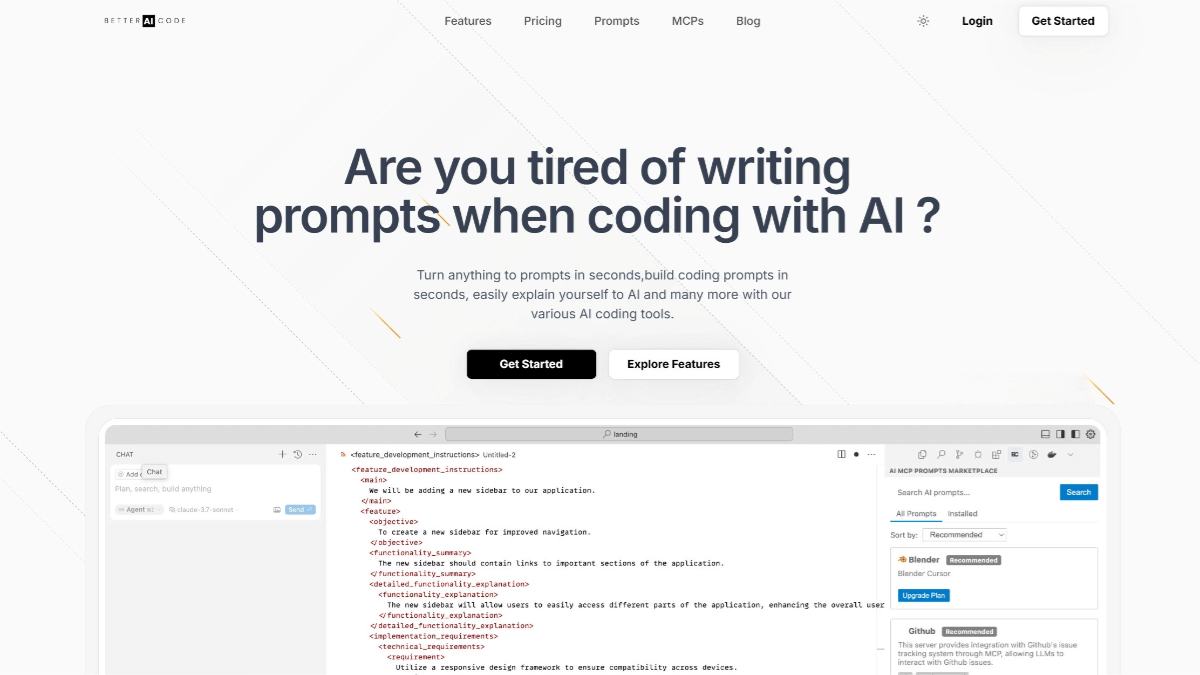

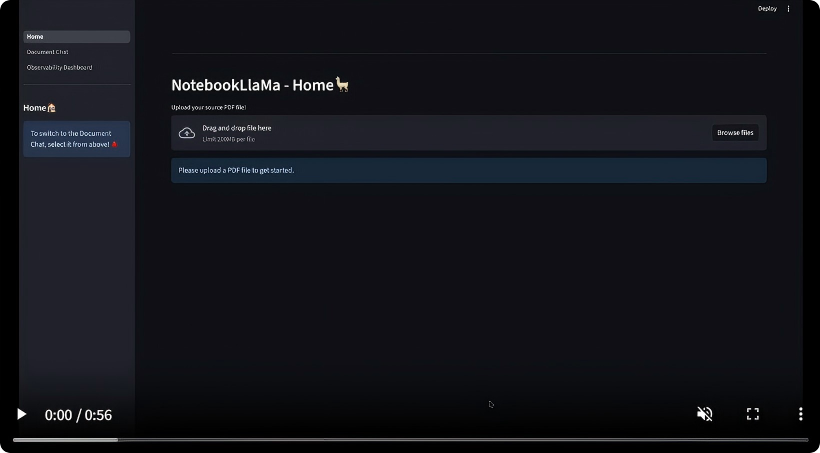

- Streamlit Frontend

  Provides a user-friendly web UI built with Streamlit, allowing users to upload documents, initiate sessions, and listen to the generated podcast content.

- Observability & Tracing

  Integrated with Jaeger for performance monitoring and interactive session tracing.
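The four-step PDF-to-podcast workflow listed above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: `preprocess_text`, `parse_turns`, and `PodcastPipeline` are hypothetical names, the LLM and TTS stages are passed in as plain callables so the sketch runs without any model, and the `Speaker N: text` script format is an assumption. PDF text extraction is assumed to have happened already.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (speaker label, spoken text)


def preprocess_text(raw: str) -> str:
    """Step 1: re-join words hyphenated across line breaks, then collapse whitespace."""
    joined = re.sub(r"-\n(?=\w)", "", raw)
    return re.sub(r"\s+", " ", joined).strip()


def parse_turns(script: str) -> List[Turn]:
    """Split a script of 'Speaker: text' lines into turns (assumed format)."""
    turns: List[Turn] = []
    for line in script.splitlines():
        speaker, sep, text = line.partition(":")
        if sep and text.strip():
            turns.append((speaker.strip(), text.strip()))
    return turns


@dataclass
class PodcastPipeline:
    # Hypothetical hooks: in a real setup these would wrap an LLM and a TTS engine.
    write_script: Callable[[str], str]        # step 2: draft a podcast script
    refine_script: Callable[[str], str]       # step 3: make the dialogue conversational
    synthesize: Callable[[str, str], bytes]   # step 4: (speaker, text) -> audio bytes

    def run(self, raw_text: str) -> List[bytes]:
        clean = preprocess_text(raw_text)
        draft = self.write_script(clean)
        final = self.refine_script(draft)
        return [self.synthesize(speaker, text) for speaker, text in parse_turns(final)]


# Stub stages stand in for the model and TTS calls.
pipeline = PodcastPipeline(
    write_script=lambda text: f"Speaker 1: Today we cover {text}\nSpeaker 2: Sounds good.",
    refine_script=lambda script: script.replace("Sounds good.", "Oh, nice!"),
    synthesize=lambda speaker, text: f"{speaker}|{text}".encode(),
)
clips = pipeline.run("trans-\nformers   are\nneat")
```

Keeping each stage behind a callable means a different model or TTS engine (for example Parler-TTS or Bark) can be swapped in without touching the orchestration logic.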

Technical Architecture

Notebook Llama follows a two-layer architecture, plus a deployment environment:

- Core Processing Layer
  - Scripts handle text extraction and semantic indexing from documents
  - LLaMA-based models (e.g., LLaMA 3.2, 3.1) generate conversational scripts with simulated multi-speaker dialogue
  - Open-source TTS engines synthesize high-quality audio

- Service & Interface Layer
  - Python-based backend server built with uv, following MCP-style tool orchestration
  - Streamlit frontend for user interactions and playback
  - PostgreSQL and Jaeger run via Docker Compose for session state and observability

- Deployment Environment
  - LLaMA models run on LlamaCloud
  - Uses ffmpeg for audio processing
  - Fully deployable locally or in cloud environments
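The architecture mentions ffmpeg for audio processing without saying how it is used. One plausible use is joining per-turn audio clips into a single episode with ffmpeg's concat demuxer. The sketch below only builds the manifest text and the argument list, and does not invoke ffmpeg. The function names and file names are hypothetical, and whether the project concatenates audio this way is an assumption.

```python
from pathlib import Path
from typing import List, Sequence


def concat_manifest(segments: Sequence[Path]) -> str:
    """Build the manifest text read by ffmpeg's concat demuxer.

    Each line has the form: file '<path>'. A single quote inside a path
    is written as '\\'' (close quote, escaped quote, reopen quote).
    """
    lines = []
    for segment in segments:
        escaped = str(segment).replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def concat_command(manifest: Path, output: Path) -> List[str]:
    """Argument list for joining the listed clips without re-encoding.

    '-c copy' only works when all clips share the same codec and parameters;
    '-safe 0' lets the manifest reference absolute or otherwise "unsafe" paths.
    """
    return [
        "ffmpeg", "-y",
        "-f", "concat", "-safe", "0",
        "-i", str(manifest),
        "-c", "copy",
        str(output),
    ]


manifest_text = concat_manifest([Path("turn_000.wav"), Path("turn_001.wav")])
command = concat_command(Path("segments.txt"), Path("episode.wav"))
```

To actually produce the file, the manifest text would be written to `segments.txt` and the command passed to `subprocess.run(command, check=True)` on a machine with ffmpeg installed.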

Project Info & Setup

- GitHub Repo: run-llama/notebookllama

Use Cases

- Document Audio Exploration

  Interact with your PDFs, research papers, or manuals in conversational audio, backed by semantic indexing and retrieval.

- Podcast Content Generation

  Automatically convert PDFs or plain text into multi-voice, AI-narrated podcast episodes.

- Educational Demos & Research

  Perfect for learning or teaching LLM, NLP, TTS, and agent orchestration pipelines.

- Local Deployment & Privacy First

  Run everything locally with your own models and TTS tools, which suits compliance-sensitive or offline applications.
