Intern-S1 – A scientific multimodal foundation model released by Shanghai AI Lab

What is Intern-S1?

Intern-S1 is a scientific multimodal foundation model officially open-sourced by the Shanghai Artificial Intelligence Laboratory at the World Artificial Intelligence Conference. It combines language and multimodal capabilities in a balanced way and carries broad interdisciplinary scientific knowledge, which makes it particularly strong in scientific domains.

Intern-S1 pioneers the Cross-Modal Scientific Parsing Engine, enabling precise interpretation of complex scientific modalities such as chemical formulas, protein structures, and seismic wave signals. It can predict compound synthesis pathways, assess the feasibility of chemical reactions, and more. It has outperformed leading proprietary models on a variety of scientific benchmarks, showcasing exceptional capabilities in scientific reasoning and understanding.

The model achieves deep multimodal fusion through dynamic tokenizers and temporal signal encoders, and employs a hybrid general-specialized data synthesis approach. This enables both powerful general reasoning and state-of-the-art performance in specialized scientific tasks.

Main Features of Intern-S1

- Cross-modal scientific parsing
  - Chemistry: Accurately interprets chemical formulas, predicts synthesis routes, and evaluates reaction feasibility.
  - Biomedicine: Parses protein sequences to assist in drug target discovery and clinical value evaluation.
  - Earth Science: Recognizes and analyzes seismic wave signals to support earthquake research.
- Language and vision fusion: Combines language and visual inputs for complex multimodal tasks such as scientific visual question answering and explanation of scientific phenomena.
- Scientific data processing: Handles a wide range of complex data formats, such as light curves and gravitational wave signals in astronomy.
- Scientific problem-solving: Answers science-related questions by drawing on its extensive knowledge base and strong reasoning capabilities.
- Experimental design and optimization: Assists researchers in designing and optimizing experiments to improve research efficiency.
- Multi-agent collaboration: Supports coordination with other intelligent agents to complete complex research tasks collaboratively.
- Autonomous learning and evolution: Improves itself through continuous interaction with its environment.
- Data analysis tools: Offers tools for fast and efficient scientific data processing and analysis.
- Flexible deployment: Supports both local deployment and cloud-based services to meet diverse research application needs.
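As a rough illustration of the language and vision fusion and flexible deployment features above, the sketch below builds a request body in the widely used OpenAI-style chat format, which many local serving stacks accept. The source does not say which serving stack or endpoint Intern-S1 uses, so the OpenAI-compatible format, the helper name `build_chat_request`, and the use of the Hugging Face repository id as the model name are all assumptions. The sketch only constructs the payload and does not contact any server.

```python
import json


def build_chat_request(question, image_url=None, model="internlm/Intern-S1-FP8"):
    """Build an OpenAI-style chat payload (hypothetical helper, not an official API).

    The model name is assumed to match the Hugging Face repo id; a real
    deployment may register the model under a different name.
    """
    # A user turn is a list of typed content parts: text first, then an optional image.
    content = [{"type": "text", "text": question}]
    if image_url is not None:
        content.append({"type": "image_url", "image_url": {"url": image_url}})
    return {"model": model, "messages": [{"role": "user", "content": content}]}


# Text-only scientific question (the SMILES string is aspirin).
text_payload = build_chat_request(
    "Which functional groups are present in CC(=O)OC1=CC=CC=C1C(=O)O?"
)

# Scientific visual question answering: same structure plus an image part.
# The URL is a placeholder, not a real resource.
vqa_payload = build_chat_request(
    "What phenomenon does this plot show?",
    image_url="https://example.com/plot.png",
)

print(json.dumps(vqa_payload, indent=2))
```

The same payload could be sent to a locally hosted or cloud-hosted endpoint without changes, which is the practical meaning of supporting both deployment modes.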

Technical Architecture of Intern-S1

-

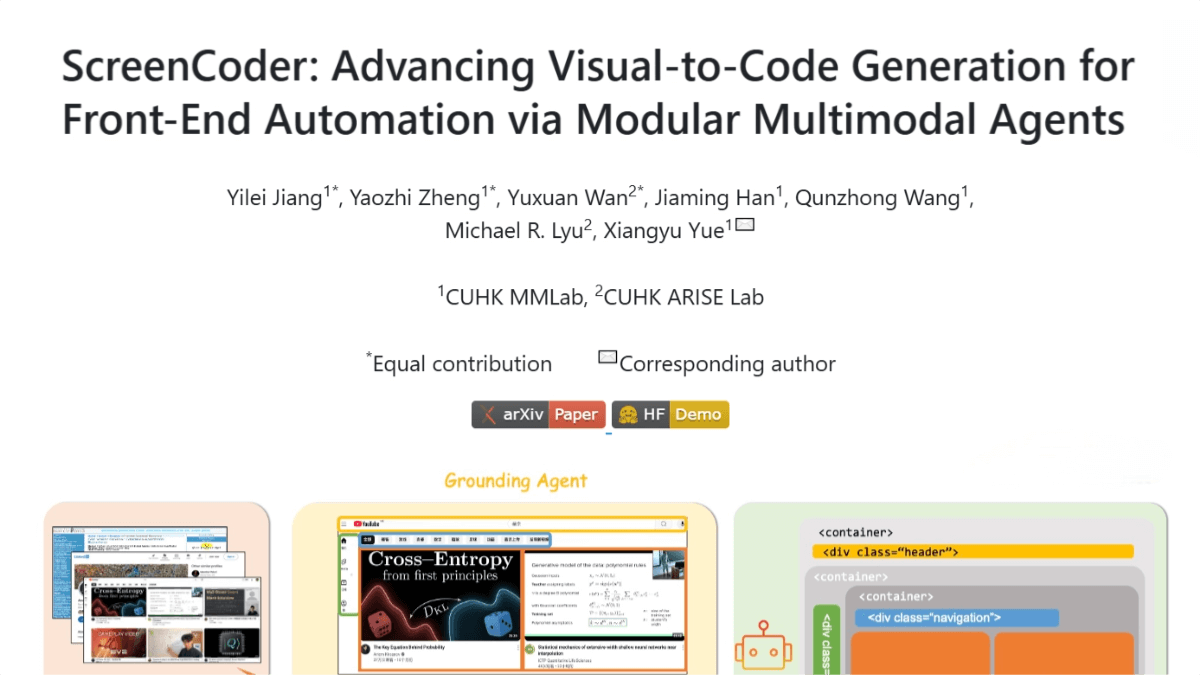

Innovative multimodal architecture

Utilizes newly introduced dynamic tokenizers and temporal signal encoders to process various complex scientific modalities—such as chemical formulas, protein sequences, light curves, gravitational wave signals, and seismic waveforms. It achieves deep understanding and efficient processing of such data, improving compression rates by over 70% compared to models like DeepSeek-R1 in chemical formula parsing. -

Large-scale scientific pretraining

Built on a 235B-parameter Mixture-of-Experts (MoE) language model and a 6B-parameter vision encoder, Intern-S1 is pretrained on 5 trillion multimodal tokens, with over 2.5 trillion from scientific domains. It excels in both general-purpose and domain-specific scientific tasks, including chemical structure analysis and protein sequence interpretation. -

Joint optimization of systems and algorithms

Achieves efficient, stable training of large multimodal MoE models under FP8 precision. Compared to other open MoE models, training costs are reduced by 90%. System-wise, it uses a training-inference separation strategy and a custom inference engine for large-scale asynchronous FP8 inference. Algorithm-wise, the new Mixture of Rewards learning mechanism combines multiple feedback signals to improve training stability and efficiency. -

Hybrid general-specialized data synthesis

To meet the demanding needs of high-value scientific tasks, Intern-S1 employs a hybrid approach. It uses large-scale general scientific data to broaden the model’s knowledge base, while specialized models generate highly readable scientific data that is quality-checked by domain-specific expert agents.
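To make the compression-rate claim above more concrete, here is a minimal sketch of how such a rate could be measured and compared. It assumes that "compression rate" means characters represented per token, which the source does not define. Both tokenizers are toy stand-ins invented for illustration: neither is Intern-S1's dynamic tokenizer nor DeepSeek-R1's tokenizer, and the list of chemistry units is hypothetical.

```python
import re


def char_tokenize(smiles):
    """Toy baseline: one token per character."""
    return list(smiles)


# Hypothetical multi-character units a chemistry-aware tokenizer might keep whole.
# Alternatives are tried left to right, so longer units come before the
# single-character fallback ".".
_CHEM_UNIT = re.compile(r"Cl|Br|\[[^\]]+\]|C\(=O\)O|C=C|.")


def chem_tokenize(smiles):
    """Toy chemistry-aware tokenizer: groups known units into single tokens."""
    return _CHEM_UNIT.findall(smiles)


def compression_rate(text, tokens):
    """Characters represented per token (assumed definition); higher is better."""
    return len(text) / len(tokens)


def relative_improvement(new_rate, old_rate):
    """Percentage improvement of new_rate over old_rate."""
    return (new_rate - old_rate) / old_rate * 100.0


smiles = "CC(=O)OC1=CC=CC=C1C(=O)O"  # aspirin in SMILES notation
base_rate = compression_rate(smiles, char_tokenize(smiles))
chem_rate = compression_rate(smiles, chem_tokenize(smiles))

print(f"baseline: {base_rate:.2f} chars/token")
print(f"chemistry-aware: {chem_rate:.2f} chars/token")
print(f"improvement: {relative_improvement(chem_rate, base_rate):.0f}%")
```

The numbers this toy produces say nothing about the real models. The point is only that a tokenizer that keeps domain-specific units whole needs fewer tokens for the same formula, and that the gain can be expressed as a relative improvement in characters per token.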

Project Links for Intern-S1

- Official Website: InternLM
- GitHub Repository: https://github.com/InternLM/Intern-S1
- Hugging Face Model Hub: https://huggingface.co/internlm/Intern-S1-FP8

Application Scenarios for Intern-S1

- Image and text fusion tasks: Describes content in images and explains scientific phenomena by integrating vision and language.
- Processing complex scientific modalities: Accepts complex data types as input, such as light curves or gravitational wave signals in astronomy, and fuses and processes them efficiently.
- Integration into scientific research tools: Enhances tools used by researchers for fast data processing and analysis.
- Answering scientific queries: Acts as an intelligent assistant that solves science-related problems using its reasoning ability and domain knowledge.
