What is Qwen3-30B-A3B-Thinking-2507

Qwen3-30B-A3B-Thinking-2507 is an open-source, reasoning-focused model developed by Alibaba’s Tongyi team and designed specifically for complex reasoning tasks. It is a Mixture-of-Experts (MoE) model with 30.5 billion total parameters, of which 3.3 billion are active per token, and it natively supports a 256K-token context window that can be extended to 1 million tokens. The model performs strongly in math, programming, and multilingual instruction following, with significantly improved reasoning capabilities. It also has strong general abilities in writing, dialogue, and tool use. Its lightweight design allows deployment on consumer-grade hardware, and it is already publicly available through Qwen Chat.

Key Features of Qwen3-30B-A3B-Thinking-2507

Advanced Reasoning Capabilities

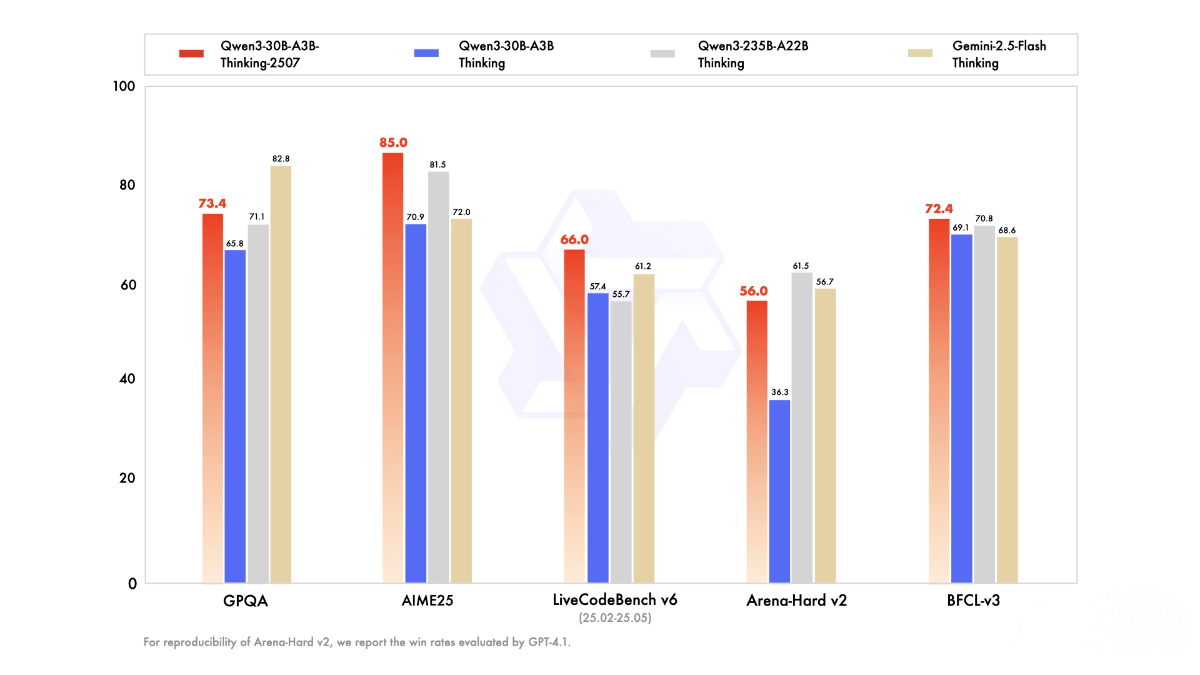

Performs exceptionally well on logical reasoning, math problem-solving, and scientific inference tasks. For example, it scores 85.0 on the AIME25 math benchmark and 66.0 on the LiveCodeBench v6 coding benchmark.

Enhanced General Capabilities

Follows instructions in multiple languages and can both understand and generate multilingual content.

Long-Context Comprehension

Natively supports a 256K-token (262,144-token) context length, extendable to 1M tokens, which makes it well suited to processing long-form content.

Optimized “Thinking Mode”

Uses a thinking mode that produces longer chains of reasoning before the final answer, improving performance on complex tasks.
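In practice, client code usually has to separate the reasoning from the final answer. The following is a minimal sketch, assuming the reasoning is closed by a `</think>` tag and that the opening `<think>` tag may be missing from the generated text because the chat template can place it in the prompt. `split_thinking` is a hypothetical helper, not part of any Qwen library.

```python
from typing import Tuple


def split_thinking(text: str, end_tag: str = "</think>") -> Tuple[str, str]:
    """Split raw model output into (reasoning, answer).

    Assumption: reasoning is terminated by `end_tag`. A leading "<think>"
    is stripped if present, since the opening tag may already be part of
    the prompt. If no end tag is found, the whole output is treated as
    unfinished reasoning (e.g. generation hit its token limit).
    """
    idx = text.rfind(end_tag)  # use the LAST end tag in case the reasoning mentions it
    if idx == -1:
        reasoning, answer = text, ""
    else:
        reasoning, answer = text[:idx], text[idx + len(end_tag):]
    reasoning = reasoning.strip()
    if reasoning.startswith("<think>"):
        reasoning = reasoning[len("<think>"):].strip()
    return reasoning, answer.strip()


raw = "Let me add 2 and 3.\n</think>\n\nThe answer is 5."
reasoning, answer = split_thinking(raw)
print(answer)  # The answer is 5.
```

Splitting on the last `</think>` keeps the answer intact even if the reasoning happens to quote the tag, and output without any closing tag is treated as truncated reasoning rather than as an answer.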

Tool Use & Agent Integration

Supports tool calling and integrates with agent frameworks such as Qwen-Agent to handle multi-step workflows.
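Tool use generally means the model emits a structured call that the host program then executes. As a minimal sketch, assuming each call is a JSON object with `name` and `arguments` keys wrapped in `<tool_call>...</tool_call>` tags (verify this against the chat template of the checkpoint you deploy, and prefer Qwen-Agent's or your serving stack's own parser in production), the host side might look like this. `parse_tool_calls`, `dispatch`, and the `add` tool are hypothetical.

```python
import json
import re
from typing import Any, Callable, Dict, List

# Assumed format: one JSON object per <tool_call> ... </tool_call> block.
TOOL_CALL_RE = re.compile(r"<tool_call>\s*(.*?)\s*</tool_call>", re.DOTALL)


def parse_tool_calls(text: str) -> List[Dict[str, Any]]:
    """Extract tool calls from model output; malformed blocks are skipped."""
    calls = []
    for payload in TOOL_CALL_RE.findall(text):
        try:
            obj = json.loads(payload)
        except json.JSONDecodeError:
            continue  # skip malformed JSON instead of crashing the agent loop
        if isinstance(obj, dict) and "name" in obj:
            calls.append({"name": obj["name"], "arguments": obj.get("arguments", {})})
    return calls


def dispatch(calls: List[Dict[str, Any]],
             registry: Dict[str, Callable[..., Any]]) -> List[Any]:
    """Run each call against local Python functions (raises KeyError for unknown tools)."""
    return [registry[c["name"]](**c["arguments"]) for c in calls]


output = '<tool_call>\n{"name": "add", "arguments": {"a": 2, "b": 3}}\n</tool_call>'
print(dispatch(parse_tool_calls(output), {"add": lambda a, b: a + b}))  # [5]
```

In a full agent loop, the tool results would be appended to the conversation as tool messages and the model called again to produce its final answer.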

Lightweight Deployment

Designed to run locally on consumer-grade hardware, making it accessible to developers in a wide range of settings.
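Although only about 3.3B parameters are active per token, all 30.5B normally have to be resident in memory (or offloaded), because different tokens route to different experts. The back-of-the-envelope estimate below uses the parameter counts stated above; it ignores the KV cache, activations, and quantization overhead such as scale factors, so real requirements are higher.

```python
TOTAL_PARAMS = 30.5e9   # total parameters (from the text)
ACTIVE_PARAMS = 3.3e9   # parameters active per token (from the text)

# Bytes per parameter at common precisions (raw weights only).
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_gb(num_params: float, precision: str) -> float:
    """Weight storage in gigabytes (1 GB = 1e9 bytes), ignoring quantization overhead."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    print(f"{precision}: {weight_gb(TOTAL_PARAMS, precision):.2f} GB of weights")
print(f"active share per token: {ACTIVE_PARAMS / TOTAL_PARAMS:.1%}")  # 10.8%
```

At 4-bit precision the weights alone come to roughly 15 GB, which illustrates why quantized builds are a common way to fit a model of this size on consumer hardware.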

Technical Highlights

Transformer Architecture

Built on a Transformer with 48 layers. Attention uses grouped-query attention (GQA), with 32 query heads sharing 4 key-value heads, which reduces the memory needed for the key-value cache.
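With 32 query heads and 4 key-value heads, each KV head serves a group of 32 / 4 = 8 query heads. The sketch below shows this grouping for a single decoding step in plain Python; it assumes contiguous grouping (query heads 0-7 share KV head 0, heads 8-15 share KV head 1, and so on) and omits batching, causal masking, and projections, so it illustrates the technique rather than reproducing the model's kernels.

```python
import math
from typing import List

Vec = List[float]


def kv_head_for(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """Map a query head to the KV head it shares (contiguous grouping assumed)."""
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


def attend(q: Vec, keys: List[Vec], values: List[Vec]) -> Vec:
    """Scaled dot-product attention for one query vector."""
    d = len(q)
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
    m = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]


def gqa(queries: List[Vec], keys: List[List[Vec]], values: List[List[Vec]]) -> List[Vec]:
    """queries: [n_q_heads][d]; keys/values: [n_kv_heads][seq_len][d]."""
    n_q, n_kv = len(queries), len(keys)
    assert n_q % n_kv == 0, "query heads must divide evenly into KV groups"
    return [attend(q, keys[kv_head_for(h, n_q, n_kv)], values[kv_head_for(h, n_q, n_kv)])
            for h, q in enumerate(queries)]


# Eight 0s, then eight 1s, eight 2s, and eight 3s.
print([kv_head_for(h, 32, 4) for h in range(32)])
```

Only the 4 KV heads' keys and values need to be computed and cached per layer, while each group of 8 query heads reuses them.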

Mixture of Experts (MoE)

Incorporates 128 expert modules. For each token, a learned router activates 8 of them, so only a small subset of the model's parameters is used for any given token, improving efficiency without reducing total capacity.
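A minimal sketch of top-k routing for one token follows. It assumes a softmax over all 128 router logits, selection of the 8 highest-probability experts, and renormalization of their weights; this is a generic MoE router, not Qwen's exact implementation. The random logits stand in for a learned router's output, and the scalar "experts" are hypothetical toys.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def route(logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Return (expert_index, weight) for the top-k experts; weights sum to 1."""
    m = max(logits)
    probs = [math.exp(x - m) for x in logits]
    total = sum(probs)
    probs = [p / total for p in probs]  # softmax over all experts
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]  # renormalize the selected weights


def moe_forward(x: float, logits: Sequence[float],
                experts: List[Callable[[float], float]], k: int) -> float:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(w * experts[i](x) for i, w in route(logits, k))


NUM_EXPERTS, TOP_K = 128, 8  # values from the text

rng = random.Random(0)
router_logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # stand-in router output
# Hypothetical toy experts: expert i multiplies its input by (i + 1).
experts = [lambda x, s=i + 1: s * x for i in range(NUM_EXPERTS)]

selected = route(router_logits, TOP_K)
print(len(selected), round(sum(w for _, w in selected), 6))  # 8 1.0
print(moe_forward(1.0, router_logits, experts, TOP_K))
```

Routing 8 of 128 experts touches 6.25% of the expert weights per token. The overall active share (3.3B of 30.5B, about 10.8%) is higher because components outside the experts, such as attention and embeddings, run for every token.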

Long-Context Memory

Reaches its extended context window through architectural and memory-management improvements, making it well suited to large documents and other long-context tasks.
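To make the long-context cost concrete: the key-value cache grows linearly with context length, at 2 (keys and values) × layers × KV heads × head dimension × tokens × bytes per element. The sketch below uses the 48 layers and 4 KV heads stated under Transformer Architecture; the head dimension of 128 and 2-byte (bf16) cache entries are assumptions, so check the model's `config.json` before relying on the numbers.

```python
def kv_cache_bytes(tokens: int, layers: int = 48, kv_heads: int = 4,
                   head_dim: int = 128, bytes_per_elem: int = 2) -> int:
    """Keys and values (factor 2) cached for every layer, KV head, and token.

    head_dim=128 and bytes_per_elem=2 (bf16) are assumptions, not from the text.
    """
    return 2 * layers * kv_heads * head_dim * tokens * bytes_per_elem


GIB = 1024 ** 3
CONTEXT = 262_144  # 256K tokens

gqa_bytes = kv_cache_bytes(CONTEXT)
mha_bytes = kv_cache_bytes(CONTEXT, kv_heads=32)  # hypothetical: one KV head per query head
print(f"per token: {kv_cache_bytes(1) // 1024} KiB")  # 96 KiB
print(f"256K tokens, GQA: {gqa_bytes / GIB:.0f} GiB")  # 24 GiB
print(f"256K tokens, MHA: {mha_bytes / GIB:.0f} GiB")  # 192 GiB
```

Under these assumptions, a full 256K-token sequence needs about 24 GiB of cache on top of the weights, one-eighth of what 32 KV heads would require, and the figure scales linearly to about 96 GiB at 1M (1,048,576) tokens.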

“Thinking Mode”

Uses longer reasoning paths and optimized inference strategies to improve depth and precision on complex problems.

Pretraining & Fine-Tuning

Undergoes extensive pretraining on diverse data sources followed by targeted fine-tuning for domain-specific performance.

Project Page

Hugging Face Model Hub: https://huggingface.co/Qwen/Qwen3-30B-A3B-Thinking-2507

Application Scenarios

AI Tutoring

Offers step-by-step reasoning for solving complex math and science problems, boosting learning efficiency and comprehension.

Software Development

Generates code snippets or complete frameworks from developers' descriptions and suggests optimizations to improve software quality.

Medical Literature Analysis

Rapidly interprets medical papers, extracts key insights, and summarizes findings to support clinical or research applications.

Creative Writing

Helps generate ideas, character arcs, dialogue, and story developments for novels, scripts, or ad copy, stimulating creativity.

Market Research

Analyzes data and generates industry insights, competitive analysis, and consumer trend reports, supporting data-driven business decisions.