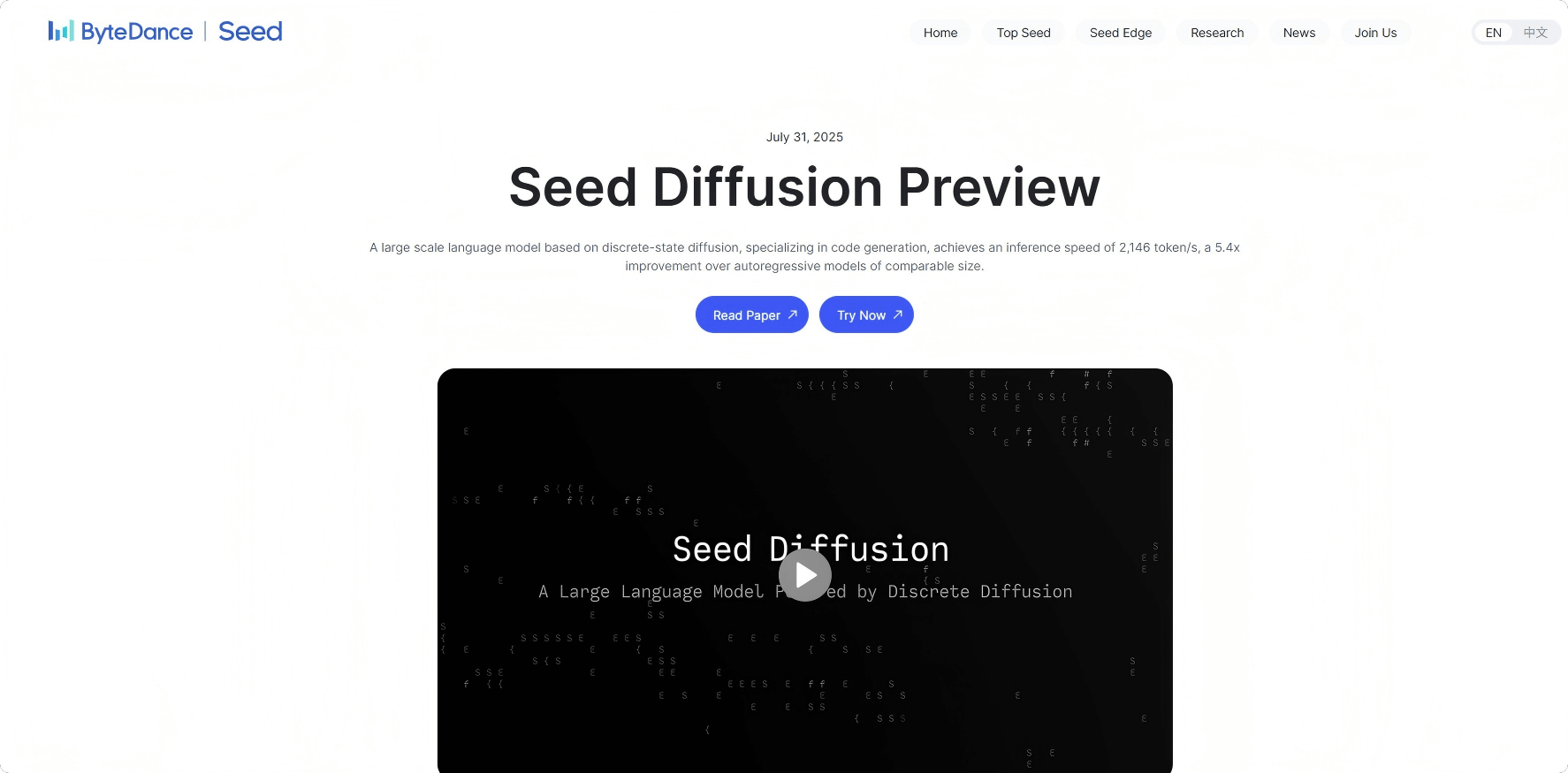

What is Seed Diffusion?

Seed Diffusion is an experimental diffusion language model from ByteDance’s Seed team, focused on code generation. It combines two-stage diffusion training, constrained-order learning, and on-policy learning for efficient parallel decoding to achieve substantial inference acceleration. It generates 2,146 tokens per second on H20 GPUs, 5.4 times faster than autoregressive models of the same scale. It performs comparably to autoregressive models on multiple code benchmarks and surpasses them on code editing tasks. Seed Diffusion illustrates the potential of discrete diffusion models as a foundation for the next generation of generative models.
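
Taken at face value, the two headline figures imply a throughput for the autoregressive baseline, and from that a latency gap for a single response. The snippet below is a back-of-the-envelope sketch. The 1,000-token output length is a hypothetical choice, not a number from the source. Real latency also depends on hardware, batch size, and prompt length.

```python
DIFFUSION_TPS = 2146.0  # reported Seed Diffusion throughput, tokens/s
SPEEDUP = 5.4           # reported speedup over same-scale autoregressive models
n_tokens = 1000         # hypothetical output length, chosen only for illustration

ar_tps = DIFFUSION_TPS / SPEEDUP  # implied autoregressive throughput

print(f"implied AR throughput: {ar_tps:.0f} tokens/s")  # 397 tokens/s
print(f"{n_tokens} tokens: diffusion {n_tokens / DIFFUSION_TPS:.2f} s, AR {n_tokens / ar_tps:.2f} s")  # 0.47 s vs 2.52 s
```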

Seed Diffusion’s Main Features

- Efficient Code Generation: Parallel decoding raises generation speed to 2,146 tokens per second, 5.4 times faster than autoregressive models of comparable scale.

- High-Quality Code Generation: Performs on par with autoregressive models across multiple code benchmarks and outperforms them on code editing tasks.

- Code Logic Understanding and Repair: Two-stage diffusion training (a masking phase followed by an editing phase) strengthens the model’s ability to understand and repair code logic.

- Flexible Generation Order: Incorporates structural priors of code into the generation order, so the model better respects causal dependencies such as declaring a variable before it is used.

Technical Principles of Seed Diffusion

- Two-Stage Diffusion Training: In the masking phase, a dynamic noise schedule replaces a portion of the code tokens with [MASK] tokens. This teaches the model local context and pattern completion. In this phase the unmasked tokens are always correct, so the model can learn a “spurious correlation” that every visible token can be trusted. The editing phase counters this by corrupting the input with insertions and deletions whose total edit distance is bounded. The model must then re-examine every token and correct it where needed, including tokens that look uncorrupted.

- Constrained Order Diffusion: Conventional diffusion models can generate tokens in an essentially random order. Seed Diffusion instead distills high-quality generation trajectories and trains on them, which guides the model toward orders that respect the correct dependency relationships in code.

- On-Policy Learning: Reduces the number of generation steps while preserving output quality, which enables efficient parallel decoding. A surrogate loss that penalizes the number of generation steps encourages the model to converge in fewer steps.

- Block-Level Parallel Diffusion Sampling: Code is generated block by block. Blocks are produced in causal (left-to-right) order, tokens within a block are sampled in parallel, and a KV cache reuses information from previously generated blocks to speed up generation.
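
The corruption used in the two training stages and the block-level sampling loop described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Seed Diffusion’s implementation. The following are all hypothetical:

- the uniform masking probability;
- the 50/50 choice between insertion and deletion;
- the rule of committing the most confident positions at each step;
- every name in the code (`mask_corrupt`, `edit_corrupt`, `levenshtein`, `decode_blockwise`, and the `oracle` function standing in for the model).

```python
import random

# Toy sketch: all names and noise/commit rules are illustrative assumptions,
# not Seed Diffusion's actual implementation.
MASK = "[MASK]"


def mask_corrupt(tokens, t, rng):
    """Masking phase: replace each token with [MASK] independently with probability t."""
    return [MASK if rng.random() < t else tok for tok in tokens]


def edit_corrupt(tokens, vocab, max_edits, rng):
    """Editing phase: apply at most `max_edits` random insertions/deletions, so the
    result stays within edit distance `max_edits` of the input."""
    out = list(tokens)
    for _ in range(rng.randint(0, max_edits)):
        if out and rng.random() < 0.5:
            del out[rng.randrange(len(out))]  # delete a random token
        else:
            out.insert(rng.randrange(len(out) + 1), rng.choice(vocab))  # insert one
    return out


def levenshtein(a, b):
    """Token-level edit distance (insert/delete/substitute), used to check the bound."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (x != y)))
        prev = cur
    return prev[-1]


def decode_blockwise(length, block_size, steps_per_block, predict):
    """Generate blocks left to right; inside a block, commit several [MASK] positions
    per step in parallel. `predict(prefix, block)` returns one (token, confidence)
    pair per block position. Returns the tokens and the number of predict calls."""
    out, calls = [], 0
    while len(out) < length:
        block = [MASK] * min(block_size, length - len(out))
        for step in range(steps_per_block):
            masked = [i for i, tok in enumerate(block) if tok == MASK]
            if not masked:
                break
            # `out` is frozen while this block is decoded; a real model would keep
            # its keys/values in a KV cache instead of re-encoding it on every call.
            guesses = predict(out, block)
            calls += 1
            # Commit the ceil(remaining / steps_left) most confident positions,
            # which guarantees the block is complete after its last step.
            k = -(-len(masked) // (steps_per_block - step))
            for i in sorted(masked, key=lambda p: -guesses[p][1])[:k]:
                block[i] = guesses[i][0]
        out.extend(block)
    return out, calls


# Usage on a tokenized one-line function.
rng = random.Random(0)
code = "def add ( a , b ) : return a + b".split()
masked_code = mask_corrupt(code, t=0.5, rng=rng)
noisy_code = edit_corrupt(code, vocab=code, max_edits=3, rng=rng)


def oracle(prefix, block):
    """Stand-in denoiser that knows the target and is most confident on the left."""
    start = len(prefix)
    return [(code[start + i], 1.0 / (i + 1)) for i in range(len(block))]


tokens, calls = decode_blockwise(len(code), block_size=4, steps_per_block=2, predict=oracle)
print(levenshtein(code, noisy_code) <= 3, tokens == code, calls)  # True True 6
```

The example uses 12 tokens, blocks of 4, and 2 steps per block. The oracle run needs 6 denoiser calls, whereas token-by-token autoregressive decoding would need 12. That reduction in sequential model calls, not the toy denoiser, is what block-level parallel sampling is meant to deliver.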

Project Links for Seed Diffusion

- Official Website: https://seed.bytedance.com/zh/seed_diffusion

- Technical Paper: https://lf3-static.bytednsdoc.com/obj/eden-cn/hyvsmeh7uhobf/sdiff_updated.pdf

- Online Demo: https://studio.seed.ai/exp/seed_diffusion/

Application Scenarios of Seed Diffusion

- Automated Code Generation: Quickly generates code prototypes, helping developers get projects started efficiently.

- Code Editing and Optimization: Automatically detects and fixes code errors, optimizes code performance, and improves code quality.

- Education and Training: Generates sample code for programming education, helping students grasp coding concepts quickly.

- Software Development Collaboration: Acts as a team development assistant, providing code completion and suggestions to improve development efficiency.

- Intelligent Programming Assistant: When integrated into IDEs, provides intelligent code generation and optimization features that improve the development experience.