SkyReels-A3 – A digital human video generation model launched by Kunlun Wanwei

What is SkyReels-A3?

SkyReels-A3 is a digital human video generation model launched by Kunlun Wanwei. It is built on the DiT (Diffusion Transformer) video diffusion architecture and combines it with frame interpolation, reinforcement learning, and camera movement control. Driven by audio, the model can “activate” a person in a photo or video so that they speak or perform: users upload a portrait image and an audio clip, and the model generates natural, smooth video. It supports single-shot outputs up to 60 seconds long and multi-shot creation of unlimited length. Its strengths are lip-sync accuracy, natural motion, and camera movement effects, which make it suitable for advertising, live streaming, music videos, and similar scenarios where content needs to be produced efficiently and at low cost. The model is available on the SkyReels platform and can be accessed via Talking Avatar.

Main Features of SkyReels-A3

- Photo Activation: Upload a portrait photo and audio; the person in the photo will speak or sing according to the audio.
- Video Creation: Input portrait images, audio, and text prompts; the model generates performance videos that meet the requirements.
- Video Dialogue Replacement: Replace the original video’s audio, and the character automatically syncs lip movements, expressions, and performances, ensuring seamless visuals.
- Motion Interaction: Supports natural interactive motions, such as interacting with products or hand gestures while speaking.
- Camera Movement Control: Provides multiple camera movement effects (e.g., zoom, pan, tilt, rise/fall), allowing users to adjust the intensity for professional-quality videos.
- Long Video Generation: Supports single-shot videos up to 60 seconds and unlimited length for multi-shot videos to meet diverse needs.
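
The 60-second single-shot limit and the unlimited multi-shot length imply that a long audio track has to be spread across several shots. The source does not describe how SkyReels-A3 divides shots, so the following is only a minimal sketch that assumes simple fixed-length cuts; `Shot` and `plan_shots` are hypothetical names used for illustration and are not part of the SkyReels platform.

```python
from dataclasses import dataclass

# Single-shot limit stated in the feature list above.
MAX_SHOT_SECONDS = 60.0


@dataclass
class Shot:
    """One contiguous segment of the audio track (hypothetical helper type)."""
    index: int
    start: float  # seconds from the beginning of the audio
    end: float    # seconds from the beginning of the audio

    @property
    def duration(self) -> float:
        return self.end - self.start


def plan_shots(total_seconds: float, max_shot: float = MAX_SHOT_SECONDS) -> list[Shot]:
    """Split an audio track into consecutive shots no longer than max_shot.

    Assumption: shots are cut at fixed intervals. A real pipeline would more
    likely cut at pauses or scene changes.
    """
    if total_seconds <= 0 or max_shot <= 0:
        raise ValueError("durations must be positive")

    shots: list[Shot] = []
    start = 0.0
    while start < total_seconds:
        end = min(start + max_shot, total_seconds)
        shots.append(Shot(index=len(shots), start=start, end=end))
        start = end
    return shots


if __name__ == "__main__":
    # A 150-second track becomes two full shots and one shorter final shot.
    for shot in plan_shots(150.0):
        print(shot.index, shot.start, shot.end, shot.duration)
```

Under this assumption the number of shots grows with the audio length, while no individual shot exceeds the single-shot limit.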

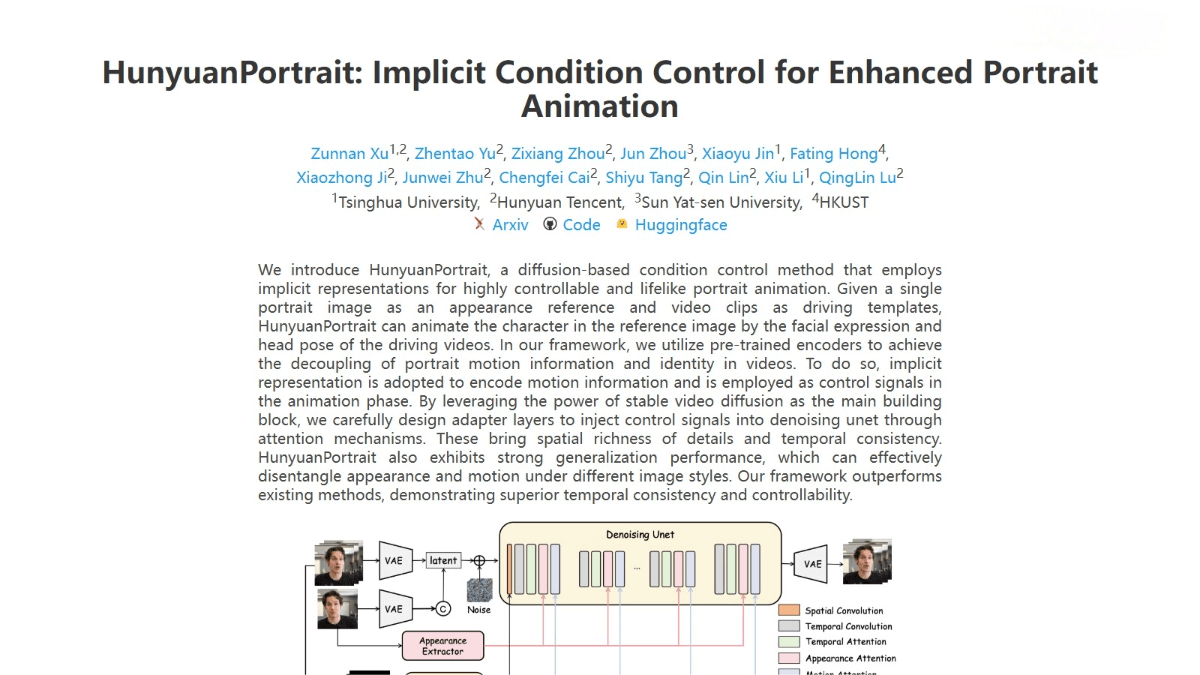

Technical Principles of SkyReels-A3

- Architecture: Built on a DiT (Diffusion Transformer) video diffusion model, which replaces the traditional U-Net backbone with a Transformer to capture long-range dependencies.
- 3D-VAE Encoding: Uses a 3D variational autoencoder (3D-VAE) to compress video data spatially and temporally into a compact latent representation, reducing computational load.
- Frame Interpolation & Extension: Uses frame interpolation models to extend video length for long-duration generation.
- Reinforcement Learning Optimization: Incorporates reinforcement learning to improve the naturalness of character movements and interactions.
- Camera Movement Control Module: Based on a ControlNet structure; extracts depth information from reference images and combines it with camera parameters to generate videos with camera movement effects.
- Multimodal Input: Supports images, audio, and text prompts as inputs, enabling highly controllable video generation.
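
To make the computational benefit of the 3D-VAE step concrete, the sketch below works out how much smaller a latent tensor is than the raw video. The compression factors (4× in time, 8× in height and width) and the 16 latent channels are assumptions chosen for illustration; the source does not state the values SkyReels-A3 uses, and `latent_shape` and `compression_ratio` are hypothetical helper names.

```python
import math

# Assumed values for illustration only; not taken from the SkyReels-A3 source.
TEMPORAL_FACTOR = 4   # frames merged per latent time step
SPATIAL_FACTOR = 8    # pixels merged per latent cell along height and width
LATENT_CHANNELS = 16  # channels in the latent representation
RGB_CHANNELS = 3


def latent_shape(frames: int, height: int, width: int) -> tuple[int, int, int, int]:
    """Return the (time, channels, height, width) shape of the latent tensor."""
    return (
        math.ceil(frames / TEMPORAL_FACTOR),
        LATENT_CHANNELS,
        math.ceil(height / SPATIAL_FACTOR),
        math.ceil(width / SPATIAL_FACTOR),
    )


def compression_ratio(frames: int, height: int, width: int) -> float:
    """Ratio of raw RGB video elements to latent elements."""
    raw_elements = frames * RGB_CHANNELS * height * width
    latent_elements = math.prod(latent_shape(frames, height, width))
    return raw_elements / latent_elements


if __name__ == "__main__":
    # 96 frames at 512x512 under the assumed factors.
    print(latent_shape(96, 512, 512))
    print(compression_ratio(96, 512, 512))
```

With these assumed factors the diffusion Transformer operates on roughly 48 times fewer values than the raw video contains, which is where the reduction in computational load comes from.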

Project Link

- Official website: https://skyworkai.github.io/skyreels-a3.github.io/

Application Scenarios of SkyReels-A3

- Advertising & Marketing: Generate dynamic ad videos featuring celebrity likenesses or product displays to enhance brand promotion.
- E-commerce Live Streaming: Support virtual live streaming and sales videos, reducing the host’s workload and enhancing audience interaction.
- Film & Entertainment: Produce music videos, movie clips, or animations to improve artistic appeal and audience immersion.
- Education & Training: Create virtual teachers explaining courses or demonstrating operations, increasing teaching engagement and efficiency.
- News Media: Produce virtual anchors for news broadcasts or special reports, enhancing news timeliness and diversity.
- Personal Creation & Entertainment: Users upload personal photos and audio to generate personalized creative videos, such as birthday greetings or wedding videos.
