A New Global Benchmark for Multimodal Reasoning: GLM-4.5V Officially Launched and Open-Sourced

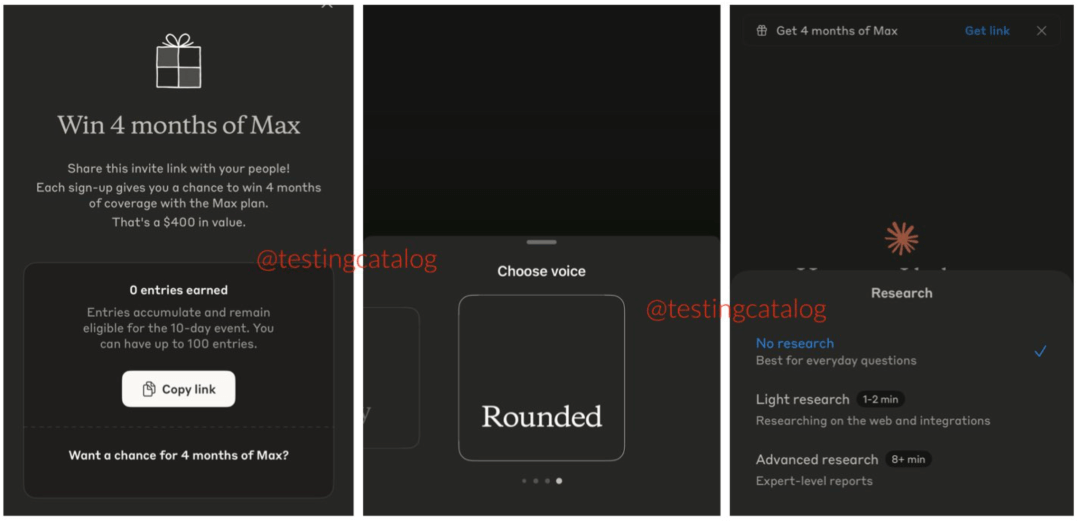

Zhipu has released and open-sourced GLM-4.5V, a vision reasoning model with 106B total parameters and 12B active parameters, which the company describes as the best-performing open-source model of its kind at the 100B-parameter scale. Built on Zhipu’s next-generation text foundation model GLM-4.5-Air, it reports state-of-the-art performance on 41 public visual multimodal benchmarks. It supports image, video, and document understanding, as well as GUI agent tasks.
