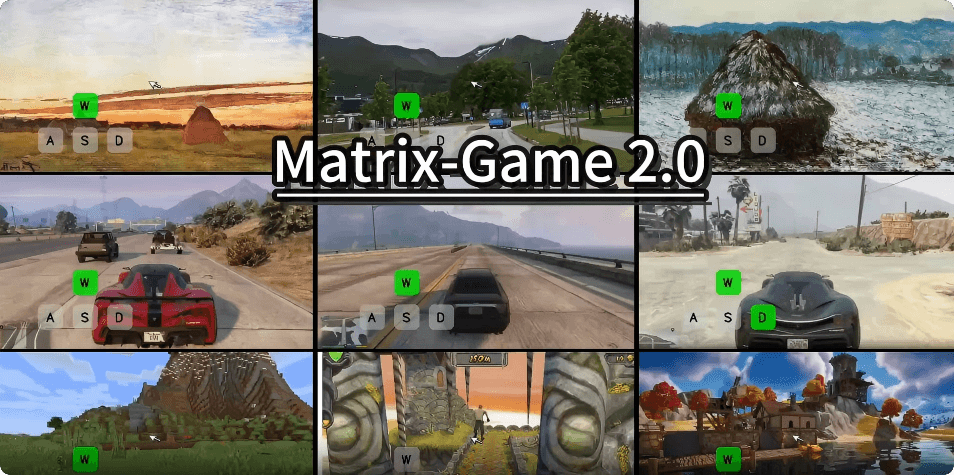

What is Matrix-Game 2.0?

Matrix-Game 2.0 is a world model developed in-house and released by Kunlun Wanwei's Skywork AI. It is billed as the industry's first open-source, general-purpose interactive generative model that runs in real time over long sequences in complex scenarios, and it is fully open-sourced to drive the development of interactive world models. The model takes a vision-driven approach to interaction, combining a 3D Causal Variational Autoencoder (3D Causal VAE) with a multimodal Diffusion Transformer (DiT) architecture to deliver low-latency, high-frame-rate interaction over long sequences. It can generate continuous video at 25 FPS for durations of several minutes. Because it models physical laws and scene semantics, users can manipulate virtual environments with simple commands, which makes it suitable for applications such as game development, virtual reality, and film production.

Key Features of Matrix-Game 2.0

- Real-time long-sequence generation: Stably generates continuous video at 25 FPS across diverse and complex scenarios, with durations extending to several minutes, which improves coherence and practicality.
- Precise interactive control: Users can freely explore and manipulate virtual environments with simple commands (e.g., keyboard arrow keys, mouse operations), and the model responds accurately to each input.
- Vision-driven modeling: Adopts a vision-centric approach to interactive world modeling that understands and generates virtual worlds through visual perception and physical laws, avoiding the semantic bias introduced by language priors.
- Multi-scenario generalization: Adapts across domains, supporting environments such as urban and wilderness spaces and visual styles such as realistic and oil painting.
- Enhanced physical consistency: Characters move in physically plausible ways when facing complex terrain such as stairs or obstacles, which improves immersion and controllability.
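To put the 25 FPS figure in perspective, the arithmetic below works out the per-frame time budget and the number of frames in a long clip. It is a back-of-the-envelope sketch only: the 3-minute clip length is an assumed reading of "several minutes", and the helper names are made up for illustration.

```python
def frame_budget_ms(fps: int) -> float:
    """Wall-clock time available to produce one frame at the given frame rate."""
    return 1000.0 / fps


def total_frames(minutes: float, fps: int) -> int:
    """Number of frames in a clip of the given length."""
    return round(minutes * 60 * fps)


FPS = 25  # frame rate stated in the text
ASSUMED_MINUTES = 3  # assumption: one possible reading of "several minutes"

print(frame_budget_ms(FPS))  # 40.0
print(total_frames(ASSUMED_MINUTES, FPS))  # 4500
```

At 25 FPS the model has, on average, about 40 ms per frame, and a 3-minute session spans 4,500 frames, which is why long-sequence stability matters as much as raw speed.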

Technical Principles of Matrix-Game 2.0

- Vision-driven interactive world modeling: Uses an image-centered perception and generation mechanism that learns from visual understanding and physics, aiming to construct virtual worlds more realistically and accurately than generation driven by language prompts.
- 3D Causal VAE: Compresses video along both the spatial and temporal dimensions, encoding and decoding spatiotemporal features to reduce computational cost while preserving essential information.
- Multimodal Diffusion Transformer (DiT): Combines visual encoder features with user action inputs to generate physically coherent visual sequences frame by frame, which the 3D Causal VAE then decodes into full video.
- Autoregressive diffusion generation mechanism: Trained with a Self-Forcing strategy, the model generates the current frame from historical frames only. This avoids the latency of traditional bidirectional diffusion models and reduces error accumulation.
- Distribution Matching Distillation (DMD): Minimizes the distribution gap between the student model and the base model so that the student generates high-quality frames, and aligns the training and inference distributions to mitigate error accumulation.
- KV cache mechanism: Caches attention keys and values to improve efficiency and consistency in long video generation, enabling seamless rolling generation with no fixed limit on video length.
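The autoregressive and KV cache items above can be illustrated with a toy rollout loop. This is a minimal sketch under stated assumptions, not the Matrix-Game 2.0 implementation: `toy_next_frame` is a hypothetical stand-in for the causal denoiser, a frame is reduced to an (x, y) position, an action is a (dx, dy) step, and a bounded `deque` plays the role of the rolling cache.

```python
from collections import deque
from typing import Deque, List, Sequence, Tuple

Frame = Tuple[float, float]   # toy "frame": just a camera position (assumption)
Action = Tuple[float, float]  # toy action: a (dx, dy) movement command (assumption)


def toy_next_frame(history: Sequence[Frame], action: Action) -> Frame:
    """Hypothetical stand-in for the causal model: it sees past frames only."""
    last_x, last_y = history[-1]
    return (last_x + action[0], last_y + action[1])


def rollout(first_frame: Frame, actions: Sequence[Action], window: int = 4):
    """Generate one frame per action, keeping only the last `window` frames cached."""
    # Rolling cache: once full, appending evicts the oldest entry,
    # so memory stays constant however long the rollout runs.
    cache: Deque[Frame] = deque([first_frame], maxlen=window)
    frames: List[Frame] = [first_frame]
    for action in actions:
        frame = toy_next_frame(list(cache), action)  # conditioned on history only
        cache.append(frame)
        frames.append(frame)
    return frames, len(cache)


if __name__ == "__main__":
    frames, cache_len = rollout((0.0, 0.0), [(1.0, 0.0)] * 10, window=4)
    print(len(frames), cache_len, frames[-1])
```

The point of the sketch is the shape of the loop: each new frame depends only on cached history plus the current action, and the cache size is fixed, so the rollout length is not bounded by memory. In a real model the cache would hold attention keys and values rather than frames.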

Project Links

- Official website: https://matrix-game-v2.github.io/
- GitHub repository: https://github.com/SkyworkAI/Matrix-Game
- Hugging Face model hub: https://huggingface.co/Skywork/Matrix-Game-2.0
- Technical report: https://github.com/SkyworkAI/Matrix-Game/blob/main/Matrix-Game-2/assets/pdf/report.pdf

Application Scenarios

- Game Development: Generates physically coherent, highly realistic interactive video in diverse game environments, supporting dynamic character actions and scene interactions, such as simulating vehicle operation or character movement in GTA-like or Minecraft-like scenarios.
- Virtual Reality: Creates high-quality virtual environments in real time that users can freely explore and manipulate, providing technical support for VR applications.
- Film Production: Rapidly generates high-quality virtual scenes and dynamic content, helping production teams create complex visual effects and animated environments efficiently.
- Embodied AI: Supports embodied agent training and data generation, offering an efficient way to train and test agents in virtual environments.
- Virtual Humans and Intelligent Interaction Systems: Its real-time interactivity and understanding of physical rules make it well suited to virtual humans and intelligent interactive systems that need natural, smooth actions and responses.
