CombatVLA – a 3D action game–specific VLA model launched by Taotian Group

What is CombatVLA?

CombatVLA is an efficient Vision-Language-Action (VLA) model developed by the Future Life Laboratory team at Taotian Group, specifically designed for combat tasks in 3D action role-playing games (ARPGs). Built on a 3B-parameter scale, the model is trained using video-action pairs collected via motion trackers, with data formatted into Action-of-Thought (AoT) sequences. Using a three-stage progressive learning paradigm—from video-level to frame-level to truncated strategies—the model achieves highly efficient reasoning. On combat understanding benchmarks, CombatVLA outperforms existing models, delivering 50x faster inference speed and a task success rate exceeding that of human players.

Key Features

-

Efficient combat decision-making: Capable of making real-time combat decisions in complex 3D game environments, such as dodging attacks, casting skills, or restoring health—achieving decision speeds up to 50x faster than traditional models.

-

Combat understanding and reasoning: Evaluates enemy states, predicts enemy attack intentions, and reasons out the optimal combat actions, significantly surpassing other models in battle comprehension.

-

Action command generation: Outputs executable keyboard and mouse operation instructions (e.g., pressing specific keys or performing mouse actions) to control in-game characters.

-

Generalization ability: Demonstrates strong generalization across varying task difficulties and different games, effectively executing combat tasks even in unseen game scenarios.

Technical Principles of CombatVLA

-

Motion tracker: Collects human player operation data (keyboard and mouse inputs) synchronized with in-game visuals to generate video-action pair datasets.

-

Action-of-Thought (AoT) sequences: Converts collected data into AoT sequences, where each action is paired with detailed explanations to help the model understand the semantics and logic of actions.

-

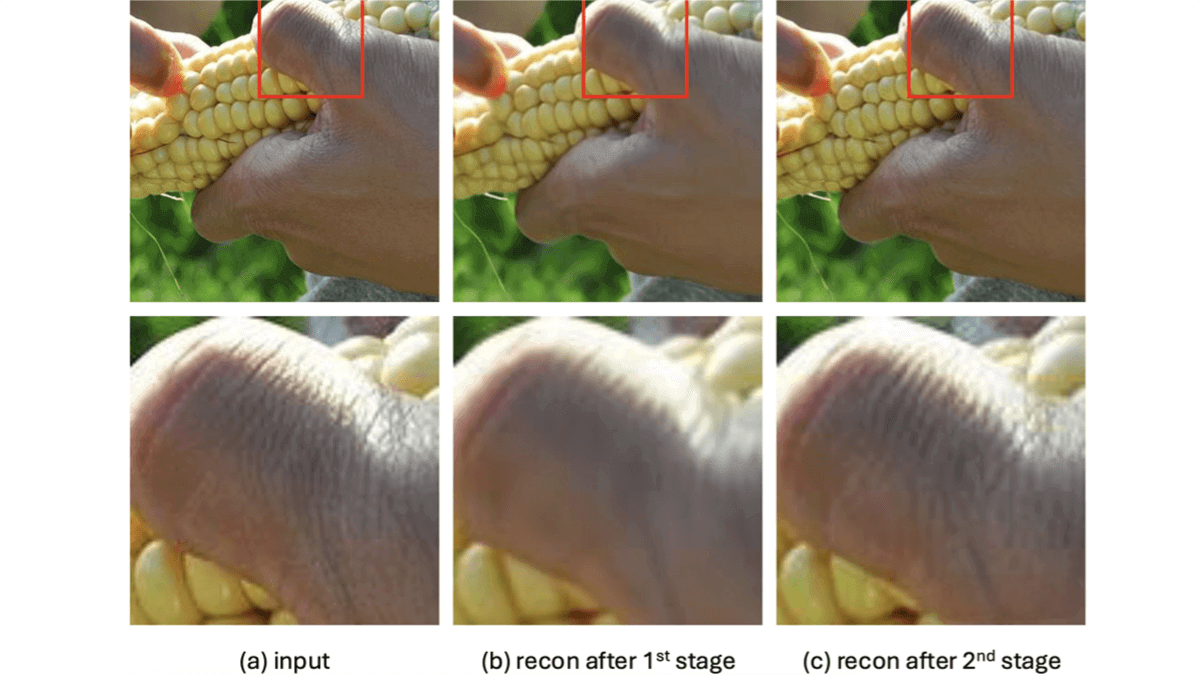

Three-stage progressive learning:

-

Stage 1: Video-level AoT fine-tuning for initial understanding of the combat environment.

-

Stage 2: Frame-level AoT fine-tuning to ensure strict alignment between actions and preceding frames.

-

Stage 3: Frame-level truncated AoT fine-tuning, introducing a special

<TRUNC>token to truncate outputs for faster inference.

-

-

Adaptive action-weighted loss: Combines action-alignment loss and modality-contrastive loss to optimize training and ensure accurate action output.

-

Action execution framework: Converts model-generated action instructions into actual keyboard and mouse operations to control game characters automatically.

Project Links

-

Official Website: https://combatvla.github.io/

-

GitHub Repository: https://github.com/ChenVoid/CombatVLA

-

arXiv Paper: https://arxiv.org/pdf/2503.09527

Application Scenarios of CombatVLA

-

3D ARPG gameplay: Real-time control of game characters during combat, enabling efficient decision-making and action execution for enhanced gameplay.

-

Game testing and optimization: Assists developers in testing combat systems, identifying issues, and optimizing game mechanics.

-

Esports training: Provides intelligent opponents for professional players, supporting practice in combat strategies and skill improvement.

-

Game content creation: Helps developers generate combat scenarios and narratives, accelerating the construction of complex levels and missions.

-

Robotics control: Extends to real-world robotics, enabling robots to make rapid decisions and execute actions in dynamic environments.

Related Posts