What is Waver 1.0?

Waver 1.0 is ByteDance’s next-generation video generation model, built on a rectified flow Transformer architecture. It handles text-to-video (T2V), image-to-video (I2V), and text-to-image (T2I) generation in a single unified framework, so users do not need to switch between models. It generates output at resolutions of up to 1080p and video lengths from 2 to 10 seconds. The model excels at capturing complex motion, producing videos with large motion amplitude and strong temporal consistency. On Waver-Bench 1.0 and the Hermes motion benchmark, Waver 1.0 outperforms existing open-source and closed-source models. It also supports multiple artistic styles, including photorealism, animation, clay, plush, and more.
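For readers unfamiliar with rectified flow: the idea is to connect each data sample to a noise sample with a straight line and train the network to predict the constant velocity along that line; sampling then integrates the velocity from noise back to data. The NumPy sketch below illustrates the training objective and a plain Euler sampler. It is a minimal illustration, not Waver's code: it assumes the convention x_t = (1 - t)·x0 + t·noise (t = 0 is data, t = 1 is noise), and `velocity_fn` and `oracle_velocity` are hypothetical stand-ins for the transformer.

```python
import numpy as np

def interpolate(x0, noise, t):
    """Point on the straight path between data x0 (t=0) and noise (t=1)."""
    return (1.0 - t) * x0 + t * noise

def velocity_target(x0, noise):
    """Training target d(x_t)/dt, which is constant along the straight path."""
    return noise - x0

def flow_matching_loss(velocity_fn, x0, rng):
    """Mean-squared error between predicted and target velocity at a random t."""
    noise = rng.standard_normal(x0.shape)
    t = rng.uniform()
    x_t = interpolate(x0, noise, t)
    pred = velocity_fn(x_t, t)
    return float(np.mean((pred - velocity_target(x0, noise)) ** 2))

def euler_sample(velocity_fn, noise, num_steps=50):
    """Integrate dx/dt = v(x, t) from t=1 (noise) down to t=0 (data)."""
    x = noise.copy()
    ts = np.linspace(1.0, 0.0, num_steps + 1)
    for t_cur, t_next in zip(ts[:-1], ts[1:]):
        x = x + (t_next - t_cur) * velocity_fn(x, t_cur)
    return x

# Usage: a hypothetical "oracle" that knows the single data point it should reach.
target = np.array([1.0, -2.0, 0.5])

def oracle_velocity(x, t):
    # Exact velocity of the straight path through x that ends at `target` when t=0.
    return (x - target) / t

rng = np.random.default_rng(0)
sample = euler_sample(oracle_velocity, rng.standard_normal(3), num_steps=10)
loss = flow_matching_loss(oracle_velocity, target, np.random.default_rng(1))
```

Because the oracle velocity points exactly along the straight path, the Euler sampler lands on `target` for any step count and the loss is essentially zero; a trained network only approximates this velocity, which is why the number of sampling steps matters in practice.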

Key Features of Waver 1.0

- Unified Generation: Supports T2V, I2V, and T2I generation within a single framework, with no model switching required.

- High Resolution & Flexible Length: Outputs up to 1080p with adjustable resolutions, aspect ratios, and durations between 2 and 10 seconds.

- Complex Motion Modeling: Captures intricate motion with high motion amplitude and strong temporal consistency.

- Multi-Shot Storytelling: Produces coherent multi-shot narrative videos with consistent themes, visual style, and atmosphere.

- Artistic Style Support: Generates videos in diverse artistic styles such as photorealism, animation, clay, and plush.

- Performance Advantage: Outperforms existing open- and closed-source models on the Waver-Bench 1.0 and Hermes benchmarks.

- Inference Optimization: Uses APG (Adaptive Projected Guidance) to reduce oversaturation and artifacts and to enhance realism.

- Training Strategy: Begins training on low-resolution videos, then gradually increases resolution to optimize motion modeling.

- Prompt Tagging: Employs labeled prompts to distinguish data types, improving generation quality and accuracy.

Technical Principles of Waver 1.0

-

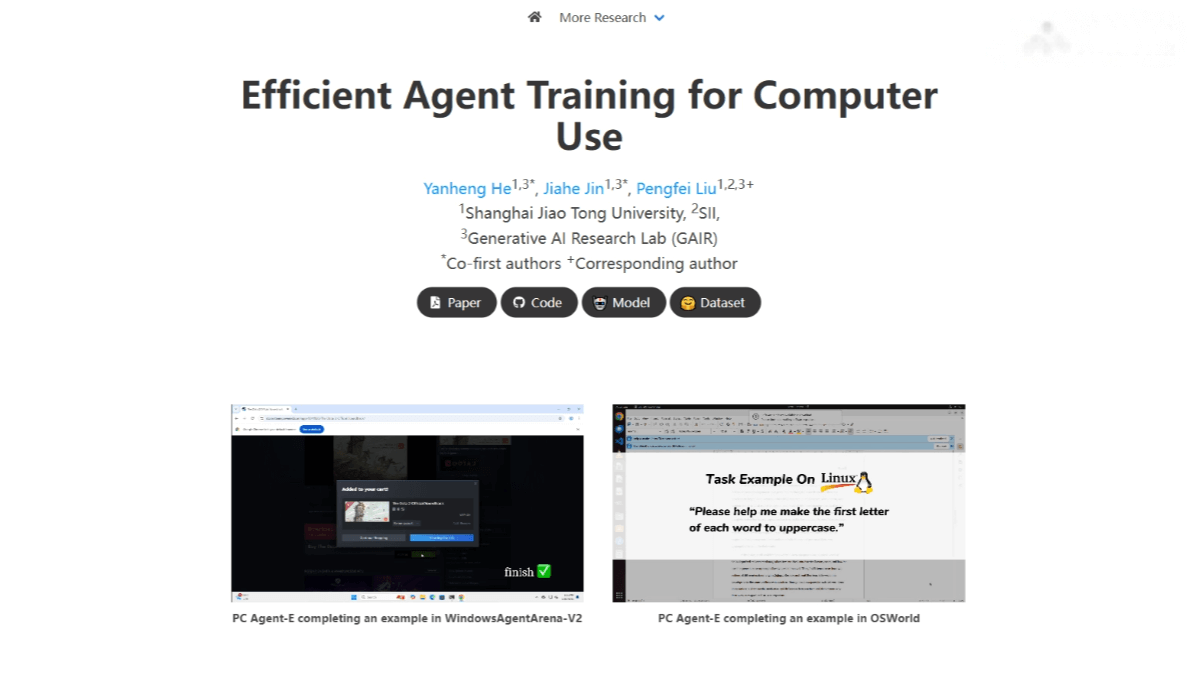

Model Architecture: Waver 1.0 adopts a Hybrid Stream DiT (Diffusion Transformer) architecture. It uses Wan-VAE for compressed video latent variables and integrates flan-t5-xxl and Qwen2.5-32B-Instruct for text features. Video and text modalities are fused via a dual-stream + single-stream approach.

- 1080p Generation: Waver-Refiner, a DiT trained with flow matching, produces the 1080p output. A low-resolution video (480p or 720p) is upsampled to 1080p, noise is added, and the refiner then denoises it into a high-quality 1080p result. Windowed attention and a reduced number of inference steps make this stage significantly faster.

- Training Methods: Training starts at 192p, where a large amount of compute is spent on learning motion, and then scales progressively to 480p and 720p. Following SD3's flow-matching setup, the sigma shift value is increased as training moves to higher resolutions.

- Prompt Tagging: Different types of training data are distinguished by style and quality labels in their prompts. During inference, negative prompts (e.g., “low resolution” or “slow motion”) are added to suppress poor-quality outputs.

- Inference Optimization (APG): APG (Adaptive Projected Guidance) decomposes the CFG (Classifier-Free Guidance) update into components parallel and orthogonal to the conditional prediction and reduces the weight of the parallel component. This prevents oversaturation at high guidance scales and reduces artifacts, improving realism.
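The Model Architecture item describes fusing video and text through dual-stream and then single-stream blocks. The toy NumPy sketch below illustrates that general pattern as it is commonly implemented in MMDiT-style models, under stated assumptions: in a dual-stream block each modality keeps its own projection weights but attention runs jointly over the concatenated video and text tokens; in a single-stream block the tokens are concatenated once and share one set of weights. The hidden size, block counts, single-head attention, the absence of MLPs and normalization, and the assumption that text features are already projected to the same width are all simplifications, not Waver's actual configuration.

```python
import numpy as np

HIDDEN = 16  # toy hidden size (hypothetical)
rng = np.random.default_rng(0)

def attention(q, k, v):
    """Single-head scaled dot-product attention over all tokens."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v

def init_qkv():
    """Random query/key/value projection matrices for one stream."""
    return [rng.standard_normal((HIDDEN, HIDDEN)) / np.sqrt(HIDDEN) for _ in range(3)]

def dual_stream_block(video, text, video_qkv, text_qkv):
    """Separate weights per modality, joint attention, residual update per stream."""
    qv, kv, vv = (video @ w for w in video_qkv)
    qt, kt, vt = (text @ w for w in text_qkv)
    out = attention(np.concatenate([qv, qt]),
                    np.concatenate([kv, kt]),
                    np.concatenate([vv, vt]))
    n_video = video.shape[0]
    return video + out[:n_video], text + out[n_video:]

def single_stream_block(tokens, shared_qkv):
    """One shared set of weights over the already-concatenated sequence."""
    q, k, v = (tokens @ w for w in shared_qkv)
    return tokens + attention(q, k, v)

# Hypothetical depth: 2 dual-stream blocks followed by 2 single-stream blocks.
dual_params = [(init_qkv(), init_qkv()) for _ in range(2)]
single_params = [init_qkv() for _ in range(2)]

def hybrid_stream_forward(video, text):
    for video_qkv, text_qkv in dual_params:
        video, text = dual_stream_block(video, text, video_qkv, text_qkv)
    tokens = np.concatenate([video, text])  # switch from two streams to one
    for shared_qkv in single_params:
        tokens = single_stream_block(tokens, shared_qkv)
    return tokens[: video.shape[0]]  # keep only the video tokens

video_tokens = rng.standard_normal((12, HIDDEN))  # e.g. 12 latent patches
text_tokens = rng.standard_normal((5, HIDDEN))    # e.g. 5 projected text-feature tokens
out = hybrid_stream_forward(video_tokens, text_tokens)
```

The design trade-off this illustrates: separate weights early let each modality keep its own representation while still exchanging information through joint attention, and sharing weights later saves parameters once the two streams are aligned.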
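The 1080p Generation item describes the refiner's starting point: instead of beginning from pure noise, the low-resolution result is upsampled and only partially noised, so the refiner has to integrate just the remaining part of the flow. The sketch below shows that idea on a toy array standing in for the video (or its latent). It assumes the same x_t = (1 - t)·x + t·noise convention, nearest-neighbour upsampling with an integer factor via `np.repeat`, and a hypothetical starting time `t_start`; the real 480p-to-1080p ratio (2.25x) is not an integer, so an actual pipeline would use a proper interpolation method, and the source does not give Waver's noise level or step count.

```python
import numpy as np

def upsample_nearest(video, factor):
    """Nearest-neighbour spatial upsampling of a (frames, height, width, channels) array."""
    return np.repeat(np.repeat(video, factor, axis=1), factor, axis=2)

def refiner_init(low_res, factor, t_start, rng):
    """Upsample, then move the result part of the way toward noise along the flow path."""
    upsampled = upsample_nearest(low_res, factor)
    noise = rng.standard_normal(upsampled.shape)
    return (1.0 - t_start) * upsampled + t_start * noise

def refine(velocity_fn, x, t_start, num_steps):
    """Euler integration from t_start down to 0, covering only part of the full path."""
    ts = np.linspace(t_start, 0.0, num_steps + 1)
    for t_cur, t_next in zip(ts[:-1], ts[1:]):
        x = x + (t_next - t_cur) * velocity_fn(x, t_cur)
    return x

rng = np.random.default_rng(0)
low_res = rng.standard_normal((4, 8, 8, 3))  # toy: 4 frames, 8x8, 3 channels
x_start = refiner_init(low_res, factor=2, t_start=0.4, rng=rng)  # t_start is hypothetical

# Hypothetical oracle that pulls straight toward the upsampled video; a trained
# refiner would instead predict a velocity toward a sharper, detailed frame.
upsampled = upsample_nearest(low_res, 2)

def oracle_velocity(z, t):
    return (z - upsampled) / t

refined = refine(oracle_velocity, x_start, t_start=0.4, num_steps=5)
```

Starting at `t_start < 1` is what lets this stage use fewer steps than a full generation pass: the coarse layout and motion already come from the low-resolution video, and the refiner only has to add detail.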
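The 1080p Generation item also mentions windowed attention. In general, windowed attention restricts each token to attend only to tokens in its own fixed-size window, so cost grows linearly with sequence length instead of quadratically. The sketch below partitions a 1-D token sequence into non-overlapping windows. The window size, the 1-D layout, and the absence of shifted or overlapping windows are simplifying assumptions; the source does not say how Waver-Refiner lays out its windows (spatially, temporally, or both).

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def full_attention(q, k, v):
    """Every token attends to every token: an (n, n) score matrix."""
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v

def window_attention(q, k, v, window):
    """Attention computed independently inside non-overlapping windows of `window` tokens."""
    n, d = q.shape
    if n % window != 0:
        raise ValueError("sequence length must be a multiple of the window size")
    # Reshape (n, d) -> (num_windows, window, d) so each window is its own batch entry.
    qw, kw, vw = (a.reshape(n // window, window, d) for a in (q, k, v))
    scores = qw @ kw.transpose(0, 2, 1) / np.sqrt(d)  # (num_windows, window, window)
    return (softmax(scores) @ vw).reshape(n, d)

rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((16, 8)) for _ in range(3))
out = window_attention(q, k, v, window=4)
# The second window should match full attention run on that slice alone.
second_window_ok = np.allclose(out[4:8], full_attention(q[4:8], k[4:8], v[4:8]))
```

With n tokens and a window of w, the score tensor holds n·w entries instead of n², which is where the speed-up at 1080p comes from; the cost is that tokens in different windows do not interact within a single layer.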
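The Training Methods item says that, following SD3, the sigma shift grows with resolution. In SD3's flow-matching setup, a shift factor s remaps a timestep t in [0, 1] to t' = s·t / (1 + (s - 1)·t). With s > 1 this pushes timesteps toward the noisier end, which higher resolutions need because neighbouring pixels are more correlated and more noise is required to destroy the signal. The sketch below applies that formula; the per-stage shift values are hypothetical placeholders, since the source gives no numbers.

```python
import numpy as np

def shift_sigma(t, shift):
    """SD3-style timestep shift: t -> shift*t / (1 + (shift - 1)*t), for t in [0, 1]."""
    t = np.asarray(t, dtype=float)
    return shift * t / (1.0 + (shift - 1.0) * t)

# Hypothetical shift per training stage; the source does not publish these values.
STAGE_SHIFTS = {"192p": 1.0, "480p": 3.0, "720p": 5.0}

t = np.linspace(0.0, 1.0, 5)  # evenly spaced timesteps 0, 0.25, 0.5, 0.75, 1
for stage, s in STAGE_SHIFTS.items():
    # Larger shifts spend more of the schedule at high noise levels.
    print(stage, np.round(shift_sigma(t, s), 3))
```

A shift of 1.0 leaves the schedule unchanged, and the endpoints 0 and 1 are fixed for any shift, so only the distribution of intermediate noise levels moves.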
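The Prompt Tagging item has two halves: labels attached to prompts so the model can tell data types apart, and negative prompts added at inference. A minimal sketch of how such prompts might be assembled is below. The tag vocabulary, the comma-joined format, and the names `TaggedPrompt` and `build_inference_prompts` are hypothetical; only the negative terms “low resolution” and “slow motion” come from the text above.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

@dataclass
class TaggedPrompt:
    caption: str
    style: Optional[str] = None    # e.g. "animation", "clay" (hypothetical label set)
    quality: Optional[str] = None  # e.g. "high quality" (hypothetical label)

    def render(self) -> str:
        """Caption followed by whichever labels are set (hypothetical format)."""
        tags = [tag for tag in (self.style, self.quality) if tag]
        return ", ".join([self.caption, *tags])

# Negative terms named in the text; a real deployment may use a longer list.
DEFAULT_NEGATIVE = ("low resolution", "slow motion")

def build_inference_prompts(prompt: TaggedPrompt,
                            extra_negative: Sequence[str] = ()) -> Tuple[str, str]:
    """Return (positive, negative) prompt strings for one generation request."""
    negative = ", ".join([*DEFAULT_NEGATIVE, *extra_negative])
    return prompt.render(), negative

pos, neg = build_inference_prompts(TaggedPrompt("a cat surfing a wave", style="animation"))
print(pos)  # a cat surfing a wave, animation
print(neg)  # low resolution, slow motion
```

In common classifier-free guidance setups, the negative prompt is encoded in place of the empty prompt on the unconditional branch, so guidance steers away from those qualities rather than from a blank description.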
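The Inference Optimization (APG) item can be made concrete with a few lines of NumPy. Standard CFG moves the conditional prediction by (w - 1)·(cond - uncond); APG splits that update into a component parallel to the conditional prediction and one orthogonal to it, then scales the parallel part by a factor `eta` below 1, optionally capping the update's norm first. The sketch below follows the published APG method in simplified form (no momentum term, one sample at a time). The parameter names `eta` and `norm_threshold` and all numeric values are assumptions; the source does not state which values Waver uses.

```python
import numpy as np

def project(v, onto):
    """Split v into components parallel and orthogonal to `onto` (flattened dot product)."""
    onto_unit = onto / (np.linalg.norm(onto) + 1e-12)
    parallel = np.sum(v * onto_unit) * onto_unit
    return parallel, v - parallel

def cfg(cond, uncond, scale):
    """Standard classifier-free guidance, written relative to the conditional prediction."""
    return cond + (scale - 1.0) * (cond - uncond)

def apg(cond, uncond, scale, eta=0.0, norm_threshold=None):
    """Adaptive Projected Guidance for a single sample's prediction (momentum omitted)."""
    diff = cond - uncond
    if norm_threshold is not None:
        norm = np.linalg.norm(diff)
        if norm > norm_threshold:
            diff = diff * (norm_threshold / norm)  # cap overly large guidance updates
    parallel, orthogonal = project(diff, cond)
    # eta < 1 down-weights the parallel part, which is mainly what causes oversaturation.
    return cond + (scale - 1.0) * (orthogonal + eta * parallel)

rng = np.random.default_rng(0)
cond, uncond = rng.standard_normal((2, 4, 8, 8))  # toy conditional/unconditional predictions
guided = apg(cond, uncond, scale=7.0, eta=0.0, norm_threshold=10.0)
```

Setting `eta=1.0` with no threshold reproduces standard CFG exactly, which makes a convenient sanity check. The APG paper applies the projection to the denoised prediction and adds momentum on the update; whether Waver projects the denoised estimate or the velocity is not stated in the source.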

Waver 1.0 Project Links

- Official Website: http://www.waver.video/

- GitHub Repository: https://github.com/FoundationVision/Waver

- arXiv Paper: https://arxiv.org/pdf/2508.15761

Application Scenarios of Waver 1.0

- Content Creation: Turn text into vivid videos for storytelling, advertising, or short films.

- Product Showcase: Convert product images into dynamic display videos for e-commerce, live streaming, or virtual try-on.

- Education & Training: Turn teaching content or training documents into engaging videos that enhance the learning experience.

- Social Media: Rapidly generate engaging, shareable video content that attracts user attention.

- Animation Production: Convert static images into animations for character-driven stories and special effects.

- Game Development: Generate dynamic scenes and character animations that enrich immersive gameplay.
