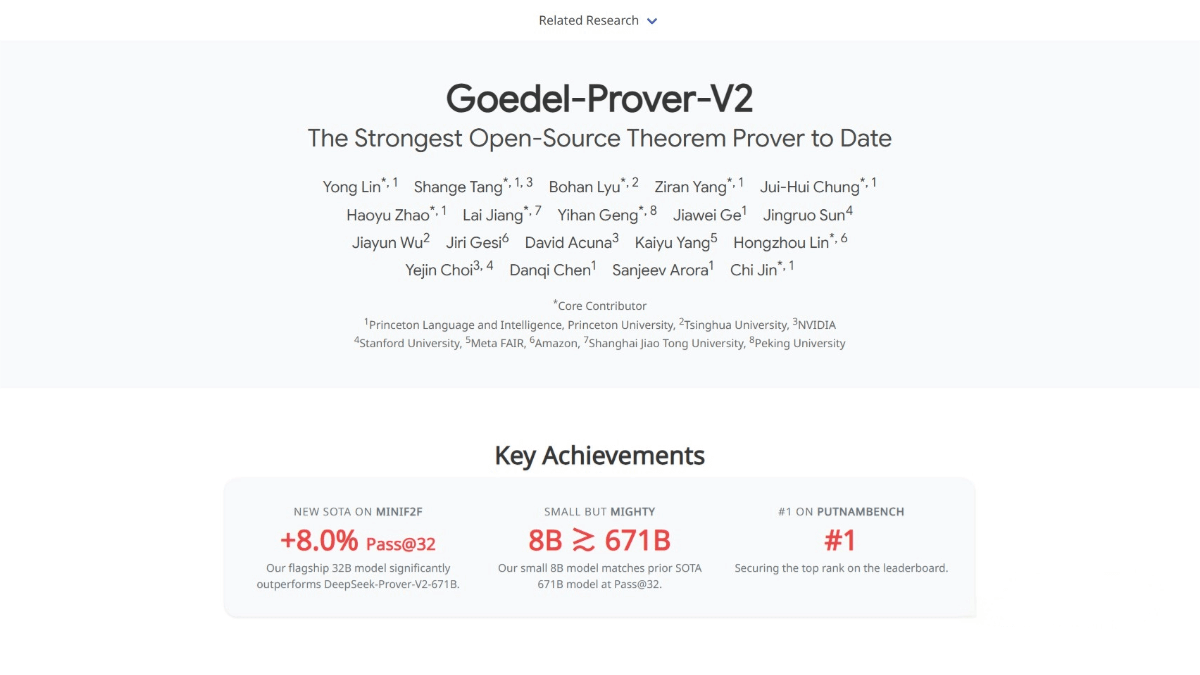

IndexTTS2 – Bilibili’s latest open-source text-to-speech model

What is IndexTTS2?

IndexTTS2 is a text-to-speech (TTS) model developed by Bilibili’s speech team and now officially open-sourced. Its main advances are in emotional expression and duration control: it is the first autoregressive TTS model to support precise duration control. It performs zero-shot voice cloning, needing only a single audio sample to replicate a speaker’s timbre, rhythm, and speaking style, and it supports multiple languages. IndexTTS2 also introduces emotion–timbre disentanglement, so users can specify the source of timbre and the source of emotion independently. Emotional input is multimodal: emotion can be controlled through reference audio, descriptive text, or emotion vectors.

Key Features of IndexTTS2

- Zero-shot voice cloning: From a single reference audio sample, the model reproduces the speaker’s timbre, intonation, and rhythm, and supports multilingual synthesis and highly personalized voice generation.
- Emotion and duration control: Supports zero-shot emotion cloning by mimicking the emotion in a reference audio clip; emotions can also be specified through text descriptions. It is the first autoregressive TTS model to support precise duration control, letting the length of generated speech be set down to the millisecond, which is useful for film dubbing, subtitle synchronization, and similar scenarios.
- High-fidelity audio quality: Supports sampling rates up to 48 kHz and lossless audio output. Combined with optimized vocoders, it produces natural, fluent, and emotionally rich speech with fewer mechanical artifacts.
- Multimodal input support: Accepts text, audio, and emotion vectors as inputs, so users can control voice style and emotion flexibly.
- Open-source and local deployment: Supports full local deployment, with model weights planned for release, giving developers the tools to build their own applications and broadening adoption of TTS technology.
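To make the millisecond-level duration control described above more concrete, here is a minimal arithmetic sketch of how a target clip length could translate into an output budget. It is not IndexTTS2 code: the function name `duration_budget` and the `tokens_per_second` value are hypothetical and chosen only for illustration, and the 48 kHz default is taken from the feature list above.

```python
import math


def duration_budget(duration_ms: int,
                    sample_rate_hz: int = 48_000,
                    tokens_per_second: float = 25.0) -> tuple[int, int]:
    """Return (num_samples, num_tokens) for a target clip length.

    tokens_per_second is a HYPOTHETICAL rate used only for illustration;
    it is not a documented IndexTTS2 parameter.
    """
    if duration_ms <= 0:
        raise ValueError("duration_ms must be positive")
    # Number of audio samples needed to fill the requested duration.
    num_samples = round(duration_ms * sample_rate_hz / 1000)
    # Number of generated tokens, rounded up so the clip is never too short.
    num_tokens = math.ceil(duration_ms * tokens_per_second / 1000)
    return num_samples, num_tokens


# A subtitle line that must last exactly 1.5 seconds.
samples, tokens = duration_budget(1500)
print(samples, tokens)
```

In a dubbing workflow, `duration_ms` would typically come from the difference between a subtitle’s end and start timestamps.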

Technical Principles of IndexTTS2

- Modular architecture: Composed of three main modules, Text-to-Semantic (T2S), Semantic-to-Mel (S2M), and a vocoder, which work in sequence to convert text into high-quality speech.
- Emotion–timbre disentanglement: Uses techniques such as gradient reversal layers to decouple emotional features from timbre features, so each can be controlled independently.
- Multi-stage training strategy: Compensates for the shortage of high-quality emotional data by training in stages, which improves the emotional expressiveness, naturalness, and richness of the synthesized speech.
- High sampling rate and optimized vocoders: Uses 48 kHz audio sampling and optimized vocoders such as BigVGANv2 to generate high-fidelity, smooth, natural speech with fewer mechanical artifacts.
- Zero-shot cloning technology: Replicates timbre, intonation, and rhythm from a single reference audio sample, supporting multilingual synthesis and highly personalized output.
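The gradient reversal layer mentioned in the list above is a general technique, and a minimal dependency-free sketch may help show what it does. This is an illustration of the idea only, not the IndexTTS2 training code: the class name `GradientReversal`, the `lam` scaling factor, and all numbers are assumptions made for the example. The layer passes features through unchanged in the forward pass and flips the sign of the gradient in the backward pass, so an encoder placed before it is pushed to make the downstream classifier (for example, a speaker classifier fed with emotion features) perform worse.

```python
class GradientReversal:
    """Identity in the forward pass; scales gradients by -lam in the backward pass."""

    def __init__(self, lam: float = 1.0) -> None:
        self.lam = lam  # strength of the reversal (hypothetical default)

    def forward(self, features: list[float]) -> list[float]:
        # Features reach the classifier unchanged.
        return list(features)

    def backward(self, grad_output: list[float]) -> list[float]:
        # The gradient flowing back to the encoder is negated and scaled.
        return [-self.lam * g for g in grad_output]


grl = GradientReversal(lam=1.0)

# Emotion features produced by some upstream encoder (made-up values).
emotion_features = [0.5, -1.0, 2.0]
classifier_input = grl.forward(emotion_features)

# Gradient of a speaker-classification loss w.r.t. the classifier input
# (made-up values standing in for a real backward pass).
grad_from_classifier = [0.1, 0.2, -0.3]
grad_to_encoder = grl.backward(grad_from_classifier)

print(classifier_input)
print(grad_to_encoder)
```

In a deep learning framework the same behavior is usually written as a custom autograd operation, so the reversal happens automatically during backpropagation.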

Project Resources

- Official site: https://index-tts.github.io/index-tts2.github.io/
- GitHub repo: https://github.com/index-tts/index-tts
- Hugging Face model hub: https://huggingface.co/IndexTeam/IndexTTS-2
- arXiv paper: https://arxiv.org/pdf/2506.21619

Upgrades from IndexTTS1.5 to IndexTTS2

- Precise duration control: IndexTTS2 is the first autoregressive TTS model supporting millisecond-level duration specification. IndexTTS1.5 did not have this feature.
- Emotion–timbre disentanglement: Enables independent control of emotion and timbre, whereas IndexTTS1.5 had less refined controls.
- Multimodal emotional input: Allows controlling emotions via audio references, text descriptions, or emotion vectors. IndexTTS1.5 supported emotion control but with fewer methods.
- Enhanced emotional expressiveness: Improved modeling for richer and more natural emotional states compared to IndexTTS1.5.
- Improved speech stability: Incorporates GPT latent representations and soft instruction mechanisms to improve speech generation stability, beyond what was available in IndexTTS1.5.

Application Scenarios of IndexTTS2

- Film dubbing: Provides high-quality dubbing with precise control of duration and emotions, meeting requirements for audio–visual synchronization.
- Virtual characters: Brings natural, emotionally rich voices to virtual characters, enhancing interactivity and user immersion.
- Audiobooks: Produces fluent, natural narration for audiobooks, improving listener experience.
- Intelligent assistants: Delivers natural and smooth voice interactions in virtual assistants and voice-broadcast applications.
- Advertising production: Supports multilingual, emotionally varied, and personalized voice synthesis for more engaging advertisements.
- Education: Provides lively and expressive narration for educational software and online courses, helping students better understand and learn.
