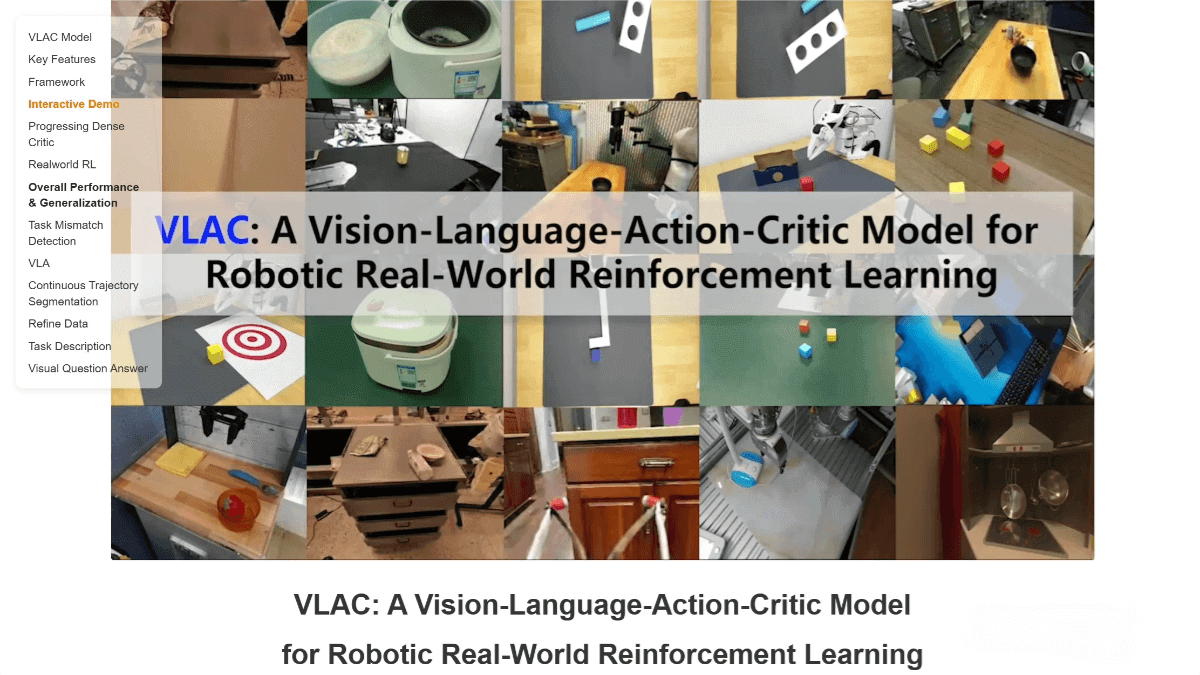

VLAC – An Embodied Reward Large Model Open-Sourced by Shanghai AI Laboratory

What is VLAC?

VLAC is an embodied reward large model released by the Shanghai Artificial Intelligence Laboratory.

Built on the InternVL multimodal large model, it combines internet video data with robot operation data to provide process rewards and task completion estimation for reinforcement learning on real-world robots. VLAC distinguishes normal progression from abnormal or stalled behavior, and supports rapid few-shot generalization through in-context learning. Its local smoothness and negative reward mechanisms help keep reinforcement learning stable and effective. Beyond outputting reward signals, VLAC can also generate robot action commands, helping robots learn autonomously and adapt quickly to new real-world environments. It also supports human-robot collaboration modes to improve training efficiency.

Core Functions of VLAC

- Provide process rewards and completion estimation: Offers continuous and reliable supervision signals for real-world reinforcement learning, judging whether tasks are completed and estimating progress.
- Differentiate normal and abnormal behavior: Effectively identifies normal progression, abnormal, or stalled behaviors during robot operations, reducing ineffective exploration.
- Support rapid few-shot generalization: Achieves fast adaptation in new scenarios through in-context learning.
- Generate robot action commands: Outputs action instructions alongside reward signals to enable autonomous learning and behavioral adjustment.
- Build reinforcement learning framework: Integrates into the VLA reinforcement learning framework, allowing robots to quickly adapt to new real-world scenarios and improve task success rates.
- Enable human-robot collaboration: Supports various modes of human-robot cooperation to enhance training flexibility and reinforcement learning efficiency.
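
To make the idea of process rewards and completion estimation more concrete, here is a minimal sketch. It is not VLAC's actual interface: the function names `process_rewards` and `is_done`, the `regress_penalty` and `threshold` parameters, and the assumption that the reward model yields one progress estimate between 0 and 1 per step are all hypothetical, chosen only for illustration.

```python
from typing import List


def process_rewards(progress: List[float], regress_penalty: float = 1.0) -> List[float]:
    """Turn per-step progress estimates (assumed 0.0 to 1.0) into dense step rewards.

    Hypothetical helper: the reward for a step is the change in estimated
    progress, and a drop in progress is scaled by `regress_penalty` so that
    moving away from the goal yields a negative reward.
    """
    rewards = []
    for prev, curr in zip(progress, progress[1:]):
        delta = curr - prev
        rewards.append(delta if delta >= 0 else regress_penalty * delta)
    return rewards


def is_done(progress: List[float], threshold: float = 0.95) -> bool:
    """Treat the task as complete once the latest estimate reaches `threshold`."""
    return bool(progress) and progress[-1] >= threshold


if __name__ == "__main__":
    estimates = [0.0, 0.5, 0.25, 1.0]  # made-up values for illustration
    print(process_rewards(estimates, regress_penalty=2.0))
    print(is_done(estimates))
```

A dense signal like this gives the policy feedback at every step rather than only at the end of an episode, which is the role the article attributes to process rewards.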

Technical Principles of VLAC

- Multimodal fusion: Builds on the InternVL multimodal large model, combining vision, language, and other modalities to improve overall understanding of tasks and environments.
- Data-driven reward generation: Leverages internet videos and robot operation data to learn dense reward signals, providing stable feedback for reinforcement learning.
- Task progress estimation: Estimates task completion progress in real time, offering process-level rewards.
- Abnormal behavior detection: Analyzes robot operation data to detect anomalies or stalls, avoiding ineffective exploration and improving efficiency.
- In-context learning mechanism: Enables rapid adaptation to new tasks from a few samples, enhancing generalization ability.
- Action command generation: Produces robot action commands while providing reward signals, closing the loop from perception to action.
- Reinforcement learning framework integration: Supports a VLA framework that combines process rewards and task completion estimation to improve real-world learning and adaptability.
- Human-robot collaboration enhancement: Incorporates modes such as expert data replay and manually assisted exploration to further optimize training.
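
One simple way to picture stall detection is a sliding-window check on the progress estimates. The sketch below is an assumption-laden illustration rather than VLAC's method: `is_stalled`, `window`, and `min_gain` are hypothetical names, and the rule "too little gain over the last few steps" is a stand-in for whatever the model actually does.

```python
from typing import List


def is_stalled(progress: List[float], window: int = 5, min_gain: float = 0.02) -> bool:
    """Flag a stall when progress gained over the last `window` steps is below `min_gain`.

    Hypothetical rule for illustration only. Returns False until more than
    `window` estimates are available, so short episodes are never flagged.
    """
    if len(progress) <= window:
        return False
    gain = progress[-1] - progress[-1 - window]
    return gain < min_gain


if __name__ == "__main__":
    moving = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6]   # made-up values
    stuck = [0.1, 0.2, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3]
    print(is_stalled(moving), is_stalled(stuck))
```

A training loop could use such a flag to end an episode early or hand control to a human operator instead of continuing unproductive exploration.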

Project Links

- Official Website: https://vlac.intern-ai.org.cn
- GitHub Repository: https://github.com/InternRobotics/VLAC
- HuggingFace Model Hub: https://huggingface.co/InternRobotics/VLAC

Application Scenarios of VLAC

- Robotics reinforcement learning: Provides process rewards and completion estimation for real-world reinforcement learning, helping robots quickly adapt to new tasks and environments.
- Human-robot collaborative tasks: Supports modes such as expert data replay and manual exploration assistance to improve flexibility and efficiency in robot training.
- Multi-robot collaborative learning: Through the VLA reinforcement learning framework, enables multiple robots to interact and learn simultaneously in real-world environments, boosting task success rates.
- Complex task decomposition and learning: Breaks down complex tasks into subtasks, providing reward signals for each step to help robots gradually accomplish complicated missions.
- Fast adaptation to new environments: With few-shot rapid generalization, robots can quickly learn and adapt to unfamiliar scenarios, improving task completion rates.
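
The task decomposition scenario can be pictured with a small sketch that rolls per-subtask progress up into one task-level value. Everything here is an assumption for illustration: `overall_progress` is a hypothetical helper, the subtasks are weighted equally, and the per-subtask estimates are made-up numbers rather than VLAC outputs.

```python
from typing import List


def overall_progress(subtask_progress: List[float]) -> float:
    """Average per-subtask progress estimates into a single task-level value.

    Hypothetical aggregation: each estimate is clipped to the range 0.0 to 1.0
    and all subtasks count equally. An empty list means no progress yet.
    """
    if not subtask_progress:
        return 0.0
    clipped = [min(1.0, max(0.0, p)) for p in subtask_progress]
    return sum(clipped) / len(clipped)


if __name__ == "__main__":
    # e.g. four subtasks of a longer task, with made-up estimates
    print(overall_progress([1.0, 0.5, 0.0, 0.5]))
```

Equal weighting is the simplest choice; a real setup might weight subtasks by difficulty or duration.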
