Glyph – A vision-text compression framework jointly open-sourced by Zhipu AI and Tsinghua University

What is Glyph?

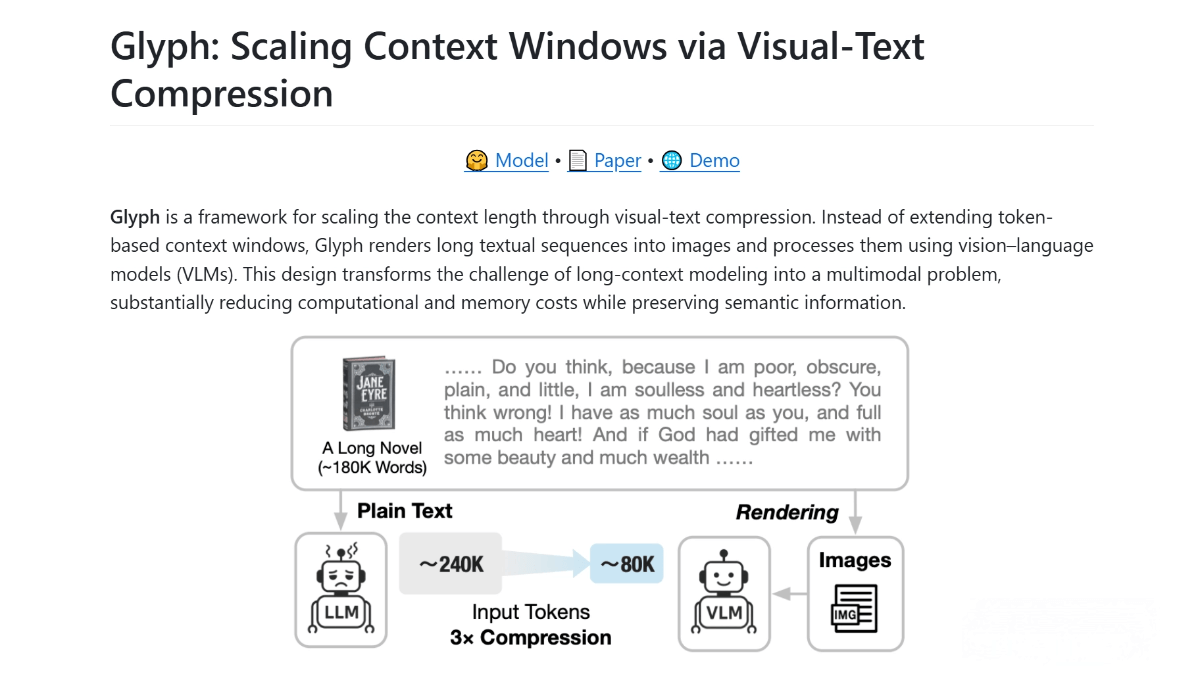

Glyph is an innovative framework open-sourced by Zhipu AI in collaboration with the CoAI Lab at Tsinghua University. It addresses the problem of excessively long contexts in large language models (LLMs) through vision-text compression. The framework renders long texts as images and processes them using a vision-language model (VLM), achieving 3–4× context compression. Glyph significantly reduces computational costs and memory usage while greatly improving inference speed. It performs exceptionally well in multimodal tasks, demonstrating strong generalization capabilities.

Key Features of Glyph

-

Long-context Compression: Glyph can render long texts (e.g., novels, legal documents) into compact images, which are processed by a VLM to achieve 3–4× context compression.

-

Efficient Inference Acceleration: During inference, Glyph achieves a 4.8× speedup in prefill and a 4.4× speedup in decoding, significantly reducing inference time, making it suitable for ultra-long text tasks.

-

Reduced Memory Usage: Due to the higher information density of visual tokens, Glyph reduces memory usage by approximately two-thirds, enabling operation on consumer-grade GPUs such as the RTX 4090 or 3090.

-

Enhanced Multimodal Tasks: Glyph can handle mixed text-image content. In multimodal tasks (e.g., PDF document understanding), accuracy improves by 13%, showing strong generalization.

-

Low-cost Modeling: Glyph does not require training ultra-long-context models. With a powerful VLM and an effective text-rendering strategy, it achieves efficient long-context modeling, lowering both hardware costs and training difficulty.

Technical Principles of Glyph

-

Vision-Text Compression: The core idea of Glyph is to render text as images and process them using a VLM. Images carry much higher information density than plain text; a single visual token can encode the semantics of multiple text tokens, enabling efficient context compression.

-

Three-stage Training Process:

-

Continual Pre-Training: Massive long texts are rendered into images of various styles to train the VLM to understand images. Tasks include OCR (text reconstruction), cross-modal language modeling, and generating missing paragraphs.

-

LLM-driven Rendering Search: Genetic algorithms optimize rendering parameters (e.g., font, DPI, line spacing) to find the optimal balance between compression ratio and accuracy.

-

Post-training: Using the optimal rendering configuration, supervised fine-tuning (SFT) and reinforcement learning (RL) are applied, with OCR auxiliary tasks to ensure the model can accurately “read” text details.

-

-

Advantages of Visual Tokens: Visual tokens have higher information density, allowing shorter context windows and faster inference. They encode text as well as visual attributes like color and layout, closer to human cognitive processing.

Project Links

-

GitHub Repository: https://github.com/thu-coai/Glyph

-

HuggingFace Model Hub: https://huggingface.co/zai-org/Glyph

-

arXiv Paper: https://arxiv.org/pdf/2510.17800

Application Scenarios of Glyph

-

Education: Helps teachers and students quickly analyze textbooks and online courses, extract key points and difficult concepts, improving learning efficiency.

-

Enterprise Applications: Processes long internal reports and customer support queries, helping management quickly extract critical data and conclusions, enhancing decision-making efficiency.

-

Creative Writing: Assists writers and creators in generating long-form stories and scripts, providing a global perspective and coherent plot development, improving creative efficiency.

-

Healthcare: Helps doctors and researchers quickly extract key information, improving diagnostic and research efficiency.

-

Finance: Assists analysts in rapidly identifying key data and trends, enhancing decision-making accuracy.

Related Posts