GigaBrain-0 – An Open-Source VLA Embodied Model Trained on Data Generated by World Models

What is GigaBrain-0?

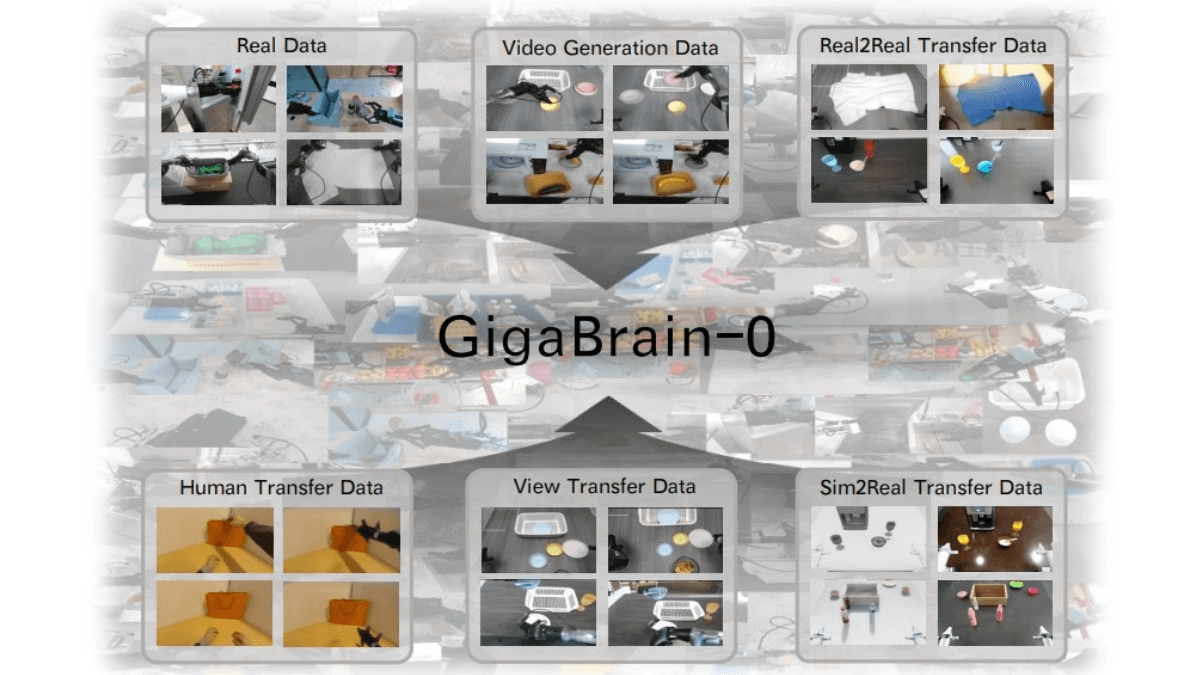

GigaBrain-0 is a next-generation Vision-Language-Action (VLA) foundation model driven by data generated by world models. Training on large-scale, diverse world-model-generated data reduces its reliance on real robot data and significantly improves cross-task generalization. RGB-D inputs enhance spatial perception, while Embodied Chain-of-Thought (Embodied CoT) supervision strengthens the model's reasoning during task execution. In real-world scenarios, GigaBrain-0 performs well on dexterous manipulation, long-horizon tasks, and mobile manipulation, and it generalizes well across variations in object appearance, object placement, and camera viewpoint. For edge platforms, a lightweight version, GigaBrain-0-Small, has been released; it runs efficiently on devices such as the NVIDIA Jetson AGX Orin.

Key Features of GigaBrain-0

- Data Generation and Reduced Dependence: Uses world models to generate diverse training data through techniques including video generation, Real2Real transfer, and human imitation, reducing the need for real robot data and improving generalization.
- RGB-D Input and Spatial Perception: Uses RGB-D input to give the model a more accurate understanding of 3D object positions and scene layouts, which improves manipulation precision.
- Embodied CoT Supervision and Reasoning: Supervises intermediate reasoning steps during training, such as action trajectories and subgoal plans, mimicking human step-by-step thinking to strengthen reasoning on complex tasks.
- Task Success Rate and Generalization: Achieves high success rates on tasks such as folding clothes, setting tables, and transporting boxes, and adapts to changes in object appearance, placement, and camera viewpoint.
- Lightweight Version and Edge Deployment: GigaBrain-0-Small is designed for edge platforms such as the NVIDIA Jetson AGX Orin, providing efficient inference for real-world deployment.
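RGB-D input pairs each color pixel with a metric depth value, which is what lets a model reason about where objects sit in 3D. As a generic illustration (not GigaBrain-0's own code), the standard pinhole-camera back-projection below turns a depth image into camera-frame 3D points and averages them into an object position. The camera intrinsics `fx`, `fy`, `cx`, `cy` and the helper names are assumptions made for this sketch.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def backproject_depth(depth: List[List[float]], fx: float, fy: float,
                      cx: float, cy: float) -> List[Point3D]:
    """Convert a depth image (meters) into 3D points in the camera frame.

    Standard pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with depth <= 0 (missing sensor readings) are skipped.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):      # v = pixel row index
        for u, z in enumerate(row):      # u = pixel column index
            if z <= 0.0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


def centroid(points: List[Point3D]) -> Point3D:
    """Hypothetical helper: estimate an object's 3D position as the mean of its points."""
    if not points:
        raise ValueError("no valid depth points")
    n = len(points)
    return (sum(p[0] for p in points) / n,
            sum(p[1] for p in points) / n,
            sum(p[2] for p in points) / n)


if __name__ == "__main__":
    # Tiny 2x2 depth patch; the 0.0 entry simulates a missing reading.
    pts = backproject_depth([[1.0, 0.0], [2.0, 2.0]], fx=2.0, fy=2.0, cx=0.0, cy=0.0)
    print(pts)
    print(centroid(pts))
```

In practice the depth image would first be masked to the pixels of one object (for example from a segmentation step) before computing the centroid; the sketch skips that to stay self-contained.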

Technical Principles of GigaBrain-0

- World-Model-Driven Data: Uses world models to generate large-scale, diverse training data, reducing reliance on real robot data and improving generalization.
- RGB-D Input Modeling: Improves spatial perception by letting the model perceive 3D object positions and layouts more accurately.
- Embodied CoT Supervision: Supervises intermediate reasoning steps, such as action trajectories and subgoal plans, during training, mimicking human reasoning to improve performance on complex tasks.
- Knowledge Isolation: Keeps action prediction and Embodied CoT generation from interfering with each other during training, improving stability and performance.
- Reinforcement Learning and World Model Integration: World models can serve as interactive environments for reinforcement learning, reducing real-world trial and error and improving learning efficiency.
- World Model as Policy Generator: World models can learn general representations of physical dynamics and task structure, evolving into "active policy generators" that directly propose feasible action sequences or subgoals.
- Closed-Loop Self-Improvement: In a closed loop between the VLA policy and the world model, real-world trajectories continually refine the world model, which in turn generates higher-quality training data, driving robotic systems toward autonomous, lifelong learning.
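The closed-loop idea can be illustrated with a deliberately tiny toy, which is not GigaBrain-0's pipeline: the "robot" is a hypothetical 1-D linear system, the "world model" is a least-squares fit of its dynamics from real transitions, and the "policy" is a single gain trained only on synthetic data that the world model generates. All names, dynamics, and numbers here are illustrative assumptions.

```python
import random
from typing import List, Tuple

TRUE_A, TRUE_B = 0.9, 0.5  # hypothetical "real robot" dynamics: x' = a*x + b*u + noise


def real_step(x: float, u: float, rng: random.Random) -> float:
    """Stand-in for executing one action on the physical robot."""
    return TRUE_A * x + TRUE_B * u + rng.gauss(0.0, 0.01)


def fit_world_model(data: List[Tuple[float, float, float]]) -> Tuple[float, float]:
    """Least-squares fit of x' = a*x + b*u from (x, u, x') transitions via 2x2 normal equations."""
    sxx = sxu = suu = sxy = suy = 0.0
    for x, u, y in data:
        sxx += x * x
        sxu += x * u
        suu += u * u
        sxy += x * y
        suy += u * y
    det = sxx * suu - sxu * sxu
    a = (sxy * suu - sxu * suy) / det
    b = (sxx * suy - sxu * sxy) / det
    return a, b


def closed_loop(rounds: int = 3, episodes: int = 5, steps: int = 20, seed: int = 0):
    rng = random.Random(seed)
    real_data: List[Tuple[float, float, float]] = []
    k = 0.0  # initial policy u = -k*x, which does nothing useful yet
    history = []
    for _ in range(rounds):
        # 1) Deploy the current policy (plus exploration noise) and log real transitions.
        for _ in range(episodes):
            x = rng.uniform(-1.0, 1.0)
            for _ in range(steps):
                u = -k * x + rng.gauss(0.0, 0.3)
                y = real_step(x, u, rng)
                real_data.append((x, u, y))
                x = y
        # 2) Real trajectories refine the world model.
        a, b = fit_world_model(real_data)
        # 3) The world model labels synthetic states with the action it predicts drives x to 0.
        states = [rng.uniform(-1.0, 1.0) for _ in range(200)]
        synthetic = [(s, -(a / b) * s) for s in states]
        # 4) Train the policy on synthetic data only: least-squares fit of u = -k*x.
        k = -sum(s * u for s, u in synthetic) / sum(s * s for s, _ in synthetic)
        history.append((a, b, k))
    return history


if __name__ == "__main__":
    for i, (a, b, k) in enumerate(closed_loop(), start=1):
        print(f"round {i}: a={a:.3f} b={b:.3f} policy gain k={k:.3f}")
```

In this toy the ideal gain is a/b = 1.8, so the learned policy should land near that value after the first round, and each later round's real trajectories further refine the fitted dynamics. A real world model would be a learned video or latent-dynamics model rather than two scalars, but the data flow (real rollouts, world-model update, synthetic data, policy update) is the same loop described above.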

Project Links

- Official Website: https://gigabrain0.github.io/
- GitHub Repository: https://github.com/open-gigaai/giga-brain-0
- HuggingFace Model Hub: https://huggingface.co/open-gigaai
- arXiv Paper: https://arxiv.org/pdf/2510.19430

Application Scenarios of GigaBrain-0

- Dexterous Manipulation Tasks: Performs precise operations such as folding clothes or preparing tissues, generalizing well across different textures and colors.
- Long-Horizon Tasks: Handles multi-step tasks that require sequential planning, such as clearing tables or making juice.
- Mobile Manipulation Tasks: Combines global navigation with local manipulation for tasks such as transporting boxes or laundry baskets.
- Edge Platform Deployment: The lightweight GigaBrain-0-Small is tailored for edge devices such as the NVIDIA Jetson AGX Orin, delivering efficient performance under limited compute resources.
