LongCat-Flash-Omni – A Real-Time Interactive All-Modal Large Model Open-Sourced by Meituan

What is LongCat-Flash-Omni?

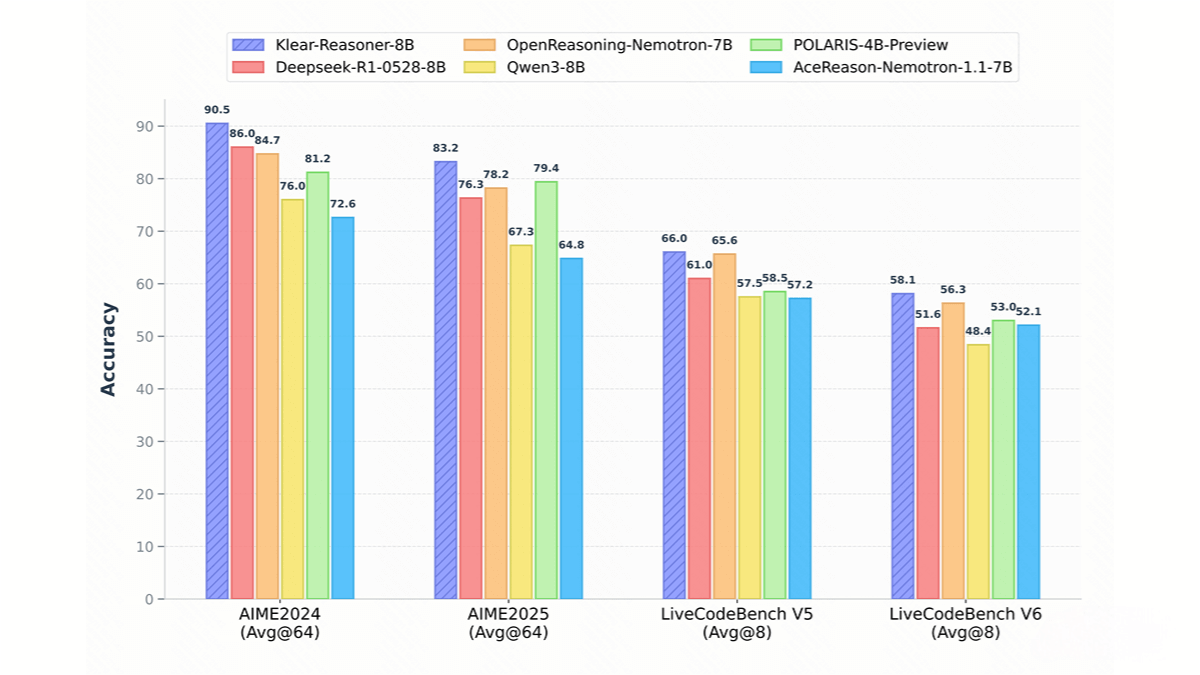

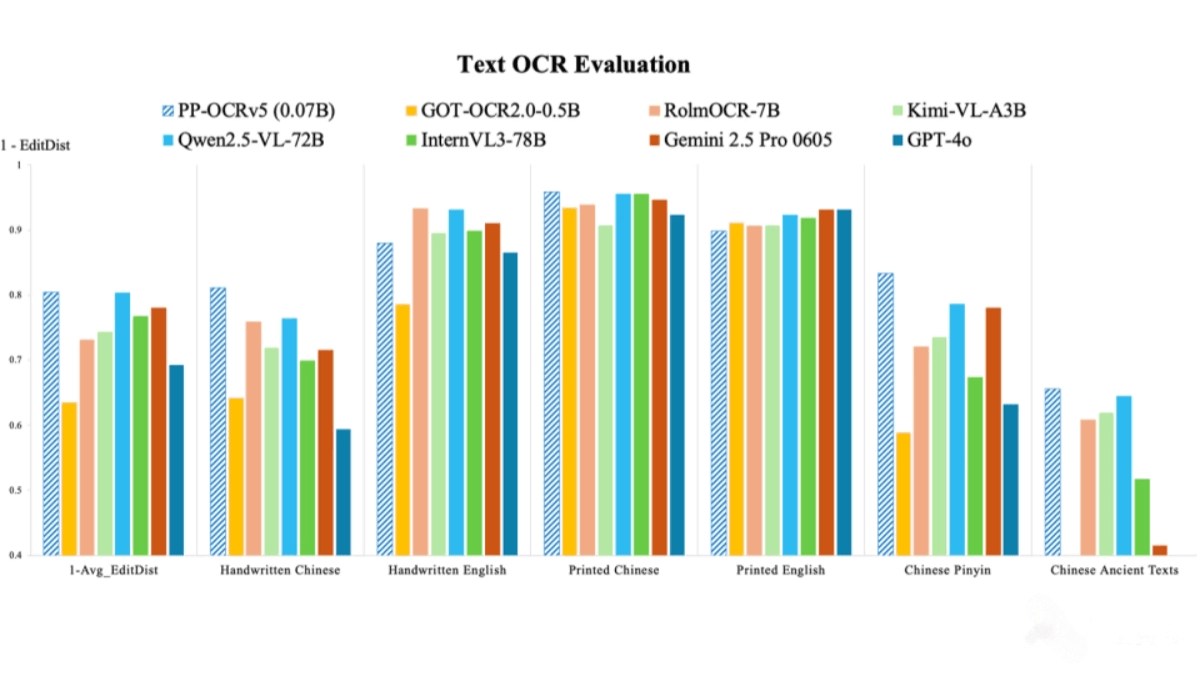

LongCat-Flash-Omni is an all-modal large language model open-sourced by Meituan’s LongCat team. Built on the LongCat-Flash architecture, it adds multimodal perception and speech reconstruction modules, for a total of 560 billion parameters, of which 27 billion are active. The model supports low-latency, real-time audio and video interaction, and a progressive multimodal fusion training strategy gives it strong understanding and generation capabilities across text, image, audio, and video. LongCat-Flash-Omni reaches state-of-the-art (SOTA) performance among open-source models on multimodal benchmarks, giving developers a high-performance base for building multimodal AI applications.

Key Features of LongCat-Flash-Omni

1. Multimodal Interaction

Supports multimodal input and output across text, speech, image, and video, enabling cross-modal understanding and generation to meet diverse interactive needs.

2. Real-Time Audio and Video Interaction

Delivers low-latency, real-time audio and video interaction with smooth, natural dialogue, which makes it well suited to multi-turn conversational scenarios.

3. Long-Context Processing

Supports a 128K-token context window, enabling complex reasoning over long inputs, extended multi-round dialogues, and applications that need long-term memory.

4. End-to-End Interaction

Provides end-to-end processing from multimodal input to text and speech output, supporting continuous audio feature processing for efficient and natural interactions.

Technical Foundations of LongCat-Flash-Omni

1. Efficient Architecture Design

- Shortcut-Connected MoE (ScMoE): Uses a mixture-of-experts architecture with zero-compute experts to optimize computational resource allocation and boost inference efficiency.

- Lightweight Encoders/Decoders: Visual and audio encoders/decoders each contain about 600 million parameters, achieving an optimal balance between performance and efficiency.
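
The idea of zero-compute experts can be illustrated with a toy router. The sketch below is not the LongCat-Flash implementation: the function names (`moe_forward`, `make_ffn_expert`), the top-2 routing, and the identity behaviour of the zero-compute experts are all assumptions chosen for illustration, and the shortcut connection itself is not modelled. It only shows how a token routed to a zero-compute expert skips the feed-forward work.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def make_ffn_expert(scale):
    """Toy stand-in for a feed-forward expert: scales every feature."""
    return lambda x: [scale * v for v in x]

def moe_forward(x, router_logits, ffn_experts, num_zero_experts, top_k=2):
    """Route one token through its top_k experts.

    Expert indices >= len(ffn_experts) are treated as zero-compute experts
    (assumption: they return the input unchanged, so they cost no FLOPs).
    Returns (output_vector, number_of_ffn_experts_executed).
    """
    assert len(router_logits) == len(ffn_experts) + num_zero_experts
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalise over the chosen experts
    out = [0.0] * len(x)
    ffn_calls = 0
    for i in chosen:
        weight = probs[i] / norm
        if i < len(ffn_experts):
            y = ffn_experts[i](x)  # real computation
            ffn_calls += 1
        else:
            y = x                  # zero-compute expert: identity
        out = [o + weight * v for o, v in zip(out, y)]
    return out, ffn_calls

experts = [make_ffn_expert(2.0), make_ffn_expert(3.0)]
easy_out, easy_calls = moe_forward([1.0, 2.0], [0.0, 0.0, 5.0, 5.0], experts, 2)
hard_out, hard_calls = moe_forward([1.0, 2.0], [5.0, 0.0, 0.0, 5.0], experts, 2)
```

In this toy setup, the first token is routed only to zero-compute experts and triggers no feed-forward call, while the second triggers one. This is the sense in which the amount of computation can vary per token.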

2. Multimodal Fusion

Processes multimodal inputs efficiently through visual and audio encoders. A lightweight audio decoder reconstructs speech tokens into natural waveforms, ensuring realistic voice output.

3. Progressive Multimodal Training

Employs a progressive multimodal fusion strategy, gradually integrating text, audio, image, and video data. This ensures robust all-modal performance without degradation in any single modality. Balanced data distribution across modalities optimizes training and strengthens fusion capabilities.

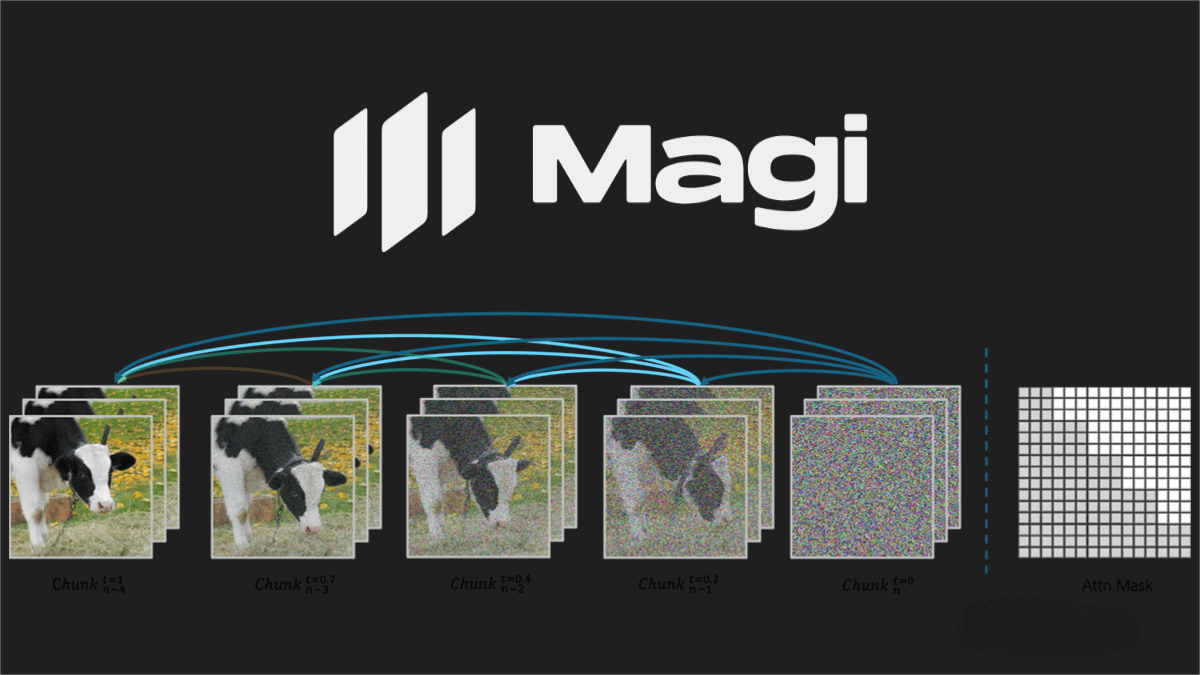

4. Low-Latency Interaction

All modules are optimized for streaming inference, enabling real-time audio-video processing. A chunk-based interleaving mechanism for audio and video features ensures low latency and high-quality output.
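
A minimal sketch of what chunk-based interleaving could look like follows. The one-second chunk length, the video-before-audio ordering inside a chunk, and the function name `interleave_chunks` are assumptions made for illustration, not details taken from the model. The sketch also buffers all input before emitting, whereas a real streaming pipeline would emit each chunk as soon as it is complete.

```python
from collections import defaultdict

def interleave_chunks(audio_feats, video_feats, chunk_seconds=1.0):
    """Group timestamped features into fixed-length time chunks and
    return them interleaved in time order.

    audio_feats / video_feats: lists of (timestamp_seconds, feature).
    Returns a list of (chunk_index, modality, [features]) tuples.
    """
    buckets = defaultdict(lambda: {"video": [], "audio": []})
    for t, feat in video_feats:
        buckets[int(t // chunk_seconds)]["video"].append(feat)
    for t, feat in audio_feats:
        buckets[int(t // chunk_seconds)]["audio"].append(feat)

    sequence = []
    for idx in sorted(buckets):
        # Assumed ordering: video features first, then audio, per chunk.
        for modality in ("video", "audio"):
            if buckets[idx][modality]:
                sequence.append((idx, modality, buckets[idx][modality]))
    return sequence

audio = [(0.0, "a0"), (0.5, "a1"), (1.2, "a2")]
video = [(0.0, "v0"), (1.0, "v1")]
interleaved = interleave_chunks(audio, video)
```

Because each chunk carries both modalities for the same time span, a downstream model can start responding after the first chunk arrives rather than waiting for the full clip.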

5. Long-Context Support

With a 128K-token context window, the model uses dynamic frame sampling and hierarchical token aggregation strategies to enhance long-context reasoning and memory retention.
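
One plausible reading of dynamic frame sampling is that the frame rate is lowered as a video gets longer so that the visual tokens still fit in the context window. The sketch below follows that reading. The function name `sample_frame_times`, the 2 fps cap, and the 100 tokens per frame are hypothetical values, and hierarchical token aggregation is not modelled.

```python
def sample_frame_times(duration_s, tokens_per_frame, token_budget, max_fps=2.0):
    """Pick evenly spaced frame timestamps (in seconds) so that
    len(result) * tokens_per_frame never exceeds token_budget.

    Short videos are sampled at max_fps; longer ones are sampled more
    sparsely so the total stays within the budget.
    """
    if duration_s <= 0 or tokens_per_frame <= 0:
        return []
    max_frames = token_budget // tokens_per_frame
    if max_frames <= 0:
        return []
    wanted = max(1, int(duration_s * max_fps))
    n = min(wanted, max_frames)
    step = duration_s / n
    return [round(i * step, 3) for i in range(n)]

short_clip = sample_frame_times(10, tokens_per_frame=100, token_budget=128_000)
long_clip = sample_frame_times(3600, tokens_per_frame=100, token_budget=128_000)
```

With these assumed numbers, a 10-second clip keeps its full 2 fps sampling, while an hour-long video is thinned to one frame roughly every 2.8 seconds to stay inside the budget.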

Project Resources

- GitHub Repository: https://github.com/meituan-longcat/LongCat-Flash-Omni

- Hugging Face Model Hub: https://huggingface.co/meituan-longcat/LongCat-Flash-Omni

- Technical Paper: https://github.com/meituan-longcat/LongCat-Flash-Omni/blob/main/tech_report.pdf

How to Use LongCat-Flash-Omni

- Via Open-Source Platforms: Access the model on Hugging Face or GitHub for direct testing or local deployment.

- Via Official Experience Platform: Log in to the LongCat official website to try image upload, file interaction, and voice call features.

- Via Official App: Download the LongCat App to experience real-time search and voice interaction features.

- Local Deployment: Follow GitHub documentation to download the model, configure your environment, and run it with compatible hardware (e.g., GPUs).

- System Integration: Call LongCat-Flash-Omni APIs or integrate it into your existing systems to enable multimodal interaction capabilities.
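
For the local deployment route, the weights can be fetched with the `huggingface_hub` library, as in the sketch below. The repository ID is taken from the Hugging Face URL in the project resources. The helper name `download_model` and the target directory are illustrative choices. Actually serving the model requires the environment setup described in the GitHub documentation, which this sketch does not cover.

```python
REPO_ID = "meituan-longcat/LongCat-Flash-Omni"  # from the Hugging Face URL

def download_model(local_dir="./LongCat-Flash-Omni"):
    """Download every file in the model repository and return the local path.

    Requires `pip install huggingface_hub`. The import is kept inside the
    function so that defining this helper has no side effects.
    """
    from huggingface_hub import snapshot_download
    return snapshot_download(repo_id=REPO_ID, local_dir=local_dir)

# Not called automatically: the download is very large.
# path = download_model()
```

At 560 billion parameters, the checkpoint would be on the order of a terabyte if stored in 16-bit precision, so check available disk space and bandwidth before calling `download_model()`.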

Application Scenarios of LongCat-Flash-Omni

- Intelligent Customer Service: Enables 24/7 multimodal support through text, voice, and image interaction for instant, human-like responses.

- Video Content Creation: Automatically generates video scripts, subtitles, and media content to boost creative productivity.

- Smart Education: Delivers personalized learning materials with speech narration, image presentation, and text interaction for diverse teaching needs.

- Intelligent Office: Supports meeting transcription, document generation, and image recognition to improve efficiency and collaboration.

- Autonomous Driving: Processes visual and video data to analyze road conditions in real time, enhancing driver assistance systems.
