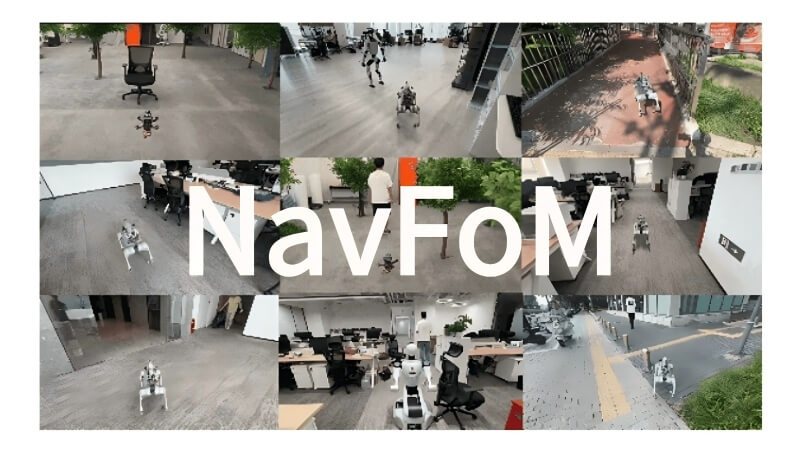

NavFoM – Surround-View Navigation Foundation Model Developed by Galaxy Universal

What is NavFoM?

NavFoM (Navigation Foundation Model) is presented as the world’s first cross-embodiment, all-domain surround-view navigation foundation model. It was jointly developed by Galaxy Universal, Peking University, the University of Adelaide, and Zhejiang University. It works in both indoor and outdoor environments and can navigate zero-shot in previously unseen settings. NavFoM supports multiple navigation tasks, such as goal following and autonomous navigation driven by natural-language instructions. It can be quickly adapted to various embodiments, including quadruped robots, wheeled humanoids, drones, and autonomous vehicles. At its core, NavFoM introduces two key innovations, TVI Tokens and the BATS strategy, and establishes a new universal paradigm:

“Video Stream + Text Instruction → Action Trajectory”. This paradigm enables end-to-end navigation from perception to action without chaining separate, hand-designed modules.
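The paradigm can be read as a single function signature: a surround-view video stream plus an instruction goes in, and a trajectory comes out. The project's code is listed as not yet released, so the sketch below is a hypothetical typed interface and not NavFoM's API. The names `Observation`, `NavigationPolicy`, `StraightLinePolicy`, and the `(x, y, yaw)` waypoint convention are all assumptions. The stub policy stands in for the learned model and simply drives straight ahead.

```python
from dataclasses import dataclass
from typing import List, Protocol, Tuple

# Hypothetical placeholder for one camera image (rows of pixel intensities).
Image = List[List[float]]
# Hypothetical waypoint convention: (x forward, y left, yaw) in metres/radians, robot frame.
Waypoint = Tuple[float, float, float]


@dataclass
class Observation:
    """Paradigm inputs: a surround-view video stream plus a text instruction."""
    frames: List[List[Image]]      # frames[t][v] = image from camera v at timestep t
    camera_azimuths: List[float]   # mounting angle of each camera, in radians
    instruction: str

    def __post_init__(self) -> None:
        # Every timestep must provide exactly one image per configured camera.
        for t, views in enumerate(self.frames):
            if len(views) != len(self.camera_azimuths):
                raise ValueError(
                    f"timestep {t}: expected {len(self.camera_azimuths)} views, got {len(views)}"
                )


class NavigationPolicy(Protocol):
    """Paradigm output: an action trajectory predicted directly from the inputs."""

    def predict(self, obs: Observation) -> List[Waypoint]:
        ...


class StraightLinePolicy:
    """Stand-in for a learned model (assumption): ignores the inputs, drives straight."""

    def __init__(self, num_waypoints: int = 5, step: float = 0.5) -> None:
        self.num_waypoints = num_waypoints
        self.step = step

    def predict(self, obs: Observation) -> List[Waypoint]:
        return [(self.step * (i + 1), 0.0, 0.0) for i in range(self.num_waypoints)]


def run(policy: NavigationPolicy, obs: Observation) -> List[Waypoint]:
    # One end-to-end call: no separate mapping, planning, or control stages.
    return policy.predict(obs)
```

Any model that implements `predict` with this signature could be swapped in for the stub without changing the calling code.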

Main Features of NavFoM

- Full-Scene Support: NavFoM operates in both indoor and outdoor environments. It achieves zero-shot navigation in unseen settings without additional mapping or data collection, demonstrating strong environmental adaptability.
- Multi-Task Capability: Supports diverse navigation tasks, such as goal following and autonomous navigation driven by natural-language instructions, so it can respond flexibly to a wide range of commands.
- Cross-Embodiment Adaptability: Adapts easily and at low cost to various embodiments, including quadruped robots, wheeled or legged humanoids, drones, and vehicles, which makes it highly scalable.
- Technical Innovations: Uses TVI Tokens (Temporal-Viewpoint-Indexed Tokens) to help the model understand when each observation was captured and from which camera direction. Uses the BATS strategy (Budget-Aware Token Sampling) to keep performance robust under limited computing resources. Together they improve both performance and efficiency.
- Unified Paradigm: Establishes a new universal paradigm, “Video Stream + Text Instruction → Action Trajectory”. It integrates vision, language, and motion into a single end-to-end process of seeing, understanding, and acting, which simplifies traditional navigation pipelines.
- Extensive Dataset Construction: Builds a large cross-task dataset of around 8 million navigation samples spanning different tasks and embodiments, along with 4 million open Q&A pairs. This provides rich training data that improves generalization.
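The article does not specify how TVI Tokens are constructed. The following is a minimal sketch under one assumption: each (timestep, camera) pair gets a single indicator token, prepended to that camera's visual tokens. The token is built by summing a sinusoidal encoding of the frame index with a periodic encoding of the camera's azimuth. The function names and the encoding scheme are hypothetical, not NavFoM's implementation.

```python
import math
from typing import List

Vector = List[float]


def time_encoding(t: int, dim: int, max_period: float = 10000.0) -> Vector:
    """Transformer-style sinusoidal encoding of the frame index t."""
    half = dim // 2
    freqs = [1.0 / (max_period ** (k / half)) for k in range(half)]
    return [math.sin(t * f) for f in freqs] + [math.cos(t * f) for f in freqs]


def view_encoding(azimuth: float, dim: int) -> Vector:
    """Periodic encoding of a camera azimuth, so 0 and 2*pi give the same vector."""
    half = dim // 2
    return [math.sin((m + 1) * azimuth) for m in range(half)] + [
        math.cos((m + 1) * azimuth) for m in range(half)
    ]


def tvi_token(t: int, azimuth: float, dim: int) -> Vector:
    """Hypothetical TVI token: time and viewpoint encodings summed elementwise."""
    if dim % 2:
        raise ValueError("dim must be even")
    return [a + b for a, b in zip(time_encoding(t, dim), view_encoding(azimuth, dim))]


def build_sequence(
    visual_tokens: List[List[List[Vector]]], azimuths: List[float], dim: int
) -> List[Vector]:
    """Flatten visual_tokens[t][v] (patch tokens of camera v at time t) into one
    sequence, prepending a TVI token to each (t, v) group."""
    seq: List[Vector] = []
    for t, views in enumerate(visual_tokens):
        for v, patches in enumerate(views):
            seq.append(tvi_token(t, azimuths[v], dim))  # marks "when" and "from where"
            seq.extend(patches)
    return seq
```

The azimuth encoding is periodic by design in this sketch. A camera at 0 rad and one at 2π rad face the same way, so they should receive the same viewpoint code.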

Technical Principles of NavFoM

- TVI Tokens (Temporal-Viewpoint-Indexed Tokens): Encode both temporal and viewpoint information. This lets the model reason about when each observation was captured and from which direction, which supports effective navigation in dynamic environments.
- BATS Strategy (Budget-Aware Token Sampling): A compute-efficient token sampling strategy that keeps performance robust under a limited computational budget, improving real-world deployability.
- End-to-End Universal Paradigm: Follows the paradigm “Video Stream + Text Instruction → Action Trajectory”. It integrates perception, language understanding, and motion generation in one unified framework that maps perception directly to action.
- Cross-Task Dataset: Incorporates approximately 8 million navigation samples and 4 million open-domain QA pairs. This provides diverse, large-scale data that improves generalization across tasks and embodiments.
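The article describes BATS only as budget-aware token sampling. The following is a minimal sketch under one assumption: BATS decides which past frames to keep so that the total visual-token count stays within a fixed budget. In this sketch, recent frames are favoured and the newest frame is always kept. The selection step uses a swapped-in technique: Efraimidis–Spirakis weighted sampling without replacement with exponential recency weights. It is not the published BATS algorithm, and `decay`, `seed`, and the function name are hypothetical.

```python
import math
import random
from typing import List


def budget_aware_sample(
    num_frames: int,
    tokens_per_frame: int,
    token_budget: int,
    decay: float = 3.0,
    seed: int = 0,
) -> List[int]:
    """Pick which frames (0 = oldest, num_frames - 1 = newest) fit a token budget.

    Hypothetical sketch: recency-weighted sampling without replacement,
    not NavFoM's published BATS algorithm.
    """
    if tokens_per_frame <= 0 or token_budget < tokens_per_frame:
        raise ValueError("the budget must fit at least one frame")
    max_frames = token_budget // tokens_per_frame
    if num_frames <= max_frames:
        return list(range(num_frames))  # everything fits; nothing to drop

    rng = random.Random(seed)
    newest = num_frames - 1
    keyed = []
    for i in range(newest):  # the newest frame is always kept, so skip it here
        age = newest - i
        # Assumed recency weight: decays exponentially with the frame's age.
        # The floor avoids division by zero if exp() underflows for large decay.
        weight = max(math.exp(-decay * age / num_frames), 1e-12)
        # Efraimidis-Spirakis key u ** (1 / w): taking the k largest keys draws k
        # items without replacement, each step proportional to weight among the rest.
        keyed.append((rng.random() ** (1.0 / weight), i))
    keyed.sort(reverse=True)
    chosen = [i for _, i in keyed[: max_frames - 1]]
    return sorted(chosen + [newest])
```

For a surround-view rig, `tokens_per_frame` would be the number of cameras times the tokens per image (for example, 4 × 64 = 256). Raising `decay` concentrates the budget on recent history, and lowering it spreads the kept frames more evenly over the episode.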

Project Links

(Not yet released)

Application Scenarios of NavFoM

- Robotic Navigation: Enables robots to navigate autonomously and follow targets in complex environments such as malls and airports, supporting service and guidance tasks driven by natural-language commands.
- Autonomous Driving: Strengthens decision-making and navigation for autonomous vehicles in complex traffic conditions, improving safety and reliability.
- Drone Navigation: Gives drones autonomous flight capabilities in challenging terrain and environments, supporting applications such as logistics delivery, environmental monitoring, and aerial surveying.
- Humanoid Robots: Supports both wheeled and legged humanoids, allowing them to adapt to varied environments and perform complex navigation and interaction tasks.
- Application Model Development: Developers can use NavFoM as a foundation model and fine-tune it to build domain-specific navigation applications, extending its use across industries and research fields.
