TapNow – an AI visual content creation platform that offers multiple preset workflows

What is TapNow?

TapNow is an AI visual content creation platform that integrates multiple image and video generation models and packages them as preset workflows. Users upload their materials and select a matching workflow to generate creative content such as advertising images, dynamic scenes, and realistic figurines. The platform has a simple visual interface suited to both beginners and professionals. TapNow’s creative process is open: users can view, learn from, and replicate the workflows behind published creations. This lowers the barrier to entry and speeds up production.

Main Features of TapNow

Preset Workflows:

Offers a variety of preset workflows, such as e-commerce ad generation, outfit swapping for selfies, pet-to-figurine generation, dynamic scene creation, and storyboard image generation. Users can simply upload materials and select a workflow to quickly produce high-quality outputs.

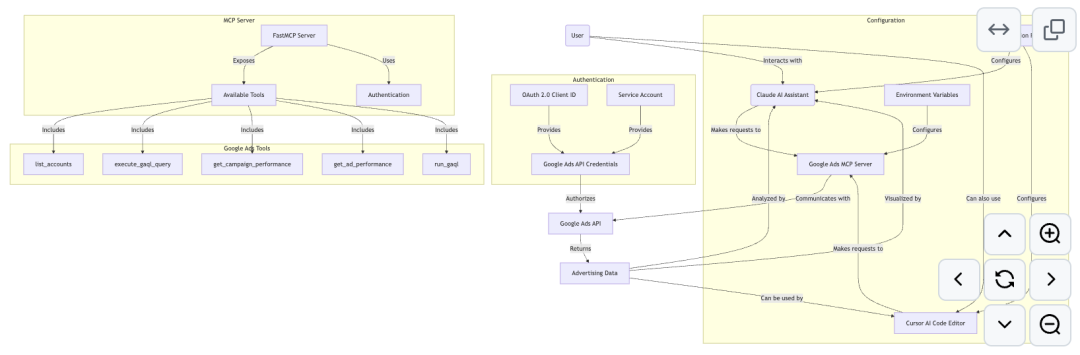

Powerful Generation Engine:

Integrates multiple state-of-the-art image and video generation models (such as Veo, Jimeng, Midjourney, and Imagen). Users can flexibly select models according to their creative needs, meeting diverse production requirements.

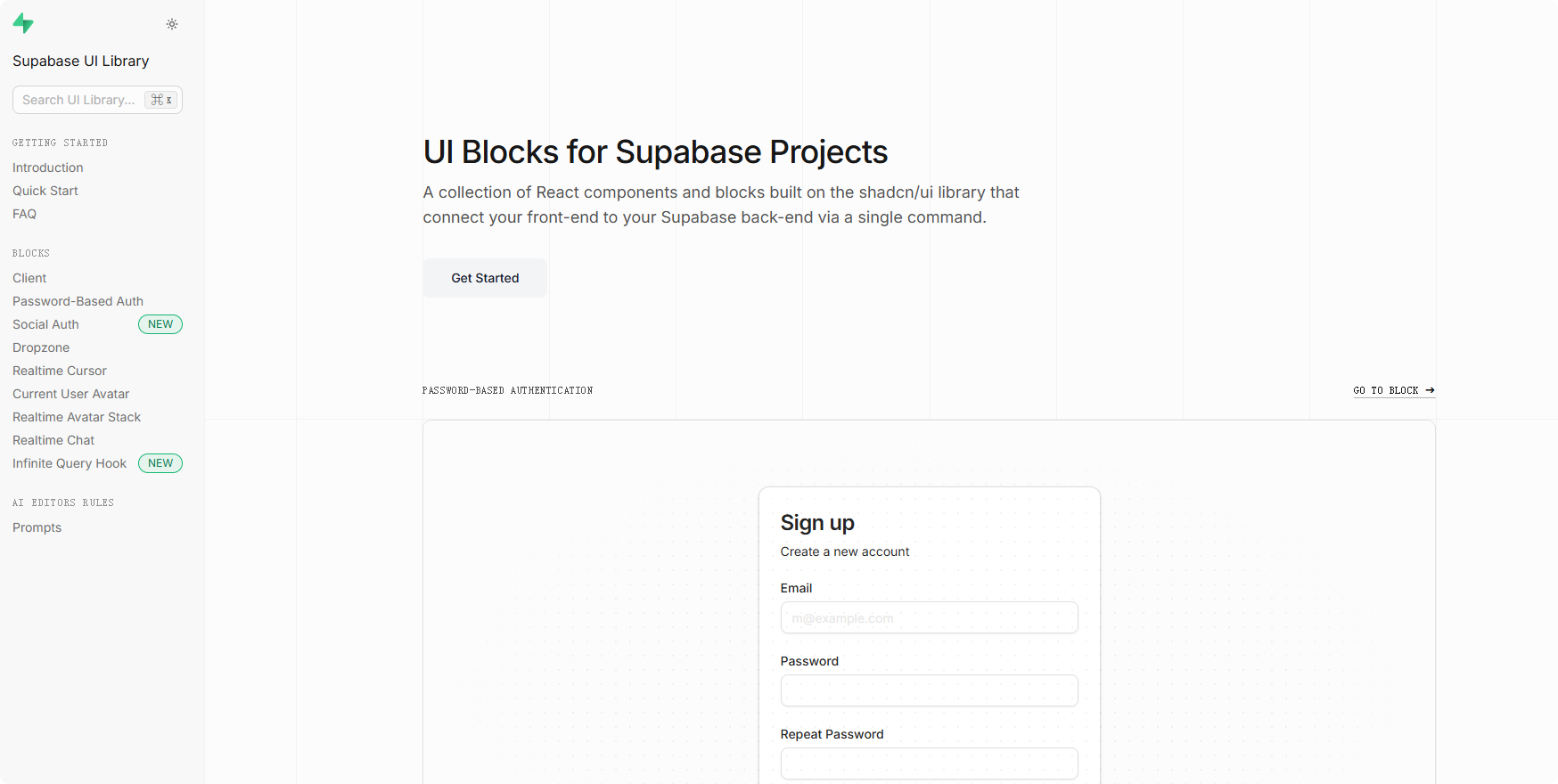

Visual Creation Interface:

Features a node-based visual editor that allows users to complete creations through drag-and-drop and click operations without the need for complex prompts, lowering the creative threshold.

Open Creation Process:

Creators can view and learn from others’ successful projects, including prompt engineering methods and generation logic. They can clone and modify projects for further learning and experimentation.

Auxiliary Functions:

Includes additional features such as sketch-to-image, sketch-to-video, pose control, and image/video enhancement, providing greater flexibility and improved output quality.

How to Use TapNow

- Register and Log In: Visit the TapNow official website https://www.tapnow.ai/zn to register and log in.

- Create a Project: After logging in, click the homepage icon and select “New Project” to enter the creation interface.

- Select a Workflow: In the workflow panel, choose the desired function module (e.g., e-commerce ad generation, outfit swapping, etc.).

- Upload Materials: Upload the required images, videos, or other assets according to the workflow’s requirements.

- Enter Prompts: Input your creative ideas or style preferences in the prompt box. Reference example prompts if needed.

- Click Generate: Once materials and prompts are ready, click the “Generate” button and wait for the output.

- View and Edit: Review the generated results; if unsatisfied, adjust prompts or re-upload materials for refinement.

- Save and Share: When satisfied, click “Save” to download the work locally or share it on social media.

Application Scenarios of TapNow

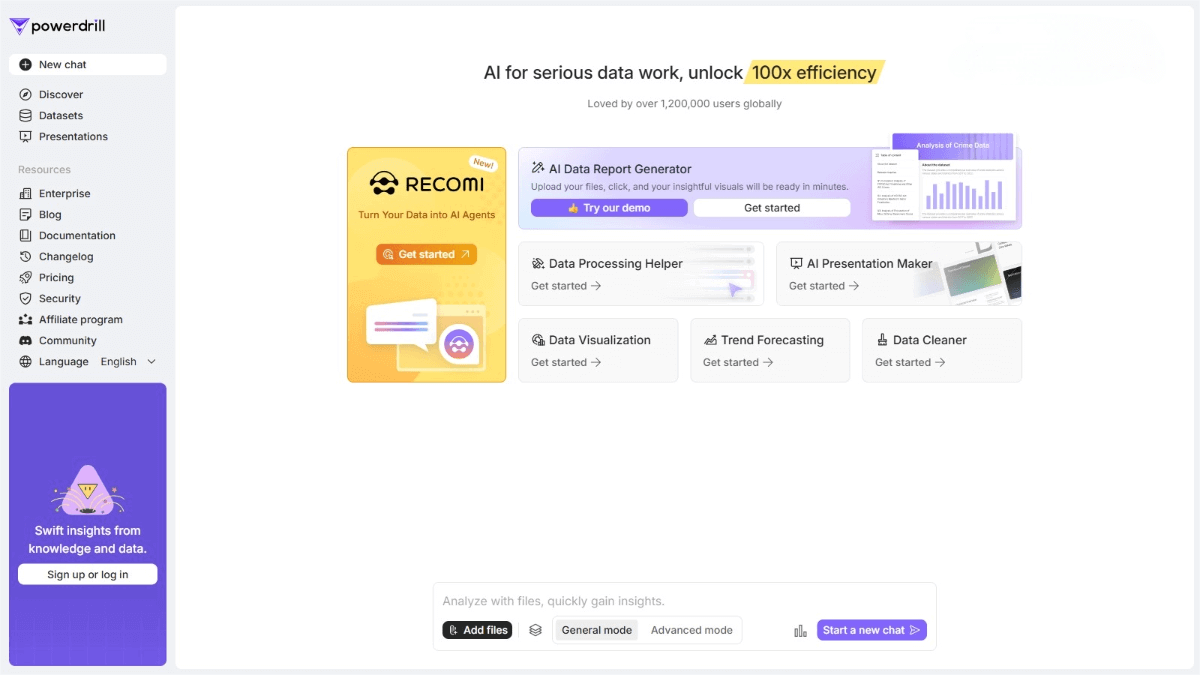

E-commerce and Advertising:

Quickly transform ordinary product images into professional advertising visuals for e-commerce platforms and social media campaigns.

Film and Animation:

Upload character images and rough sketches to generate smooth dynamic scenes or animation clips, supporting short video creation and film production.

Design and Creativity:

Generate ready-to-use graphic design materials for ads, banners, and social media in one click, enhancing design efficiency.

Education and Learning:

Produce educational visuals, videos, or animations to help students better understand and retain knowledge, improving teaching outcomes.

Personal Creation:

Upload selfies to try on different outfits and hairstyles, which is useful for fashion influencers or anyone exploring new styles.
