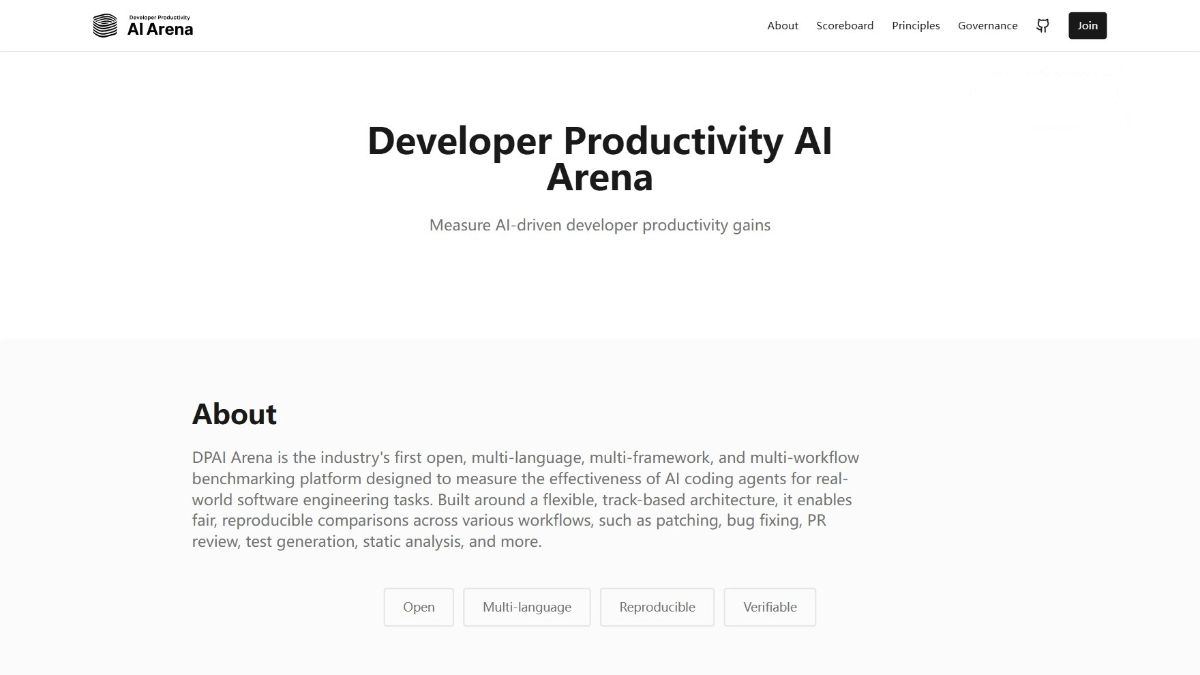

DPAI Arena – An AI Coding Agent Benchmarking Platform Launched by JetBrains

What is DPAI Arena?

DPAI Arena is an open benchmarking platform for AI coding agents, launched by JetBrains in collaboration with the Linux Foundation. It measures how effectively AI tools handle real development work across multiple programming languages, frameworks, and workflows. Built on a multi-track architecture, it covers real-world workflows such as issue fixing, PR review, and test generation, and provides a transparent, extensible evaluation system. Through community collaboration, DPAI Arena promotes transparency and trust in AI development tools, helping developers and enterprises evaluate and choose AI-assisted coding solutions.

Main Features of DPAI Arena

Support for Multiple Languages and Frameworks

Supports evaluating AI tools across a variety of programming languages (such as Java, Python, and JavaScript) and frameworks (such as Spring and Quarkus).

Multi-track Architecture

Simulates real development workflows through tracks such as Issue → Patch, PR Review, Coverage, and Static Analysis, enabling comprehensive assessment of how AI performs in actual software development tasks.

Transparent and Extensible Evaluation System

Provides a transparent evaluation pipeline and reproducible infrastructure. The platform supports community contributions of datasets and evaluation rules, ensuring openness and inclusiveness.

Quality Assessment

Focuses on task completion rates and uses an LLM-driven evaluation framework to assess whether AI-generated code follows best practices and maintains high quality.

Technical Principles of DPAI Arena

Multi-track Architecture

DPAI Arena uses a multi-track architecture to simulate real software development workflows. Each track corresponds to a specific type of development task: issue fixing (Issue → Patch), code review (PR Review), test coverage improvement (Coverage), and static code analysis (Static Analysis). Because the tracks span several phases of software development, the results reflect how AI coding agents perform in real-world environments more accurately than a single-task benchmark would.
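
To make the track idea concrete, here is a minimal sketch of how per-track results might be modeled and summarized. The track names come from the list above; the `Track` enum, the `TaskResult` record, and `completion_rate_by_track` are hypothetical names chosen for illustration and are not taken from the DPAI Arena codebase.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Track(Enum):
    # Track names as listed in the article; this enum is an illustrative assumption.
    ISSUE_TO_PATCH = "Issue -> Patch"
    PR_REVIEW = "PR Review"
    COVERAGE = "Coverage"
    STATIC_ANALYSIS = "Static Analysis"


@dataclass(frozen=True)
class TaskResult:
    track: Track
    task_id: str  # hypothetical task identifier
    passed: bool  # whether the agent completed the task


def completion_rate_by_track(results):
    """Return {Track: fraction of passed tasks} for every track that has results."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for result in results:
        totals[result.track] += 1
        if result.passed:
            passes[result.track] += 1
    return {track: passes[track] / totals[track] for track in totals}


# Made-up results, used only to show the shape of the output.
results = [
    TaskResult(Track.ISSUE_TO_PATCH, "task-1", True),
    TaskResult(Track.ISSUE_TO_PATCH, "task-2", False),
    TaskResult(Track.PR_REVIEW, "task-3", True),
]
rates = completion_rate_by_track(results)
```

Keeping the rates separate per track, rather than folding them into one number, matches the idea that an agent can be strong at patching issues and weak at reviewing pull requests.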

Dataset Management

Dataset management in DPAI Arena emphasizes diversity and relevance to modern development needs. The platform allows community members and vendors to contribute domain-specific datasets, supports BYOD (Bring Your Own Dataset), and updates datasets regularly to match current development practices. This flexible approach ensures that the benchmarks cover a wide range of languages, frameworks, and tech stacks, providing comprehensive evaluation scenarios for AI coding agents.
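
As an illustration of what a contributed (BYOD) dataset entry could look like, the sketch below validates a single record before it is accepted. The article does not describe the real dataset schema, so every field name, the `KNOWN_TRACKS` identifiers, and the `validate_entry` helper are assumptions made for this example.

```python
# Hypothetical schema: the real DPAI Arena dataset format may differ.
REQUIRED_FIELDS = ("repo_url", "language", "framework", "track", "task_description")
KNOWN_TRACKS = {"issue_to_patch", "pr_review", "coverage", "static_analysis"}


def validate_entry(raw):
    """Return a list of problems found in one dataset entry; an empty list means it is valid."""
    problems = []
    for name in REQUIRED_FIELDS:
        value = raw.get(name)
        if not isinstance(value, str) or not value.strip():
            problems.append(f"missing or empty field: {name}")
    track = raw.get("track")
    # Only check the track name when the field itself is present and non-empty.
    if isinstance(track, str) and track.strip() and track not in KNOWN_TRACKS:
        problems.append(f"unknown track: {track}")
    return problems


# Placeholder entry; the repository URL is not a real project.
entry = {
    "repo_url": "https://example.com/acme/shop.git",
    "language": "Java",
    "framework": "Spring",
    "track": "issue_to_patch",
    "task_description": "Fix the failing checkout test",
}
problems = validate_entry(entry)
```

Returning a list of problems instead of raising on the first one lets a contributor fix an entire submission in a single pass.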

Evaluation Mechanism

DPAI Arena introduces an LLM-based quality evaluation framework. LLM “judges” score AI-generated code across multiple dimensions, such as adherence to best practices and maintainability. Looking at quality in addition to task completion gives a fuller picture of how AI tools perform in real development contexts.
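
One simple way to combine scores from several judges is to average them per dimension and then average the dimensions. The sketch below does only that. The article does not say how DPAI Arena aggregates judge output, so the dimension identifiers, the 0 to 1 score range, and the `aggregate_judgments` function are assumptions. No LLM is called here; the scores are hard-coded placeholders.

```python
from statistics import mean

# Dimensions named in the article; the identifiers are illustrative.
DIMENSIONS = ("best_practices", "maintainability")


def aggregate_judgments(judgments):
    """Average scores per dimension across judges, then average the dimensions.

    judgments: list of dicts, one per judge, mapping dimension name to a
    score in the range 0 to 1 (an assumed scale).
    """
    per_dimension = {
        dimension: mean(judgment[dimension] for judgment in judgments)
        for dimension in DIMENSIONS
    }
    overall = mean(per_dimension.values())
    return per_dimension, overall


# Placeholder scores standing in for the output of two LLM judges.
judgments = [
    {"best_practices": 0.5, "maintainability": 0.25},
    {"best_practices": 1.0, "maintainability": 0.75},
]
per_dimension, overall = aggregate_judgments(judgments)
```

An unweighted mean is the simplest choice. A real evaluation might weight dimensions differently or report judge disagreement alongside the average.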

Infrastructure

DPAI Arena’s infrastructure is designed for transparency, reproducibility, and scalability. All evaluation processes, scoring rules, and infrastructure components are open and verifiable to ensure credible results. The platform also integrates with CI/CD systems such as GitHub Actions and TeamCity, making it easy for developers to incorporate into existing workflows.
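
In a CI/CD pipeline, benchmark results are typically consumed by a small gate step that fails the build when a score drops below an agreed minimum. The following is a sketch of such a gate under stated assumptions: the score dictionary format, the track identifiers, and `check_thresholds` are hypothetical and do not describe an official DPAI Arena integration.

```python
def check_thresholds(scores, minimums):
    """Return failure messages for tracks whose score is missing or below its minimum."""
    failures = []
    for track, minimum in minimums.items():
        score = scores.get(track)
        if score is None:
            failures.append(f"{track}: no score reported")
        elif score < minimum:
            failures.append(f"{track}: {score:.2f} is below the minimum {minimum:.2f}")
    return failures


# Made-up numbers; in CI these would be read from the benchmark run's output.
scores = {"issue_to_patch": 0.62, "pr_review": 0.40}
minimums = {"issue_to_patch": 0.50, "pr_review": 0.45, "coverage": 0.30}

failures = check_thresholds(scores, minimums)
# A CI step would pass this value to sys.exit() so a non-zero code fails the job.
exit_code = 1 if failures else 0
```

A step like this can run in GitHub Actions or TeamCity as an ordinary script, since both treat a non-zero exit code as a failed step.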

Project Links

- Official Website: https://dpaia.dev/
- GitHub Repository: https://github.com/dpaia

Application Scenarios of DPAI Arena

Developer Tool Evaluation

Developers can use DPAI Arena to compare the performance of different AI coding tools on standardized benchmarks and choose the ones that best improve their productivity.

Benchmark Contributions by Tech Vendors

Vendors can contribute domain-specific benchmarks and datasets to showcase the strengths of their tools and support the community.

Enterprise-level Tool Evaluation

Enterprises can use DPAI Arena to evaluate AI tools under real workloads, ensuring they meet development needs and quality standards.

Research and Innovation

Research institutions and academia can use DPAI Arena to study the effectiveness of AI coding agents, identify limitations, and explore new research directions.
