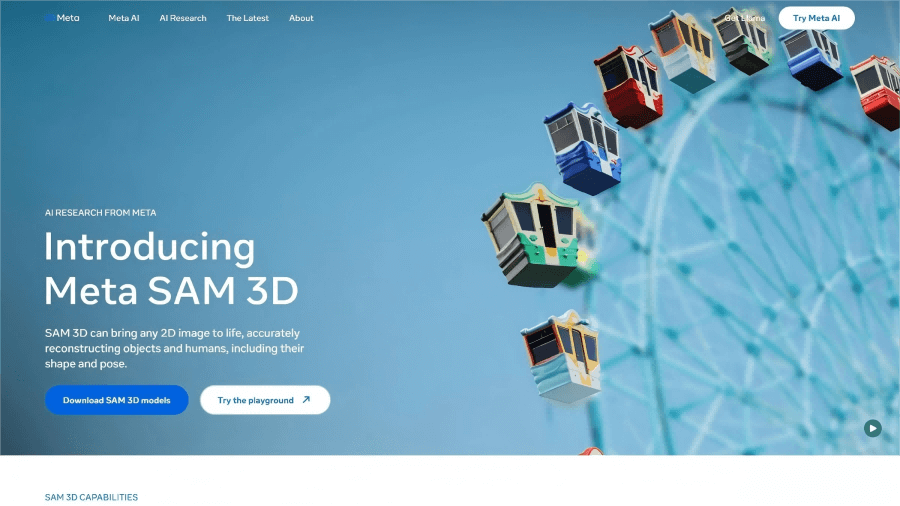

What is SAM 3D?

SAM 3D is an advanced 3D generation model released by Meta, consisting of two sub-models: SAM 3D Objects and SAM 3D Body. SAM 3D Objects reconstructs 3D models of objects and scenes from a single image, supporting multi-view consistency and handling complex occlusions. SAM 3D Body focuses on high-precision recovery of human poses, skeletons, and meshes, suitable for virtual humans and motion capture scenarios. Trained on large-scale datasets with multi-task learning, SAM 3D offers strong generalization and robustness. It can be applied across digital twins, robotic perception, AR/VR content generation, and other fields, providing a solid foundation for 3D visual applications.

Key Features of SAM 3D

SAM 3D Objects

- 3D Reconstruction from a Single Image: Predicts the 3D structure of objects, including depth estimation, mesh reconstruction, and material/surface appearance.
- Multi-view Consistency: Generated 3D models remain consistent across different viewpoints, suitable for multi-view interaction.
- Complex Scene Handling: Supports reconstruction under complex occlusion, non-frontal views, and low-light conditions, with strong generalization.
- Applications: Digital twins, robotic perception, indoor/outdoor scene reconstruction, autonomous driving environment understanding, etc.
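To make the link between depth estimation and 3D structure concrete, here is a minimal Python sketch that back-projects a depth map into camera-space points using the standard pinhole camera model. It is not SAM 3D Objects' actual pipeline; the function name `unproject_depth`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the tiny depth map are all assumptions made for illustration.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def unproject_depth(depth: List[List[float]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point3D]:
    """Back-project a depth map into camera-space 3D points (pinhole model).

    depth[v][u] is the distance along the camera z-axis at pixel (u, v).
    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    All values here are illustrative assumptions, not SAM 3D outputs.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:  # treat non-positive depth as missing and skip it
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Hypothetical 2x2 depth map with one missing pixel (depth 0.0).
depth_map = [
    [2.0, 2.0],
    [0.0, 4.0],
]
cloud = unproject_depth(depth_map, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(cloud)
```

A point cloud like this is only the visible surface. Recovering a complete mesh, including occluded parts, is the harder step that a learned model has to fill in.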

SAM 3D Body

- Human Pose and Mesh Recovery: Recovers 3D human poses, skeletal structure, and animatable meshes from a single image, with high-precision hand, foot, and limb keypoint reconstruction.
- High Robustness: Handles non-standard poses, occlusion, and partially out-of-frame scenarios.
- Applications: Virtual human modeling, motion capture, digital asset creation, game development, etc.
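As a rough illustration of what a "skeletal structure" can look like as data, the sketch below stores a skeleton as a parent index per joint plus a parent-relative offset, then accumulates offsets down the hierarchy to get joint positions. This is a simplified assumption for illustration: joint rotations are omitted, the joint names and offsets are hypothetical, and it does not represent the rig format SAM 3D Body actually outputs.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def joint_world_positions(parents: List[int], offsets: List[Vec3]) -> List[Vec3]:
    """Accumulate parent-relative offsets down a joint hierarchy.

    parents[i] is the index of joint i's parent, or -1 for the root.
    Parents must be listed before their children. Rotations are ignored,
    so this only describes a rest pose.
    """
    world: List[Vec3] = []
    for i, (parent, off) in enumerate(zip(parents, offsets)):
        if parent == -1:
            world.append(off)  # the root offset is already in world space
            continue
        if parent >= i:
            raise ValueError("parents must appear before their children")
        px, py, pz = world[parent]
        world.append((px + off[0], py + off[1], pz + off[2]))
    return world


# Hypothetical 4-joint chain: pelvis -> spine -> shoulder -> hand.
names = ["pelvis", "spine", "shoulder", "hand"]
parents = [-1, 0, 1, 2]
offsets = [(0.0, 1.0, 0.0), (0.0, 0.5, 0.0), (0.25, 0.25, 0.0), (0.5, 0.0, 0.0)]
positions = joint_world_positions(parents, offsets)
for name, pos in zip(names, positions):
    print(name, pos)
```

An animatable mesh adds per-joint rotations and skinning weights on top of a hierarchy like this, which is what lets a recovered pose drive a character.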

Technical Principles of SAM 3D

- Multi-Head Prediction Structure: SAM 3D outputs multiple modalities (depth, normals, masks, meshes) simultaneously, improving reconstruction accuracy and completeness, especially in complex scenes and occlusions.
- Large-Scale Data Training & Weak Supervision: Combines human annotations and AI-generated data, using weakly supervised learning to reduce reliance on high-quality labels while enhancing generalization.
- Transformer Encoder-Decoder Architecture: SAM 3D Body employs a Transformer structure, enabling prompt-based predictions (e.g., masks and keypoints) for high-precision human pose and mesh reconstruction under complex poses and occlusion.
- Innovative Data Annotation Engine: Human annotators evaluate model-generated 3D data, enabling efficient large-scale labeling of real-world images and addressing the scarcity of 3D datasets.
- Optimization & Efficient Inference: Uses techniques such as diffusion models to speed up inference, reduce memory usage, and achieve real-time reconstruction on standard hardware.
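The multi-head idea in the list above can be sketched in a few lines of Python: one shared encoder computes features once, and several small heads turn those features into different outputs. This is a toy under stated assumptions, not SAM 3D's architecture. The class `MultiHeadPredictor`, the weight values, and the head names are hypothetical, and plain linear layers stand in for the real network.

```python
from typing import Dict, List

Matrix = List[List[float]]


def linear(weights: Matrix, x: List[float]) -> List[float]:
    """Matrix-vector product: one output value per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


class MultiHeadPredictor:
    """Toy shared-encoder model with one linear head per output modality."""

    def __init__(self, encoder_w: Matrix, heads: Dict[str, Matrix]) -> None:
        self.encoder_w = encoder_w
        self.heads = heads

    def __call__(self, x: List[float]) -> Dict[str, List[float]]:
        # Shared features are computed once (linear layer followed by ReLU).
        features = [max(0.0, f) for f in linear(self.encoder_w, x)]
        # Every head reads the same features and emits its own modality.
        return {name: linear(w, features) for name, w in self.heads.items()}


# Hypothetical weights, chosen only so the arithmetic is easy to follow.
model = MultiHeadPredictor(
    encoder_w=[[1.0, 0.0], [0.0, -1.0]],
    heads={
        "depth": [[2.0, 0.0]],
        "normal": [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
        "mask": [[0.5, 0.5]],
    },
)
outputs = model([3.0, 2.0])
print(outputs)
```

Because all heads share one set of features, supervision on one modality (for example, masks) can also shape the features the other heads depend on, which is the usual motivation for this kind of design.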

Project Links for SAM 3D

- Official Website: https://ai.meta.com/sam3d/
- GitHub Repositories:
  - SAM 3D Body: https://github.com/facebookresearch/sam-3d-body
  - SAM 3D Objects: https://github.com/facebookresearch/sam-3d-objects
- Technical Report (SAM 3D Body): https://ai.meta.com/research/publications/sam-3d-body-robust-full-body-human-mesh-recovery/

Application Scenarios of SAM 3D

- Indoor/Outdoor Scene Reconstruction: Reconstruct 3D models of buildings, interior layouts, and other environments from a single photo for virtual design, architectural visualization, and digital twins.
- Autonomous Driving Environment Understanding: Helps autonomous systems quickly interpret complex 3D environments, improving perception capabilities.
- Single-Image Human Reconstruction: Generates high-precision human poses and meshes for virtual character modeling.
- Low-Cost Motion Capture: Achieves motion capture from a single image without complex equipment, suitable for film, game pre-production, etc.
- 3D Model Generation: Quickly generates 3D models from a single image for AR/VR applications, enhancing content creation efficiency.
- Virtual Scene Construction: Integrates with other models (e.g., SAM) to build realistic virtual scenes for immersive experiences.
