GPT-5.1-Codex-Max – an intelligent programming model released by OpenAI

What is GPT-5.1-Codex-Max?

GPT-5.1-Codex-Max is an intelligent programming model released by OpenAI for complex, long-running development tasks. It is built on an updated reasoning model and uses a “compression” technique to work across multiple context windows. This lets it handle large tasks involving millions of tokens, such as project-level refactoring and deep debugging. It performs strongly on real-world software engineering tasks, including code review and frontend development, and works well in Windows environments. It also uses tokens more efficiently, which lowers development costs. The model is integrated into Codex, including the CLI, IDE extensions, cloud, and code review, with API access announced as coming soon.

Key Features of GPT-5.1-Codex-Max

Long-Duration Task Handling:

Described as the first model able to work across multiple context windows. Using compression, it stays coherent over long tasks, supporting project-level refactoring, deep debugging, and multi-hour continuous development.

Efficient Code Generation:

Performs well on real-world software engineering tasks such as code review, frontend development, and PR creation, producing high-quality code while reducing development costs.

Multi-Environment Support:

OpenAI's first model trained to operate in Windows environments, with improved collaboration in the Codex CLI across diverse development scenarios.

Improved Reasoning Efficiency:

At the same reasoning-effort setting as previous models, it achieves higher accuracy while consuming fewer reasoning tokens.

Security and Reliability:

Stronger performance on long-running reasoning tasks in cybersecurity, complemented by OpenAI security projects such as Aardvark.

Technical Principles of GPT-5.1-Codex-Max


“Compression” Across Multiple Context Windows:

When a session approaches its context window limit, the model compresses its history to free up space while retaining the critical context, so it can continue working on a long task without losing track of it.

Updated Reasoning Architecture:

Built on OpenAI’s latest foundational reasoning model and trained extensively on software engineering, mathematics, and research tasks, which improves its performance on complex problems.

Efficient Token Management:

Streamlines the reasoning process to avoid unnecessary token usage, improving efficiency and lowering costs, particularly for tasks that are not latency-sensitive.

Cross-Platform Optimization:

Trained specifically to operate in Windows environments, with improved collaboration in the Codex CLI for real-world development workflows.
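The “compression” principle described above can be illustrated with a minimal, hypothetical sketch of history compaction. It assumes a chat-style history of messages, approximates token counts by counting whitespace-separated words, and uses a placeholder `summarize` function where a real system would call a model. The names `Message`, `count_tokens`, `summarize`, and `compact` are illustrative assumptions, not OpenAI APIs, and this is not how GPT-5.1-Codex-Max implements compression internally.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str
    text: str


def count_tokens(messages: list[Message]) -> int:
    # Hypothetical stand-in for a real tokenizer: count whitespace-separated words.
    return sum(len(m.text.split()) for m in messages)


def summarize(messages: list[Message]) -> Message:
    # Hypothetical placeholder for a model-generated summary: keep only the
    # first sentence of each older message so the history visibly shrinks.
    parts = [m.text.split(".")[0].strip() for m in messages]
    return Message("system", "Summary of earlier work: " + "; ".join(parts))


def compact(history: list[Message], limit: int, keep_recent: int = 2) -> list[Message]:
    """Compress older messages once the history exceeds the token limit.

    The most recent `keep_recent` messages are kept verbatim; everything
    older is replaced by a single summary message.
    """
    if count_tokens(history) <= limit or len(history) <= keep_recent:
        return history
    older, recent = history[:-keep_recent], history[-keep_recent:]
    return [summarize(older)] + recent


# Usage: a long refactoring session that exceeds a tiny 20-"token" budget.
history = [
    Message("user", "Refactor the payment module. It has grown to 3000 lines."),
    Message("assistant", "Split billing into its own package. Updated 12 imports."),
    Message("assistant", "Ran the test suite. Two failures remain in test_refunds."),
    Message("assistant", "Fixed the refund rounding bug. All tests pass now."),
]
compacted = compact(history, limit=20)
print(len(compacted))  # 3
print(compacted[0].text)
```

The design choice here is to keep the newest messages verbatim, since they usually carry the state the agent is acting on, and to collapse only older turns into a summary.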

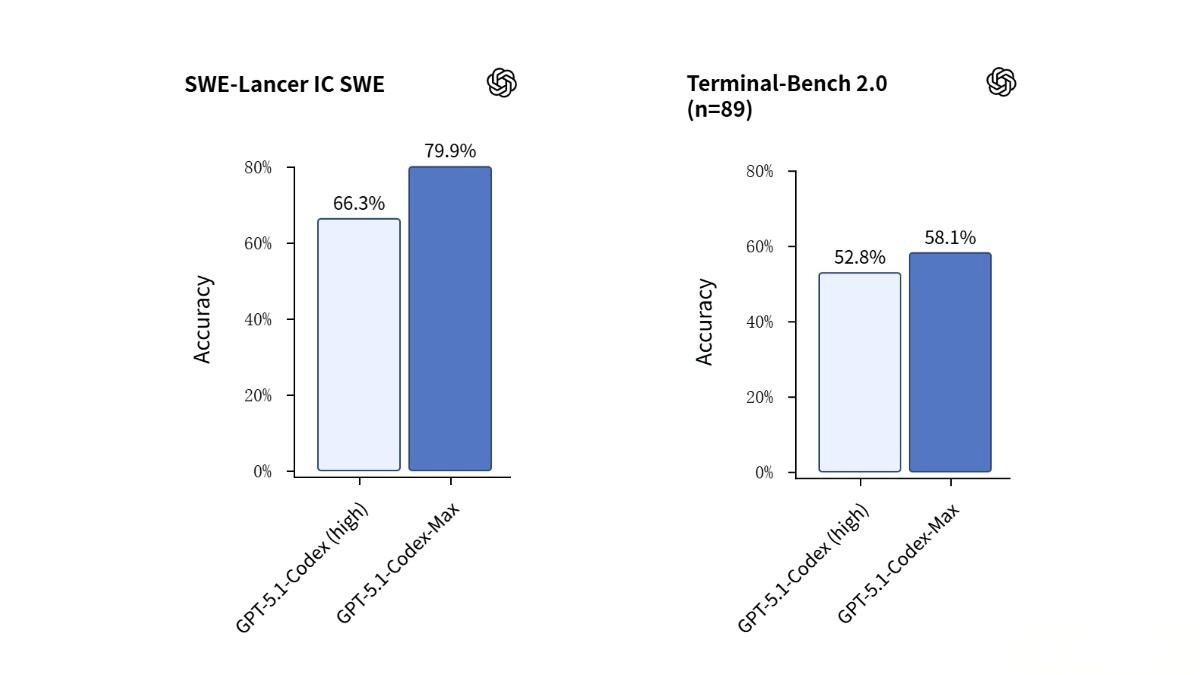

Performance

- SWE-Lancer IC SWE: accuracy improved from 66.3% to 79.9%
- Terminal-Bench 2.0: accuracy improved from 52.8% to 58.1%

Project Links

- Official website: https://openai.com/index/gpt-5-1-codex-max/

Application Scenarios

Code Refactoring:

Handles large-scale refactoring by using compression to work across multiple context windows, improving code structure and quality.

Code Debugging:

Performs deep debugging, continuously tracking and fixing complex issues, reducing debugging time and human effort.

Code Generation:

Generates high-quality frontend and backend code, improving developer efficiency and lowering development costs.

Code Review:

Automates code review, providing detailed feedback to help developers identify potential issues and improve code quality.

CI/CD Processes:

Automatically fixes code issues in continuous integration and deployment workflows, helping code pass tests and ship faster.
