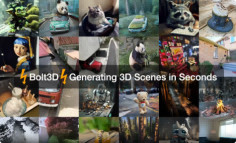

Bolt3D – A 3D scene generation technology jointly launched by the University of Oxford and Google

What is Bolt3D?

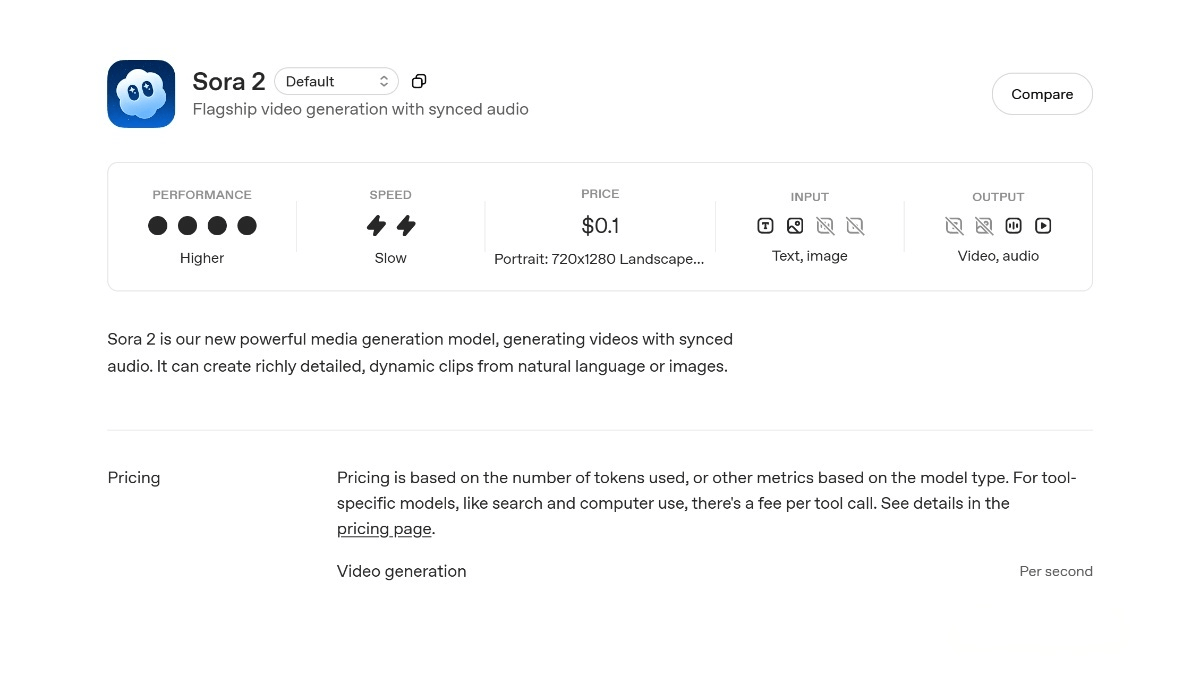

Bolt3D is a 3D scene generation method introduced jointly by Google Research, the Visual Geometry Group (VGG) at the University of Oxford, and Google DeepMind. It is a latent diffusion model that samples a 3D scene representation directly from one or more input images in under seven seconds on a single GPU. On an NVIDIA H100, the reported time to turn a photo into a complete 3D scene is 6.25 seconds.

Main features of Bolt3D

- Rapid 3D Scene Generation: Bolt3D is a feedforward generative method that samples a 3D scene representation directly from one or more input images. Generation takes about 6.25 seconds on a single NVIDIA H100 GPU.

- Multi-view Input and Generalization Ability: It supports varying numbers of input images, ranging from single-view to multi-view, and can generate content for unobserved regions, demonstrating strong generalization capabilities.

- High-Fidelity 3D Scene Representation: Scenes are represented with Gaussian Splatting, as 3D Gaussians arranged on 2D grids. Each Gaussian stores a position, a color, an opacity, and a covariance that sets its size and orientation, which yields high-quality 3D scenes.

- Real-time Interaction and Applications: Users can view and render the generated 3D scenes in real time within a browser. This technology has broad application prospects, including game development, virtual reality, augmented reality, architectural design, film and television production, and more.
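
The grid-of-Gaussians representation described above can be pictured with a small data structure. The following is a minimal sketch in plain Python, not Bolt3D's actual code: the class name `Gaussian`, its field names, the helper functions, and all placeholder values are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


@dataclass
class Gaussian:
    """One 3D Gaussian; field names are illustrative, not Bolt3D's."""
    position: Vec3    # centre of the Gaussian in world space
    color: Vec3       # RGB in [0, 1]
    opacity: float    # 0 = fully transparent, 1 = fully opaque
    covariance: Mat3  # symmetric 3x3 matrix giving size and orientation


def isotropic_covariance(radius: float) -> Mat3:
    """Covariance of a spherical Gaussian with the given standard deviation."""
    v = radius * radius
    return ((v, 0.0, 0.0), (0.0, v, 0.0), (0.0, 0.0, v))


def make_grid(height: int, width: int) -> List[List[Gaussian]]:
    """Build a height x width grid with one placeholder Gaussian per cell."""
    return [
        [
            Gaussian(
                position=(float(col), float(row), 1.0),  # placeholder layout
                color=(0.5, 0.5, 0.5),
                opacity=1.0,
                covariance=isotropic_covariance(0.01),
            )
            for col in range(width)
        ]
        for row in range(height)
    ]


def flatten(grid: List[List[Gaussian]]) -> List[Gaussian]:
    """Row-major list of all Gaussians, the form a renderer would consume."""
    return [g for row in grid for g in row]


grid = make_grid(height=4, width=6)
gaussians = flatten(grid)
print(len(gaussians))  # one Gaussian per grid cell: 4 * 6 = 24
```

Storing Gaussians on a 2D grid means an image-shaped network output can be read directly as a set of Gaussians, which is one plausible reason the representation suits a feedforward model.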

The Technical Principles of Bolt3D

- Geometric Multi-View Latent Diffusion Model: The authors train a multi-view latent diffusion model that jointly models images and 3D point clouds. It takes one or more images and their camera poses as input and learns the joint distribution of the target image, the target point cloud, and the source-view point clouds.

- Geometric VAE: A geometric Variational Autoencoder (VAE) is trained to jointly encode the point cloud and camera ray map of a single view into a geometric latent feature. It is optimized by minimizing a combination of the standard VAE objective and geometry-specific losses, which lets it compress point clouds with high precision.

- Gaussian Head Model: Given the camera, the generated image, and the point cloud, a multi-view feedforward Gaussian head model is trained to output the refined color, opacity, and covariance matrix of the 3D Gaussians, which are stored in splatter images.

- Large-Scale Multi-View Consistent Dataset: To train Bolt3D, the authors built a large-scale dataset of multi-view consistent 3D geometry and appearance by applying state-of-the-art dense reconstruction techniques to existing multi-view image datasets.

- Three-Stage Training Process: Training proceeds in three stages. First, the geometric VAE is trained, then the Gaussian head model, and finally the latent diffusion model.
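
To make the "camera ray map" and per-view "point cloud" inputs more concrete, here is a minimal sketch of how both can be laid out as pixel-aligned grids. It assumes a simple pinhole camera with the principal point at the image centre and the camera at the origin looking down +z. The article does not state Bolt3D's camera convention or ray parametrization, so the function names `ray_map` and `point_map`, the `focal` parameter, and the distance values are all hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def ray_map(height: int, width: int, focal: float) -> List[List[Vec3]]:
    """Unit ray direction per pixel for a pinhole camera looking down +z.

    Assumes the principal point sits at the image centre.
    """
    cx, cy = width / 2.0, height / 2.0
    rays = []
    for row in range(height):
        out_row = []
        for col in range(width):
            # Direction through the pixel centre, in camera coordinates.
            x = (col + 0.5 - cx) / focal
            y = (row + 0.5 - cy) / focal
            norm = math.sqrt(x * x + y * y + 1.0)
            out_row.append((x / norm, y / norm, 1.0 / norm))
        rays.append(out_row)
    return rays


def point_map(rays, distances, origin=(0.0, 0.0, 0.0)):
    """Pixel-aligned 3D points: origin + distance * ray, for every pixel."""
    return [
        [
            tuple(o + d * r for o, r in zip(origin, ray))
            for ray, d in zip(ray_row, dist_row)
        ]
        for ray_row, dist_row in zip(rays, distances)
    ]


rays = ray_map(height=2, width=2, focal=1.0)
distances = [[2.0, 2.0], [2.0, 2.0]]  # made-up distance along each ray
points = point_map(rays, distances)
```

Because both grids share the image's height and width, a point cloud and its ray map can be stacked channel-wise like an ordinary image, which is the kind of input an image-style encoder such as a VAE can consume.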

Bolt3D project links

- Official project website: https://szymanowiczs.github.io/bolt3d

- arXiv technical paper: https://arxiv.org/pdf/2503.14445

Application scenarios of Bolt3D

- Game Development: Quickly generate 3D scenes for games, reducing development time and cost.

- Virtual Reality and Augmented Reality: Provide real-time 3D scene generation for VR and AR applications to enhance user experience.

- Architectural Design: Quickly generate 3D models of buildings for design and display purposes.

- Film and Television Production: Quickly generate complex 3D scenes for visual effects in films and TV series.

