RF-DETR – A real-time object detection model launched by Roboflow

What is RF-DETR?

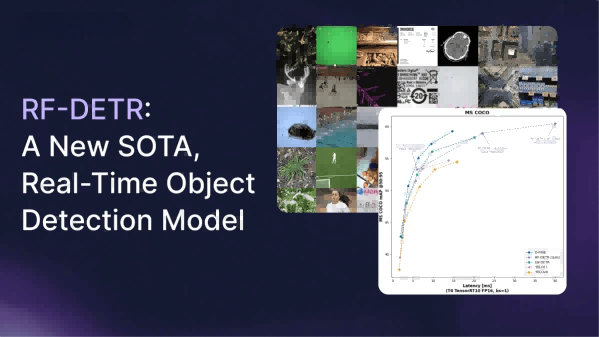

RF-DETR is a real-time object detection model introduced by Roboflow. According to Roboflow, it is the first real-time model to exceed 60 mean Average Precision (mAP) on the COCO dataset, outperforming existing real-time object detection models. RF-DETR combines the LW-DETR architecture with a pre-trained DINOv2 backbone, which gives it strong domain adaptability. It supports multi-resolution training, so users can trade accuracy against latency to suit their needs. RF-DETR also provides pre-trained checkpoints, making it easy to fine-tune on custom datasets through transfer learning.
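
For readers unfamiliar with the metric, the sketch below shows how Average Precision is built from box overlap (Intersection over Union, IoU). It is a simplified, single-class illustration: the COCO mAP figure quoted above additionally averages over all object classes and over IoU thresholds from 0.50 to 0.95, and the official evaluator uses a fixed-point interpolation of the precision-recall curve. The function names `iou` and `average_precision` and the toy boxes are assumptions made for illustration; they are not part of RF-DETR or the COCO tooling.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds, gts, iou_thr=0.5):
    """AP for one class. preds: list of (score, box); gts: list of boxes."""
    if not gts:
        return 0.0
    preds = sorted(preds, key=lambda p: p[0], reverse=True)
    matched = set()
    hits = []  # True = true positive, False = false positive
    for _score, box in preds:
        # Match each prediction to the best still-unmatched ground-truth box.
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched.add(best_j)
        hits.append(best_j >= 0)

    # Precision and recall after each prediction, in score order.
    tp = 0
    precisions, recalls = [], []
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / len(gts))

    # Make precision non-increasing, then integrate it over recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Toy data: two ground-truth boxes, three predictions (one is a false positive).
gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [
    (0.9, (0, 0, 10, 10)),
    (0.8, (50, 50, 60, 60)),
    (0.7, (20, 20, 30, 28.2)),
]
print(round(average_precision(preds, gts, iou_thr=0.5), 3))  # 0.833

# COCO-style averaging over IoU thresholds 0.50, 0.55, ..., 0.95.
thresholds = [t / 100 for t in range(50, 100, 5)]
print(sum(average_precision(preds, gts, t) for t in thresholds) / len(thresholds))
```

Stricter IoU thresholds reject the loosely localized third prediction, which is why the threshold-averaged score is lower than AP at IoU 0.5.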

Main Features of RF-DETR

- High-Precision Real-Time Detection: Achieves over 60 mAP on the COCO dataset while running in real time (25+ FPS), which suits scenarios that need both speed and precision.

- Strong Domain Adaptability: Adapts to a range of domains and datasets, such as aerial imagery, industrial scenes, and natural environments.

- Flexible Resolution Options: Supports multi-resolution training and inference, allowing users to balance precision and latency based on actual needs.

- Convenient Fine-Tuning and Deployment: Provides pre-trained checkpoints, enabling users to fine-tune on custom datasets quickly for specific tasks.
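
To make the 25+ FPS figure above concrete, the following minimal sketch converts a frame rate into a per-frame time budget and checks a latency against it. It assumes frames are processed one at a time, with no batching or pipelining. The helper names and the two latency values are hypothetical and are not measurements of RF-DETR.

```python
def frame_budget_ms(target_fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / target_fps


def meets_realtime(total_latency_ms, target_fps=25):
    """True if the end-to-end latency per frame fits within the frame budget."""
    return total_latency_ms <= frame_budget_ms(target_fps)


print(frame_budget_ms(25))   # 40.0 ms per frame at 25 FPS

# Hypothetical end-to-end latencies, for illustration only.
print(meets_realtime(6.0))   # True: well within the 40 ms budget
print(meets_realtime(55.0))  # False: too slow for 25 FPS
```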

Technical Principles of RF-DETR

- Transformer Architecture: RF-DETR belongs to the DETR (Detection Transformer) family and performs object detection with a Transformer architecture. Compared to traditional CNN-based object detection models (such as YOLO), Transformers can better capture long-range dependencies and global contextual information in images, which improves detection accuracy.

- Pre-trained DINOv2 Backbone: The model incorporates a pre-trained DINOv2 backbone network. DINOv2 is a visual representation learning model that learns rich image features through self-supervised pre-training on large-scale datasets. By building on these pre-trained features, RF-DETR adapts and generalizes better when facing new domains or small datasets.

- Single-Scale Feature Extraction: Unlike Deformable DETR, which uses a multi-scale self-attention mechanism, RF-DETR extracts image feature maps from a single-scale backbone. This simplifies the model structure and reduces computational cost while maintaining high detection performance, which helps the model run in real time.

- Multi-Resolution Training: RF-DETR is trained at multiple resolutions, enabling the model to select an appropriate resolution during inference based on different application scenarios. Higher resolutions improve detection accuracy, while lower resolutions reduce latency. Users can flexibly adjust the resolution according to actual needs without retraining the model, achieving a dynamic balance between accuracy and latency.

- NMS-Free Post-Processing and Total Latency: As a DETR-family model, RF-DETR predicts the final set of boxes directly and does not require Non-Maximum Suppression (NMS). When comparing models, Roboflow reports Total Latency, which includes NMS time for detectors that need it (such as YOLO), so the figures more accurately reflect operational efficiency in real-world applications.
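
The accuracy/latency trade-off behind multi-resolution training can be made concrete by counting the patch tokens a Vision Transformer backbone processes at each input size. The sketch below assumes a patch size of 14 pixels, the standard DINOv2 configuration, and square inputs of 448, 560, and 672 pixels chosen purely for illustration; the resolutions RF-DETR actually supports may differ. The cost ratios are rough scaling estimates rather than measured latencies: linear scaling approximates per-token layers, and quadratic scaling is an upper bound that applies to global self-attention.

```python
PATCH_SIZE = 14  # assumed: standard DINOv2 ViT patch size, in pixels


def num_patch_tokens(height, width, patch_size=PATCH_SIZE):
    """Number of patch tokens a ViT backbone sees for one image."""
    if height % patch_size or width % patch_size:
        raise ValueError("resolution must be a multiple of the patch size")
    return (height // patch_size) * (width // patch_size)


def relative_cost(resolution, baseline, exponent):
    """Cost relative to a baseline resolution if cost ~ tokens ** exponent."""
    tokens = num_patch_tokens(resolution, resolution)
    base_tokens = num_patch_tokens(baseline, baseline)
    return (tokens / base_tokens) ** exponent


BASELINE = 560  # illustrative reference resolution
for res in (448, 560, 672):
    tokens = num_patch_tokens(res, res)
    linear = relative_cost(res, BASELINE, 1)     # per-token layers
    quadratic = relative_cost(res, BASELINE, 2)  # global self-attention
    print(f"{res}px: {tokens} tokens, linear x{linear:.2f}, quadratic x{quadratic:.2f}")
```

Under these assumptions, going from 560 to 672 pixels raises the token count from 1600 to 2304, which is why a higher resolution buys accuracy at a noticeable latency cost.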

Project Links for RF-DETR

- Official blog post: https://blog.roboflow.com/rf-detr/

- GitHub repository: https://github.com/roboflow/rf-detr

- Online demo: https://huggingface.co/spaces/SkalskiP/RF-DETR

Application Scenarios of RF-DETR

- Security Monitoring: Detect personnel, vehicles, etc. in surveillance videos in real time to improve security efficiency.

- Autonomous Driving: Detect road targets and provide input for driving decisions.

- Industrial Inspection: Conduct quality inspection on production lines to improve production efficiency.

- UAV Monitoring: Detect ground targets in real time to support fields such as agriculture and environmental protection.

- Smart Retail: Analyze customer behavior, manage product inventory, and improve operational efficiency.

