ACTalker – An End-to-End Video Diffusion Framework Jointly Developed by HKUST, Tencent, and Tsinghua University

What is ACTalker?

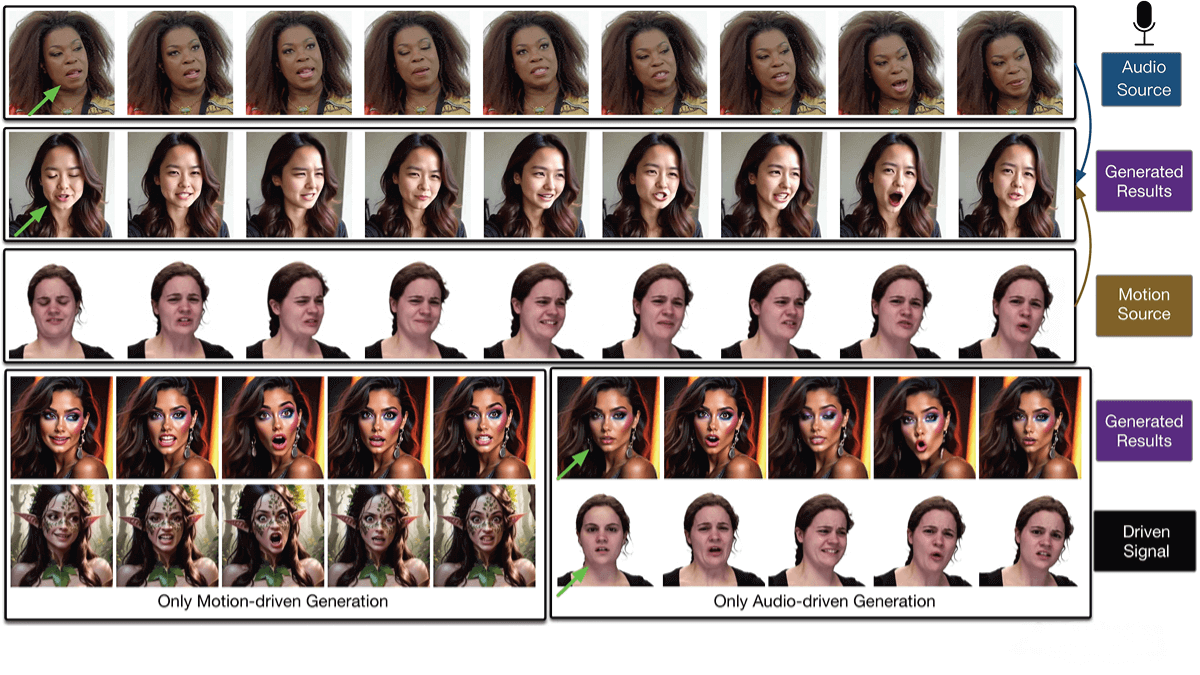

ACTalker is an end-to-end video diffusion framework designed for generating realistic talking-head videos. It supports single or multi-modal control signals, such as audio, facial expressions, etc. Its core architecture incorporates a parallel Mamba structure, which utilizes multiple branches to control different facial regions with distinct driving signals. Based on a gating mechanism and mask dropout strategy, it achieves flexible and natural video generation. On the CelebV-HQ dataset, ACTalker demonstrates excellent Sync-C and Sync-D scores of 5.317 and 7.869, respectively, along with an FVD-Inc score of 232.374, showcasing strong audio synchronization and high video quality.

The main functions of ACTalker

- Multi-signal Control vs. Single-signal Control: ACTalker supports both multi-signal and single-signal control, enabling the generation of speaker head videos driven by various signals such as audio and facial expressions.

- Naturally Coordinated Video Generation: Through the Mamba structure, driving signals can manipulate feature tokens across both temporal and spatial dimensions in each branch, ensuring that the generated videos are naturally coordinated in time and space.

- High-Quality Video Generation: Experimental results demonstrate that ACTalker can generate natural and realistic facial videos. Under multi-signal control, the Mamba layers can seamlessly integrate multiple driving modalities to generate videos without conflicts.

The Technical Principle of ACTalker

- Parallel Mamba Structure: ACTalker adopts a parallel Mamba structure, which consists of multiple branches. Each branch utilizes separate driving signals (such as audio, expressions, etc.) to control specific facial regions. This enables signals of different modalities to act on the video generation process simultaneously without interference, achieving multi-signal control.

- Gating Mechanism: A gating mechanism is applied across all branches. During training, it is randomly activated or deactivated, and during inference, it can be manually adjusted as needed. The gating mechanism provides a flexible control method for video generation, supporting the use of either a single signal or multiple signals for driving under different conditions.

- Mask-Drop Strategy: ACTalker introduces a mask-drop strategy, which allows each driving signal to independently control its corresponding facial region. During the training process, the strategy randomly discards feature tokens unrelated to the control region, enhancing the effectiveness of the driving signals, improving the quality of the generated content, and preventing control conflicts.

- State Space Modeling (SSM): To ensure the natural coordination of controlled videos in both temporal and spatial dimensions, ACTalker employs state space modeling (SSM). The model enables driving signals to manipulate feature tokens across both temporal and spatial dimensions within each branch, achieving natural coordination of facial movements.

- Video Diffusion Model Foundation: ACTalker is built upon a video diffusion model. During the denoising process, a multi-branch control module is introduced. Each Mamba branch processes specific modality signals, and the gating mechanism dynamically adjusts the influence weights of each modality.

The project address of ACTalker

- Project Website: https://harlanhong.github.io/publications/actalker

- GitHub Repository: https://github.com/harlanhong/ACTalker

- Hugging Face Model Hub: https://huggingface.co/papers/2504.02542

- arXiv Technical Paper: https://arxiv.org/pdf/2504.02542

Application scenarios of ACTalker

- Virtual Host: ACTalker can generate natural and smooth talking head videos through various signals such as audio and facial expressions, making virtual hosts more vivid and realistic, better interacting with the audience, and enhancing the viewing experience.

- Remote Meetings: In remote meetings, ACTalker can generate natural talking head videos using audio signals and the facial expression signals of participants. It can solve the problem of lip-sync issues caused by network latency. When the video signal is poor, it can still generate natural facial videos through audio and facial expression signals, enhancing the sense of reality in remote communication.

- Online Education: In the field of online education, teachers can use ACTalker to generate natural talking head videos. By controlling audio and facial expression signals, teaching videos can become more vivid and interesting, capturing students’ attention and improving teaching effectiveness.

- Virtual Reality and Augmented Reality: In virtual reality (VR) and augmented reality (AR) applications, ACTalker can generate talking head videos that match the virtual environment or augmented reality scenarios.

- Entertainment and Gaming: In the fields of entertainment and gaming, ACTalker can generate natural talking head videos for characters, enhancing their expressiveness and sense of immersion.

© Copyright Notice

The copyright of the article belongs to the author. Please do not reprint without permission.

Related Posts

No comments yet...