Skywork-OR1: Kunlun Wanwei’s open-source series of high-performance reasoning models

What is Skywork-OR1?

Skywork-OR1 (Open Reasoner 1) is an open-source series of high-performance reasoning models released by Kunlun Wanwei, aimed at the capability bottlenecks of large language models in logical reasoning and complex task-solving. The series comprises three models: Skywork-OR1-Math-7B, which specializes in mathematical reasoning; Skywork-OR1-7B-Preview, a general-purpose model combining mathematical and coding capabilities; and Skywork-OR1-32B-Preview, the flagship model designed for more complex tasks that demand stronger reasoning.

The series performs strongly across multiple benchmarks. For instance, Skywork-OR1-Math-7B scores 69.8% and 52.3% on the AIME24 and AIME25 mathematics datasets respectively, significantly outperforming mainstream models of comparable scale. In competitive programming, Skywork-OR1-32B-Preview approaches the performance of DeepSeek-R1 (671B parameters) on LiveCodeBench, which makes it notably cost-effective for its size.

Main features of Skywork-OR1

- Logical Reasoning Ability: Handles complex logical relationships and multi-step reasoning tasks.

- Programming Task Support: Supports the generation of high-quality code and is compatible with multiple programming languages.

- Code Optimization and Debugging: Capable of optimizing and debugging code to improve its readability and execution efficiency.

- Multi-domain Task Adaptability: Equipped with general reasoning abilities, supporting the handling of complex tasks in other domains.

- Multi-turn Conversation and Interaction: Supports multi-turn conversations, progressively solving problems based on contextual information to provide a more coherent reasoning process.

Technical principles of Skywork-OR1

- High-Quality Dataset: For mathematics, high-difficulty subsets (AIME, Olympiads, and others) are selected from datasets such as NuminaMath-1.5 (approximately 896,000 problems), yielding approximately 110,000 mathematical problems. For coding, the data comes primarily from LeetCode and TACO; after rigorous screening and deduplication, only problems with complete unit tests that pass validation are kept, yielding 13,700 high-quality coding problems.

- Data Preprocessing and Filtering: Each problem is sampled multiple times to validate its answer, and problems whose responses are all correct or all wrong are removed so that invalid data does not affect training. Human review is combined with LLM-based automatic grading to remove problems that are semantically unclear, incomplete, improperly formatted, or contain irrelevant content.

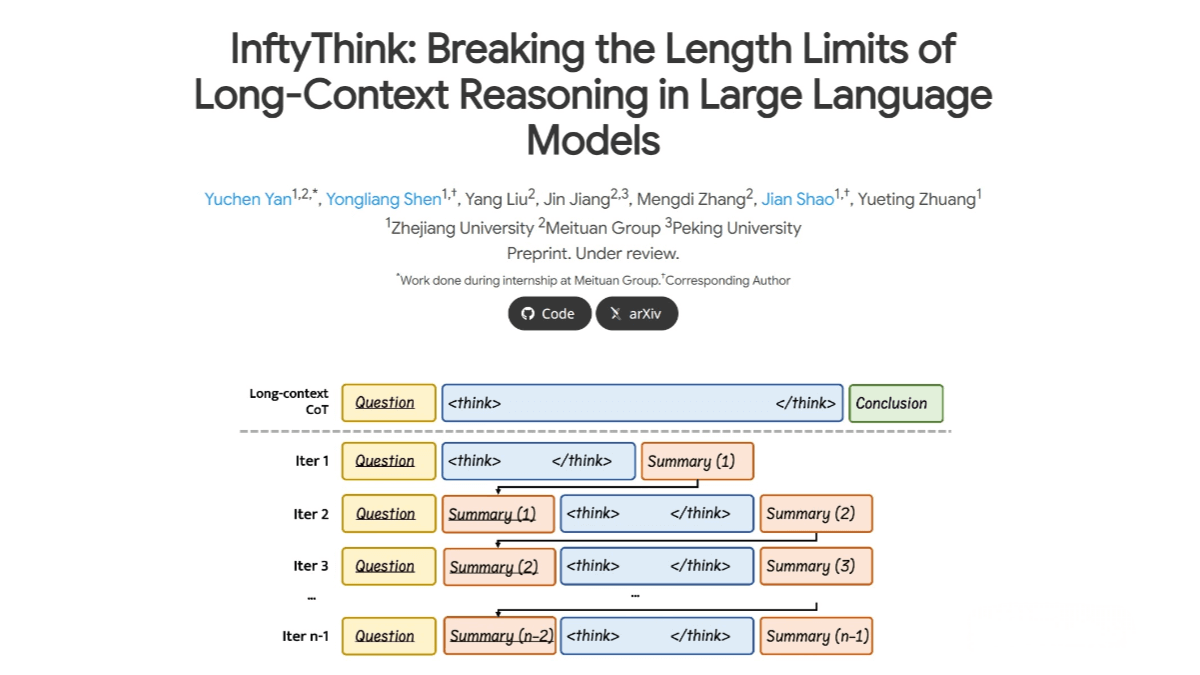
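The sampling-based filter described above (dropping problems whose sampled responses are all correct or all wrong) can be illustrated with a minimal sketch. The function names and the toy `samples` dictionary are hypothetical and are not taken from the Skywork-OR1 codebase; the sketch only assumes that each problem has a list of boolean correctness results from repeated sampling.

```python
from typing import Dict, List


def keep_problem(outcomes: List[bool]) -> bool:
    """Keep a problem only if its sampled answers are mixed.

    A problem answered correctly every time or never is dropped.
    """
    n_correct = sum(outcomes)
    return 0 < n_correct < len(outcomes)


def filter_problems(samples: Dict[str, List[bool]]) -> List[str]:
    """Return the ids of problems that survive the filter."""
    return [pid for pid, outcomes in samples.items() if keep_problem(outcomes)]


# Hypothetical correctness results from sampling each problem four times.
samples = {
    "p1": [True, True, True, True],      # all correct -> removed
    "p2": [False, False, False, False],  # all wrong   -> removed
    "p3": [True, False, True, False],    # mixed       -> kept
}
print(filter_problems(samples))  # ['p3']
```

The same rule can be applied once before training (offline) or repeatedly as the model improves (online), which is how the training strategy below describes its use.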

- Training Strategy: The model is trained with GRPO in a multi-stage process that gradually increases the context window length to strengthen long-chain reasoning. Offline filtering before training and online filtering during training dynamically remove invalid samples, so the training data stays valid and challenging. During reinforcement learning sampling, a high sampling temperature (τ=1.0) is used together with an adaptive entropy control mechanism to encourage exploration and prevent premature convergence to local optima.

- Loss Function Optimization: The KL loss term is removed during training to allow the model to fully explore and optimize its reasoning capabilities. The policy loss is averaged across all tokens within each training batch to improve the consistency and stability of the optimization process.

- Multi-Stage Training: The context window length is expanded stage by stage, so the model first learns to complete tasks efficiently within a limited token budget and then progressively masters complex long-chain reasoning. In the early stages, specific strategies are applied to handle truncated samples so that performance improves quickly once training moves to the next stage.
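The loss design described above can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not the project's implementation: it uses the standard GRPO group-normalized advantage and a PPO-style clipped surrogate, omits any KL term, and averages the loss over all tokens in the batch. The function names, the clip value of 0.2, and the toy inputs are hypothetical.

```python
import math
from typing import List


def group_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Group-relative advantage: normalize rewards within one sampled group."""
    mean = sum(rewards) / len(rewards)
    std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / len(rewards))
    return [(r - mean) / (std + eps) for r in rewards]


def clipped_token_loss(ratio: float, adv: float, clip: float = 0.2) -> float:
    """PPO-style clipped surrogate for one token (no KL penalty added)."""
    clipped = max(1.0 - clip, min(1.0 + clip, ratio))
    return -min(ratio * adv, clipped * adv)


def token_level_loss(ratios: List[List[float]], advs: List[float]) -> float:
    """Average over every token in the batch, so long responses weigh more."""
    losses = [clipped_token_loss(r, a) for seq, a in zip(ratios, advs) for r in seq]
    return sum(losses) / len(losses)


def sample_level_loss(ratios: List[List[float]], advs: List[float]) -> float:
    """For comparison: average per response first, then across responses."""
    per_seq = [sum(clipped_token_loss(r, a) for r in seq) / len(seq)
               for seq, a in zip(ratios, advs)]
    return sum(per_seq) / len(per_seq)


# Hypothetical group of four responses with binary rewards and
# lengths 2, 4, 1 and 1 tokens; probability ratios are all 1.0.
advs = group_advantages([1.0, 0.0, 0.0, 1.0])
ratios = [[1.0, 1.0], [1.0] * 4, [1.0], [1.0]]
print(token_level_loss(ratios, advs), sample_level_loss(ratios, advs))
```

In this toy batch the two aggregations disagree because the four-token incorrect response contributes four terms to the token-level average but only one to the sample-level average. Note also that a group whose rewards are all identical yields zero advantages, which is consistent with filtering out all-correct and all-wrong problems.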

Performance of Skywork-OR1

- Mathematical Reasoning Task:

◦ The general-purpose models, Skywork-OR1-7B-Preview and Skywork-OR1-32B-Preview, achieve state-of-the-art performance among models of the same scale on the AIME24 and AIME25 datasets, demonstrating strong mathematical reasoning capabilities.

◦ The specialized model, Skywork-OR1-Math-7B, scores 69.8% and 52.3% on AIME24 and AIME25 respectively, significantly outperforming current mainstream 7B-level models and highlighting its specialized strength in advanced mathematical reasoning.

◦ Skywork-OR1-32B-Preview surpasses QwQ-32B on all benchmarks and achieves performance comparable to R1 on AIME25.

- Programming Competition Task:

◦ The general-purpose models, Skywork-OR1-7B-Preview and Skywork-OR1-32B-Preview, achieve the best performance among models of the same parameter scale on the LiveCodeBench dataset.

◦ Skywork-OR1-32B-Preview demonstrates code generation and problem-solving capabilities close to those of DeepSeek-R1 (671B parameters), delivering strong cost-effectiveness at a much smaller model size and reflecting the strength of its training strategy.

- Performance of Skywork-OR1-Math-7B:

◦ The training accuracy curve on AIME24 shows steady improvement. The model achieves 69.8% and 52.3% on AIME24 and AIME25 respectively, surpassing OpenAI-o3-mini (low) and reaching SOTA performance for its size.

◦ On LiveCodeBench, performance improves from 37.6% to 43.6%, significantly outperforming the baseline model and showing that the training method generalizes well across domains.

Project links for Skywork-OR1

- Project official website: https://capricious-hydrogen-41c.notion.site/Skywork-Open-Reasoner

- GitHub repository: https://github.com/SkyworkAI/Skywork-OR1

- Hugging Face model hub: https://huggingface.co/collections/Skywork/skywork-or1

Application scenarios of Skywork-OR1

- Mathematics Education: Assist students in solving problems by providing ideas and step-by-step solutions, and support teachers in lesson preparation.

- Scientific Research Assistance: Aid researchers in exploring complex models, verifying hypotheses, and deriving formulas.

- Programming Development: Generate code frameworks, optimize code, assist in debugging, and improve development efficiency.

- Data Analysis: Support decision-making in finance, business, and other fields, predict trends, and assess risks.

- AI Research: Serve as a research platform to advance the improvement of reasoning model architectures and algorithms.
