InstantCharacter – Tencent Hunyuan’s Open-Source Plugin for Customized Image Generation

What is InstantCharacter?

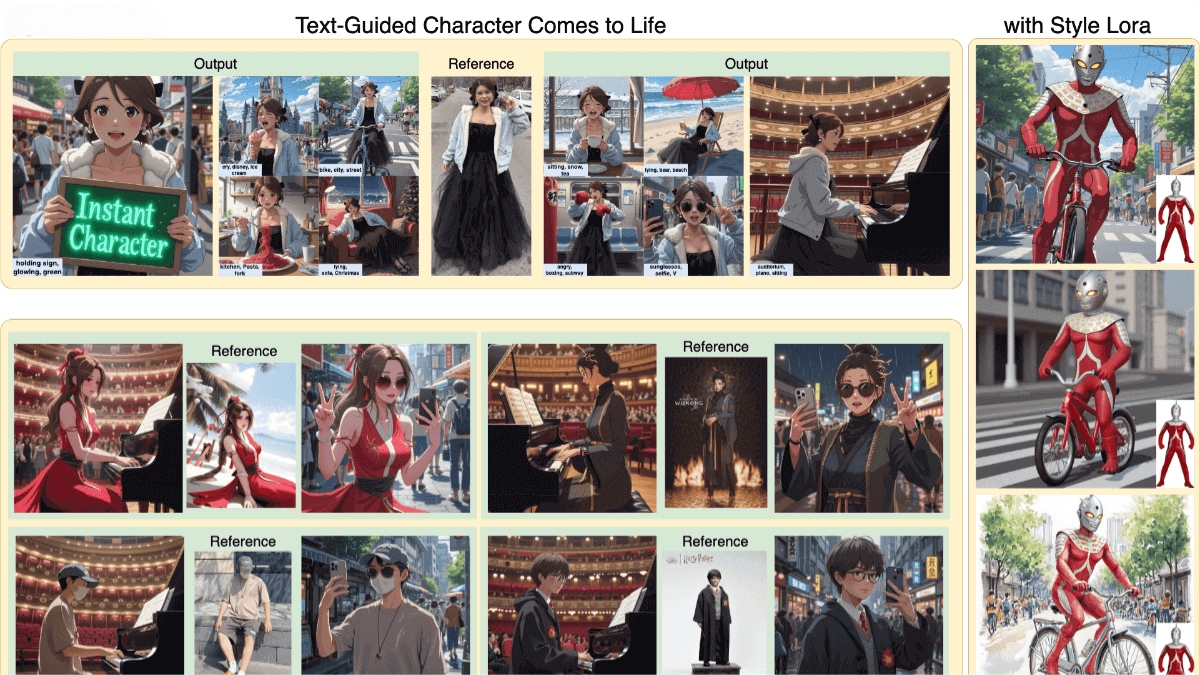

InstantCharacter is a customized image generation plugin open-sourced by Tencent Hunyuan. Built on the Diffusion Transformer (DiT) framework, it adds a scalable adapter (a stack of Transformer encoders) and is trained on a large-scale character dataset with tens of millions of samples, enabling high-fidelity, text-controllable, character-consistent image generation. Given a single character image and a short text description, InstantCharacter can place that character in a variety of poses and scenes. It has broad potential in fields such as comics and film production, and it is positioned as a new benchmark for character-driven image generation.

Main Features of InstantCharacter

- Character Consistency: Maintains the character’s appearance, style, and identity across different scenes and poses.
- High-Fidelity Image Generation: Generates high-quality, high-resolution images with rich detail and realism.
- Flexible Text Control: Lets users control the character’s actions, scenes, and style with simple text descriptions.
- Open-Domain Character Customization: Supports a wide variety of character appearances, poses, and styles.
- Fast Generation: Requires no per-character fine-tuning, so images matching the desired description can be generated quickly.

Technical Principles of InstantCharacter

- Diffusion Transformer (DiT) Architecture: Uses a modern Diffusion Transformer as the base model. Compared with traditional U-Net architectures, DiT offers stronger generation capability and flexibility, and its Transformer structure handles complex image features and long-range dependencies more effectively.

- Scalable Adapter: A Transformer-based adapter module interacts with the DiT latent space to interpret character features. The adapter consists of multiple stacked Transformer encoders that progressively refine character traits so that they integrate cleanly with the base model. Pre-trained visual encoders such as SigLIP and DINOv2 extract detailed character features, reducing the loss of fine-grained detail.

- Large-Scale Character Dataset: The team built a character dataset with tens of millions of samples, split into paired (multi-view images of the same character) and unpaired (text-image combinations) subsets. The paired data optimizes character consistency, while the unpaired data improves text controllability.

- Three-Phase Training Strategy:
  - Phase 1: Pretraining on unpaired low-resolution data to maintain character consistency.
  - Phase 2: Training on paired low-resolution data to enhance text controllability.
  - Phase 3: Joint training on high-resolution data to improve image fidelity.
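To make the adapter's data flow more concrete, here is a minimal NumPy sketch. It is a hypothetical illustration, not InstantCharacter's released code: the feature width `D`, the token counts, the layer count, single-head attention without layer norms or feed-forward layers, random weights in place of trained ones, concatenating the two encoders' outputs along the token axis, and residual cross-attention as the way character features reach the DiT latents are all simplifying assumptions. Random arrays stand in for SigLIP and DINOv2 features of the reference image.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 64  # shared feature width (assumption; real encoders use larger widths)


def softmax(x, axis=-1):
    # Subtract the row max for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def random_projections():
    # Random (D, D) matrices stand in for trained query/key/value weights.
    return [rng.standard_normal((D, D)) / np.sqrt(D) for _ in range(3)]


def attention(queries, context, wq, wk, wv):
    """Single-head scaled dot-product attention: `queries` attend to `context`."""
    q, k, v = queries @ wq, context @ wk, context @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores) @ v


def adapter(char_tokens, num_layers=4):
    """Hypothetical stack of self-attention encoder blocks refining character tokens."""
    x = char_tokens
    for _ in range(num_layers):
        x = x + attention(x, x, *random_projections())  # residual self-attention
    return x


def inject(dit_latents, char_features):
    """Hypothetical injection: DiT latent tokens cross-attend to character features."""
    return dit_latents + attention(dit_latents, char_features, *random_projections())


# Placeholder features for one reference image (assumed 16 tokens per encoder).
siglip_feats = rng.standard_normal((16, D))
dinov2_feats = rng.standard_normal((16, D))
char_tokens = np.concatenate([siglip_feats, dinov2_feats], axis=0)  # (32, D)

dit_latents = rng.standard_normal((256, D))  # e.g. a 16x16 grid of latent patches
refined = adapter(char_tokens)
conditioned = inject(dit_latents, refined)
print(refined.shape, conditioned.shape)  # (32, 64) (256, 64)
```

Stacking encoder blocks lets the character tokens exchange information before they reach the DiT, which is the intuition behind the progressive refinement described above. In a real system each block would have its own trained weights, normalization, and feed-forward layers.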
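The three-phase curriculum can be expressed as a simple schedule that maps a global training step to its phase. This is a hypothetical sketch: the `Phase` fields, the step counts, and the `phase_for_step` helper are illustrative placeholders, not values or code from the InstantCharacter paper. Phase 3 is assumed to sample both subsets, since the description only says "joint training".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    name: str
    subset: str      # which part of the character dataset is sampled
    resolution: str  # "low" or "high"
    goal: str
    num_steps: int   # hypothetical placeholder, not a value from the paper


# Phases as described in the text; step counts are illustrative only.
SCHEDULE = (
    Phase("phase1", "unpaired", "low", "character consistency", 1000),
    Phase("phase2", "paired", "low", "text controllability", 1000),
    # Assumption: "joint" training is read here as sampling both subsets.
    Phase("phase3", "paired+unpaired", "high", "image fidelity", 500),
)


def phase_for_step(step, schedule=SCHEDULE):
    """Return the phase that a global training step falls into."""
    if step < 0:
        raise ValueError("step must be non-negative")
    boundary = 0
    for phase in schedule:
        boundary += phase.num_steps
        if step < boundary:
            return phase
    raise ValueError(f"step {step} is past the end of the {boundary}-step schedule")


for step in (0, 1000, 2499):
    p = phase_for_step(step)
    print(step, p.name, p.subset, p.resolution)
# 0 phase1 unpaired low
# 1000 phase2 paired low
# 2499 phase3 paired+unpaired high
```

Keeping the phases in an ordered tuple makes the curriculum easy to inspect and reorder; a training loop could call a helper like `phase_for_step` to choose the data subset and resolution for each batch.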


Project Information

- Official Website: InstantCharacter Official Site
- GitHub Repository: InstantCharacter GitHub
- arXiv Technical Paper: InstantCharacter Paper on arXiv
- Online Demo: InstantCharacter Demo on Hugging Face

Application Scenarios

- Comic and Manga Creation: Quickly generate characters in different poses and expressions while keeping them consistent across scenes, reducing manual drawing work.
- Film and Animation Production: Generate character concept art and animation scenes, iterating rapidly on character designs to fit different storylines.
- Game Design: Generate game characters in multiple poses, scenes, and styles, quickly producing images that match the game’s aesthetic.
- Advertising and Marketing: Quickly generate character images that match the theme of the ad copy, making ads more creative and appealing.
- Social Media and Content Creation: Generate personalized character images from text descriptions, adding fun and interactivity to content.
