WanXiang Head-Tail Frame Model – An Open-Source Head-Tail Frame Video Generation Model by Alibaba Tongyi

Wan2.1-FLF2V-14B: The WanXiang Head-Tail Frame Model

The WanXiang Head-Tail Frame Model (Wan2.1-FLF2V-14B) is an open-source, 14B-parameter model for first-and-last-frame ("head-tail frame") video generation. Given a first frame and a last frame supplied by the user, it generates a smooth video transition between them and supports a variety of styles and special-effect transformations. The model is built on the DiT architecture, combined with an efficient video compression VAE and a cross-attention mechanism, which keeps the generated video spatially and temporally consistent. It can be tried for free on the official Tongyi WanXiang website.

Main Features of WanXiang Head-Tail Frame Model

- Head-Tail Frame Video Generation: Generates natural and smooth videos with a duration of 5 seconds and a resolution of 720p, based on user-provided first and last frame images.
- Multiple Styles Supported: Generates videos in various styles, such as realistic, cartoon, comic, and fantasy.
- Detail Replication and Realistic Motion: Accurately replicates the details of the input images, producing lively and natural motion transitions.
- Instruction Compliance: Based on user prompts, the video content can be controlled, including camera movements, subject actions, and special effect changes.

Technical Principles of WanXiang Head-Tail Frame Model

- DiT Architecture: The core architecture is a DiT (Diffusion Transformer) designed for video generation. A full attention mechanism captures long-range spatio-temporal dependencies in the video, keeping the output consistent in both time and space.
- Video Compression VAE Model: An efficient video compression VAE (variational autoencoder) significantly reduces computational cost while maintaining high video quality. This makes high-definition video generation more economical and efficient, and supports large-scale video generation tasks.
- Conditional Control Branches: The user-provided first and last frames serve as control conditions, and an additional control branch enables smooth and accurate transitions between them. The first and last frames, together with zero-padded intermediate frames, form the control video sequence. This sequence is combined with noise and a mask to form the input to the diffusion transformer (DiT).
- Cross-Attention Mechanism: Semantic features of the first and last frames are extracted with CLIP and injected into the DiT generation process through cross-attention. Frame stability is controlled so that the generated video stays semantically and visually consistent with the input first and last frames.

- Training and Inference: Training combines data parallelism (DP) with fully sharded data parallelism (FSDP) and supports training on 720p, 5-second video clips. Model performance is improved progressively in three stages:
  - Stage 1: Hybrid training to learn the mask mechanism.
  - Stage 2: Specialized training to optimize head-tail frame generation capabilities.
  - Stage 3: High-precision training to improve detail replication and motion smoothness.
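The control video sequence described under Conditional Control Branches can be illustrated with a small sketch. It is a toy, standard-library-only illustration rather than the model's actual code: frames are plain nested lists instead of tensors, the function name `build_control_sequence` is hypothetical, and the frame count assumes 16 frames per second plus one initial frame for a 5-second clip (an assumption; the article only states the duration). How the sequence is then combined with noise inside the DiT is not shown.

```python
from typing import List, Tuple

Frame = List[List[float]]  # toy grayscale frame: H rows of W pixel values


def build_control_sequence(
    first: Frame, last: Frame, num_frames: int
) -> Tuple[List[Frame], List[int]]:
    """Return (control_frames, mask) for a clip of num_frames frames.

    control_frames[0] is the first frame, control_frames[-1] is the last
    frame, and every frame in between is filled with zeros. mask[t] is 1
    where the frame is supplied by the user and 0 where the model has to
    generate it.
    """
    if num_frames < 2:
        raise ValueError("need at least two frames (first and last)")
    height, width = len(first), len(first[0])
    same_shape = len(last) == height and all(
        len(row) == width for row in first + last
    )
    if not same_shape:
        raise ValueError("first and last frame must have the same size")

    def zero_frame() -> Frame:
        # A fresh zero-padded placeholder for one intermediate frame.
        return [[0.0] * width for _ in range(height)]

    middle = [zero_frame() for _ in range(num_frames - 2)]
    control = [first] + middle + [last]
    mask = [1] + [0] * (num_frames - 2) + [1]
    return control, mask


# Assumption (not stated in the article): 16 fps plus one initial frame.
NUM_FRAMES = 5 * 16 + 1

first_frame = [[0.2, 0.4], [0.6, 0.8]]
last_frame = [[0.9, 0.7], [0.5, 0.3]]
control, mask = build_control_sequence(first_frame, last_frame, NUM_FRAMES)
print(len(control), sum(mask))  # 81 frames, 2 of them user-supplied
```

The mask is what lets the model distinguish frames it must preserve from frames it must synthesize, which is presumably why Stage 1 of training is devoted to learning the mask mechanism.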

Project Links for WanXiang Head-Tail Frame Model

- GitHub Repository: https://github.com/Wan-Video/Wan2.1
- HuggingFace Model Hub: https://huggingface.co/Wan-AI/Wan2.1-FLF2V-14B-720P
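As a hedged usage sketch, the snippet below assembles a command line for the `generate.py` script in the GitHub repository. The task name `flf2v-14B` and the flags `--size`, `--ckpt_dir`, `--first_frame`, `--last_frame`, and `--prompt` are recalled from the repository's README and are not stated in this article, so treat them as assumptions and check them against the current README. The helper name `build_flf2v_command`, the file paths, and the prompt are hypothetical.

```python
import shlex
from typing import List


def build_flf2v_command(
    ckpt_dir: str,
    first_frame: str,
    last_frame: str,
    prompt: str,
    size: str = "1280*720",
) -> List[str]:
    """Assemble an argv list for the repository's generate.py script.

    Flag names are assumptions based on the project README; verify them
    before running.
    """
    return [
        "python", "generate.py",
        "--task", "flf2v-14B",
        "--size", size,
        "--ckpt_dir", ckpt_dir,
        "--first_frame", first_frame,
        "--last_frame", last_frame,
        "--prompt", prompt,
    ]


cmd = build_flf2v_command(
    ckpt_dir="./Wan2.1-FLF2V-14B-720P",  # hypothetical local weights folder
    first_frame="first.png",             # hypothetical input image
    last_frame="last.png",               # hypothetical input image
    prompt="A day-to-night transition over a city skyline",
)
print(shlex.join(cmd))  # shell-quoted form, handy for copy and paste
```

To actually launch generation, the list could be passed to `subprocess.run(cmd, check=True)` from inside a checkout of the repository, after the model weights have been downloaded from the Hugging Face page.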

Application Scenarios for WanXiang Head-Tail Frame Model

-

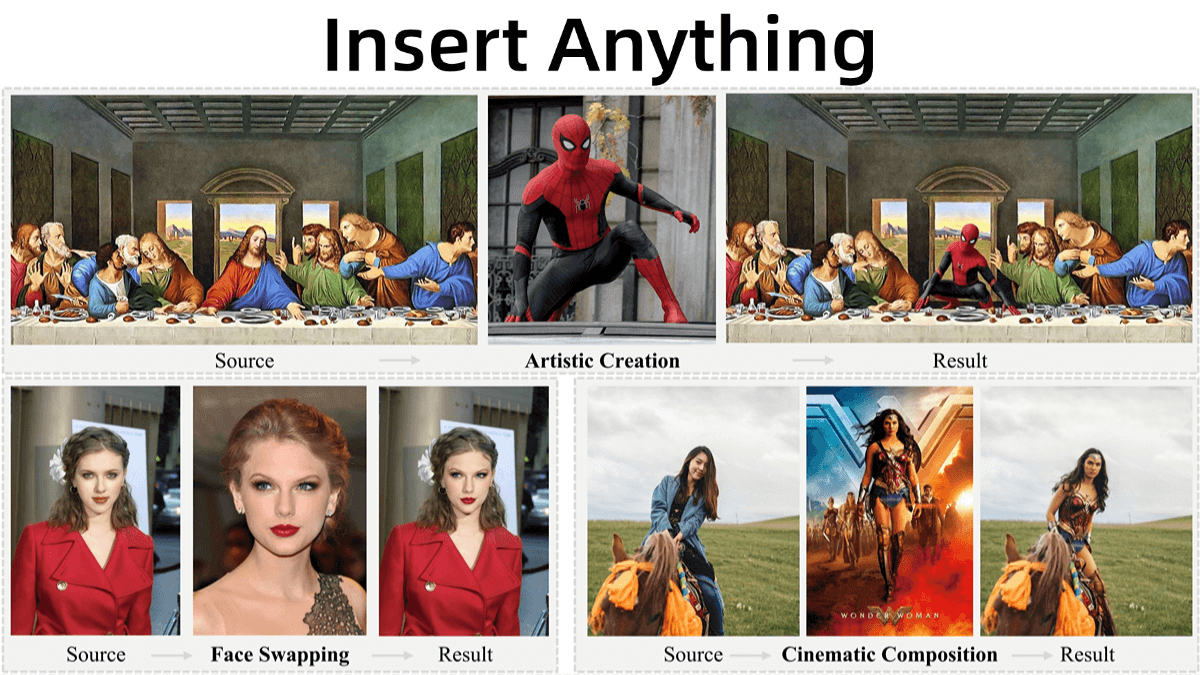

Creative Video Production: Quickly generate creative videos with scene transitions or special effect changes.

-

Advertising & Marketing: Create attractive video advertisements to enhance visual effects.

-

Film Special Effects: Generate effect shots like seasonal changes, day-night transitions, etc.

-

Education & Demonstration: Produce vivid animation effects to aid teaching or presentations.

-

Social Media: Generate personalized videos to engage fans and increase interaction.
