Insert Anything – An image insertion framework jointly launched by Zhejiang University, Harvard University and Nanyang Technological University

What is Insert Anything

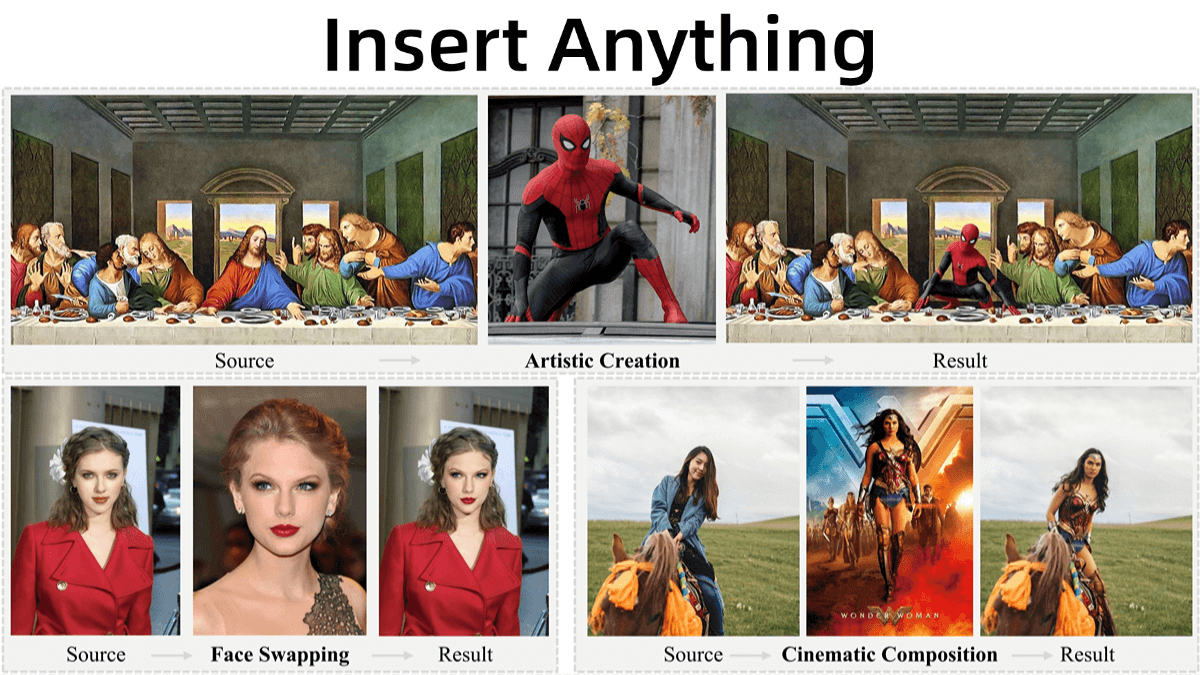

Insert Anything is a context-aware image insertion framework developed jointly by researchers from Zhejiang University, Harvard University, and Nanyang Technological University. It enables seamless insertion of objects from reference images into target scenes, supporting a wide range of practical use cases such as artistic creation, realistic face replacement, movie scene composition, virtual try-on, accessory customization, and digital prop substitution. Trained on the AnyInsertion dataset containing 120K prompt-image pairs, Insert Anything can flexibly adapt to various insertion tasks, offering powerful support for creative content generation and virtual fitting applications.

Key Features of Insert Anything

-

Multi-Scenario Support: Capable of handling diverse image insertion tasks including person insertion, object insertion, and clothing insertion.

-

Flexible User Control: Offers both mask-guided and text-guided control modes. Users can manually draw masks or input text prompts to specify the insertion region and content.

-

High-Quality Output: Generates high-resolution, high-fidelity images while maintaining detail and style consistency of the inserted elements.

Technical Principles of Insert Anything

-

AnyInsertion Dataset: The framework is trained on the large-scale AnyInsertion dataset with 120K prompt-image pairs, covering tasks like person, object, and clothing insertion.

-

Diffusion Transformer (DiT): Utilizes a DiT-based multimodal attention mechanism to process both textual and visual inputs. DiT jointly models relationships among text, masks, and image patches, enabling flexible editing control.

-

Contextual Editing Mechanism: Uses a polyptych format (e.g., diptych for mask guidance and triptych for text guidance) to combine reference and target images, allowing the model to understand contextual information and produce natural insertions.

-

Semantic Guidance: Integrates image encoders (e.g., CLIP) and text encoders to extract semantic information, ensuring that inserted elements align with the style and semantics of the target scene.

-

Adaptive Cropping Strategy: Dynamically adjusts the crop region for small targets, ensuring sufficient contextual information and attention to detail are preserved for high-quality results.

Project Resources for Insert Anything

-

Project Page: https://song-wensong.github.io/insert-anything/

-

GitHub Repository: https://github.com/song-wensong/insert-anything

-

arXiv Paper: https://arxiv.org/pdf/2504.15009

Application Scenarios for Insert Anything

-

Artistic Creation: Rapidly combine different elements to spark creative ideas.

-

Virtual Try-On: Enable consumers to preview clothing, enhancing online shopping experiences.

-

Film and Visual Effects: Seamlessly insert virtual elements into scenes, reducing production costs.

-

Advertising Design: Quickly generate diverse creative advertisements to boost appeal.

-

Cultural Heritage Restoration: Virtually restore artifacts or architectural details for research and exhibition purposes.

Related Posts