LiveCC – A real-time video commentary model open-sourced jointly by ByteDance and the National University of Singapore

What is LiveCC?

LiveCC is a real-time video commentary model jointly developed by the Show Lab team at the National University of Singapore and ByteDance. It is trained at scale on automatic speech recognition (ASR) transcripts of video speech. Like a professional commentator, LiveCC quickly analyzes video content and generates natural, fluent commentary in sync with it.

LiveCC introduces two key datasets:

- Live-CC-5M, for pretraining
- Live-WhisperX-526K, for high-quality supervised fine-tuning

The team also designed the LiveSports-3K benchmark to evaluate real-time video commentary capabilities.

Experiments show that LiveCC performs strongly on both real-time video commentary and video question answering, combining low latency with high-quality generation.

Key Features of LiveCC

Real-time Video Commentary

Generates continuous, human-like commentary in real time based on video content. Applicable to sports events, news broadcasting, educational videos, and more.

Video Question Answering

Answers questions about the video content, helping users better understand the events and details it contains.

Low-latency Processing

Processes video streams with very low latency (under 0.5 seconds per frame), enabling real-time applications.

Multi-scenario Adaptation

Suitable for various types of video content, including sports, news, education, and entertainment.

Technical Principles of LiveCC

Streaming Training Method

ASR words are densely interleaved with video frames according to their timestamps. This trains the model to learn time-aligned vision-language relationships, simulating how humans perceive video content in real time.
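A minimal Python sketch of timestamp-based interleaving follows. It is an illustration, not LiveCC's actual data pipeline: the `interleave` function, the `<frame@t>` placeholder strings, and the rule that each word is attached to the latest frame at or before the word's end time are all assumptions made for this example.

```python
import bisect
from typing import List, Tuple


def interleave(frame_times: List[float], words: List[Tuple[float, str]]) -> List[str]:
    """Merge sampled frames and ASR words into one time-ordered sequence.

    frame_times: timestamps (seconds) of the sampled video frames.
    words: (end_time_seconds, text) pairs from the ASR transcript.
    Assumed rule (hypothetical): each word is placed after the latest frame
    whose timestamp is <= the word's end time; words that end before the
    first frame attach to the first frame.
    """
    if not frame_times:
        raise ValueError("at least one frame is required")
    frame_times = sorted(frame_times)
    buckets: List[List[str]] = [[] for _ in frame_times]
    # Stable sort by end time keeps the spoken order for words with equal end times.
    for end, text in sorted(words, key=lambda w: w[0]):
        idx = bisect.bisect_right(frame_times, end) - 1
        buckets[max(idx, 0)].append(text)
    sequence: List[str] = []
    for t, bucket in zip(frame_times, buckets):
        sequence.append(f"<frame@{t:.1f}>")  # placeholder for this frame's visual tokens
        sequence.extend(bucket)              # ASR words assigned to this frame
    return sequence
```

For frames at 0.0, 0.5, and 1.0 seconds, the words "the" and "ball" (ending at 0.3 s and 0.4 s) land after the first frame, "is" (0.9 s) after the second, and "passed" (1.2 s) after the third. Under this rule, every frame sampled at or before a word's end time precedes that word in the sequence. A real pipeline would insert visual tokens from the vision encoder rather than placeholder strings.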

Large-scale Datasets

Two datasets were built from the ASR subtitles of YouTube videos:

- Live-CC-5M, for pretraining
- Live-WhisperX-526K, for high-quality supervised fine-tuning

These datasets provide rich training resources for the model.

Model Architecture

Based on the Qwen2-VL architecture, LiveCC combines a vision encoder with a language model. It processes video frames and text inputs, predicting text tokens autoregressively while using video tokens as non-predictive context.

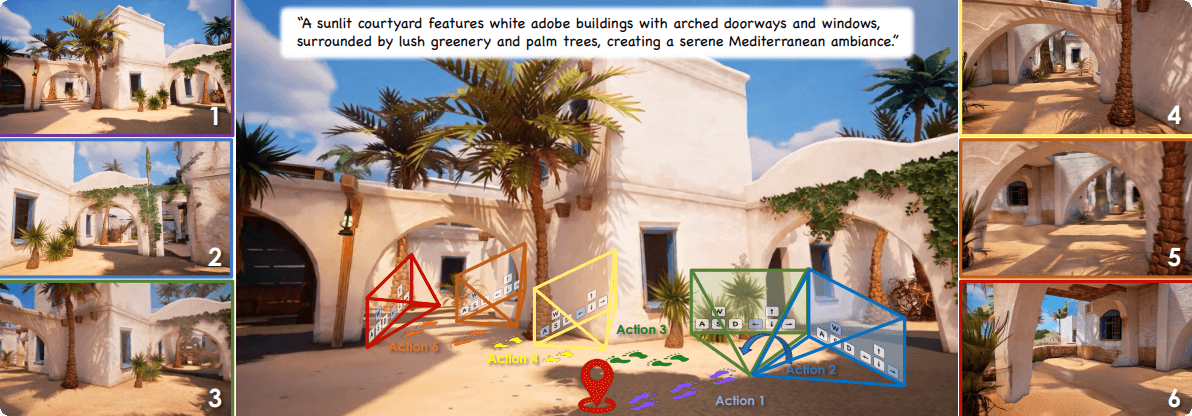
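The training objective of predicting text tokens while treating video tokens as non-predictive context can be sketched in plain Python. Video tokens stay in the input sequence as context but receive no label, so only text tokens contribute to the next-token loss. The token ids, the `build_labels` and `next_token_nll` helpers, and the hand-written log-probabilities are hypothetical; in the real model these scores would come from the Qwen2-VL-based network. The `-100` sentinel matches the default `ignore_index` of PyTorch's `CrossEntropyLoss`.

```python
import math
from typing import List, Sequence

# PyTorch's CrossEntropyLoss skips targets equal to -100 by default;
# the same sentinel is reused here.
IGNORE_INDEX = -100


def build_labels(input_ids: Sequence[int], is_video: Sequence[bool]) -> List[int]:
    """Text tokens keep their id as a label; video tokens get IGNORE_INDEX."""
    if len(input_ids) != len(is_video):
        raise ValueError("input_ids and is_video must have the same length")
    return [IGNORE_INDEX if video else tok for tok, video in zip(input_ids, is_video)]


def next_token_nll(logprobs: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean negative log-likelihood over predictable (text) positions.

    logprobs[i][k] is the model's log-probability that token k comes at
    position i + 1, having seen positions 0..i (video tokens included).
    """
    total, count = 0.0, 0
    for i in range(len(labels) - 1):
        target = labels[i + 1]
        if target == IGNORE_INDEX:
            continue  # video token: visible as context, never a prediction target
        total -= logprobs[i][target]
        count += 1
    return total / count if count else 0.0
```

For example, `build_labels([7, 8, 1, 2], [True, True, False, False])` yields `[-100, -100, 1, 2]`: the two video tokens still condition the prediction of the text tokens, but the model is never asked to predict them.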

Real-time Inference

During inference, LiveCC processes the input video frame by frame and generates commentary in real time. It caches previous prompts, visual frames, and generated text to speed up language decoding.
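The caching idea can be illustrated with a toy loop. This is a hypothetical sketch, not LiveCC's inference code: the `StreamingCommentator` class and the `generate` callable are invented for illustration, and strings stand in for the attention key/value states a real transformer would cache. The two counters contrast the tokens processed when context is cached with the tokens that re-encoding the full context at every frame would require.

```python
from typing import Callable, List


class StreamingCommentator:
    """Toy frame-by-frame commentary loop with an append-only context cache.

    `generate` is a hypothetical stand-in for the model's decoder: it receives
    the full cached context and returns the next commentary words. In a real
    transformer the cache would hold attention key/value states, so only newly
    appended tokens need a forward pass.
    """

    def __init__(self, prompt: List[str], generate: Callable[[List[str]], List[str]]):
        self.generate = generate
        self.cache: List[str] = []
        self.tokens_processed = 0    # tokens actually run through the model with caching
        self.cost_without_cache = 0  # tokens that re-encoding the full context would cost
        self._append(prompt)

    def _append(self, tokens: List[str]) -> None:
        # With a cache, only the new tokens are processed; earlier ones are reused.
        self.cache.extend(tokens)
        self.tokens_processed += len(tokens)

    def on_frame(self, frame_token: str) -> List[str]:
        """Consume one incoming frame and return the commentary emitted for it."""
        self._append([frame_token])
        self.cost_without_cache += len(self.cache)  # full-context re-encode at this step
        words = self.generate(list(self.cache))
        self._append(words)  # generated text becomes context for later frames
        return words
```

With a two-token prompt and one generated word per frame, three frames cost 8 processed tokens with the cache versus 15 if the whole context were re-encoded at each step. The gap keeps widening as the stream grows, which is why caching matters for long live videos.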

Evaluation Method

LiveCC is evaluated on the LiveSports-3K benchmark, using an LLM-as-a-judge framework to compare the quality of commentary generated by different models.
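A pairwise LLM-as-a-judge comparison can be reduced to a win rate, as in the following sketch. The exact LiveSports-3K protocol (judge model, prompt, baseline, and tie handling) is not described here, so this is an assumed setup: the hypothetical `judge` callable stands in for an LLM call that returns `"first"`, `"second"`, or `"tie"`, each pair is judged in both orders to reduce position bias, and a tie counts as half a win.

```python
from typing import Callable, Iterable, Tuple

# Stand-in for an LLM judge: given two commentaries, says which is better.
Judge = Callable[[str, str], str]  # returns "first", "second", or "tie"


def win_rate(pairs: Iterable[Tuple[str, str]], judge: Judge) -> float:
    """Share of judgments won by the candidate over the baseline.

    pairs: (candidate_commentary, baseline_commentary) for the same clip.
    Each pair is judged twice with the order swapped to reduce position bias;
    a tie counts as half a win (an assumption, not a documented rule).
    """
    score, n = 0.0, 0
    for candidate, baseline in pairs:
        for first, second, candidate_first in ((candidate, baseline, True),
                                               (baseline, candidate, False)):
            verdict = judge(first, second)
            if verdict not in ("first", "second", "tie"):
                raise ValueError(f"unexpected verdict: {verdict!r}")
            if verdict == "tie":
                score += 0.5
            elif (verdict == "first") == candidate_first:
                score += 1.0  # the judge preferred the candidate's commentary
            n += 1
    return score / n if n else 0.0
```

Judging both orders means a judge that always prefers whichever answer comes first scores exactly 0.5, rather than inflating either model's result.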

Project Links for LiveCC

- 🌐 Official Website: https://showlab.github.io/livecc/
- 🧑‍💻 GitHub Repository: https://github.com/showlab/livecc
- 🤗 Hugging Face Model Hub: https://huggingface.co/collections/chenjoya/livecc
- 📄 arXiv Technical Paper: https://arxiv.org/pdf/2504.16030
- 🚀 Online Demo: https://huggingface.co/spaces/chenjoya/LiveCC

Application Scenarios for LiveCC

Sports Events

Deliver real-time commentary and match analysis, enhancing the viewer experience.

News Reporting

Assist in the real-time interpretation of news, adding depth and professionalism to reports.

Education

Generate instructional commentary for educational videos to support skill training and learning.

Entertainment Media

Provide real-time plot explanations for movies and TV shows to boost engagement and interactivity.

Smart Assistants

Offer real-time information based on video content to enhance user interaction.
